/*Emma Shi

eyshi@andrew.cmu.edu

Section B

Final Project

*/

var midC;

var D;

var E;

var F;

var G;

var A;

var B;

var hiC;

var pad = 38;

var space = 75;

var col = 0;

var thickness = 1;

var rD = 7;

var gD = 80;

var bD = 6;

var rE = 243;

var gE = 170;

var bE = 0;

var rF = 83;

var gF = 38;

var bF = 0;

var rA = 255;//RGB channels range from 0 to 255

var gA = 246;

var bA = 227;

var rB = 46;

var gB = 39;

var bB = 118;

var eraser;

function preload(){

A = loadSound('https://courses.ideate.cmu.edu/15-104/f2016/wp-content/uploads/2016/12/A.mp3');

B = loadSound('https://courses.ideate.cmu.edu/15-104/f2016/wp-content/uploads/2016/12/B.mp3');

midC = loadSound('https://courses.ideate.cmu.edu/15-104/f2016/wp-content/uploads/2016/12/middleC.mp3');

hiC = loadSound('https://courses.ideate.cmu.edu/15-104/f2016/wp-content/uploads/2016/12/highC.mp3');

D = loadSound('https://courses.ideate.cmu.edu/15-104/f2016/wp-content/uploads/2016/12/D.mp3');

E = loadSound('https://courses.ideate.cmu.edu/15-104/f2016/wp-content/uploads/2016/12/E.mp3');

F = loadSound('https://courses.ideate.cmu.edu/15-104/f2016/wp-content/uploads/2016/12/F.mp3');

G = loadSound('https://courses.ideate.cmu.edu/15-104/f2016/wp-content/uploads/2016/12/G.mp3');

}

function setup(){

createCanvas(800, 300);

background(0);

}

function draw(){

noStroke();

middleC();

noteD();

noteE();

noteF();

noteG();

noteA();

noteB();

highC();

paint();

createEraser();//draws the Erase button (the function returns nothing, so there is no value to store)

if (mouseIsPressed) {

if ((10 <= mouseX) && (mouseX <= 70) && (8 <= mouseY) && (mouseY <= 70)){

col = "red";//if user presses mouse within the upper left red circle, the stroke is red

midC.play();

} else if ((10 <= mouseX) && (mouseX <= 70) && (80 <= mouseY) && (mouseY <= 142)){

col = color(rE, gE, bE);//if user presses mouse within the mid left yellow circle, the stroke is yellow

E.play();

} else if ((10 <= mouseX) && (mouseX <= 70) && (155 <= mouseY) && (mouseY <= 220)){

col = "white";//if user presses mouse within the mid left white circle, the stroke is white

G.play();

} else if((10 <= mouseX) && (mouseX <= 70) && (230 <= mouseY) && (mouseY <= height-8)){

col = color(rB, gB, bB);//if user presses mouse within the bottom left blue circle, the stroke is blue

B.play();

} else if ((80 <= mouseX) && (mouseX <= 140) && (8 <= mouseY) && (mouseY <= 70)){

col = color(rD, gD, bD);//if user presses mouse within the upper right green circle, the stroke is green

D.play();

} else if ((80 <= mouseX) && (mouseX <= 140) && (80 <= mouseY) && (mouseY <= 142)){

col = color(rF, gF, bF);//if user presses mouse within the mid right brown circle, the stroke is brown

F.play();

} else if ((80 <= mouseX) && (mouseX <= 140) && (155 <= mouseY) && (mouseY <= 220)){

col = color(rA, gA, bA);//if user presses mouse within the mid right beige circle, the stroke is beige

A.play();

} else if ((80 <= mouseX) && (mouseX <= 140) && (230 <= mouseY) && (mouseY <= height-8)){

col = "red";//if user presses mouse within the bottom right red circle, the stroke is red

hiC.play();

} else if ((160 <= mouseX) && (mouseX <= 200) && (50 <= mouseY) && (mouseY <= 70)){

col = 0;//if user presses mouse within the Erase button, the stroke is black like the background

}

push();

stroke(col);

strokeWeight(thickness);

line(pmouseX, pmouseY, mouseX, mouseY);

pop();

}

fill("white");

textStyle(NORMAL);

text('Click on a circle and drag your cursor to draw', 160, 20);

text('Press A to increase brush size', 160, 32);

text('Press B to decrease brush size', 160, 44);

}

function middleC(){

fill("red");

ellipse(40, pad, 60, 60);//upper left red circle

}

function noteD(){

fill(rD, gD, bD);

ellipse(110, pad, 60, 60);//upper right green circle

}

function noteE(){

fill(rE, gE, bE);

ellipse(40, pad + space, 60, 60);//mid left yellow circle

}

function noteF(){

fill(rF, gF, bF);

ellipse(110, pad + space, 60, 60);//mid right brown circle

}

function noteG(){

fill("white");

ellipse(40, pad + 2*space, 60, 60);//mid left white circle

}

function noteA(){

fill(rA, gA, bA);

ellipse(110, pad + 2*space, 60, 60);//mid right off-white circle

}

function noteB(){

fill(rB, gB, bB);

ellipse(40, height-pad, 60, 60);//bottom left blue-violet circle

}

function highC(){

fill("red");

ellipse(110, height-pad, 60, 60);//bottom right red circle

}

function paint(){

line(pmouseX, pmouseY, mouseX, mouseY);//allows user to draw

}

function createEraser() {

noFill();

stroke("white");

rect(160, 50, 40, 20);

noFill();

text('Erase', 165, 65);//creates Erase button

}

function keyTyped(){

if (key == 'a'){

thickness += 0.5;//strokeweight increases by 0.5 if user presses a

} else if (key == 'b'){

thickness = max(0.5, thickness - 0.5);//strokeweight decreases by 0.5 if user presses b, but never drops below 0.5

}

}

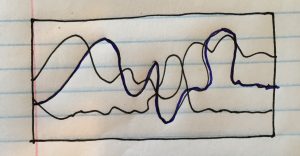

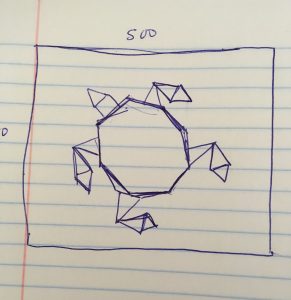

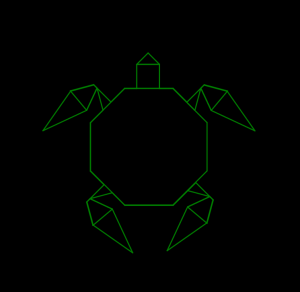

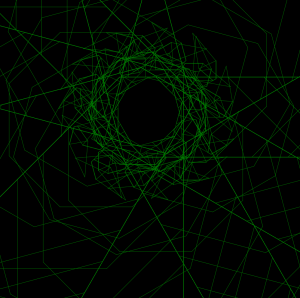

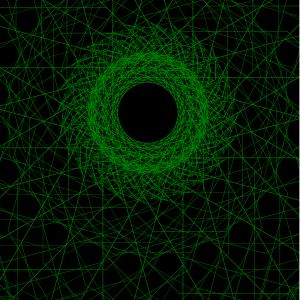

I originally wanted to create a program that would essentially create a “music video” for a song, with the notes in the song represented by the colors that I associate with them (I have perfect pitch and, perhaps as a result, synesthesia between music notes and color). I ended up making a different project, but I still wanted to incorporate audiovisual elements that reflect my own experience of synesthesia and perfect pitch.

In essence, this project is a simple drawing tool with a fixed color palette: one that represents the C-major scale for me. Each color in the palette corresponds to a different note: D is dark green, E is mustard yellow, F is brown, G is white, A is off-white, and B is blue-violet. You may notice that middle C and high C share the same red (I don’t make a distinction in color between the same note in different octaves). Every time the user presses on a color in the palette, the corresponding note is played, and the brush draws in that color.
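
The palette described above can also be written as a lookup table. The following is a minimal sketch in plain JavaScript, not the code used in the project: `PALETTE`, `RADIUS`, and `swatchAt` are hypothetical names, the circle centers and RGB values are copied from the sketch (with the red channel of A written as 255, the top of the 0 to 255 range), and the circular hit test is swapped in for the rectangular bounds checks in `draw()`.

```javascript
// Hypothetical lookup table: one entry per palette circle.
// Centers come from pad = 38, space = 75, and a canvas height of 300.
const PALETTE = [
  { note: "middleC", rgb: [255, 0, 0],     x: 40,  y: 38 },
  { note: "D",       rgb: [7, 80, 6],      x: 110, y: 38 },
  { note: "E",       rgb: [243, 170, 0],   x: 40,  y: 113 },
  { note: "F",       rgb: [83, 38, 0],     x: 110, y: 113 },
  { note: "G",       rgb: [255, 255, 255], x: 40,  y: 188 },
  { note: "A",       rgb: [255, 246, 227], x: 110, y: 188 },
  { note: "B",       rgb: [46, 39, 118],   x: 40,  y: 262 },
  { note: "highC",   rgb: [255, 0, 0],     x: 110, y: 262 },
];

// Each circle is drawn with a diameter of 60, so its radius is 30.
const RADIUS = 30;

// Return the palette entry whose circle contains the point (px, py),
// or null if the point is outside every circle.
function swatchAt(px, py) {
  const hit = PALETTE.find(
    (s) => Math.hypot(px - s.x, py - s.y) <= RADIUS
  );
  return hit || null;
}
```

With a table like this, one lookup could replace the chain of `else if` branches: the returned entry gives both the stroke color and the name of the note to play.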

Although these aren’t the prettiest of colors, they are pretty much the exact shades that I picture when I think of these music notes, and I believe there is some natural logic to them. For example, a C-major chord (C, E, G) translates to red, yellow, and white. These are all warm colors. A G-major chord (G, B, D), however, translates to white, blue-violet, and green. These are all cool colors. Additionally, the notes G, A, and F are all “neutral” colors (white, off-white, brown), because they can be matched up with more chords, and thus more color combinations. I didn’t take music theory back in the day, so I am probably using the wrong terms, but hopefully you get the idea.
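
To make the chord example concrete, here is a small sketch that looks up the colors for a chord. `NOTE_COLORS` and `chordColors` are hypothetical helper names, and the color names are the ones used in this write-up; it is only an illustration and not part of the project code.

```javascript
// Hypothetical mapping from note name to the color name used in the write-up.
// C covers both middle C and high C, since octaves share a color.
const NOTE_COLORS = {
  C: "red",
  D: "dark green",
  E: "mustard yellow",
  F: "brown",
  G: "white",
  A: "off-white",
  B: "blue-violet",
};

// Translate a list of note names into their associated color names.
function chordColors(notes) {
  return notes.map((n) => NOTE_COLORS[n]);
}

console.log(chordColors(["C", "E", "G"]).join(", ")); // red, mustard yellow, white
console.log(chordColors(["G", "B", "D"]).join(", ")); // white, blue-violet, dark green
```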

Through this project, I hope that viewers can get some perspective of what it is like to have synesthesia for music and color.
