Daniel Franke and Cedric Kiefer

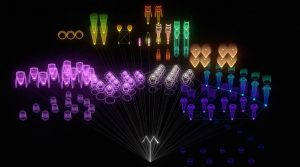

‘Unnamed SoundSculpture’ is a computational design project at the intersection of sound, art, and technology. The sculpture is built from motion data recorded from a real person. Laura Keil, a Berlin-based dancer, was asked to interpret a musical piece, Kreukeltape by Machinefabriek, as closely as possible with the movement of her own body. She was recorded by three depth cameras (Kinect). The overlapping images were then combined into a three-dimensional volume (a 3D point cloud), which was imported into 3ds Max for further rendering. A three-dimensional scene was created, including camera movement controlled by the audio. Through this process, the digital body, consisting of 22,000 points, comes to life.

I couldn’t find much on the code or algorithms used to create this project, but it doesn’t seem that any custom-made software was needed to achieve their goals. Because the sculpture is based on both the music and the dancer’s movement, it captures the artistic sensibility of the performer. The rendering of the 3D environment and of the points making up the human form is truly evocative in motion. I admire the emotional response the project evokes, and its ability to capture the essence of a performance through both the music and the performer.

![[OLD FALL 2017] 15-104 • Introduction to Computing for Creative Practice](https://courses.ideate.cmu.edu/15-104/f2017/wp-content/uploads/2020/08/stop-banner.png)