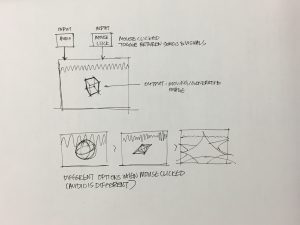

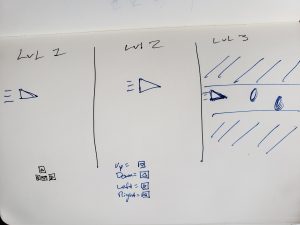

I would like to create a game in which you play as a paper airplane. Because the plane has no on-board propulsion, you are constantly trying to fly through hoops that give you more energy. Beyond this, you progress through levels that each have their own quirks: one level might reverse the controls, and another might require you to mash buttons instead of simply pressing the arrow keys. There might be some obstacles for the player to dodge, and there will probably be on-screen meters showing how much energy you have left and how far you’ve gone.
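The core loop described above is a plane whose energy only drains, hoops that refill it, and levels that change how input is read. It could be sketched roughly as follows. This is a minimal Python sketch under stated assumptions, not the project's code. The names `Plane`, `update`, `steer_normal`, `steer_reversed`, and `steer_mash` are hypothetical, and so are all of the numeric constants. Rendering is left out, and the sketch assumes screen coordinates in which y grows downward.

```python
from dataclasses import dataclass

# Hypothetical tuning values; the proposal does not specify any numbers.
MAX_ENERGY = 100.0
DRAIN_PER_FRAME = 0.5
HOOP_BONUS = 30.0
FORWARD_SPEED = 3.0
STEER_SPEED = 2.0


@dataclass
class Plane:
    y: float = 200.0           # screen y; larger values are lower on screen
    energy: float = MAX_ENERGY  # feeds the energy meter
    distance: float = 0.0       # feeds the "how far you've gone" meter


def steer_normal(up: bool, down: bool) -> int:
    """Standard arrow keys: -1 climbs, +1 dives, 0 holds."""
    return int(down) - int(up)


def steer_reversed(up: bool, down: bool) -> int:
    """Hypothetical 'backwards controls' level: flip the sign."""
    return -steer_normal(up, down)


def steer_mash(press_times: list[float], now: float,
               window: float = 1.0, threshold: int = 5) -> int:
    """Hypothetical button-mashing level: climb only while the number of
    presses in the last `window` seconds reaches `threshold`; else sink."""
    recent = [t for t in press_times if now - t <= window]
    return -1 if len(recent) >= threshold else 1


def update(plane: Plane, steer: int, hoop_hit: bool) -> None:
    """Advance one frame. With no propulsion, every frame costs energy;
    flying through a hoop refills it, capped at MAX_ENERGY. Once energy
    reaches zero the plane stops moving."""
    plane.energy = max(0.0, plane.energy - DRAIN_PER_FRAME)
    if hoop_hit:
        plane.energy = min(MAX_ENERGY, plane.energy + HOOP_BONUS)
    if plane.energy > 0:
        plane.y += steer * STEER_SPEED
        plane.distance += FORWARD_SPEED
```

Keeping each level quirk as a separate steering function means a level only has to choose which one feeds `update`, so the energy and distance logic stays identical across levels.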

Beyond this, I would like to display the data collected from keypresses, simply because I think it would be interesting. It might give players insight into how they can do better, for example by showing whether they’re pressing keys too often.
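One way to collect the keypress data is to log each press with a timestamp and compute a few totals when a level ends. The Python sketch below is an assumption about how this could work, not a description of the project. `KeyLog`, `record`, `summary`, the key names, and the `MASH_RATE` cutoff for "pressing keys too often" are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field

# Hypothetical cutoff for flagging heavy pressing; would need playtesting.
MASH_RATE = 4.0  # presses per second


@dataclass
class KeyLog:
    events: list[tuple[float, str]] = field(default_factory=list)

    def record(self, t: float, key: str) -> None:
        """Store one keypress as (seconds since level start, key name)."""
        self.events.append((t, key))

    def summary(self, duration: float) -> dict:
        """Totals for a level that lasted `duration` seconds."""
        total = len(self.events)
        rate = total / duration if duration > 0 else 0.0
        return {
            "total": total,
            "per_second": rate,
            "by_key": dict(Counter(key for _, key in self.events)),
            "pressing_a_lot": rate > MASH_RATE,
        }


log = KeyLog()
for t, key in [(0.0, "UP"), (0.5, "UP"), (1.0, "DOWN")]:
    log.record(t, key)
print(log.summary(duration=2.0))
# {'total': 3, 'per_second': 1.5, 'by_key': {'UP': 2, 'DOWN': 1}, 'pressing_a_lot': False}
```

Storing raw timestamped events rather than running counters keeps the option open to show other statistics later, such as the longest gap between presses.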

![[OLD FALL 2018] 15-104 • Introduction to Computing for Creative Practice](https://courses.ideate.cmu.edu/15-104/f2018/wp-content/uploads/2020/08/stop-banner.png)