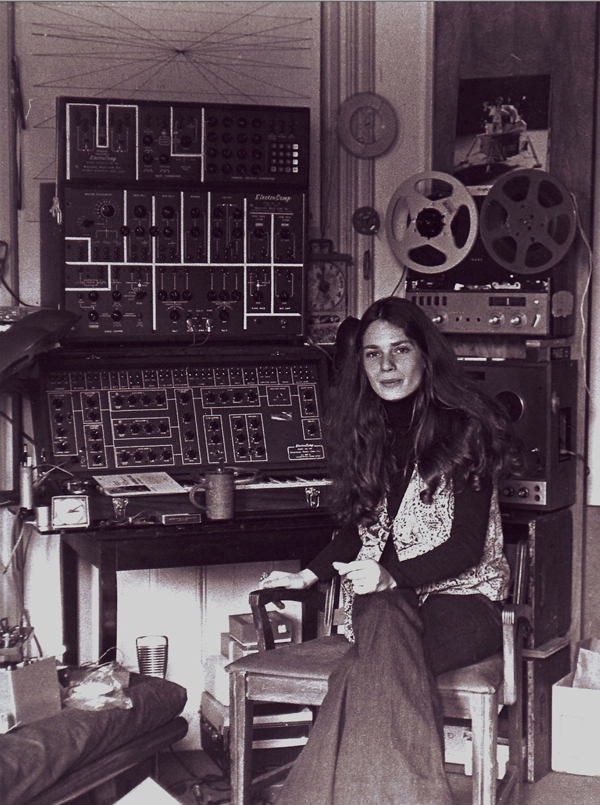

For this week’s Looking Outward, I decided to look into Imogen Heap’s “Mi Mu Gloves,” a project she originally conceived in 2010 but that only recently could be fabricated and prototyped for the public. Heap, originally known only as a musician, wanted the gloves to take electronic music to another level by letting artists step away from their immobile instruments and connect with the audience. The gloves contain a large number of flex sensors, buttons, and vibration motors that together simulate the feel and sound of real instruments. They communicate over Wi-Fi with dedicated software that reads the wearer’s movements and translates them into sounds, depending on the inputs the user has recorded.

It was interesting to see how far technology has come, to the point where a musician can play instruments without the physical instrument in front of them. I admire Heap’s ability to turn the vision she first had in 2010 into reality despite the barriers that existed. I also admire the flexibility the gloves offer, even to people whose physical disabilities prevent them from playing traditional instruments. Kris Halpin demonstrates this: his life as a musician has been dramatically changed by the Mi Mu gloves.

![[OLD FALL 2020] 15-104 • Introduction to Computing for Creative Practice](https://courses.ideate.cmu.edu/15-104/f2020/wp-content/uploads/2021/09/stop-banner.png)