I wanted to make an interesting convolution effect in which the kernel is applied at a single point and its effect then decays exponentially at all the other points, out to the edge of the image or a given radius. Unfortunately, that meant doing a lot of pixel-by-pixel calculation and, as I found out much later, Jitter doesn’t really like that (apparently I would have had to write my own shader).

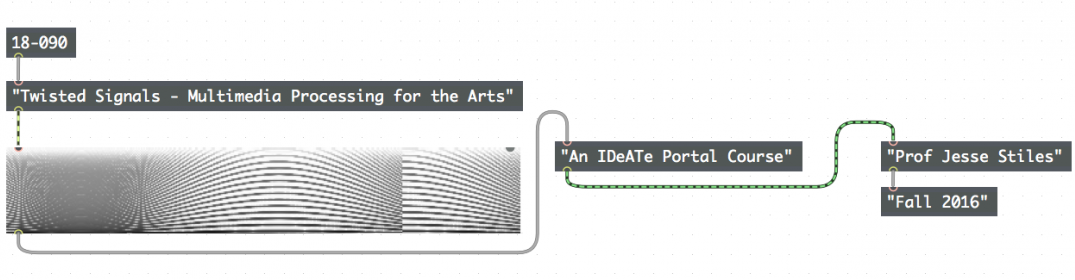
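For the curious, here is a rough sketch of how the decaying-kernel idea from the opening paragraph could be computed. It is only an illustration in Python with NumPy, not a Jitter patch, and it rests on one reading of the effect: filter the whole image once, then blend the filtered and original images with a weight that falls off as `exp(-distance / decay)` from the chosen point. The names `decaying_convolution`, `filter_same`, `decay`, and `radius` are all made up for this example, and it assumes a single-channel (grayscale) image.

```python
import numpy as np

def filter_same(image, kernel):
    """Apply a small kernel to a 2D image, keeping the image size.

    Note: this is cross-correlation (the kernel is not flipped), which is
    identical to convolution for symmetric kernels.
    """
    kh, kw = kernel.shape
    h, w = image.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    out = np.zeros((h, w), dtype=float)
    # Sum shifted copies of the image instead of looping over every pixel.
    for dy in range(kh):
        for dx in range(kw):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

def decaying_convolution(image, kernel, center, decay=10.0, radius=None):
    """Blend filtered and original images; the effect fades away from `center`.

    `center` is (row, col). `decay` and `radius` are in pixels and are
    hypothetical parameters chosen for this sketch.
    """
    image = np.asarray(image, dtype=float)
    filtered = filter_same(image, np.asarray(kernel, dtype=float))
    rows, cols = np.indices(image.shape)
    dist = np.hypot(rows - center[0], cols - center[1])
    weight = np.exp(-dist / decay)      # 1 at the center, falling toward 0
    if radius is not None:
        weight[dist > radius] = 0.0     # no effect at all beyond the radius
    return weight * filtered + (1.0 - weight) * image
```

Because the weight mask is built with whole-array operations, there is no per-pixel Python loop, which is roughly the role a shader would play on the GPU.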

So I settled for this trippy video processor: it takes video and audio, feeds the video through some delay lines, and then convolves the delayed frames with randomly generated kernels that are weighted towards specific effects depending on thresholds in the audio signal. I figured it would be fine, since people at raves don’t tend to be too picky.
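In case the signal flow is hard to picture, here is a loose Python/NumPy sketch of the same idea: a frame delay line, an audio level compared against thresholds, and a random kernel pulled towards one effect. It is not the actual patch. The threshold values, the three base kernels, the `mix` parameter, and every function name here are assumptions invented for illustration, and frames are treated as 2D grayscale arrays.

```python
from collections import deque
import numpy as np

# Hypothetical 3x3 base kernels that the random kernels lean towards.
BASE_KERNELS = {
    "blur": np.full((3, 3), 1.0 / 9.0),
    "sharpen": np.array([[0, -1, 0], [-1, 5, -1], [0, -1, 0]], dtype=float),
    "edge": np.array([[-1, -1, -1], [-1, 8, -1], [-1, -1, -1]], dtype=float),
}

def pick_effect(audio_level, low=0.2, high=0.6):
    """Map an audio level in [0, 1] to an effect name via two thresholds."""
    if audio_level < low:
        return "blur"
    if audio_level < high:
        return "sharpen"
    return "edge"

def random_kernel(audio_level, rng, mix=0.7):
    """Random 3x3 kernel weighted towards the effect picked by the audio."""
    base = BASE_KERNELS[pick_effect(audio_level)]
    noise = rng.uniform(-1.0, 1.0, size=base.shape)
    return mix * base + (1.0 - mix) * noise

def filter3x3(frame, kernel):
    """Apply a 3x3 kernel to a 2D frame, keeping the frame size."""
    h, w = frame.shape
    padded = np.pad(frame, 1, mode="edge")
    out = np.zeros((h, w), dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out

class DelayLine:
    """Returns the frame pushed `delay` calls ago (the oldest one until full)."""
    def __init__(self, delay):
        self.buffer = deque(maxlen=delay + 1)

    def push(self, frame):
        self.buffer.append(frame)
        return self.buffer[0]

def process_frame(frame, audio_level, line, rng):
    """Delay the incoming frame, then filter it with an audio-driven kernel."""
    delayed = line.push(np.asarray(frame, dtype=float))
    return filter3x3(delayed, random_kernel(audio_level, rng))
```

A loop over the video would call `process_frame` once per frame, with a generator from `np.random.default_rng()` and the current audio level, for example a normalized amplitude.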

For the video, I used one of my favorite songs, Figure 8 by FKA Twigs, paired with one of my favorite dance clips, an excerpt from the pas de deux of Matthew Bourne’s all-male Swan Lake. I hope you enjoy!