Problem: Waking up is hard. Lights don’t work. Alarms don’t work. Being yelled at doesn’t work. You have to be moved to be woken up. But what if no one is there to shake you awake?

Target Audience: Deaf/hearing-impaired children. Proper sleep is a habit that needs to be taught from a young age to help lead to a healthier lifestyle later in life. Children with hearing impairments face an extra barrier to “learning how and when” to sleep because they miss some of the important audio cues that trigger and aid sleep. First, there is a high level of sleep insecurity among children with hearing impairment. Children who can hear are usually comforted by their parents’ voices during a bedtime story or by the normal sounds around the house; children who cannot hear do not have that luxury. They have to face total silence and darkness alone, which is scary for people of all ages. In addition, some of these children use hearing aids during the day, which makes the shift from daytime noise to total silence at night even sharper and more disturbing.

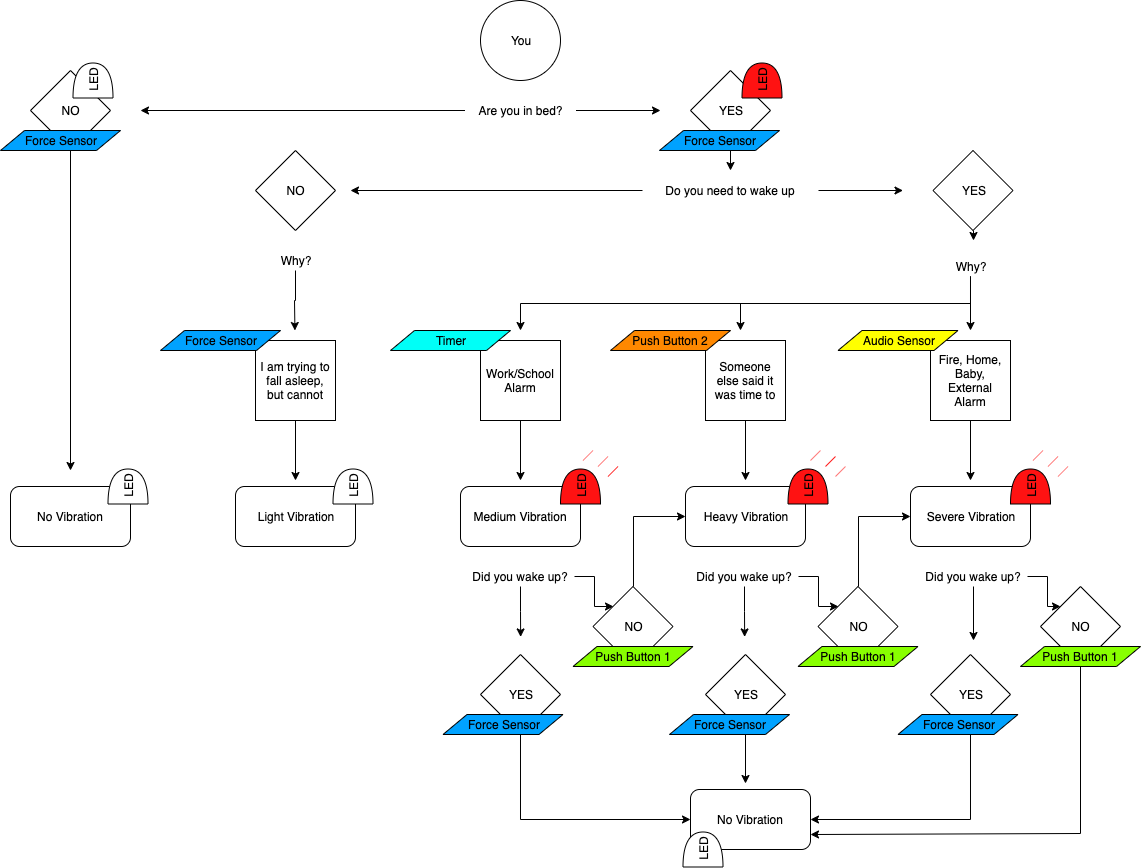

General solution: A vibrating bed that takes in various sources of available data and inputs to get you out of bed. Everyone has different sleep habits and different life demands, so depending on why you are being woken up, the bed will shake in a certain way. How?

- Continuous data streams

- Google Calendar: if a child’s parents keep an accurate calendar and program in morning routines like cleaning up and eating breakfast, the bed could learn when it should wake the sleeper up for work/school

- The bed can adjust this decision depending on traffic patterns, weather, and other people’s events (kids, friends, etc.)

- This process could also teach kids how to plan their mornings and establish a routine.

- Sleep data: lots of research has been done on sleep cycles, and various pieces of technology can track biological data like heart rate and REM stage. Your bed could learn your particular patterns over time and wake you up at an optimal point in your sleep cycle

- Situational

- High-frequency noises: if a fire alarm or security alarm goes off, a child who cannot hear would usually be forced to wait for their parents to grab them and go. This feature could wake them up sooner and help speed up a potential evacuation

- “Kitchen wake-up button”: Kids do not always follow directions… so here, a parent can tap a button in a different room to shake the bed without having to go into their child’s room

- The button system also has a status LED that shows whether the child is in bed:

- Off = not in bed

- On = in bed

- Flashing = in bed, but alarm activated

- Interacting with User

- Snooze: if sleeper hits a button next to their bed three times in a row, then the alarm will turn off and not turn back on

- Insecure Sleep Aid: if the bed senses that a child is tossing and turning for a certain amount of time as they get into bed, then it can lightly rumble to simulate rubbing a child’s back or “physical white noise feedback”

- Parents: if the beds are linked throughout the house, your bed can shake as well when your kid gets out of bed
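The status LED and snooze rules in the list above can be sketched as a small state model. This is only an illustration, not the demo's actual code: the names `BedState`, `LedStatus`, and `SNOOZE_PRESSES_TO_DISMISS` are hypothetical, "three times in a row" is assumed to mean three presses while the alarm is active (no timing window), and the LED is assumed to stay off whenever nobody is in bed.

```python
from dataclasses import dataclass
from enum import Enum


class LedStatus(Enum):
    OFF = "off"            # not in bed
    ON = "on"              # in bed
    FLASHING = "flashing"  # in bed, but alarm activated


# Assumption: three presses while the alarm is active count as "in a row".
SNOOZE_PRESSES_TO_DISMISS = 3


@dataclass
class BedState:
    in_bed: bool = False
    alarm_active: bool = False
    snooze_presses: int = 0

    def led_status(self) -> LedStatus:
        """Map the bed state to the LED on the kitchen button."""
        if not self.in_bed:
            return LedStatus.OFF  # assumption: off even if an alarm is pending
        return LedStatus.FLASHING if self.alarm_active else LedStatus.ON

    def start_alarm(self) -> None:
        """Begin shaking and reset the snooze counter."""
        self.alarm_active = True
        self.snooze_presses = 0

    def press_snooze(self) -> None:
        """Count a bedside button press; the third one turns the alarm off."""
        if not self.alarm_active:
            return  # presses with no alarm running are ignored
        self.snooze_presses += 1
        if self.snooze_presses >= SNOOZE_PRESSES_TO_DISMISS:
            self.alarm_active = False
            self.snooze_presses = 0
```

In this sketch, a child who is in bed with the alarm running shows a flashing LED in the kitchen, and the LED goes back to solid once the third snooze press lands.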

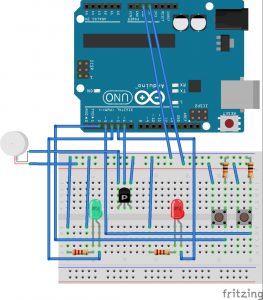

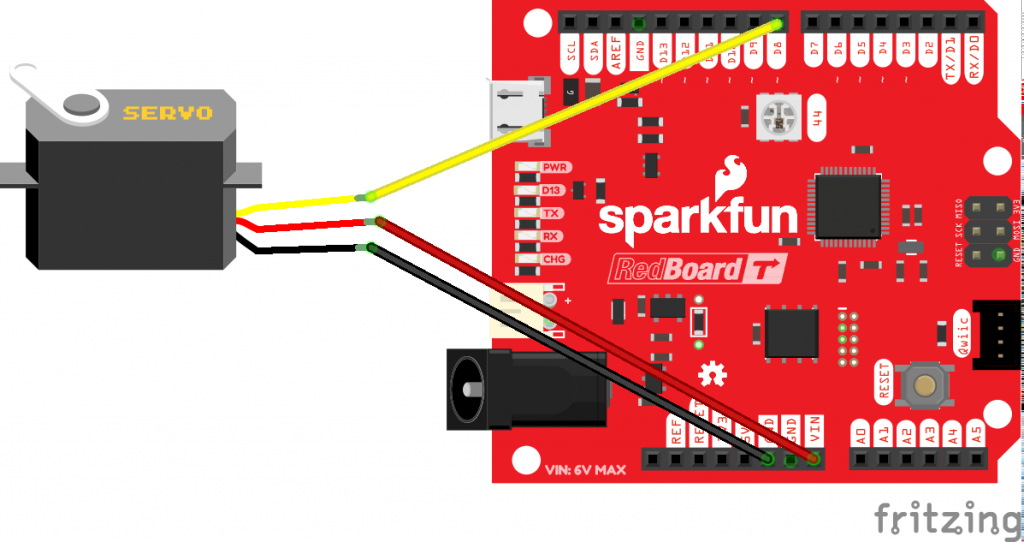

Proof of Concept: I connected the following pieces of hardware to create this demo:

- Transducers: shake the bed at a given frequency

- One located by the sleeper’s head

- One located by the sleeper’s feet

- Potentiometer: represents an audio source

- The transducer turns on at different intensities depending on where the potentiometer is set

- Push button 1: represents the “kitchen wake-up button”, works as an interrupt within the program

- LED: part of the “kitchen wake-up button” that shows the status of the bed

- Push button 2: represents the “snooze” feature of the bed, where a sleeper can turn off the rumbling to go back to sleep

- Flex Resistor: represents a sensor in the bed that determines if someone is in the bed or not
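To make the wiring above more concrete, here is a rough sketch of how the inputs could be combined into one transducer drive level. It is a simulation in plain Python, not the code that ran on the demo hardware. The 10-bit input range, 8-bit output range, flex threshold, and the priority order (empty bed and snooze silence the bed, then the kitchen button, then the potentiometer) are all assumptions, and every function name is hypothetical.

```python
ADC_MAX = 1023               # assumed 10-bit analog reading (0-1023)
PWM_MAX = 255                # assumed 8-bit drive level (0-255)
FLEX_IN_BED_THRESHOLD = 512  # hypothetical reading that means "someone is in bed"


def pot_to_intensity(pot_reading: int) -> int:
    """Scale the potentiometer (stand-in for an audio source) to a drive level."""
    clamped = max(0, min(ADC_MAX, pot_reading))
    return clamped * PWM_MAX // ADC_MAX


def is_in_bed(flex_reading: int) -> bool:
    """Treat a flex sensor reading at or above the threshold as an occupied bed."""
    return flex_reading >= FLEX_IN_BED_THRESHOLD


def transducer_level(pot_reading: int, flex_reading: int,
                     wake_pressed: bool, snoozed: bool) -> int:
    """Pick one drive level for both transducers from the current inputs."""
    if not is_in_bed(flex_reading) or snoozed:
        return 0        # empty bed or snoozed: no rumbling
    if wake_pressed:
        return PWM_MAX  # kitchen wake-up button overrides the audio level
    return pot_to_intensity(pot_reading)
```

For example, `transducer_level(512, 800, wake_pressed=False, snoozed=False)` gives a mid-range level, while pressing the kitchen button with the same readings drives the transducers at full strength.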

Summary: A device like this could help children (and adults) who cannot hear to feel more secure in their sleep and encourage healthier sleep patterns. One of the biggest potential challenges with the device would be finding a powerful enough motor/transducer that can produce a variety of vibrations across a heavy bed/frame (and the quieter the better, for the rest of the home’s sake). Even with that challenge, though, everyone should have a good night’s sleep and this could be a way to provide that.

Files: KineticsCrit