Problem

I started with a few balance-related problems, especially ones that visually impaired people face. First, carrying a pot of hot soup is dangerous for them because they cannot check whether the pot is level. Second, when they assemble furniture, especially shelves, the surfaces need to be level for the result to be safe.

Balance also matters to sighted people in many situations, for example when taking a photo.

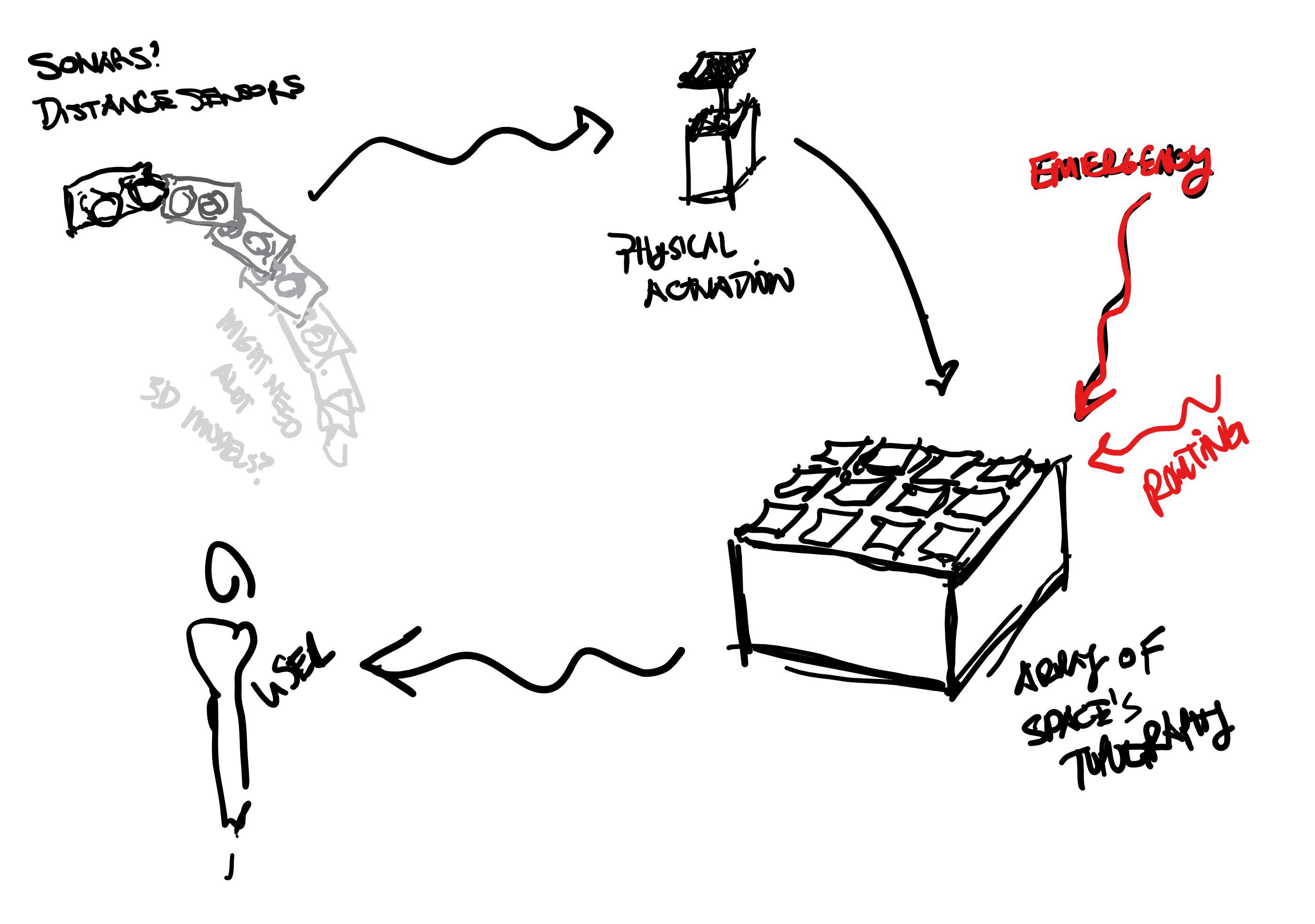

General Solution

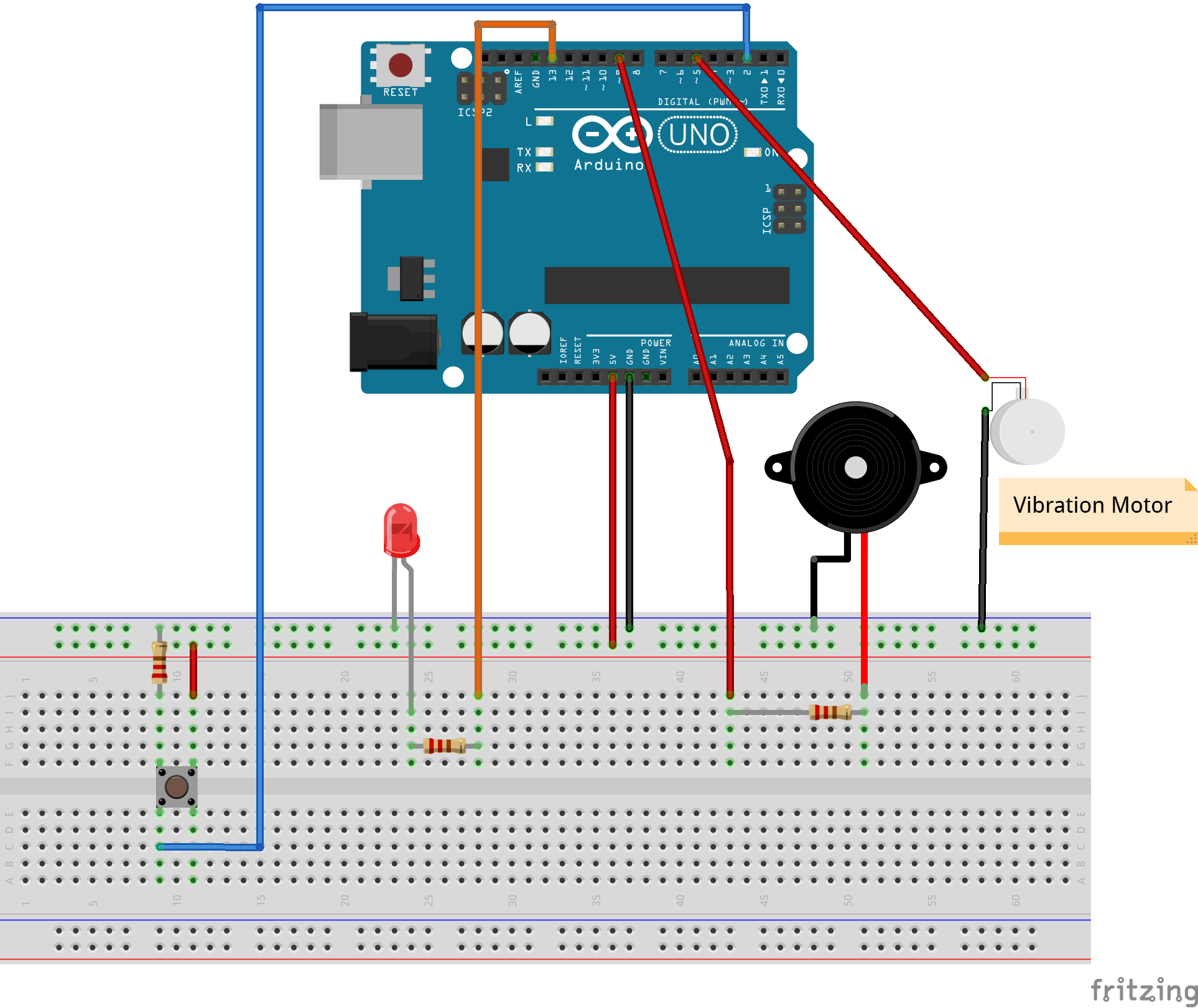

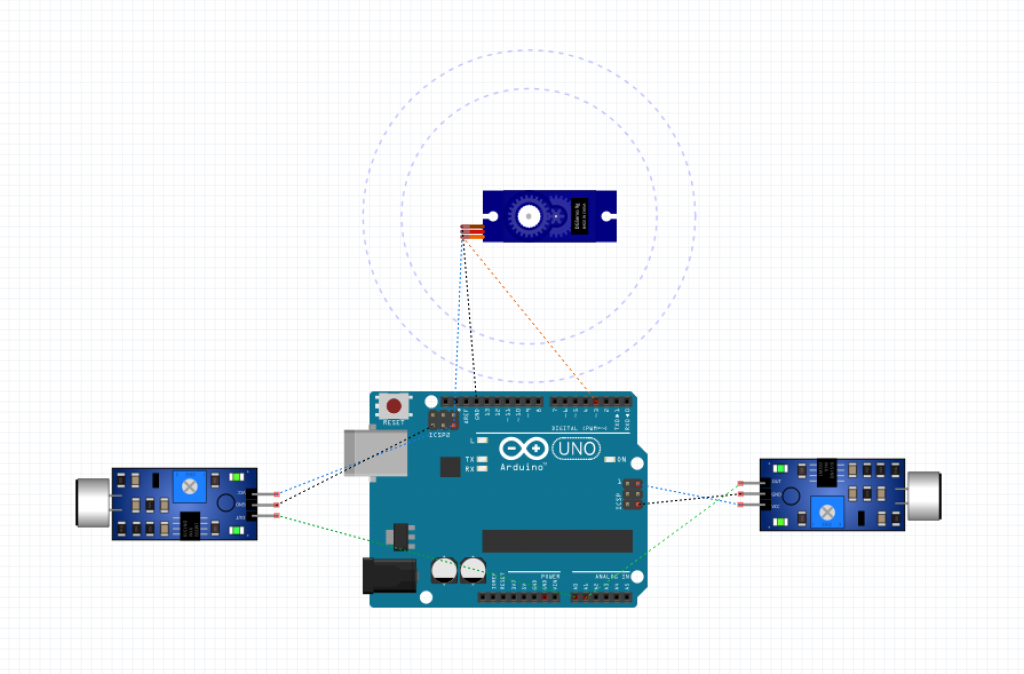

How might we use tactile feedback to let people feel when something is tilted or unbalanced? I thought that vibration of varying intensity would be a good way to do it.

Proof of Concept

I decided to use the iPhone for three reasons. First, it can generate a variety of tactile feedback using different patterns and intensities. Second, I could take advantage of its embedded sensors. Lastly, I thought that a vibration-based application would make this kind of feedback accessible to many people.

I divided the tilt angle into four ranges, each with a different vibration intensity. When a user tilts the phone 5 to 20 degrees, it vibrates lightly. From 21 to 45 degrees, it generates a medium vibration. From 46 to 80 degrees, it generates an intense vibration. Lastly, from 81 to 90 degrees, it vibrates most intensely (just like when it receives a call). I also mapped the tilt angle to an RGB color code, so the color changes accordingly.
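The mapping from tilt angle to vibration tier can be sketched in Swift as below. This is a minimal illustration, not the app's actual code: the names `TiltFeedback` and `tiltFeedback(forDegrees:)` are hypothetical, and treating anything under 5 degrees as "no vibration", ignoring the tilt direction, and rounding to whole degrees are all assumptions made to fill in details the description leaves open.

```swift
/// Feedback tiers from the description above.
/// `off` (below 5 degrees) is an assumption; the text does not say what happens there.
enum TiltFeedback: String {
    case off, light, medium, intense, strongest
}

/// Maps a tilt angle in degrees to a feedback tier (hypothetical helper).
func tiltFeedback(forDegrees degrees: Double) -> TiltFeedback {
    // Assumption: use the magnitude so both tilt directions behave the same,
    // cap it at 90, and round to whole degrees so the integer ranges have no gaps.
    let angle = Int(min(abs(degrees), 90).rounded())
    switch angle {
    case ..<5:    return .off
    case 5...20:  return .light
    case 21...45: return .medium
    case 46...80: return .intense
    default:      return .strongest  // 81...90
    }
}

// Print the tier chosen for a few sample angles.
for sample in [0.0, 12.0, 30.0, 60.0, 88.0] {
    print(sample, tiltFeedback(forDegrees: sample).rawValue)
}
```

On iOS, each tier would then need to be connected to a haptic call. One option is `UIImpactFeedbackGenerator`, which offers light, medium, and heavy styles, but which API the app actually uses is not stated here.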

Video & Code