IGOE

I agreed with many of his positions on overused trends, and I have personally seen many iterations of them in my time exploring physical computing. I felt, however, that he dismissed some really cool potential ideas too broadly. Yes, single-input, single-output networked devices such as the “Remote Hugs” project can definitely be considered overused. However, I feel that lumping all “technologically enabled gloves” together is a bit of a misdiagnosis: there are dozens of possibilities for what the gloves track, whether they monitor the wearer or serve as inputs, what types of devices they control, and whether the output is digital or mechanical.

DESCENDANTS

I found the idea of an intelligent machine having a personality and even lying to its user amusing and a little terrifying. A seemingly seamless conversational user interface, the suit walks a fine line between person and device. It has necessary functions that are conveniently accessible through speech. However, making a device too “human” seems to leave a lot of room for unintended consequences, such as the suit being able to lie to its user (as implied when the user believes the suit is lying about their chances of survival). Even in a seemingly “perfect” design, new problems seem to have arisen.

SKILLS AND BACKGROUND

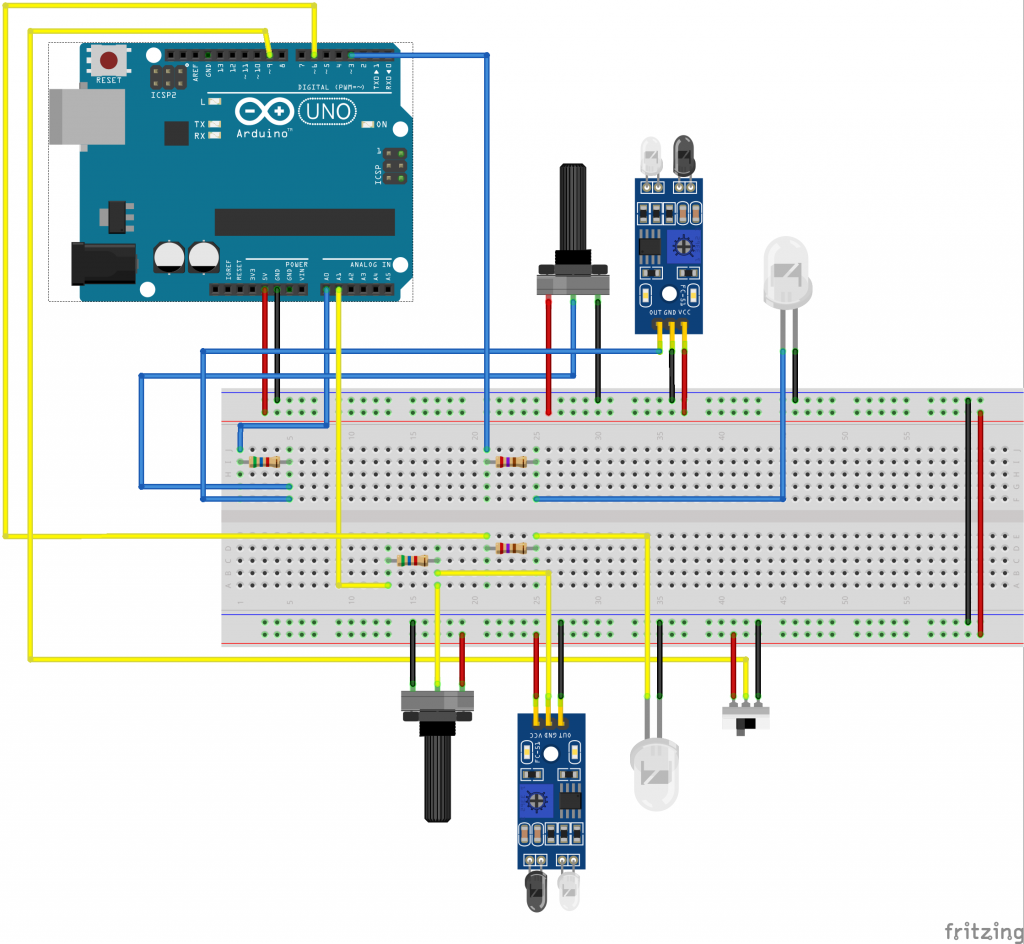

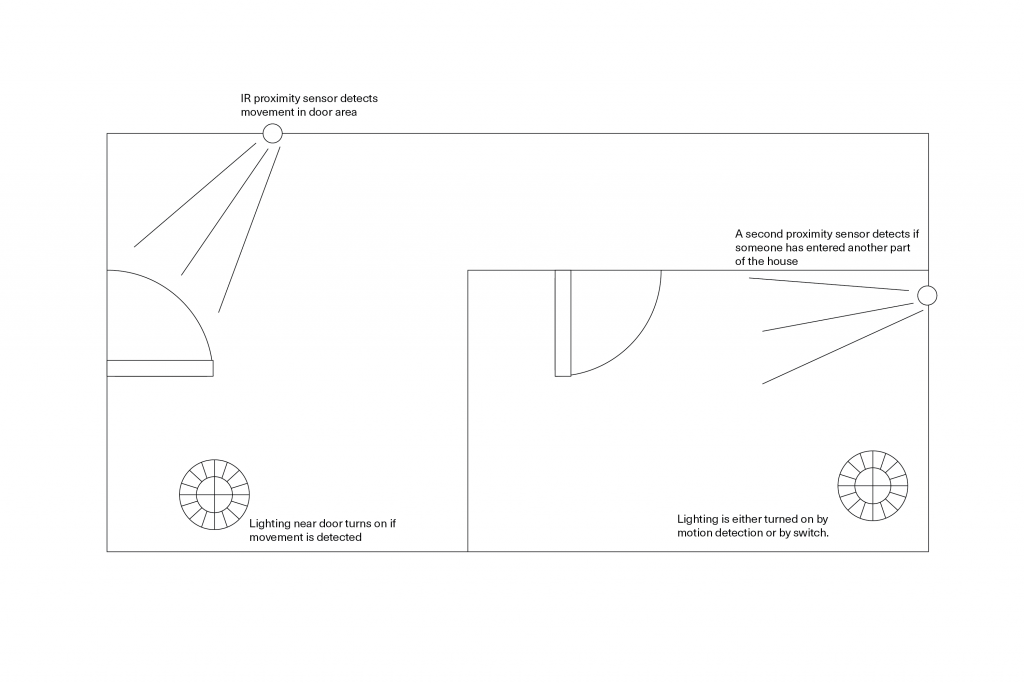

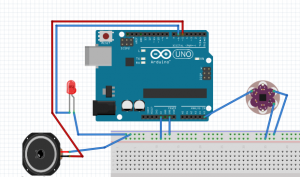

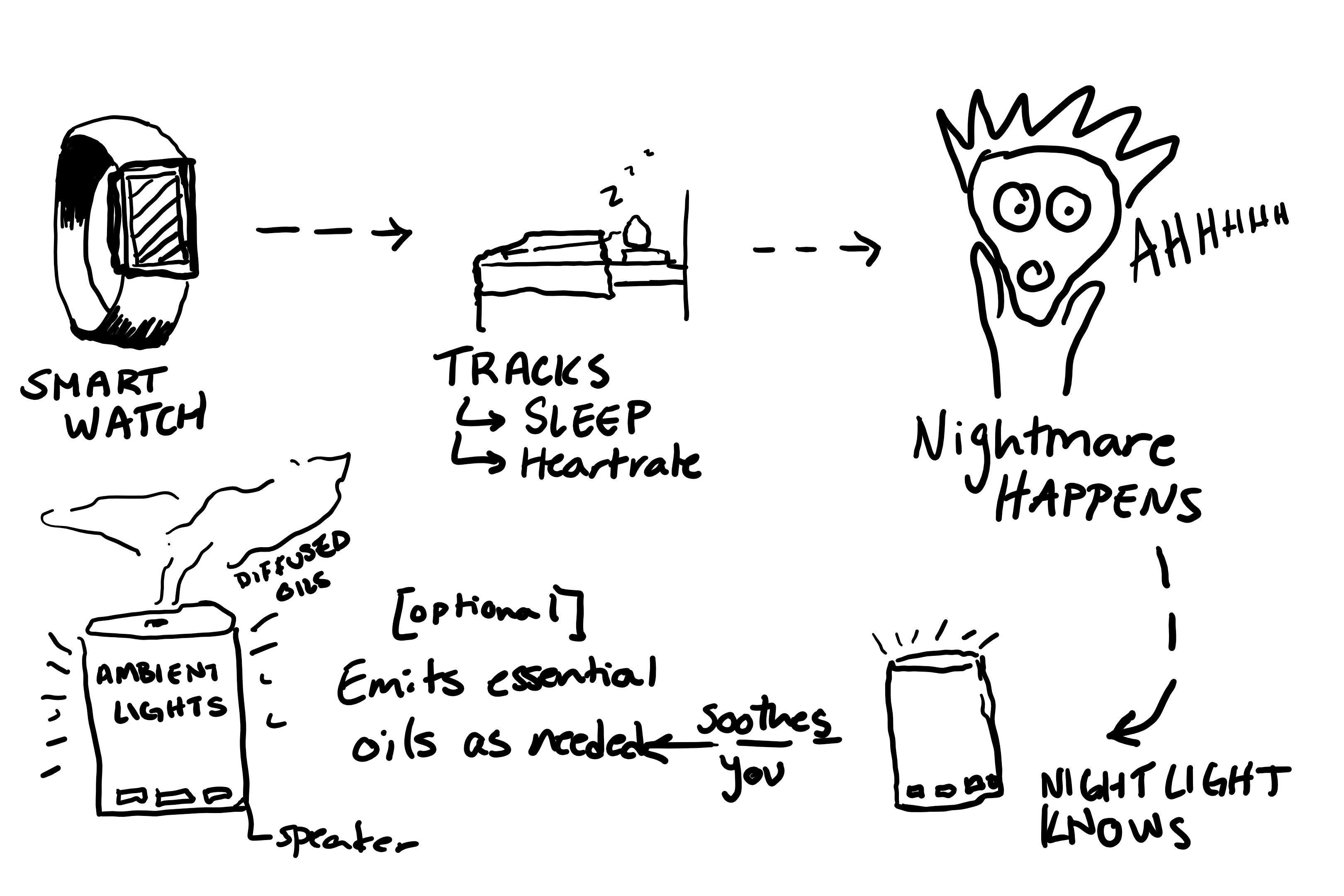

I am an Information Systems and Human-Computer Interaction undergraduate. I focus my studies on full-stack application design and on small-device design and programming.

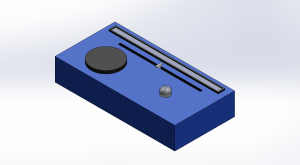

I have experience with Arduino, 3D printing, laser cutting, CAD, Rhino, SolidWorks, Photoshop, InDesign, and various other software tools.

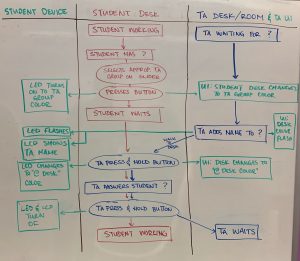

I have worked on a few physical computing projects before: I was part of a team designing wearables and applications for Alzheimer's/dementia patients, built interactive displays, and helped design a temporary display at the Computer History Museum in Mountain View, CA.