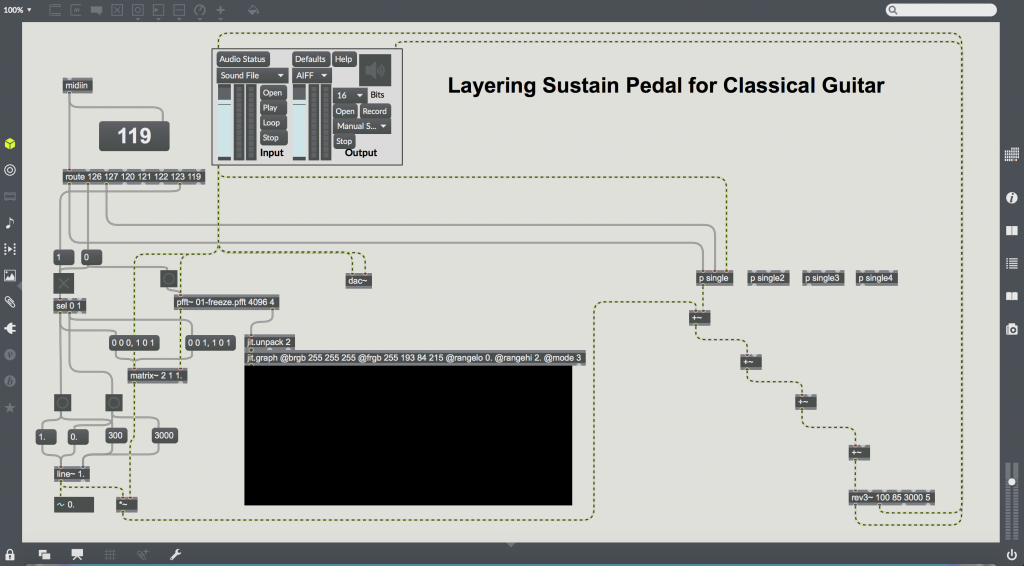

Because the sound of a nylon-string guitar decays quickly after a string is plucked, the instrument is poorly suited to long sustained notes. Inspired by the sustain pedal found on most pianos, I created a software patch in Max 7 that lets the player use a MIDI foot pedal as an enhanced sustain pedal for guitar. By pressing the foot pedal's buttons, a guitarist can freeze the current sound, sustain it artificially, and layer up to five sustained sounds on top of one another. The guitarist can also use the pedal to slowly fade out all currently sustained sounds, or only the most recently added one.
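
The pedal behaviour described above is essentially a small piece of state: a stack of at most five frozen voices, each with a gain that ramps up when the voice is added and ramps down when it is released. The Python sketch below models only that control logic, not the audio, and is an illustration rather than the patch itself (which is built from Max 7 objects). `SustainStack`, `Voice`, `fade_ticks` and the tick-based timing are hypothetical names chosen for this example. What happens on a sixth press is also an assumption: here the press is simply ignored.

```python
from dataclasses import dataclass, field

MAX_VOICES = 5  # up to five sustained sounds can be layered


@dataclass
class Voice:
    snapshot_id: int       # hypothetical handle for one frozen sound
    gain: float = 0.0      # current output gain
    target: float = 1.0    # gain the current ramp is heading toward
    step: float = 0.0      # gain change per tick while ramping
    ticks_left: int = 0    # ticks remaining in the current ramp


@dataclass
class SustainStack:
    fade_ticks: int = 100  # hypothetical ramp length, in control ticks
    voices: list = field(default_factory=list)
    next_id: int = 0

    def _ramp(self, voice, target):
        # Start a straight-line ramp from the voice's current gain to `target`.
        voice.target = target
        voice.step = (target - voice.gain) / self.fade_ticks
        voice.ticks_left = self.fade_ticks

    def freeze(self):
        """Freeze the current sound as a new voice that fades in."""
        if len(self.voices) >= MAX_VOICES:
            return None  # assumption: extra presses are ignored once five are held
        voice = Voice(self.next_id)
        self.next_id += 1
        self._ramp(voice, 1.0)
        self.voices.append(voice)
        return voice.snapshot_id

    def fade_last(self):
        """Fade out only the most recently added voice that is still sounding."""
        for voice in reversed(self.voices):
            if voice.target > 0.0:
                self._ramp(voice, 0.0)
                return

    def fade_all(self):
        """Fade out every sustained voice."""
        for voice in self.voices:
            self._ramp(voice, 0.0)

    def tick(self):
        """Advance every ramp by one step and drop voices that have gone silent."""
        for voice in self.voices:
            if voice.ticks_left > 0:
                voice.ticks_left -= 1
                # Land exactly on the target at the end to avoid float drift.
                voice.gain = voice.target if voice.ticks_left == 0 else voice.gain + voice.step
        self.voices = [v for v in self.voices if not (v.target == 0.0 and v.gain == 0.0)]
```

Using ramps rather than instant gain changes mirrors the gradual starts and stops the patch performs, so no press produces an audible click.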

Hardware

For this project I attached a bridge pickup to a classical guitar and sent the analog signal through a MOTU 4pre audio interface into my laptop. For the foot pedal, I used a Logidy UMI3 MIDI foot controller.

Software

I used Jean Francois Charles’ freeze patch as the starting point for my patch, which saves a matrix of FFT data for a given sample. This sample is then repeatedly resynthesized using an inverse FFT to produce the drone, with some rev3 reverb added on top. Several drones can be sustained at once by summing their signals. The drones are started and stopped gradually using the line object.
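
As a rough illustration of the freeze-and-resynthesize idea, the Python sketch below captures the magnitude spectrum of one windowed frame. It then builds a sustained drone by repeatedly running an inverse DFT with fresh random phases and overlap-adding the windowed frames. This is a common, simplified form of spectral freezing and not a transcription of Jean Francois Charles' patch, which stores FFT data in a matrix inside Max. The random-phase choice, the naive DFT, the Hann window, and every function name here are assumptions. `linear_fade` stands in for the gradual starts and stops the patch gets from the line object, `mix` stands in for summing several drones, and the reverb stage is left out.

```python
import cmath
import math
import random


def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * t / n) for t in range(n)]


def dft(frame):
    """Naive O(N^2) discrete Fourier transform; adequate for a short illustration."""
    n = len(frame)
    return [sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def freeze_magnitudes(samples, start, n):
    """Snapshot the magnitude spectrum of one windowed n-sample frame."""
    win = hann(n)
    frame = [samples[start + t] * win[t] for t in range(n)]
    return [abs(c) for c in dft(frame)]


def resynthesize(mags, num_frames, hop, rng):
    """Turn a frozen magnitude spectrum into a sustained signal.

    Each output frame reuses the same magnitudes with fresh random phases,
    runs an inverse DFT, and is windowed and overlap-added every `hop` samples.
    Assumes an even frame length n.
    """
    n = len(mags)
    win = hann(n)
    out = [0.0] * (hop * (num_frames - 1) + n)
    for f in range(num_frames):
        spec = [0j] * n
        spec[0] = complex(mags[0])            # DC bin stays real
        spec[n // 2] = complex(mags[n // 2])  # Nyquist bin stays real
        for k in range(1, n // 2):
            spec[k] = cmath.rect(mags[k], rng.uniform(0.0, 2 * math.pi))
            spec[n - k] = spec[k].conjugate()  # Hermitian symmetry -> real output
        for t in range(n):
            sample = sum(spec[k] * cmath.exp(2j * math.pi * k * t / n)
                         for k in range(n)).real / n
            out[f * hop + t] += sample * win[t]
    return out


def linear_fade(signal, start_gain, end_gain):
    """Multiply a signal by a straight-line gain ramp from start_gain to end_gain."""
    last = max(len(signal) - 1, 1)
    return [x * (start_gain + (end_gain - start_gain) * i / last)
            for i, x in enumerate(signal)]


def mix(signals):
    """Sum several drones sample by sample; shorter ones are treated as silent at the end."""
    length = max(len(s) for s in signals)
    return [sum(s[i] for s in signals if i < len(s)) for i in range(length)]
```

For example, freezing a 500 Hz sine sampled at 8 kHz with a 64-sample frame gives bins 125 Hz apart, so the frozen spectrum peaks at bin 4. A real-time version would use an FFT rather than this quadratic DFT.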