Abstract:

The goal of this ambisonic soundscape was to explore how the perception of time passing changes as one ages. This was accomplished by layering ambient environmental samples around an underlying concept of rotation, and by grounding the foundational pulse in the progressive acceleration of a ticking clock. The piece reaches its climax at the clock's highest RPM, where the interplay of sounds weaves a chaotic background symbolic of the whirlwind of life itself. Ultimately, the tension resolves as the chaotic elements are removed, representing a self-discovered inner peace and harmony found at the end of the journey.

Ideas:

The idea behind this work was to create a soundscape built from many rotating sources and to layer them in interesting ways. We used multiple recordings of different rotating objects, such as clocks, a finger run around the rim of a wine glass holding water, and diesel engines. The work begins with the sound of a clock ticking around the listeners at one rotation per minute, the angular velocity of a second hand. As the second hand ticks at an increasing rate, more samples enter, some rotating at various velocities and some recorded as stationary sources. This acceleration of time brings in more and more recordings until a balance of sound and space is reached, after which the recordings disperse.
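
As a worked conversion (arithmetic added for reference, not taken from the Max patch), one rotation per minute corresponds to:

$$\omega = \frac{2\pi\,\text{rad}}{60\,\text{s}} \approx 0.105\,\text{rad/s} = 6^\circ/\text{s}$$

Since a ticking second hand advances once per second, each tick moves it 6°; doubling the tick rate doubles the angular velocity.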

A draft of our compositional outline can be found here: https://docs.google.com/a/andrew.cmu.edu/document/d/13lr_reW6H9SErvx6deQELith79aUhBvXnYFV_HIASJk/edit?usp=sharing

Max Patch:

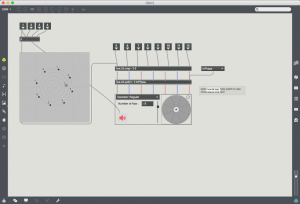

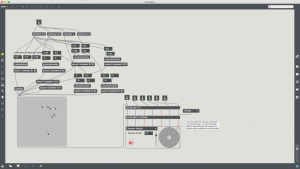

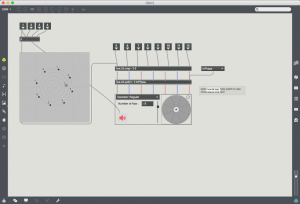

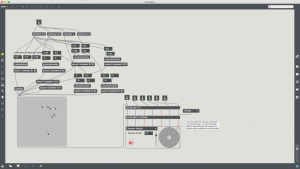

The programming in Max/MSP consists of two main patches: a rotational patch, which moves sources around the listener at a specified rotational velocity, and an oscillating patch, which moves sources along a predefined path. An additional patch allowed a Novation Launchkey 49 to be used as a MIDI controller for the levels of the rotational sound sources.
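
The write-up does not describe how controller values were scaled inside Max. As a minimal sketch, assuming the Launchkey's faders send standard 7-bit MIDI control change (CC) values (0-127), a fader position could be turned into a source gain with a decibel taper. The -60 dB floor, the function names and the CC numbers 21-28 are hypothetical placeholders, not the mapping actually used in the patch.

```python
def cc_to_gain(value: int, floor_db: float = -60.0) -> float:
    """Convert a 7-bit MIDI CC value (0-127) to a linear amplitude gain.

    0 is treated as silence; 1..127 is spread linearly in dB from
    floor_db (a hypothetical floor) up to 0 dB, i.e. unity gain.
    """
    if not 0 <= value <= 127:
        raise ValueError("MIDI CC values must be between 0 and 127")
    if value == 0:
        return 0.0
    db = floor_db + (value - 1) / 126 * -floor_db
    return 10 ** (db / 20)


# Hypothetical: CC numbers 21-28 control rotational sources 0-7.
CC_TO_SOURCE = {21 + i: i for i in range(8)}


def handle_control_change(cc_number: int, value: int, gains: list) -> None:
    """Update the gain of the mapped source in place; ignore unmapped CCs."""
    source = CC_TO_SOURCE.get(cc_number)
    if source is not None:
        gains[source] = cc_to_gain(value)


gains = [0.0] * 8
handle_control_change(21, 127, gains)  # first source to unity gain
handle_control_change(64, 100, gains)  # unmapped CC: ignored
```

A decibel taper is used because a linear amplitude mapping would put most of the audible change in the bottom of the fader's travel.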

The rotational patch was built on the hoa.2d.map object from the HOA library. It calculates Cartesian coordinates for eight separate sources, including a function for their magnitude (distance from the center). All sources spin at a predefined rotational velocity that increases linearly over the course of the performance. Multiple rotational patches were used during the piece.
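
The rotational motion can be sketched outside Max as follows. This is a minimal Python illustration, not the patch itself: it assumes the eight sources are evenly spaced on a circle of fixed radius, that angles are measured counter-clockwise from the x-axis (hoa.2d.map may use a different convention), and that the angular velocity starts at one rotation per minute and grows linearly at a hypothetical rate `alpha`. Because the velocity changes over time, the rotation angle is its integral, omega0*t + alpha*t²/2, rather than omega(t)*t.

```python
import math


def rotating_sources(t: float, n_sources: int = 8, radius: float = 1.0,
                     omega0: float = 2 * math.pi / 60, alpha: float = 0.0):
    """Return (x, y) positions of n evenly spaced sources at time t (seconds).

    Angular velocity is omega(t) = omega0 + alpha * t (rad/s), so the shared
    rotation angle is its integral: omega0 * t + 0.5 * alpha * t**2.
    """
    phase = omega0 * t + 0.5 * alpha * t * t
    positions = []
    for k in range(n_sources):
        theta = phase + 2 * math.pi * k / n_sources  # even spacing
        positions.append((radius * math.cos(theta), radius * math.sin(theta)))
    return positions


def magnitude(x: float, y: float) -> float:
    """Distance of a source from the center of the listening space."""
    return math.hypot(x, y)


# One rotation per minute: after 15 s source 0 has turned a quarter circle.
print(rotating_sources(15.0)[0])
```

With `alpha = 0` the sources complete one lap per minute; a positive `alpha` gives the steadily accelerating spin described above.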

The oscillating patch also used hoa.2d.map, with sinusoidal oscillators driving the x and y positions of the sound sources. This moved the left and right channels of stereo audio as point sources tracing Lissajous patterns around the central space.
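
A Lissajous figure results when x and y are driven by sinusoids at different frequencies. The sketch below assumes a 2:3 frequency ratio (0.05 Hz and 0.075 Hz), unit amplitude, and that the right channel's x-oscillator is offset by half a cycle so the two channels mirror each other; these values and function names are hypothetical, since the write-up does not list the patch's settings.

```python
import math


def lissajous_position(t: float, fx: float, fy: float,
                       amp_x: float = 1.0, amp_y: float = 1.0,
                       phase_x: float = 0.0):
    """Point on a Lissajous curve at time t: independent sinusoids on x and y."""
    x = amp_x * math.sin(2 * math.pi * fx * t + phase_x)
    y = amp_y * math.sin(2 * math.pi * fy * t)
    return x, y


def stereo_pair_positions(t: float, fx: float = 0.05, fy: float = 0.075):
    """Hypothetical placement of a stereo file's L and R channels.

    The right channel's x-oscillator is offset by pi, so R is always the
    left-right mirror image of L.
    """
    left = lissajous_position(t, fx, fy)
    right = lissajous_position(t, fx, fy, phase_x=math.pi)
    return left, right


for t in (0.0, 5.0, 10.0):
    print(t, stereo_pair_positions(t))
```

With the frequencies in a 2:3 ratio, the curve closes on itself every 40 s (one period of their 0.025 Hz common divisor), so the motion repeats rather than drifting.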

The Max patch can be downloaded here: https://cmu.box.com/s/kzeykyxpw39smgzolv2gdpuwt8soag5n

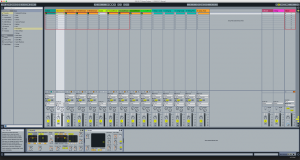

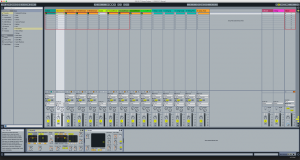

Ableton Live Samples:

A solid foundation of sounds was chosen as the base of the composition. The higher-frequency layers consisted of wind chimes with a little EQ and reverb, combined with crystal glasses recorded at different water levels. A dolly cart rolling on its wheels was low-pass filtered and loaded into the Push as a polyphonic instrument; its abrupt, uneasy sounds created a sense of tension. Lastly, a recorded segment of guitar and flute added to the tranquility of the wind chime and glass sounds and helped establish the tone and resolution of the environment.

Mixing:

A preset was made on the uTRACKX32 that set the first 24 inputs to take audio from the computer, listed on the mixer as “card”. Inputs 1-8 formed DCA group 1, carrying all of the Max outputs, and the remaining inputs formed DCA group 2, carrying Ableton. This made it easier to keep the balance and to bring multiple sources in and out simultaneously.
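
On the mixer, a DCA fader does not carry audio itself; it adjusts the levels of the channels assigned to it. The sketch below models that as a simple decibel offset added to each member channel's fader, which is a simplification, and it assumes the remaining card inputs were 9-24. The function name and the levels used are illustrative only.

```python
# Assumed routing: inputs 1-8 carry Max, inputs 9-24 carry Ableton.
DCA_GROUPS = {
    1: range(1, 9),    # DCA 1: Max outputs
    2: range(9, 25),   # DCA 2: Ableton outputs
}


def effective_levels_db(channel_faders_db: dict, dca_faders_db: dict) -> dict:
    """Effective level (dB) of each input: its own fader plus its DCA offset.

    Channels or DCAs missing from the input dicts default to 0 dB.
    """
    levels = {}
    for dca, channels in DCA_GROUPS.items():
        offset = dca_faders_db.get(dca, 0.0)
        for ch in channels:
            levels[ch] = channel_faders_db.get(ch, 0.0) + offset
    return levels


# Pull all Max sources down 6 dB with one move, leaving Ableton untouched.
levels = effective_levels_db({}, {1: -6.0, 2: 0.0})
```

One DCA move shifts every member channel together while preserving the relative balance between them, which is what made bringing whole layers in and out manageable during the performance.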

The recording can be found here: https://cmu.box.com/s/jhiodbx2os4la7ao0iacb6c00kvt1ye6

Team Functionality:

- Gladstone Butler: Live mixing
- Luigi Cannatti: Oscillating Max patch / Max MIDI mapping
- Nick Pourazima: Ableton Live samples / Push performance
- Garrett Osborne: Rotational Max patch