Technical:

We used OpenCV to process a live stream of a Go board captured with a Logitech HD webcam. The OpenCV program applied color masks for black and for white to each frame in order to isolate the black and white Go stones on the board. It then used OpenCV's blob detection to find the center of each stone. The program also detected the edges of the board to interpolate the location of each intersection. It compared the blob centers with the intersection positions to output two continuously updating binary matrices, one showing where the black stones are and one showing where the white stones are. The matrix data was then sent to Max using OSC.
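The write-up does not include the OpenCV code. The following is a minimal sketch of the step that comes after blob detection: turning blob centers into a binary matrix. It makes several assumptions. The board is 13x13. The four outer board corners have already been found by the edge detection. The camera looks roughly straight down, so plain bilinear interpolation between the corners is accurate enough. With a strongly angled camera, a perspective transform such as OpenCV's `cv2.getPerspectiveTransform` would be more accurate. The function names and the `max_dist` rejection threshold are hypothetical.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

BOARD_SIZE = 13  # assumed 13x13 board


def interpolate_intersections(corners: Sequence[Point], n: int = BOARD_SIZE) -> List[List[Point]]:
    """Bilinearly interpolate an n x n grid of intersections from the four
    outer corners, ordered top-left, top-right, bottom-right, bottom-left."""
    tl, tr, br, bl = corners
    grid = []
    for r in range(n):
        v = r / (n - 1)
        # Points on the left and right board edges for this row.
        left = (tl[0] + (bl[0] - tl[0]) * v, tl[1] + (bl[1] - tl[1]) * v)
        right = (tr[0] + (br[0] - tr[0]) * v, tr[1] + (br[1] - tr[1]) * v)
        row = []
        for c in range(n):
            u = c / (n - 1)
            # Walk from the left edge point to the right edge point.
            row.append((left[0] + (right[0] - left[0]) * u,
                        left[1] + (right[1] - left[1]) * u))
        grid.append(row)
    return grid


def blobs_to_matrix(centers: Sequence[Point], grid: List[List[Point]],
                    max_dist: float) -> List[List[int]]:
    """Snap each blob center to its nearest intersection and mark that cell 1.
    Centers farther than max_dist pixels from every intersection are ignored."""
    n = len(grid)
    matrix = [[0] * n for _ in range(n)]
    for x, y in centers:
        best = None
        best_d2 = max_dist ** 2  # only accept matches within max_dist
        for r in range(n):
            for c in range(n):
                gx, gy = grid[r][c]
                d2 = (x - gx) ** 2 + (y - gy) ** 2
                if d2 <= best_d2:
                    best, best_d2 = (r, c), d2
        if best is not None:
            matrix[best[0]][best[1]] = 1
    return matrix
```

Running `blobs_to_matrix` once on the black blob centers and once on the white ones gives the two matrices. The `max_dist` check keeps stray detections that are far from any intersection from being snapped onto the grid.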

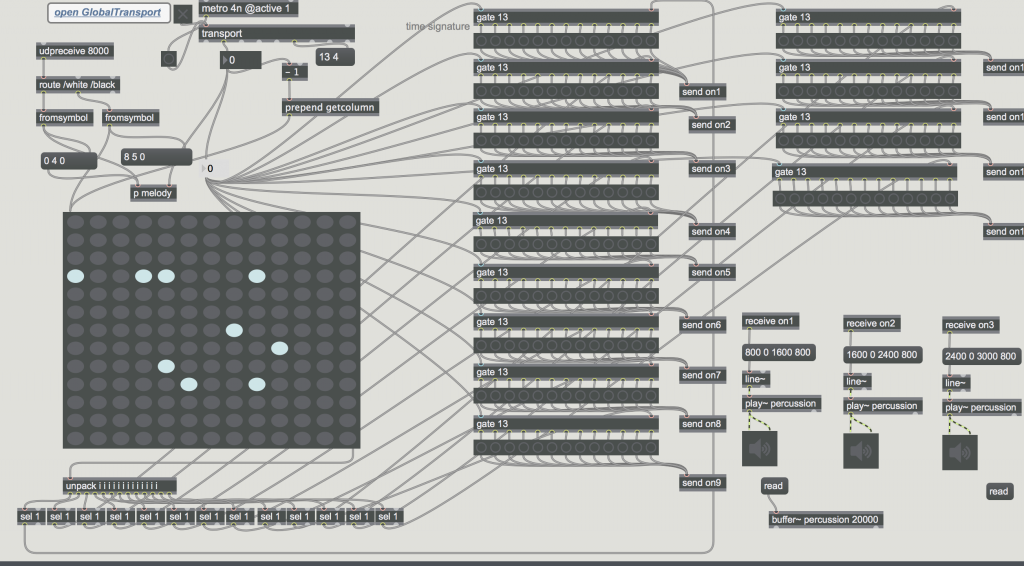
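The write-up only says that the matrices were sent to Max over OSC. The following stdlib-only sketch illustrates what such a message can look like on the wire. It packs a flattened matrix into a single OSC message of int32 arguments, following the OSC 1.0 format: the address and type-tag strings are null-terminated and padded to a multiple of 4 bytes, and the integers are big-endian. The addresses `/go/black` and `/go/white`, port 7400, and row-major flattening are hypothetical choices. In practice, a library such as python-osc would normally handle this encoding.

```python
import socket
import struct
from typing import Sequence

Matrix = Sequence[Sequence[int]]


def _osc_string(s: str) -> bytes:
    """Encode an OSC string: ASCII bytes, null-terminated, padded to a multiple of 4."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_matrix_message(address: str, matrix: Matrix) -> bytes:
    """Build one OSC message whose arguments are the row-major flattened matrix as int32s."""
    flat = [int(v) for row in matrix for v in row]
    type_tags = "," + "i" * len(flat)
    return (_osc_string(address) + _osc_string(type_tags)
            + struct.pack(">%di" % len(flat), *flat))


def send_matrices(black: Matrix, white: Matrix,
                  host: str = "127.0.0.1", port: int = 7400) -> None:
    """Send both matrices as two UDP datagrams (hypothetical addresses and port)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(encode_matrix_message("/go/black", black), (host, port))
        sock.sendto(encode_matrix_message("/go/white", white), (host, port))
```

On the Max side, a `udpreceive` object listening on the same port would receive each message as an address followed by 169 integers. The messages could then be routed by address.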

Within the Max patch, the matrix of black stones controlled a step sequencer of percussion sounds. The matrix of white stones was split in two: the left half controlled 7 drone sounds and the right half controlled 6 melody lines. Depending on where the white stones were placed, the drones sounded at different points in the measure and particular notes of the melodies played.
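The Max patch itself is not reproduced here, so the following is only a hypothetical Python model of the mapping described above. It assumes that the board's 13 columns are the 13 steps of the measure and that each row is a separate voice. It reads "left half" and "right half" on a 13-wide board as columns 0 to 6 and columns 7 to 12. The names `percussion_hits`, `split_white`, `DRONE_COLS` and `MELODY_COLS` are illustrative, not taken from the patch.

```python
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[int]]

# Hypothetical layout: columns = sequencer steps, rows = voices.
DRONE_COLS = range(0, 7)     # assumed: left 7 columns feed the 7 drones
MELODY_COLS = range(7, 13)   # assumed: right 6 columns feed the 6 melody lines


def percussion_hits(black: Matrix, step: int) -> List[int]:
    """Return the rows (percussion voices) with a black stone in the column
    played at this step. The step index wraps around the board width."""
    col = step % len(black[0])
    return [r for r, row in enumerate(black) if row[col]]


def split_white(white: Matrix) -> Tuple[List[List[int]], List[List[int]]]:
    """Split the white matrix column-wise into a 7-column drone half and a
    6-column melody half."""
    drones = [[row[c] for c in DRONE_COLS] for row in white]
    melodies = [[row[c] for c in MELODY_COLS] for row in white]
    return drones, melodies
```

A sequencer clock would call `percussion_hits` once per step, from 0 to 12, and trigger the returned voices. Wrapping the step index lets the same call keep working as the measure repeats.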

Compositional:

We used Logic Pro X to create all the sounds heard on the board. The percussive sounds came from a range of Logic's built-in drum patches, from kick drums to frog noises. The melodic material was based on an A major suspended drone (ADEFA#C#E), synth bass sounds, and LFO sound effects. Because the drones were looped, they clipped at the end of each 4-bar loop. To avoid this, we built swells into the drones so that they faded out before the loop point. Each melody started a fixed loop as soon as a stone was placed and faded out naturally. The percussion followed a 3+3+2+2+3 rhythm that spans the 13 spaces across the board.
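The 3+3+2+2+3 grouping works as follows: the five groups sum to 13, so one cycle covers the 13 steps across the board, and the group boundaries fall on steps 0, 3, 6, 8 and 10. The sketch below derives those positions. Treating each group start as an accent is an assumption about how the grouping was used; the description above does not specify it.

```python
from itertools import accumulate
from typing import List, Sequence

GROUPING = (3, 3, 2, 2, 3)  # the grouping named above; sums to 13


def group_starts(grouping: Sequence[int] = GROUPING) -> List[int]:
    """Step indices where each group begins (assumed accent positions)."""
    return [0] + list(accumulate(grouping))[:-1]


def accent_pattern(grouping: Sequence[int] = GROUPING) -> str:
    """Render one cycle with 'X' on group starts and '.' on the other steps."""
    starts = set(group_starts(grouping))
    return "".join("X" if i in starts else "." for i in range(sum(grouping)))
```

With the default grouping, `accent_pattern()` returns `"X..X..X.X.X.."`, which is one character per board column.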

Video:

Patch:

https://drive.google.com/open?id=0B3dc0Zpl8OsBNTAwZEJnczF3Q1k