The ambisonic Squad Five project focuses on letting users control the sounds they experience. We accomplished this by linking TouchOSC to a Max patch that plays sounds recorded by each group member. An iPhone running TouchOSC was passed around the class, allowing other students to control the location of the pre-recorded sounds. Each group member created sounds using a technique that interested them. We agreed on a shared structure for everyone to follow: tempo, key, and meter.

Abby’s section, violin loops:

For the violin section of the controller, I created a set of 7 loops. Using Logic and a Studio Projects microphone, I set the tempo to 120 bpm and played a repeating G octave rhythm. I then recorded melodic lines, percussive lines, and different chord progressions over an 8-bar period. I originally recorded 14 separate tracks, but we were only able to fit 7 on a page. I also recorded odd violin noises that I meant to edit together into a melodic line, but that proved tricky because of all the other variables in our other loops. If we were to continue this project, I would reconsider the length of the loops, because they get repetitive when played too many times. While the diversity of available loops helps, rules could be added so that once a certain number of loops are triggered you can’t add more, or so that triggering certain loops prevents you from triggering others. I also think that designing loops around basic shared parameters makes working on each of our sections easier, but limits how well the piece comes together as a whole.
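The triggering rules suggested above were not part of the project, but they could be sketched roughly as follows. This is a minimal illustration only: the function name `can_trigger`, the loop names, the `max_active` limit, and the `exclusive` mapping are all hypothetical.

```python
from typing import Dict, Optional, Set


def can_trigger(loop: str,
                active: Set[str],
                max_active: int = 4,
                exclusive: Optional[Dict[str, Set[str]]] = None) -> bool:
    """Decide whether a loop may be switched on (hypothetical rule set).

    active     -- names of the loops that are currently playing
    max_active -- assumed cap on simultaneous loops
    exclusive  -- assumed mapping: loop name -> loops it cannot play alongside
    """
    exclusive = exclusive or {}
    if loop in active:
        return True  # already playing, nothing to decide
    if len(active) >= max_active:
        return False  # the cap on simultaneous loops has been reached
    # Refuse if any loop that conflicts with this one is already playing.
    return not (exclusive.get(loop, set()) & active)
```

For example, `can_trigger("melody_1", {"melody_2"}, exclusive={"melody_1": {"melody_2"}})` would refuse the new loop because a conflicting one is already active.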

Ty’s section, percussion:

For the percussion page of the TouchOSC controller, I created a Max patch that reads two Photoshop files and uses the images to sequence sounds. The first file is an RGB image, and each color value determines the volume level of a pre-recorded percussive sound. The second file is a black-and-white bitmap; this image triggers the sounds to play on black pixels. Originally I used a drum synth rather than pre-recorded files, but I found it difficult to control the synths with RGB values because of my limited synth knowledge. As a next step in this project, I would use my TouchOSC page to let users build their own sequencer. I would also change the sounds I recorded. It would be interesting to find four sounds that fit well with the other two musicians’ pages, and let the user control the sequence and ambisonic location of those sounds.
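The image-driven sequencing described above lives in a Max patch, but the idea can be sketched in Python. This is a rough illustration under several assumptions that the write-up does not confirm: each image column is treated as one step, the red, green, and blue channels are mapped to the volumes of three sounds, and the bitmap has one row per sound. The function name `sequence_step` is hypothetical.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]  # (red, green, blue), each 0-255


def sequence_step(rgb_row: List[Pixel],
                  mask: List[List[int]],
                  step: int) -> List[Tuple[int, float]]:
    """Return (sound_index, volume) pairs that fire on the given step.

    rgb_row -- one row of the RGB image; assumed layout: one pixel per step
    mask    -- the black-and-white bitmap; assumed layout: one row per sound,
               one column per step, 0 = black, 255 = white
    """
    r, g, b = rgb_row[step % len(rgb_row)]
    # Assumption: each color channel sets the volume of one sound (0.0-1.0).
    volumes = [r / 255.0, g / 255.0, b / 255.0]
    hits = []
    for sound, row in enumerate(mask):
        if row[step % len(row)] == 0:  # a black pixel triggers the sound
            hits.append((sound, volumes[sound]))
    return hits
```

Stepping through the columns at the shared 120 bpm tempo would then yield, for each step, which sounds to play and how loud.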

image2beatSequencer:

For the section by Steven:

I made seven more recordings, following Abby’s lead. We wanted our recordings to be in harmony with each other, so we all stayed in G minor. We also wanted to stay in time, so we all recorded at 120 BPM. For my recordings, I played my electric guitar through my AC30 guitar amp. The electric guitar recordings were chords: the first was just Gm repeated, and the second was Gm and Dm repeated. I also recorded a Gm arpeggio on the electric guitar, and one recording on acoustic guitar. The other three recordings were made with the Korg Monologue synthesizer. The first was a Gm arpeggio, the second was an analog drum patch I made, and the last was a Gm bass patch.

On the Max side, I used the HOA library and did all of my panning in polar form. Working in polar form made everything straightforward and easy to visualize. From the UDPreceive object, I routed the magnitude and phase information into the HOA map message. The magnitude was simple: a float from 0 to 1 that maps directly to the HOA map message. The phase, however, arrives as a float from 0 to 1 and had to be multiplied by 2π, giving the HOA map a range of 0 to about 6.28 radians. I had to repeat these steps for all 21 recordings, so I created an encapsulation called “pie_n_pak” that does the magnitude and phase calculation and packs all of the proper outputs together.

All of the recordings are triggered from one toggle box. The UDPreceive object also gets the state of each recording from TouchOSC. The state comes from a toggle box in TouchOSC: it sends a 1 if it is active and a 0 if it is not. The fader encapsulation sub-patch fades the audio track in or out depending on that toggle state. It is important to note that all of the audio files are always playing in the background, and are just faded in and out depending on the current state sent from TouchOSC.
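The scaling and fading described above happen inside the Max patch, but the arithmetic can be sketched in Python. This is only an approximation of the idea: `pie_n_pak` here borrows the sub-patch's name for illustration, the returned tuple is a hypothetical stand-in for whatever the HOA map message expects, and the linear ramp in `fade_step` is an assumption about how the fader sub-patch behaves.

```python
import math
from typing import Tuple


def pie_n_pak(source_index: int,
              magnitude: float,
              phase: float) -> Tuple[int, float, float]:
    """Convert normalized TouchOSC values (0.0-1.0) to polar coordinates.

    The magnitude passes through unchanged; the phase is scaled by 2*pi,
    so it spans 0 to about 6.28 radians.
    """
    radius = magnitude
    angle = phase * 2.0 * math.pi
    return (source_index, radius, angle)


def fade_step(gain: float, toggle: int, step: float = 0.1) -> float:
    """Move the gain one increment toward the toggle state (1 = in, 0 = out).

    Assumption: a linear ramp. The audio keeps playing the whole time and
    only its gain changes.
    """
    target = 1.0 if toggle else 0.0
    if gain < target:
        return min(gain + step, target)
    return max(gain - step, target)
```

Calling `pie_n_pak` once per recording mirrors the reason for the encapsulation: the same two calculations are repeated for all 21 sources.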

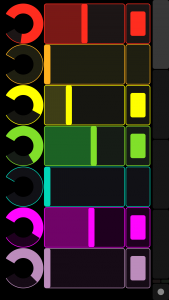

In TouchOSC, I made three pages, one for each group member’s recordings. The gray bar at the top switches between our pages. The toggle buttons at the top fade each recording in or out. The long fader sets the magnitude of the panning in the HOA panner. The encoder underneath sends the phase information to the HOA panner.

Here is a link to the code:

Here is a link to all the supporting audio files:

https://drive.google.com/open?id=0B6W0i2iSS2nVZ1RobkRQUzA0RlU