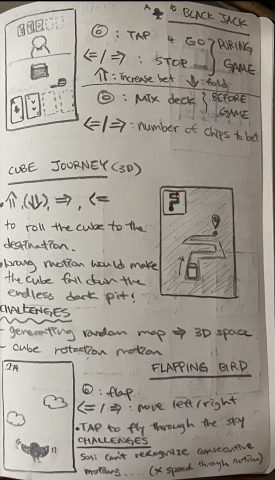

I wanted more options and to hear others’ opinions on them, so I came up with more than three ideas. I’m not sure which idea is the most interesting or the most feasible to implement within a week, so I’ll include sketches of all of them, but I’ll only explain the three I find most suitable and interesting for this project.

1. Virtual Bookshelf

Idea:

I often find myself not reading enough books. Libraries are either closed or operating with limited hours due to COVID-19, which makes reading even harder for everyone. To be honest, reading a book is totally achievable without libraries being open, but our options are relatively limited. I wanted to recreate the experience of having a range of options to choose from when picking a book or searching for one on a certain topic.

How it works:

I’m thinking of using “swipe” to move through the options on the bookshelf and “tap” to select a book.

Each book’s title will be a broad category, and its content will be a random piece of information on that topic. For example, “recipes” would give a recipe for a random dish, and “motivation” would give a random motivational quote. If the first result doesn’t satisfy you, you can “swipe” to generate another one.

Hopefully I can connect to some sort of dataset or API (if one exists) that I can draw a random piece of information from.
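The interaction described above could be modeled roughly like this. It is only a sketch under assumptions: the gesture names `"swipe"` and `"tap"` are placeholders rather than real Soli event names, the `SHELF` entries are made-up stand-ins for whatever dataset or API ends up being used, and closing a book with a second tap is a guess, since the idea doesn’t say how to go back to the shelf.

```typescript
// Hypothetical gesture names; the real sensor events may be named differently.
type ShelfGesture = "swipe" | "tap";

// Placeholder content standing in for a real dataset or API.
const SHELF: Record<string, string[]> = {
  recipes: ["Tomato pasta", "Vegetable curry", "Pancakes"],
  motivation: ["Keep going.", "Start small.", "One page at a time."],
};

interface ShelfState {
  titles: string[];        // book titles, i.e. the broad categories
  highlighted: number;     // index of the book currently in focus
  openBook: string | null; // title of the opened book, or null while browsing
  content: string | null;  // the random entry currently shown
}

function createShelf(): ShelfState {
  return { titles: Object.keys(SHELF), highlighted: 0, openBook: null, content: null };
}

// Picks a random entry for a title. `random` is injectable so the choice can be tested.
function pickEntry(title: string, random: () => number = Math.random): string {
  const entries = SHELF[title] ?? [];
  return entries[Math.floor(random() * entries.length)] ?? "";
}

function handleShelfGesture(
  state: ShelfState,
  gesture: ShelfGesture,
  random: () => number = Math.random,
): ShelfState {
  if (state.openBook === null) {
    if (gesture === "swipe") {
      // Browsing: move focus to the next book, wrapping around at the end.
      return { ...state, highlighted: (state.highlighted + 1) % state.titles.length };
    }
    // Tap while browsing: open the highlighted book and show a first random entry.
    const title = state.titles[state.highlighted] ?? "";
    return { ...state, openBook: title, content: pickEntry(title, random) };
  }
  if (gesture === "swipe") {
    // Reading: draw another entry from the same category (it may repeat by chance).
    return { ...state, content: pickEntry(state.openBook, random) };
  }
  // Tap while reading: close the book (an assumption, not part of the original idea).
  return { ...state, openBook: null, content: null };
}
```

Keeping the state as a plain object means the drawing code only has to read `highlighted`, `openBook`, and `content`, and swapping the placeholder `SHELF` for a real data source would only touch `pickEntry`.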

Potential Challenges:

- COLLECTING DATA: I’m currently unaware of any large dataset or API that provides random facts, information, or quotes for a given category.

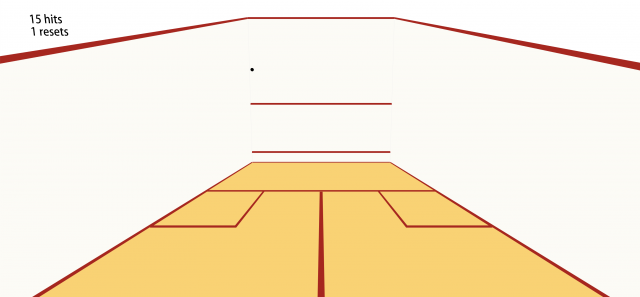

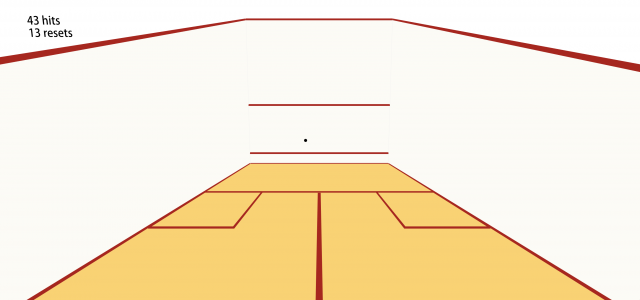

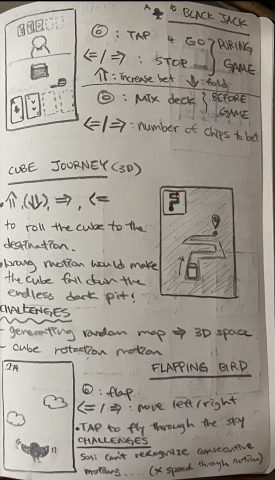

2. Flapping Bird (bottom sketch)

Idea:

I haven’t been exercising these days, and I’ve been losing a lot of muscle. A lot of my friends also relate to the lack of movement due to COVID-19 and social distancing, so I wondered if I could make something that at least works out my arms. That led to this idea, where the player has to move their arm to “tap” and make the bird fly through the sky.

How it works:

“Tap” to make the bird flap. The bird might fall (i.e. game over) if you don’t “tap” often enough, but you don’t have to “tap” all the time, because birds often ride air currents instead of flapping vigorously.
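One way to picture the flap-and-glide behavior is a tiny per-frame update. This is a rough sketch, not a finished game: all the constants (`GRAVITY`, `FLAP_VELOCITY`, `MAX_FALL_SPEED`, `CEILING`) are made-up values, and capping the fall speed is just one possible stand-in for “riding air currents” so that the bird sinks slowly between taps.

```typescript
interface Bird {
  y: number;      // height above the ground
  vy: number;     // vertical velocity (positive = upward)
  alive: boolean; // false once the bird has hit the ground
}

// Assumed tuning values; they would need adjusting by feel.
const GRAVITY = -0.5;       // velocity change per frame
const FLAP_VELOCITY = 6;    // upward velocity given by one tap
const MAX_FALL_SPEED = -2;  // cap on fall speed, so the bird glides rather than drops
const CEILING = 100;        // top of the play area

// Called when a tap is detected: gives the bird an upward kick.
function flap(bird: Bird): Bird {
  return bird.alive ? { ...bird, vy: FLAP_VELOCITY } : bird;
}

// Called once per frame: applies gravity, limits the fall speed, checks for game over.
function stepBird(bird: Bird): Bird {
  if (!bird.alive) return bird;
  const vy = Math.max(bird.vy + GRAVITY, MAX_FALL_SPEED);
  const y = Math.min(bird.y + vy, CEILING);
  return y <= 0 ? { y: 0, vy: 0, alive: false } : { y, vy, alive: true };
}
```

With these numbers, a bird starting at height 50 that is never tapped reaches the ground within a few dozen frames, while a tap every ten frames or so is enough to keep it in the air. A gentler `MAX_FALL_SPEED` would make the game more forgiving of missed taps.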

Potential Add-on:

“Swipe” to move the bird left/right on the screen?

Potential Challenges:

I realized Soli doesn’t recognize consecutive motions as well as a single independent motion. It also often recognizes one motion as another (e.g. “tap” as “swipe” or vice versa), so the game could end even though the user has been moving their arm a lot to get the tapping motion across…

On the bright side, it’d lead to more arm exercise? Still, I get annoyed when the sensor keeps reading my intended motion as a different one, so it might annoy others too.

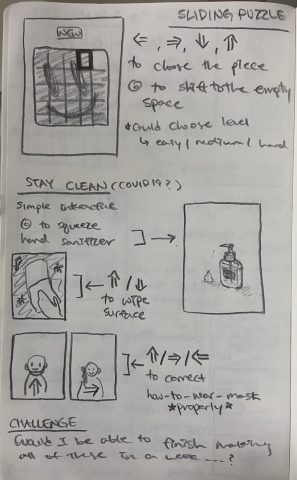

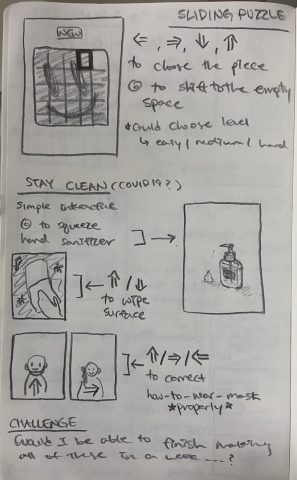

3. Stay Clean (COVID-19 edition?)

Idea:

Due to COVID-19, many people, including myself, have begun using disinfecting products much more often. I came up with this little app that lets you swipe between three interactive pieces related to our daily lives with COVID-19.

How it works:

Swipe left or right to move to the previous/next interactive piece:

a. hand sanitizer

“Tap” or “Swipe down” to push down the hand sanitizer bottle and make a little pile of hand sanitizer next to the bottle.

b. wiping surfaces

“Swipe up” & “Swipe down” to wipe out the virus.

c. wear mask properly

“Tap” to begin/end the game, and “swipe up/left/right” as needed to make the person on the screen wear their mask correctly. I got the idea from all the people who don’t wear their masks properly in public spaces. (A mask is not a chin guard...)
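The gesture mapping for the three pieces could be sketched as a single routing function. Everything named here is hypothetical: the gesture strings are placeholders rather than real Soli event names, “swipe left = next piece” and wrapping around at the ends are guesses, and sending left/right swipes to the mask game while it is running (between the two taps) is one assumed way to let piece c use those swipes without also switching pieces.

```typescript
// Hypothetical gesture names; the real sensor events may be named differently.
type CleanGesture = "swipeLeft" | "swipeRight" | "swipeUp" | "swipeDown" | "tap";

const PIECES = ["handSanitizer", "wipeSurfaces", "wearMask"] as const;
type Piece = (typeof PIECES)[number];

interface CleanState {
  index: number;           // which piece is on screen
  maskGameActive: boolean; // true between the "begin" and "end" taps of piece c
  lastAction: string;      // what the last gesture did, for the drawing code to react to
}

function handleCleanGesture(state: CleanState, g: CleanGesture): CleanState {
  const piece: Piece = PIECES[state.index] ?? PIECES[0];

  if (piece === "wearMask") {
    if (g === "tap") {
      // Tap begins or ends the mask game.
      const started = !state.maskGameActive;
      return { ...state, maskGameActive: started, lastAction: started ? "mask game started" : "mask game ended" };
    }
    if (state.maskGameActive) {
      // While playing, swipes up/left/right adjust the mask instead of navigating.
      return { ...state, lastAction: g === "swipeDown" ? "ignored" : `adjust mask: ${g}` };
    }
  }

  if (g === "swipeLeft" || g === "swipeRight") {
    // Navigation between pieces (assumed direction, assumed wrap-around).
    const delta = g === "swipeLeft" ? 1 : -1;
    const index = (state.index + delta + PIECES.length) % PIECES.length;
    return { index, maskGameActive: false, lastAction: `show ${PIECES[index]}` };
  }

  if (piece === "handSanitizer" && (g === "tap" || g === "swipeDown")) {
    return { ...state, lastAction: "pump sanitizer" };
  }
  if (piece === "wipeSurfaces" && (g === "swipeUp" || g === "swipeDown")) {
    return { ...state, lastAction: "wipe surface" };
  }
  return { ...state, lastAction: "ignored" };
}
```

Routing every gesture through one function keeps the question of who gets a left/right swipe in a single place, which is where any rule for handling misread gestures would also live.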

Potential Challenges:

- Would I be able to implement all three within a week? I’d be drawing all the visuals in Illustrator on top of coding the interactive parts. How realistic is it to get all of this done in a week while also preparing for midterms?

- Same as in the second idea: Soli often detects one motion as another. What if the user is having fun wiping surfaces, for example, and is close to being done, but then Soli reads their motion as a left/right swipe and switches to the next piece?