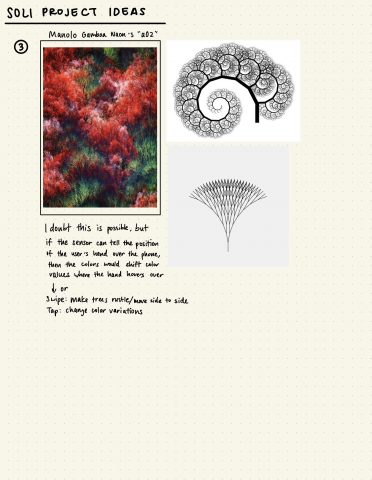

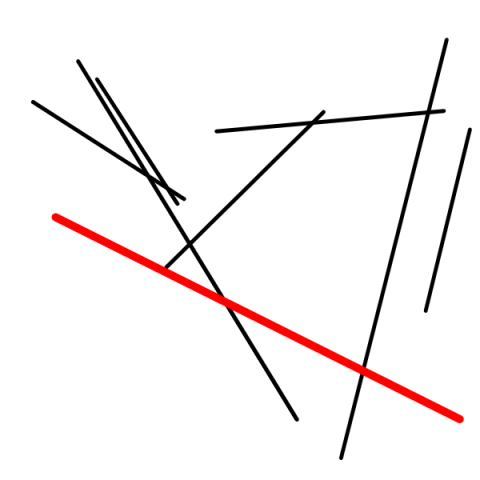

I was super impressed with the resources ArtBreeder offers and how much you can customize your creations, especially in the Portraits and Landscapes categories I experimented with. I preferred those specific categories to the General category because of how finely you can adjust each "factor" under the Genes column. What stood out most was how many details of the final image you can change, and how realistic the altered image still looks compared to its parent images.

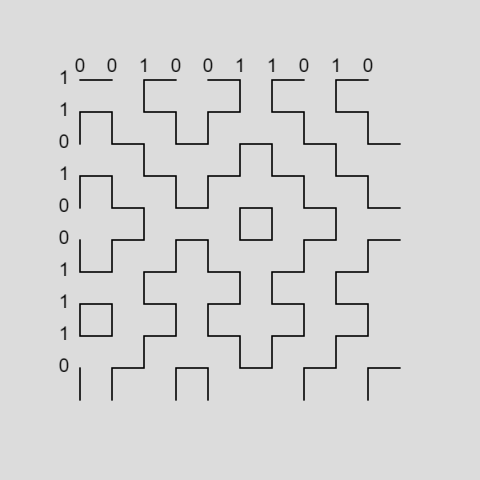

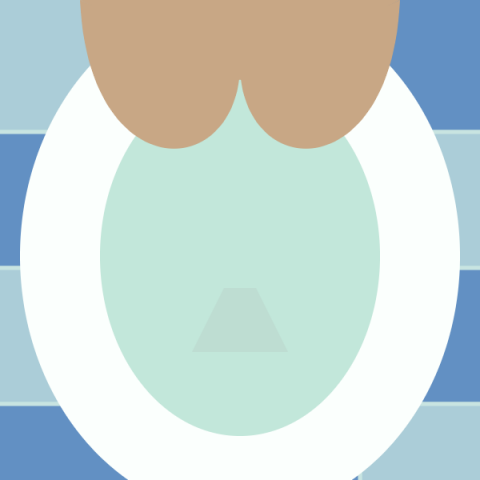

General category – parent: flowers, genes: underwater creatures and chaos

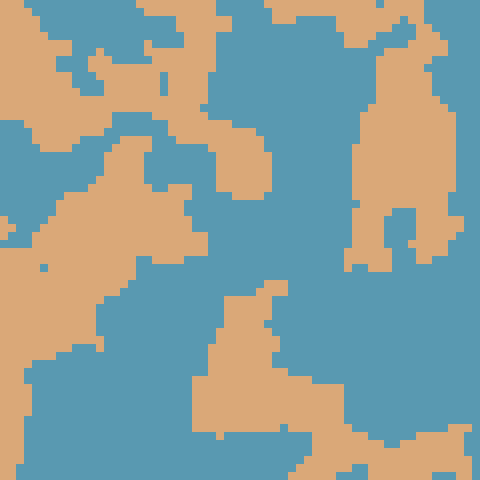

General category – parent: bubble, genes: nematode, bubble, and chaos

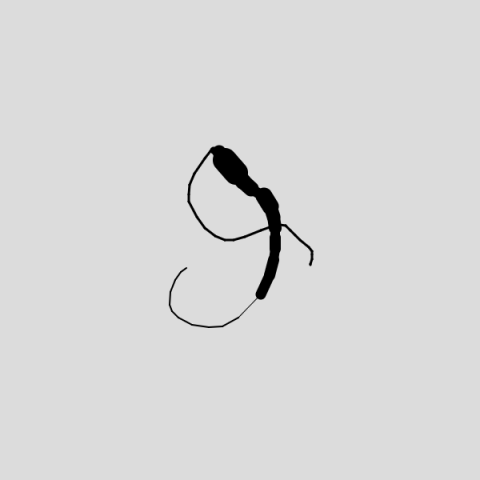

Landscape category

Portrait category – parents: 2 random male portraits and 2 random female portraits; experimented with the art style and chaos genes