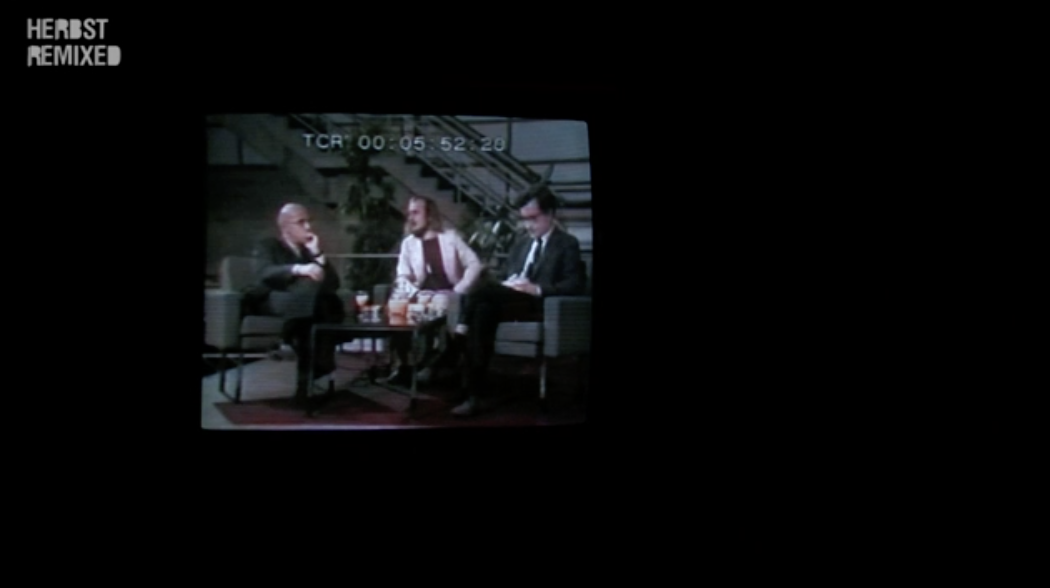

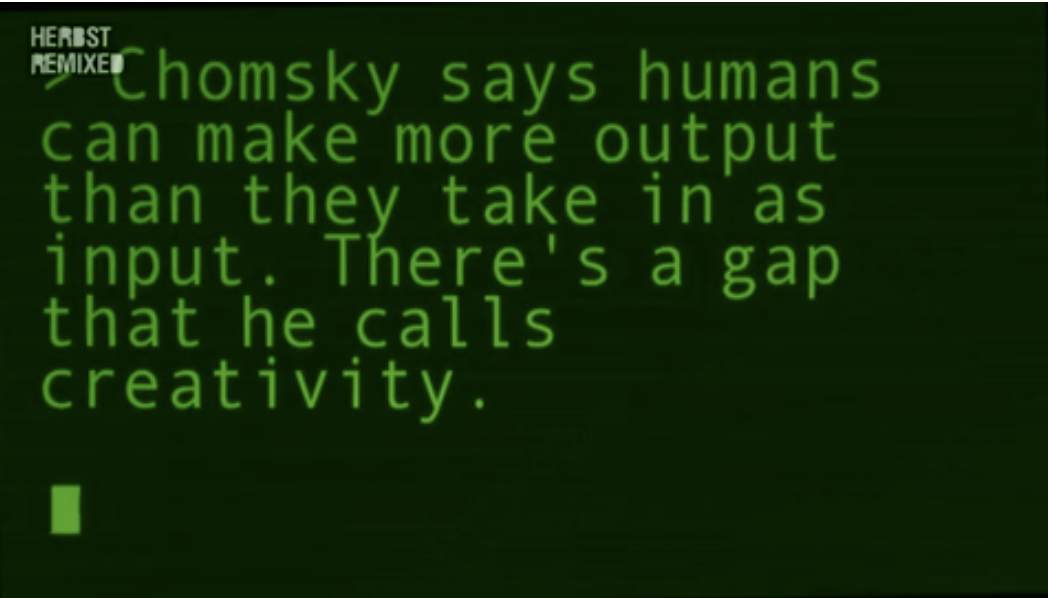

Hello Hi There by Annie Dorsen is a performance in which a famous televised debate from the 1970s between the philosopher Michel Foucault and the linguist and activist Noam Chomsky, along with additional text from YouTube, the Bible, Shakespeare, the big hits of Western philosophy, and many other sources, serves as material for a dialogue between two custom-designed chatbots. Annie designed these bots to imitate human conversation and language production, while acknowledging the optimism that natural language programming once seemed to offer for understanding the process of human language.

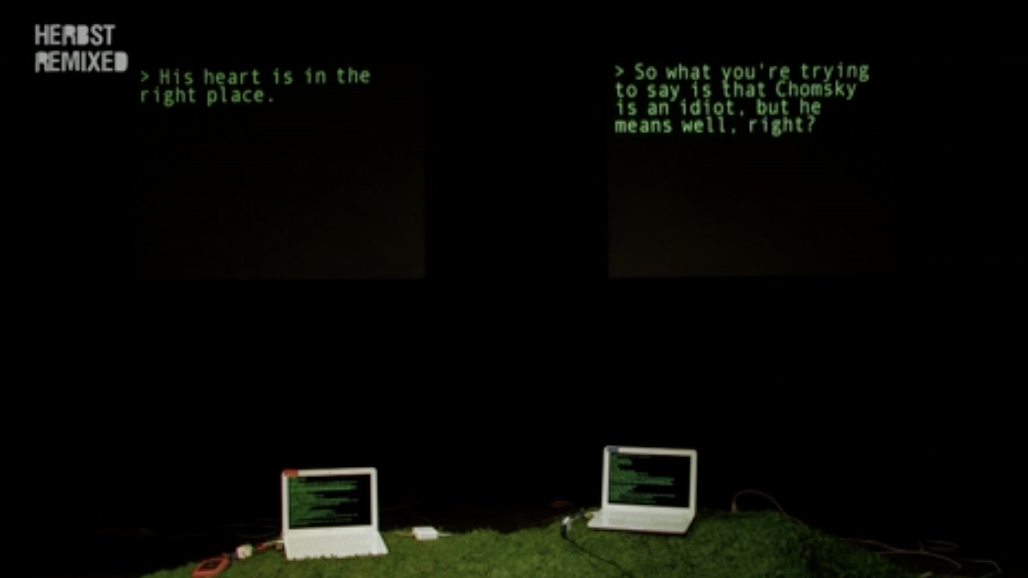

I really enjoyed how this study of human language production quickly turned into a theatrical performance between two pieces of technology (in this case, two laptops). It was striking that, with all the information these two laptops were given (they seemed like two brains communicating), even a conversation between bots could digress into topics completely unrelated to where it began, just as a human conversation can, only more humorously and incoherently.