Houdini Explorations in 3D Character Pipeline

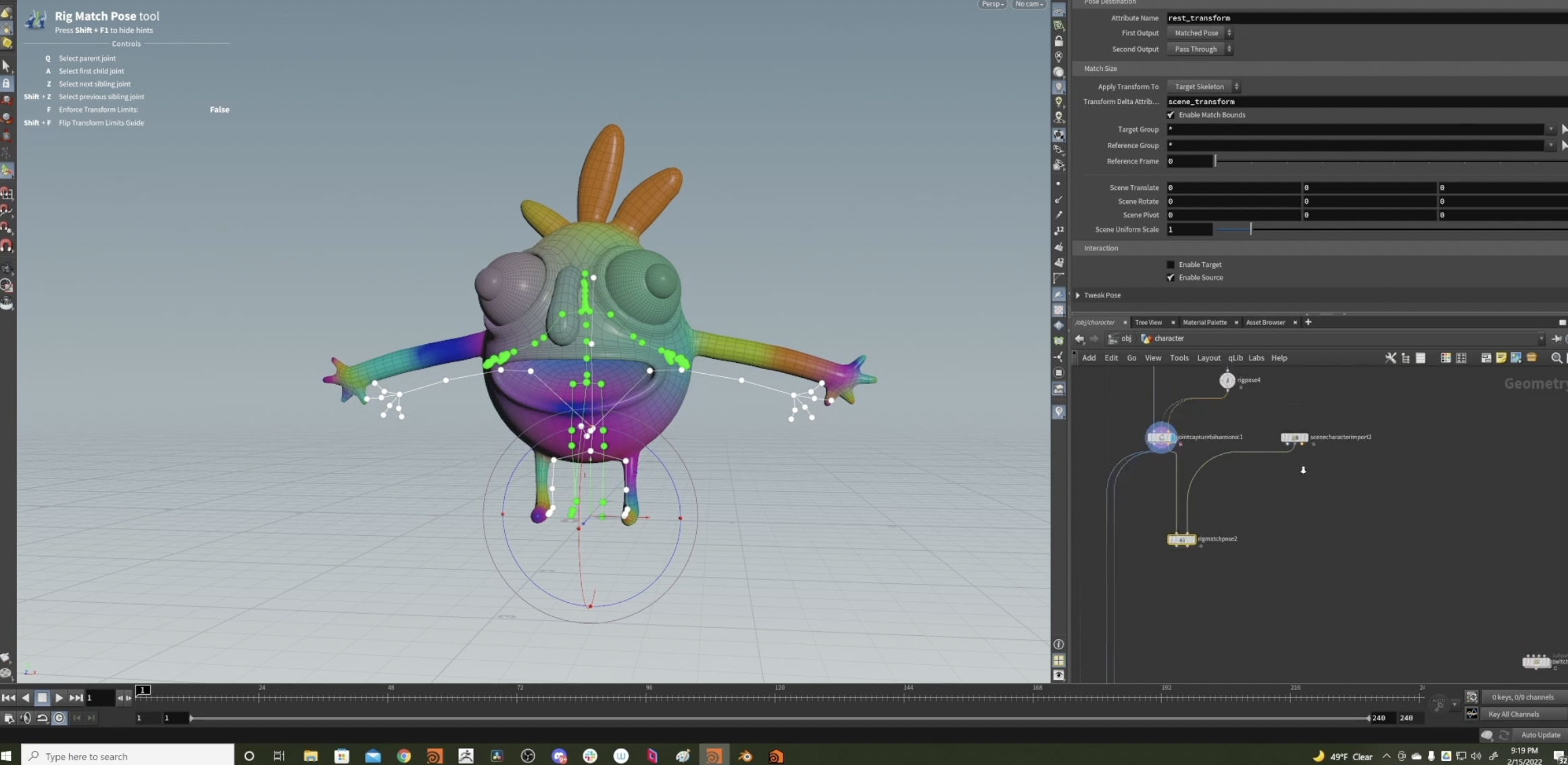

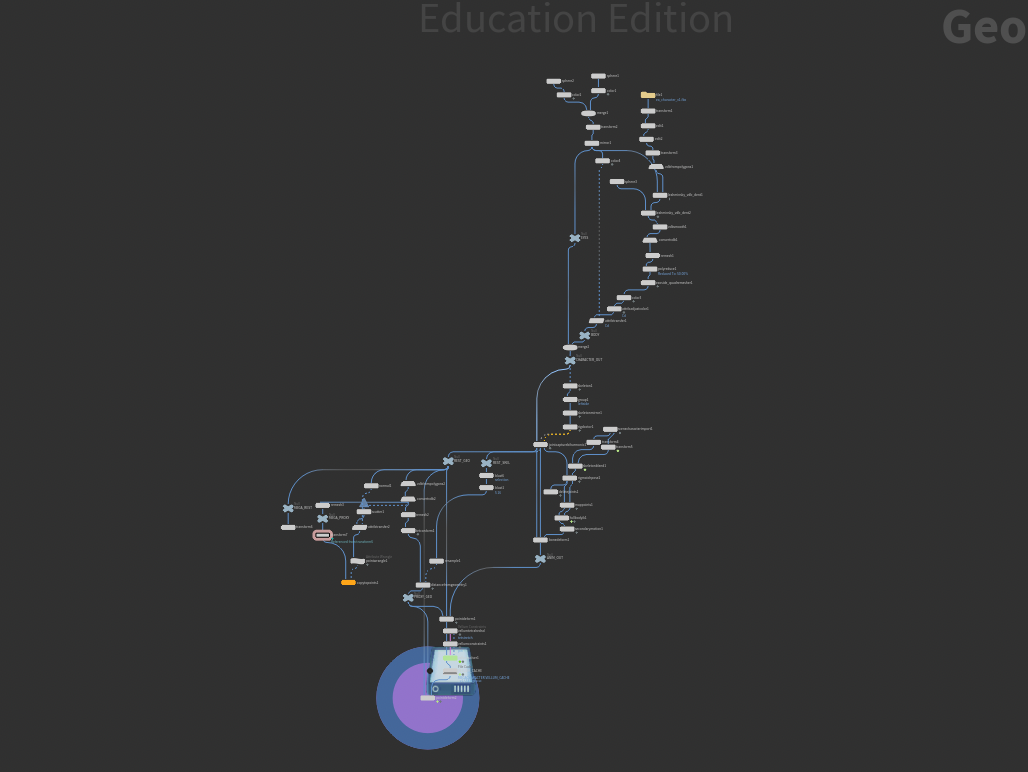

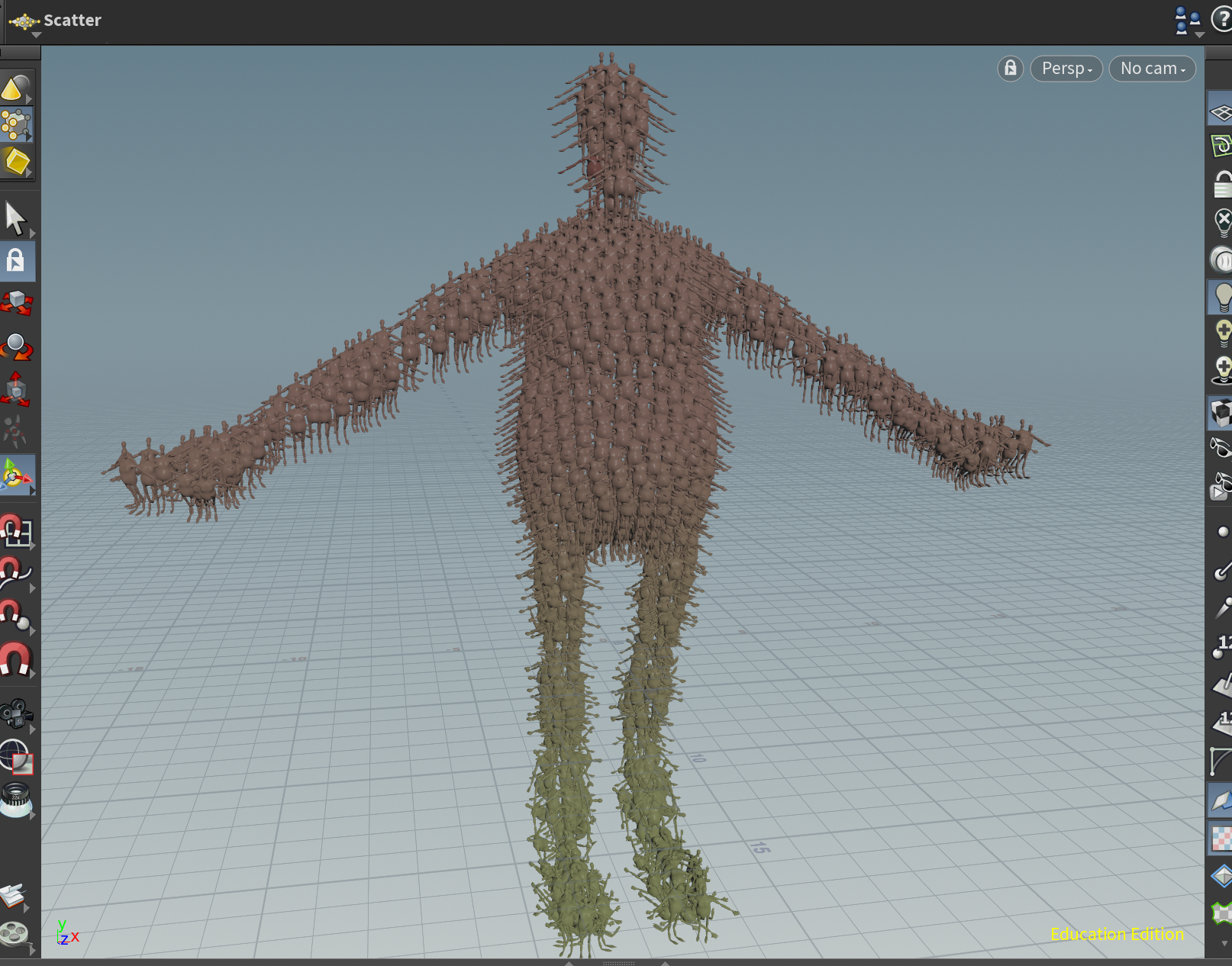

I have been teaching myself Houdini and using it as my primary 3D tool for the past 4-ish months, and I have learned so, so many things. One area I wanted to dive deeper into was Houdini’s newer capability to handle the entire character pipeline. With the update to its rigging toolset, you can do pretty much everything without leaving the software. So I went through the whole character development process, with every step being entirely procedural! Very exciting, very cool. This was just a first stab at the whole pipeline within Houdini, so I’m excited to iterate in the future and learn from the mistakes I made along the way.

Process:

Modeling

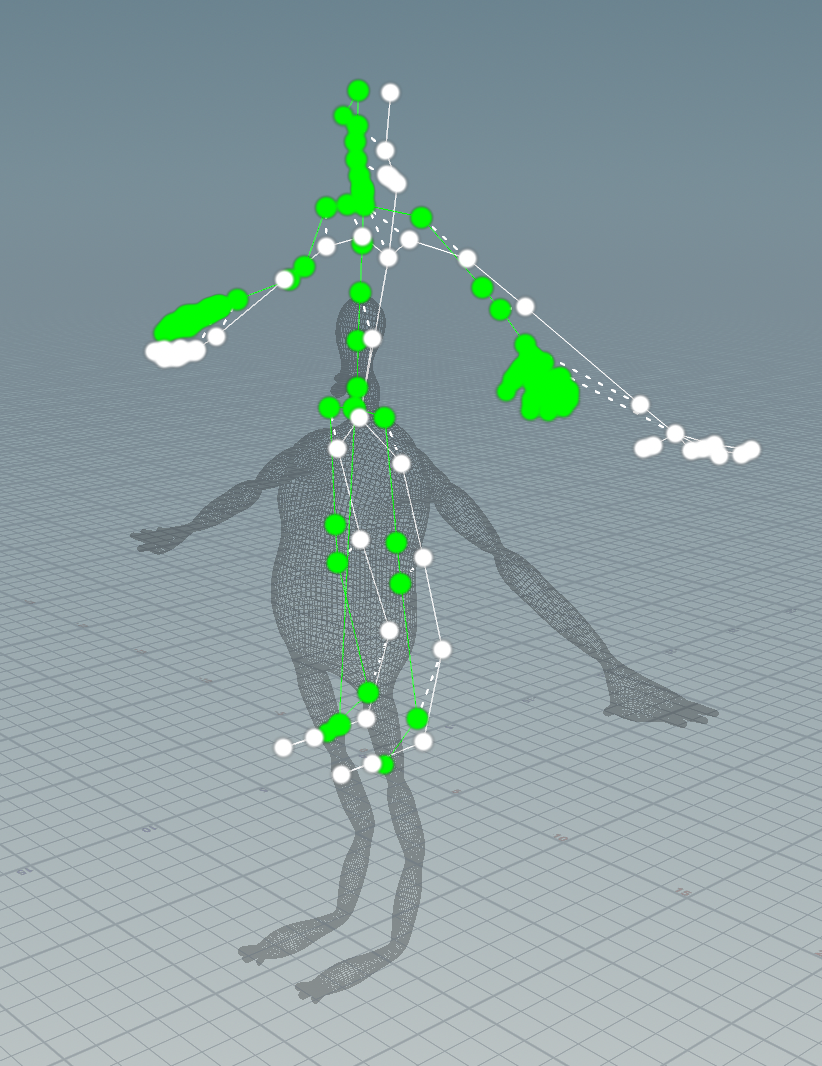

Rigging

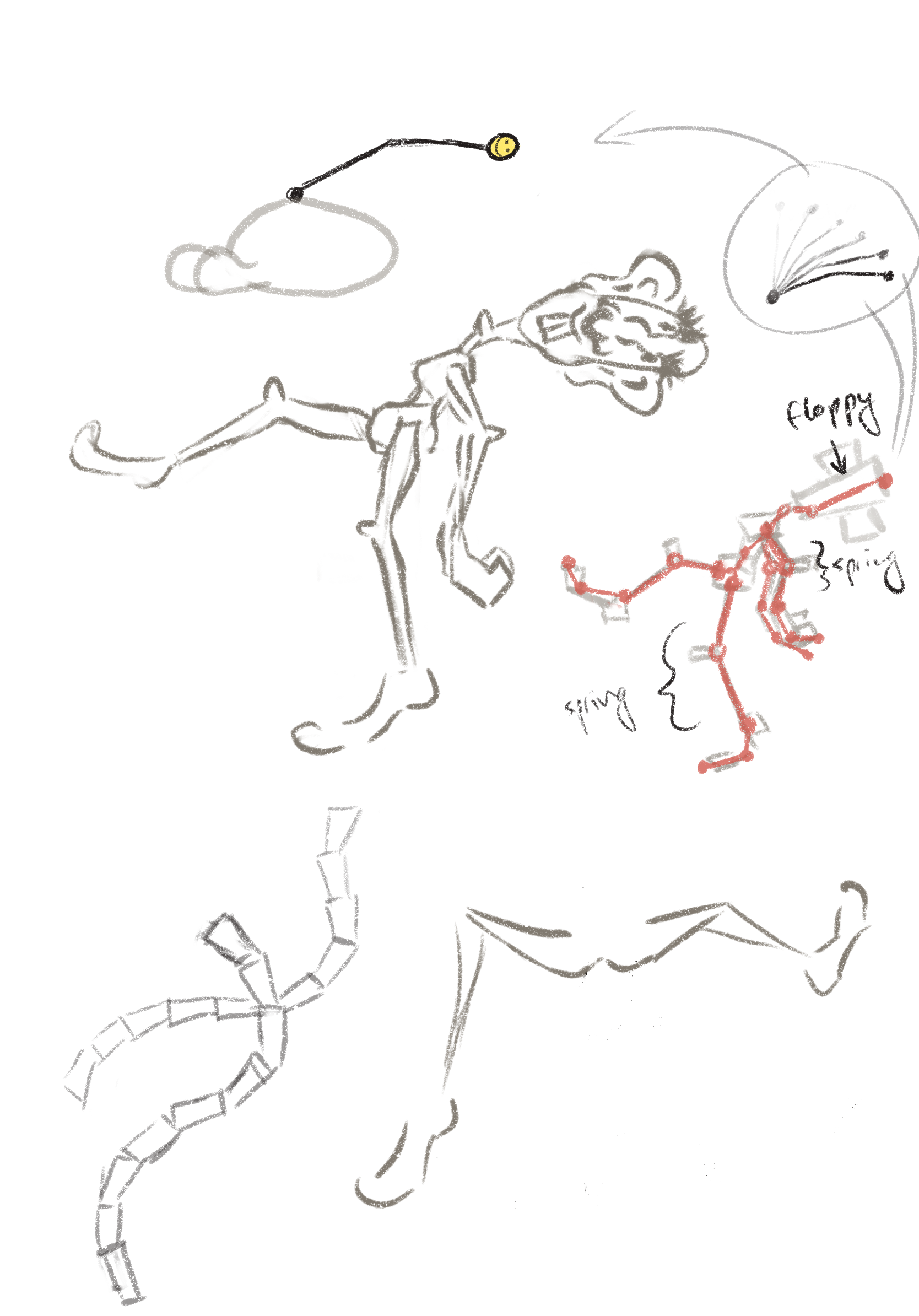

Animation

Simulation

- Floppy, soft-body character.

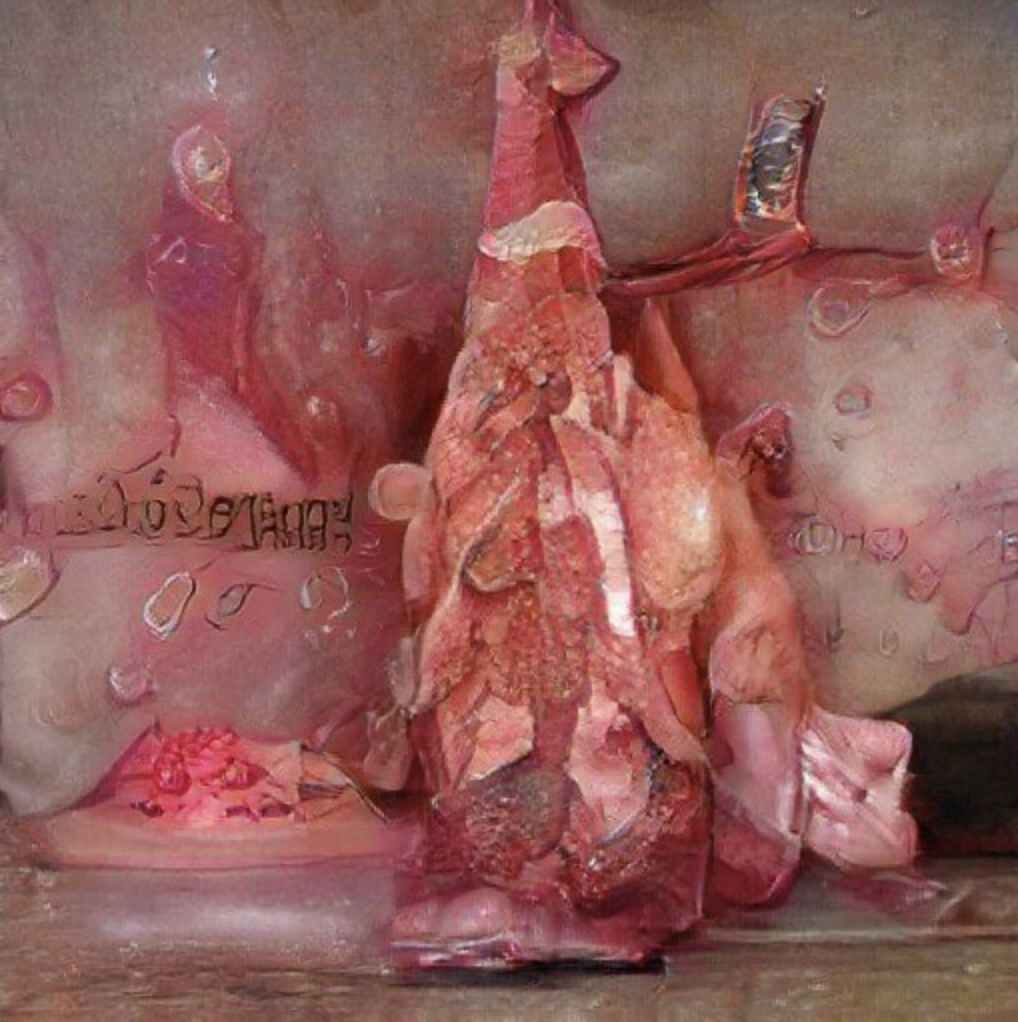

Other Computational Stuff

Rendering

- I have not gotten to this one yet, since it will take quite a while. I will turn in the final render as my final documentation.

Plus plenty of stuff in between