For my final project, I created a face filter titled “EXIT.”

Author: kong

kong-FinalPhase3

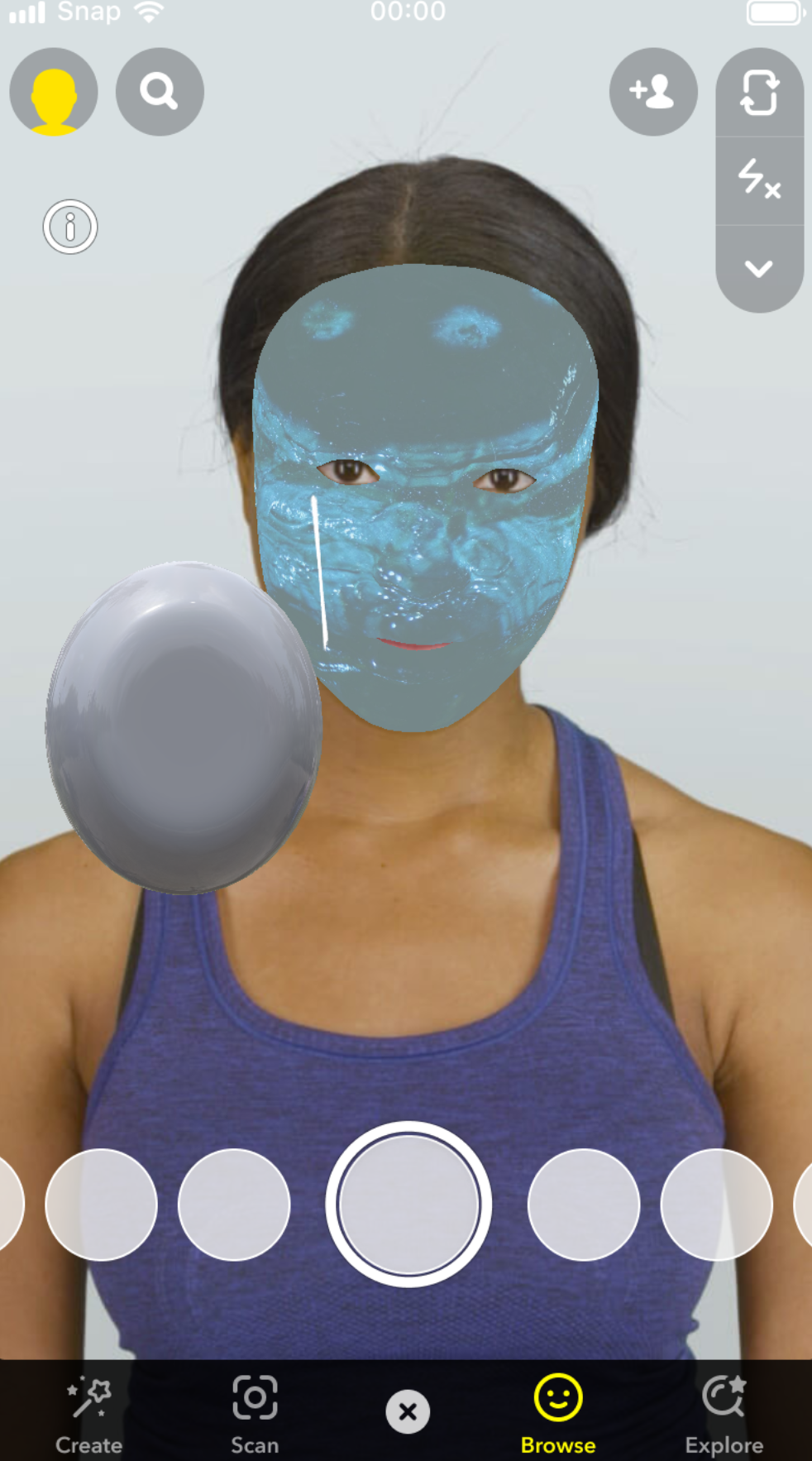

As mentioned in my FinalPhase2 post, I’ve been watching Snap Lens Studio tutorials on YouTube to achieve the effects I want. I have attached screenshots of two trials I experimented with. First, inspired by the textures I saw in Aaron Jablonski’s work, I played around with building different textures, especially ones reminiscent of water. Second, I explored Lens Studio’s 3D hand interaction module to trigger particular phrases or animations based on the position of the hand. For now, I plan to build on my first trial to develop a virtual environment that users can immerse themselves in.

kong-FinalPhase2

Inspirational Work

I find the textures in Alpha’s work inspiring, especially the way the fluid yet superficial texture flows out of the eyes. I also noticed that the fluid sometimes disappears into space and sometimes flows down along the surface of the face. This seems to differ depending on the angle of the face, and I wonder how Alpha achieves such an effect.

I found this project captivating because it shares aspects of my initial ideas: it places patches of color on the face, which reminded me of how paint behaves in water. Furthermore, the filter was created with Snap Lens Studio, which made me realize how capable this tool is.

Snap Lens Studio

To implement my ideas, I’m planning to use Snap Lens Studio, for which I found plenty of YouTube tutorials. Though I will have to refer back to the tutorials constantly throughout my process, they taught me the basics of Snap Lens Studio, including how to alter the background and how to place face masks. I also found a tutorial on the material editor that Aaron Jablonski referred to in his post. I believe this feature will allow me to explore and incorporate various textures into my work.

kong-FinalPhase1

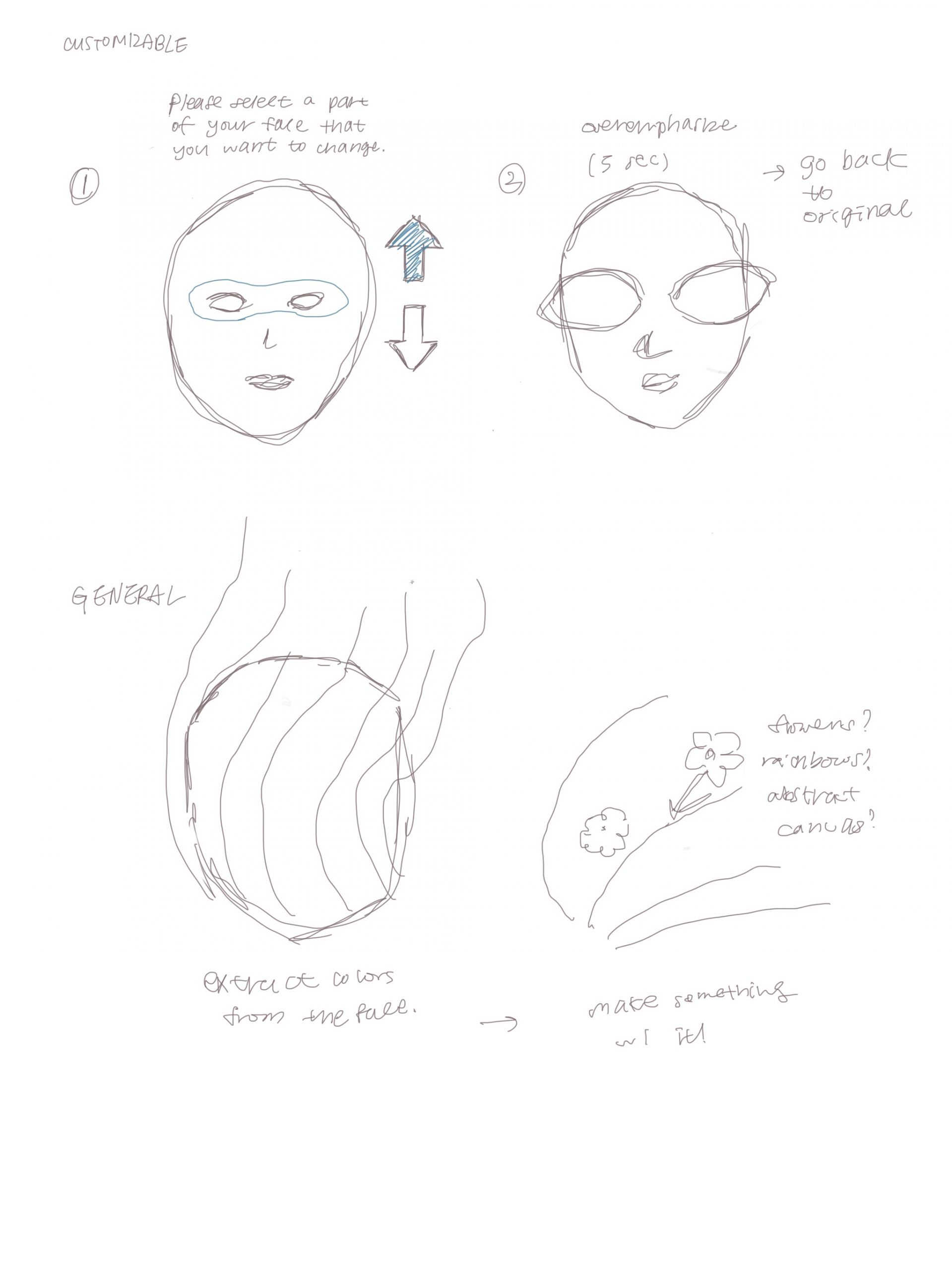

One idea I have is to make an augmented mirror with Snap Lens Studio. Since these filters are popular on social media, the idea reminded me of a trend in which people put on a filter that makes them look “ugly” and then take it off to boost their self-confidence. My concept is similar in that I want people to recognize their own beauty through my augmented mirror. Because everyone has different insecurities about their physical appearance, I think I would have to make either a customizable or a very general augmented mirror, which I will decide on after researching Snap Lens Studio further.

kong-LookingOutwards04

IMAGE OBJECTS by ARTIE VIERKANT

Image Objects responds to a shift in art-making practices and in the definition of the artwork, as contemporary art began to focus on its online representation. It is composed of sculptural works that sit between physical objects and digital images, emphasizing the fluid boundary between the two. The physical pieces were created by printing image-based works on aluminum composite panels so that they would appear three-dimensional. The documentation of these pieces was then altered and circulated online, so each piece of documentation is a unique work of its own.

At first, I was drawn to this artwork because of its appealing visual qualities and simple yet vibrant colors. However, as I learned more about the idea behind it, I became intrigued by its focus on the online circulation of the artwork. Since my own artwork could also be circulated digitally in the future, it led me to consider how audiences interact with my work through their own lens (e.g., a phone camera).

kong-AugmentedBody

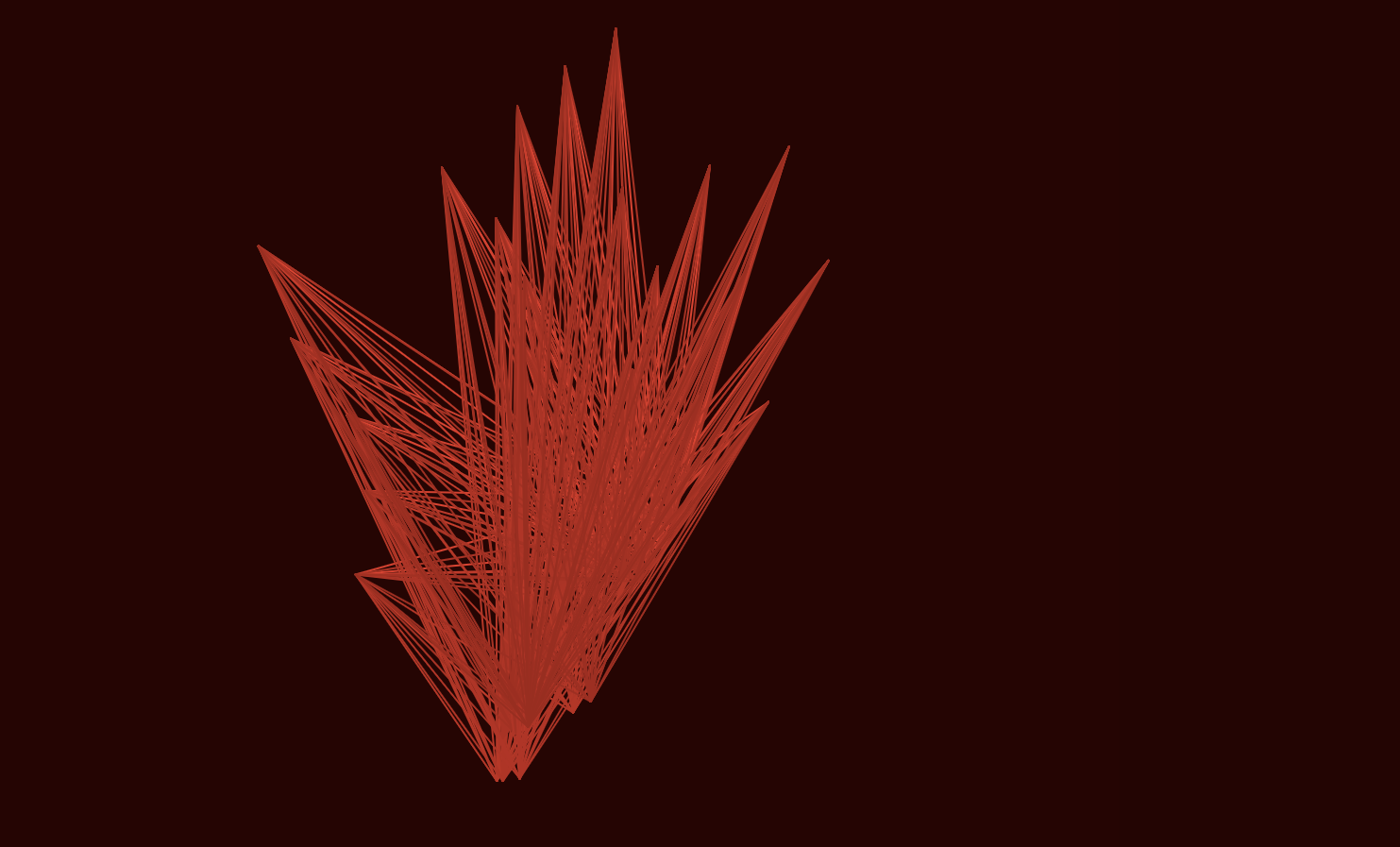

My initial idea was to represent the homesickness I was feeling by using the distance between one’s face and hand: the face would represent the self and the hand would represent home. I wanted to play with the distance between the face and the hand to foster different interactions.

While playing around with the connections, I came up with the idea of recreating the period cramps I feel, as I am currently on my period. Based on the movement of one’s face and hand, various lines stretch, shrink, and strangle one another. When a second hand enters, the lines switch over to the other side, representing sudden pains.

As this was a quick project, I believe there are many ways to extend it. For instance, I could bring in points from the body to create more interactions as well as more diverse forms. Furthermore, instead of lines, I could use shapes such as triangles along with gradients to build a 3D object.

kong-facereadings

Last Week Tonight’s video on facial recognition mentioned that facial recognition no longer applies only to humans. As an example, it showed how sensors can scan fish and apply image processing to identify the fish as well as any relevant symptoms. This struck me, as I had never been aware of or thought about this idea. I believe it opens many opportunities not only for creative practices but also for real-life problem solving, such as protecting endangered animals. Personally, as a pet owner, I would find pet recognition technology helpful: it could detect a pet’s location and share it with the owner to reduce the number of lost pets.

The idea of algorithmic bias was very striking to me, as I hadn’t thought deeply about the negative sides of face technology before. More specifically, Against Black Inclusion in Facial Recognition by Nabil Hassein discusses facial recognition’s racial bias and its use in law enforcement. Though the technology can be used for good, the essay points out that it could strengthen police control and thereby deepen ongoing racial bias. This made me wonder whether facial recognition technology is “really” good and whether there are ways to ensure that it is used only for beneficial purposes.

kong-VQGAN+CLIP

Textual Prompt: joyful beans

Textual Prompt: alice in the wonderland

It was interesting to see that both of my trials produced some animal-like features (cat feet, a fox?) even though my prompts had no direct relation to animals. The resulting images were definitely different from my initial expectations, and I believe matching those expectations would require an in-depth understanding of the software. I like how the images contain brushstroke-like features, though this may have been because the images were not fully processed.

kong-TextSynthesis

InferKit: bright day

Narrative Device: rope, intestine

This was my first experience using AI, and I was pleasantly surprised that it could generate engaging stories. With InferKit, I sometimes even noticed poetic phrases. With Narrative Device, I was surprised that the story read like a horror story, which interested me because it is a type of writing I personally wouldn’t have thought to write. I also noticed that when I typed “heart chain” as the input, it didn’t generate a story but rather a list of related words, such as hairclips, rings, games, and dolls.

kong-ArtBreeder

It was fascinating to see how a few small clicks could completely alter the images. It was also interesting that my final outputs looked mystical and ocean-like even though the original images were composed of unrelated objects, such as traffic lights, flowers, and steel boxes. Through this platform, I experienced an iterative creative process in which I shifted the direction of my final output with each image I selected.