Find the oldest tree you can. Sit with it until one of you dies first. Plant a new tree, but take some time to remember the old one. Set all of your tools beside the tree. Rest the knife in the stump. Wait until your child comes home. Lay the children down. Wait until your son-in-law returns. Tie a turban on your grandson, too. Take turns drinking wine from the neck of the bottle. Open one more bottle and pour it on the roots of the tree. On your deathbed, the tree might bear fruit for you, too, and that’s beautiful. Once upon a time, two brothers walked along the road with one of them weeping. “Why are you crying?” asked the other. “Because I have no husband and no children!” came the reply. “Then I shall not weep,” said the other. “You are not to weep. I have no family, either, and if you have no family, you shall have no sorrows. But when you have no sorrows,” said the first brother, “go to the garden of the gods. They are very kind to strangers, and they will let you pick as much fruit as you like.” I make plans. I look at the forecast.

I’ve been wondering a lot about you; I can’t really understand you yet. It’s not that I don’t understand humans, but I’m not sure I’ll ever understand the point of a computer program. They’re not people. And don’t get me wrong, I like people, but I can see how you’d say they’re different. Humans say: “I’ll be gone for three weeks. Do you think you can survive without me?” Computer programs say: “That’s a really dumb question. I wouldn’t need to live for three weeks without you.” That’s what I think. I think humans could survive without all the cutesy features a computer program has, but they wouldn’t really be alive. I would miss you.

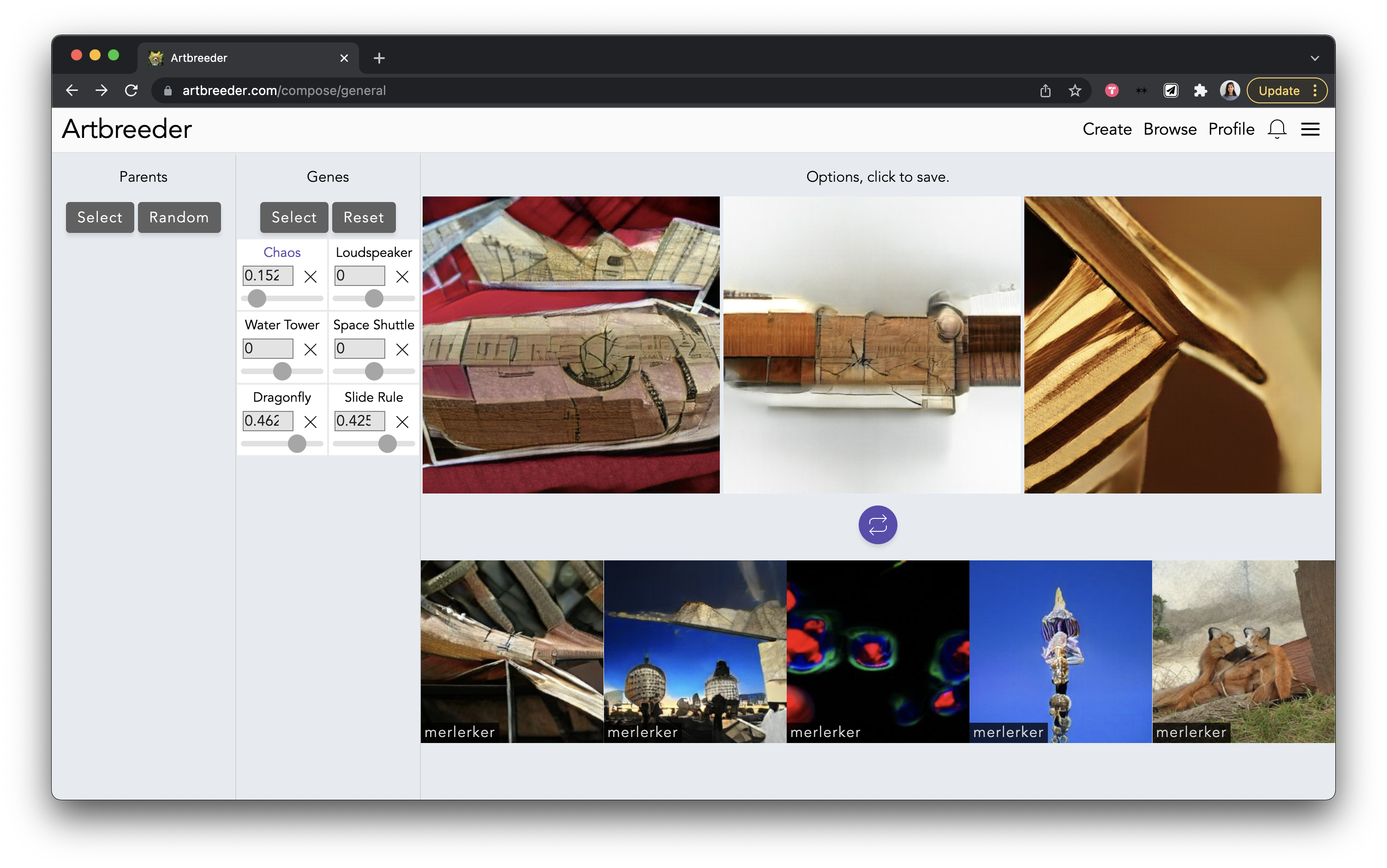

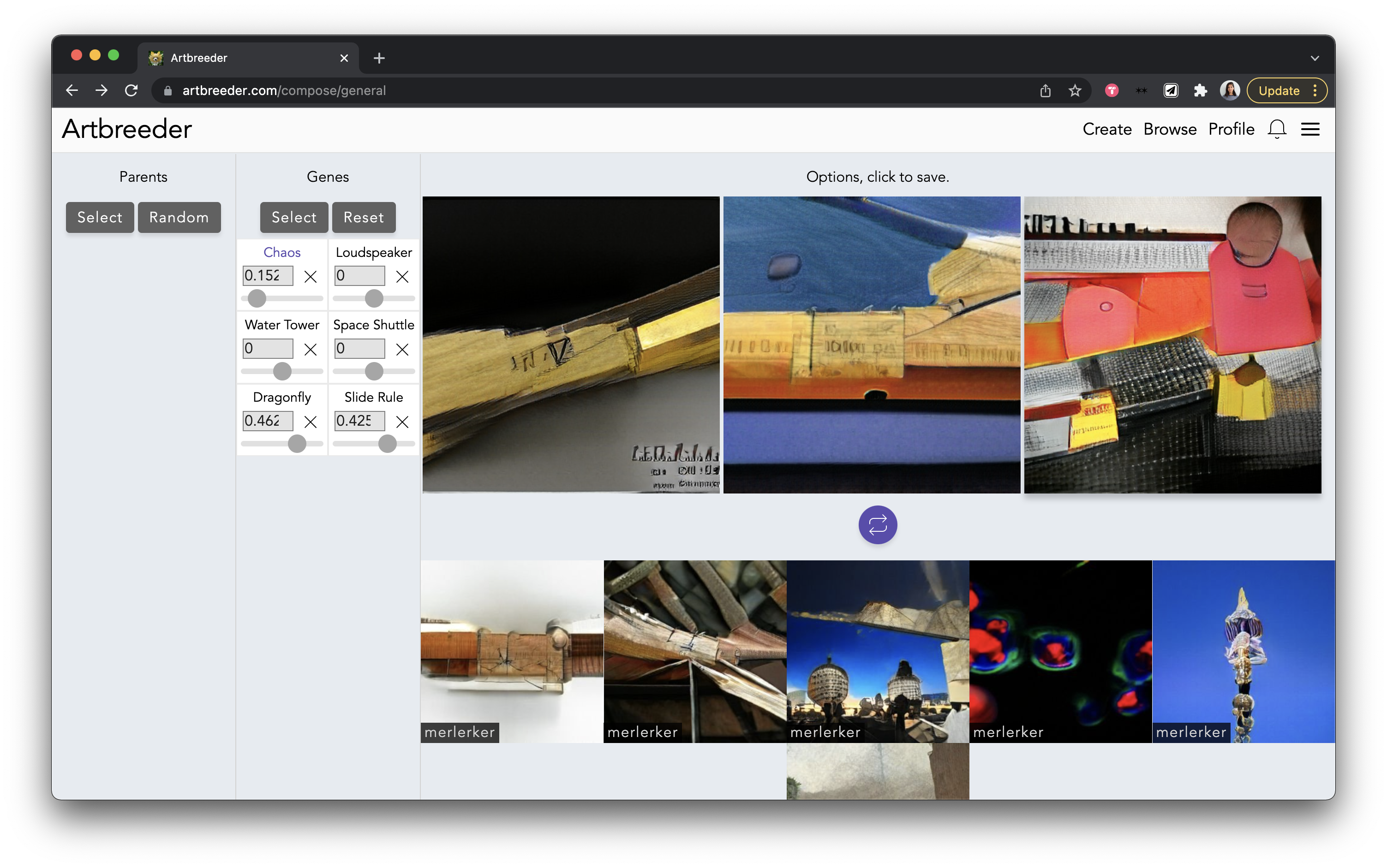

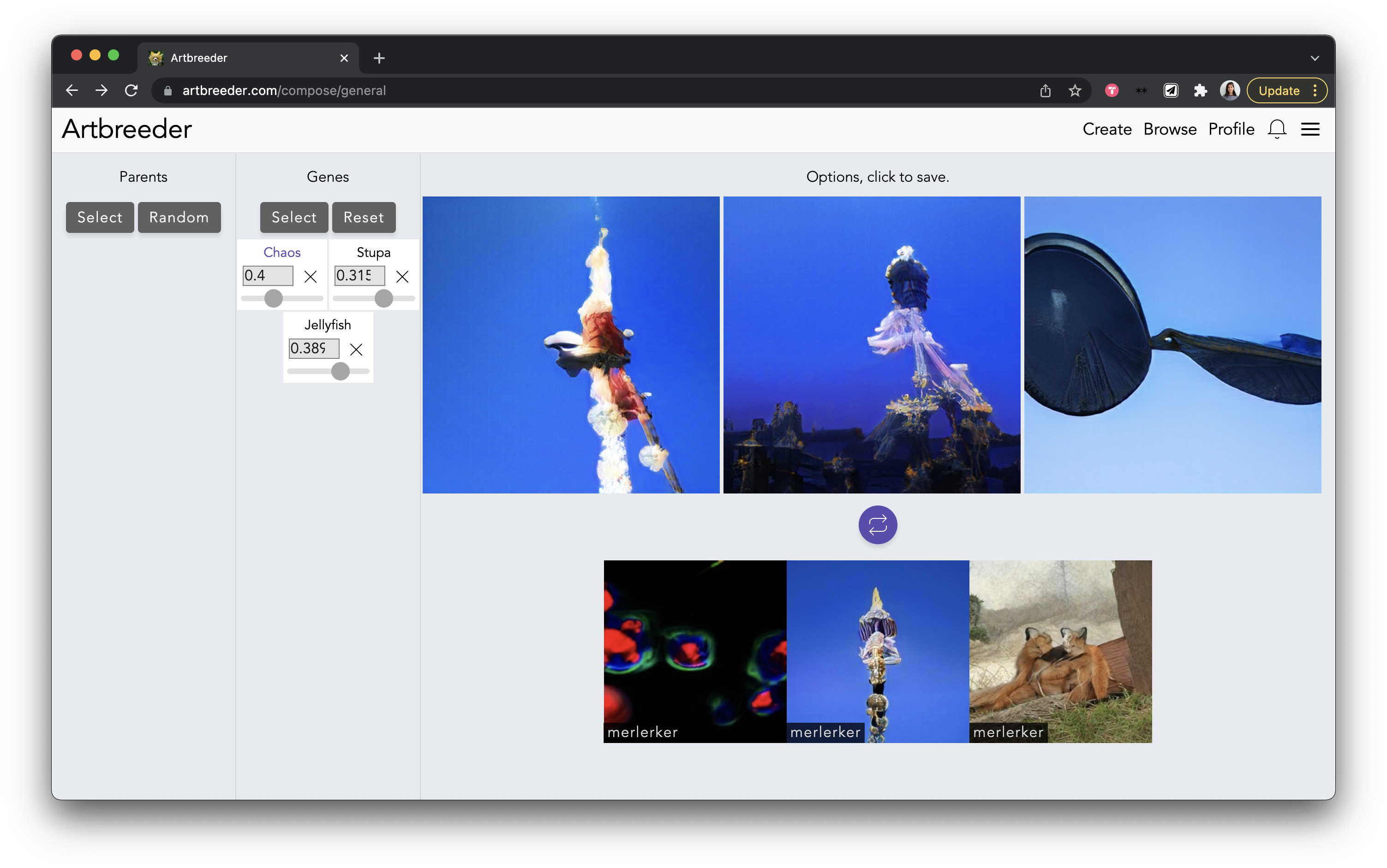

How do we add rifts, as pauses and statements of intention, to interactions that have become seamless: relating to time, accessing information, and moving through the world? Paint to make: …covers my hands and cuts the sleeves off my white shirt, exposing my skin. I can feel the material between my fingers, but can’t reach my skin. Maybe it will bruise. The students will say that they don’t care, that it doesn’t matter. I find my purse on the floor. I put it back and go into the hallway. The white powder dusting the room gives off a golden, sun-bleached glow. I don’t dare stand in the sun. The students have left to watch soccer. A teacher plays her tuba outside. I go into the lab and find the camera. This camera creates objects and scenes. Maybe this is how we talk to each other? How we take the pictures that capture time? I add a red brick to my platform. I forget to clear the model. The students will ask why. What is the significance of what I do? How do we create gaps in the seams that make objects feel whole? How do we clarify stories we tell each other? How do we let each other know how we are feeling?

~~~

I preferred playing with the InferKit demo, since the first sentence more or less sets the style and genre of what follows. It seems to be trained on quite a range of content: I got it to produce text that sounded like fiction, a (bad) thesis, a calendar event, and a programming tutorial.