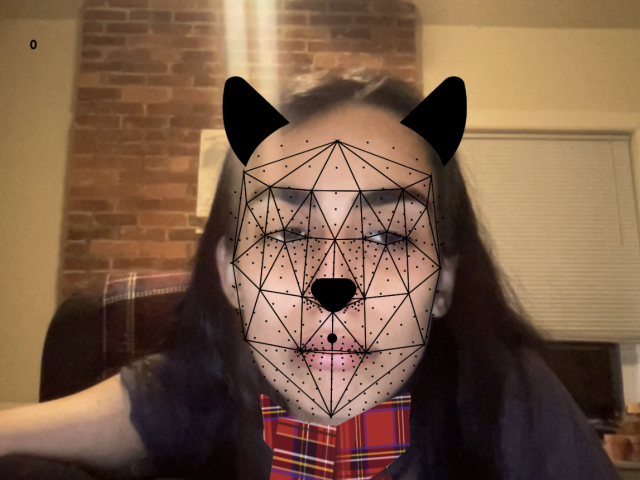

From Joy Buolamwini’s talk: “1 in 2 adults in the U.S. have their face in facial recognition networks.” This is a terrifying fact because, as she says, these networks are very often wrong. Misidentifying someone in the context of policing and the justice system takes it to an entirely new level of terrifying. Many people, because they do not know how these systems work (or do, but know that others don’t), treat them as foolproof and factual, and use these “facts” to advance their own goals.

In Appropriating New Technologies: Face as Interface, Kyle McDonald writes, “Without any effort, we maintain a massively multidimensional model that can recognize minor variations in shape and color,” and goes on to reference a theory that “color vision evolved in apes to help us empathize.” I found this super interesting and read the article it linked to. The paper, published by a team of California Institute of Technology researchers, “[suggested] that we primates evolved our particular brand of color vision so that we could subtly discriminate slight changes in skin tone due to blushing and blanching.” This is just so funny to me: we are such emotional and empathetic creatures.