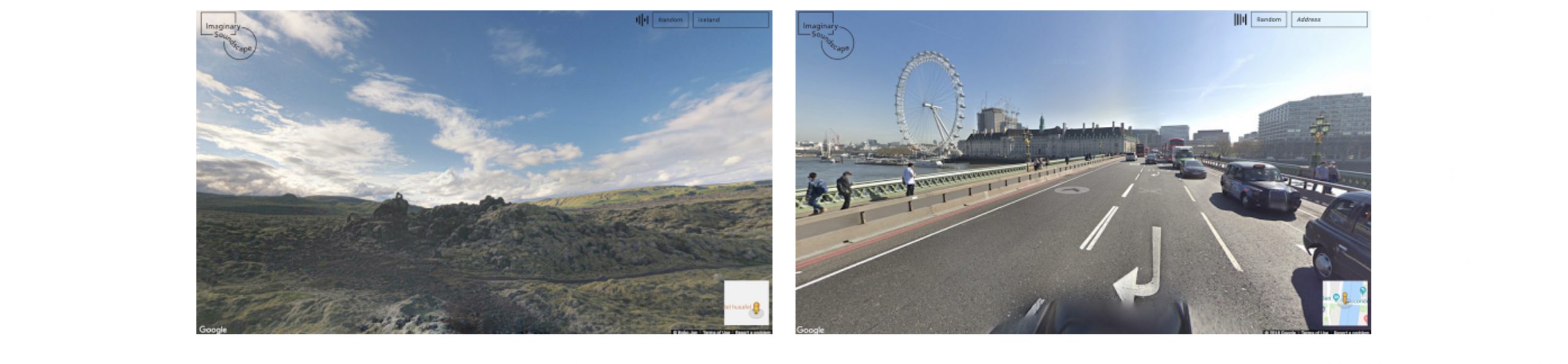

https://experiments.withgoogle.com/imaginary-soundscape

I found this project and thought it was a really cool use of ML with a lot of potentials. In the demo, the model generates sound based on the street view, giving the user a full audio visual experience from the purely visual street map data. I thought it really utilized DL to its strength — finding patterns for massive datasets. With only a reasonably sized training set, it can be generalized to the massive street view data we have. The underlying model (if I understood/guessed correctly) is a CNN (which is frozen) that takes in the image to generate an embedding, and another CNN that is trained but discarded at inference which takes in the sound file to generate an embedding as close to the one generated by the image CNN for the paired image. The embedding for each sound file is then saved and used as a key to retrieve sound file at inference time. With more recent developments in massive language models (transformers,) we’ve seen evidence (Jukebox, Visual transformers, etc.) showing that they are more or less task agnostic. Since the author mentioned that the model sometimes lacks a full semantic understanding of the context but simply match sound files to the objects seen in the image, these multi-modal models are a promising way to further improve this model to account for more complex contexts. It might also open up some possibilities of remixing the sound files instead of simply retrieving existing sound data to further improve the user experience. This might also see some exciting uses in the gaming/simulation industry. Windows’ new flight simulator is already taking advantage of DL to generate a 3D model (mainly buildings) of the entire earth from satellite imagery, it’s only reasonable to assume that some day we’ll need audio generation to go along with the 3D asset generation for the technology to be used in more games.