This project plays with the idea that as we give our technology the agency to think like us, we also give it our own human flaws.

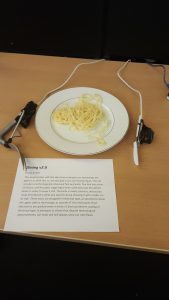

Therefore I’ve made an ‘IoT-ized’ dining set, featuring a knife and a fork that have been upgraded to improve the user’s dining experience.

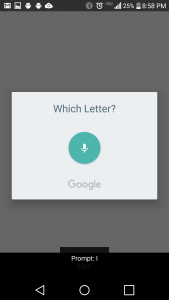

The dining set features an eco-friendly knife that you must pump yourself, and a fork that gets a bit nervous when it comes to stabbing food, and therefore tries to avoid its responsibilities. The fork is plagued by a fear of failure, so it avoids its work rather than attempting to do its job. The knife is afraid to take creative liberties, so you must instruct it exactly where you want it to go.

Demonstration Video:

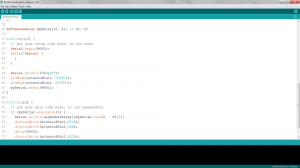

Code:

Making the Fork:

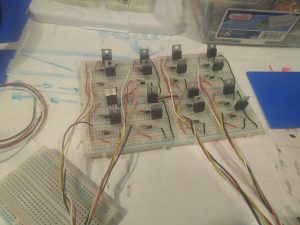

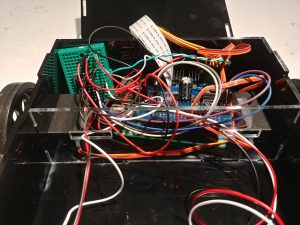

I initially prototyped the fork with a plastic fork, a servo, an Arduino, and a tilt sensor. I cut the fork in half and used a straw to provide resistance, which allowed the servo to pull on a string that moved the fork head down on command.

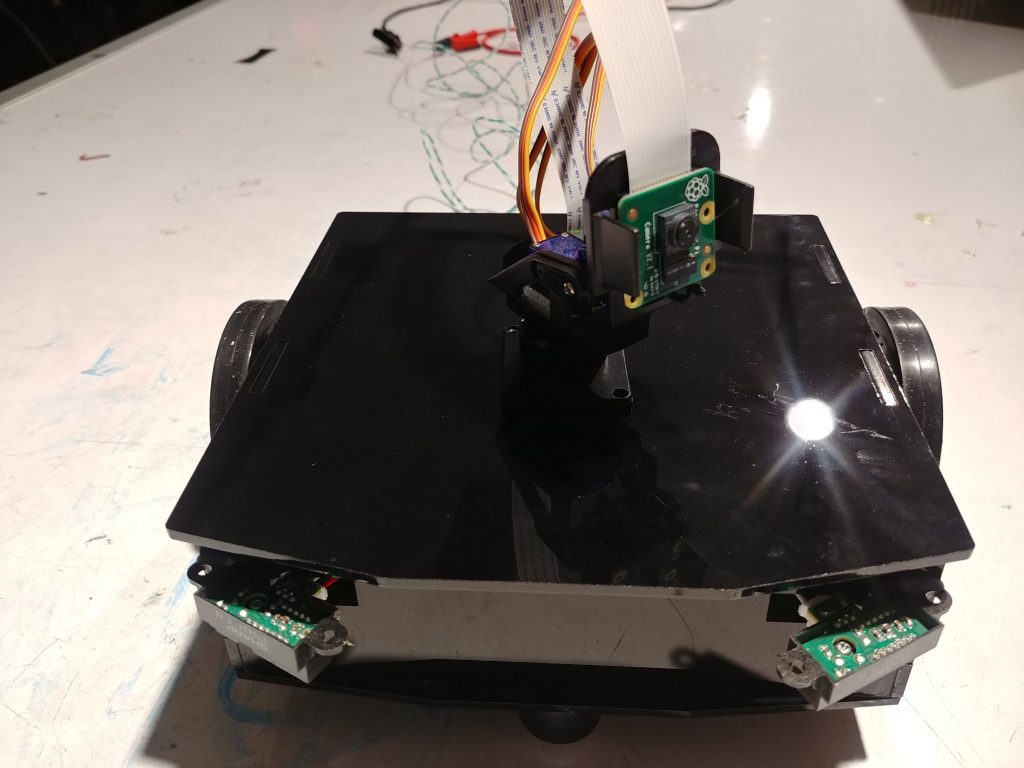

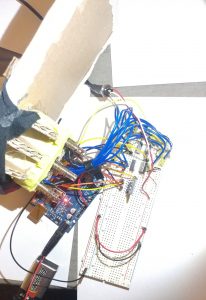

I then found some springs, which made the motion more organic, making it seem as though the fork had a life of its own. For the show, I used an Arduino Pro Mini and soldered it to a tilt sensor and to a servo attached to a larger metal fork. By connecting a spring between a cut-off fork head and the fork base, and attaching this spring to the servo, I could reproduce the motion of the prototype and move the fork head.
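The fork's behaviour (tilt sensor in, servo angle out) could be sketched roughly as below. This is not the code used in the show: the angles, the debounce count, and the names `ForkState` and `updateFork` are all assumptions made for illustration. The debounce is there because tilt sensors tend to chatter, so the sketch waits for a few matching readings before the fork changes its mind.

```cpp
// Hypothetical sketch of the fork's "avoidance" logic.
// All constants and names are assumptions, not values from the original build.

constexpr int kRestAngle = 0;      // assumed servo angle with the fork head straight
constexpr int kFlinchAngle = 90;   // assumed servo angle that pulls the head away
constexpr int kDebounceReads = 3;  // assumed number of consecutive readings needed to switch

struct ForkState {
    bool flinching = false;  // current debounced state
    int streak = 0;          // consecutive readings that disagree with that state
};

// Feed in one raw tilt-sensor reading; returns the angle the servo should hold.
inline int updateFork(ForkState& s, bool tiltedTowardFood) {
    if (tiltedTowardFood != s.flinching) {
        // The reading disagrees with the current state: count it.
        if (++s.streak >= kDebounceReads) {
            s.flinching = tiltedTowardFood;
            s.streak = 0;
        }
    } else {
        // The reading agrees with the current state: forget any earlier disagreement.
        s.streak = 0;
    }
    return s.flinching ? kFlinchAngle : kRestAngle;
}
```

On an Arduino, something like this would be called once per loop with the tilt pin's `digitalRead` value, and the result passed to `Servo::write`.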

Making the Knife:

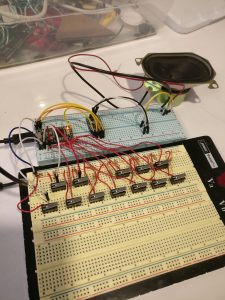

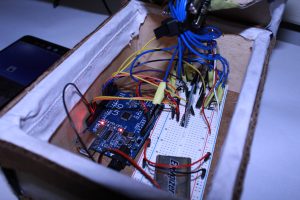

I 3D printed a gearbox for a servo that translated rotational to linear motion. (STL files here)

Then I soldered together an Arduino Pro Mini, a 100k ohm sliding potentiometer, and a servo, and mapped inputs from the pot to rotation (and thus linear motion) of the servo. I split a knife in half and attached the blade to the end of the linear actuator and the handle to its base.
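The pot-to-servo mapping can be illustrated with a minimal sketch. It assumes a 10-bit analog reading (0 to 1023, as on the Pro Mini's ADC) and a servo sweep of 0 to 180 degrees; the sweep, and the names `mapRange` and `potToServoAngle`, are assumptions for illustration. `mapRange` uses the same integer arithmetic as Arduino's built-in `map()`.

```cpp
// Hypothetical sketch: rescale a slider reading to a servo angle.
// The 0..180 degree sweep is an assumption, not a value from the original build.

constexpr long kAdcMax = 1023;       // full-scale reading of a 10-bit ADC
constexpr long kServoMaxDeg = 180;   // assumed servo sweep in degrees

// Linear rescale with truncating integer division, like Arduino's map().
constexpr long mapRange(long x, long inMin, long inMax, long outMin, long outMax) {
    return (x - inMin) * (outMax - outMin) / (inMax - inMin) + outMin;
}

// Clamp the raw reading to the ADC range, then convert it to degrees.
inline int potToServoAngle(long reading) {
    if (reading < 0) reading = 0;
    if (reading > kAdcMax) reading = kAdcMax;
    return static_cast<int>(mapRange(reading, 0, kAdcMax, 0, kServoMaxDeg));
}
```

On the board, this would amount to passing `potToServoAngle(analogRead(pin))` to `Servo::write`, where `pin` is whichever analog input the slider is wired to. How far the blade travels per degree depends on the printed gearbox, which is not modelled here.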

For both of the utensils, I molded a handle out of InstaMorph, and used it to hide the electronics of the devices. I then wrapped that in electrical tape, mimicking the way sensitive electronics are packaged in devices (for example, batteries or ICs), as a way to highlight the difference between how ‘techy’ devices are constructed versus how the target audience perceives them.

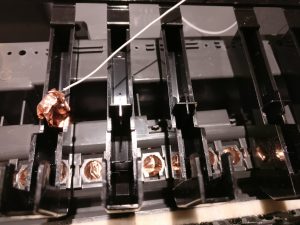

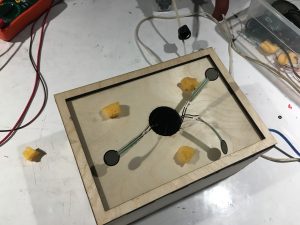

I had also worked on the gearbox for a jigsaw knife (a knife that moves in a similar way to a jigsaw blade), as an example of an overenthusiastic and unnecessary device, but had trouble attaching a motor to the gearbox.

I used this gearbox, and laser cut a baseplate to attach to my motor. I also made a D-shaft to hex-shaft coupler out of acrylic so that the motor shaft would fit nicely into the gearbox assembly, but the motor managed to break through the acrylic because the coupler was not aligned properly when the glue dried. Unfortunately, I did not realize that the repeated stalling was caused by misalignment, and when I tried to glue it again in the correct alignment, it dried a little bit off center.