Introduction:

This project selects the often-overlooked Chinese names of international students, explains their meanings, and visualizes them through AI image generation.

Link to the “Muted Identities” project website

Inspiration:

As an international student from China, one of the things I hear most often while studying in the US is that “Chinese students tend to be shy and quiet.” In reality, international students’ personalities are often “muted” when they speak another language. They might be sharp, funny, or wild in their native language, but those sparkling qualities do not always get “translated” when they live in an English-speaking country.

This project attempts to uncover the identities that the language barrier hides while these students live abroad. It does so by bringing forward their original names, which are often ignored and forgotten in an English-speaking environment.

Process:

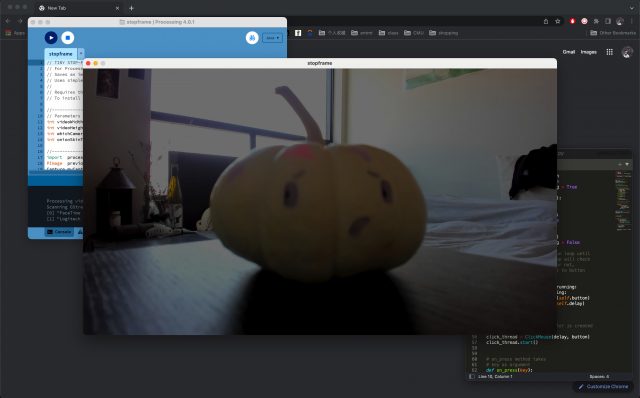

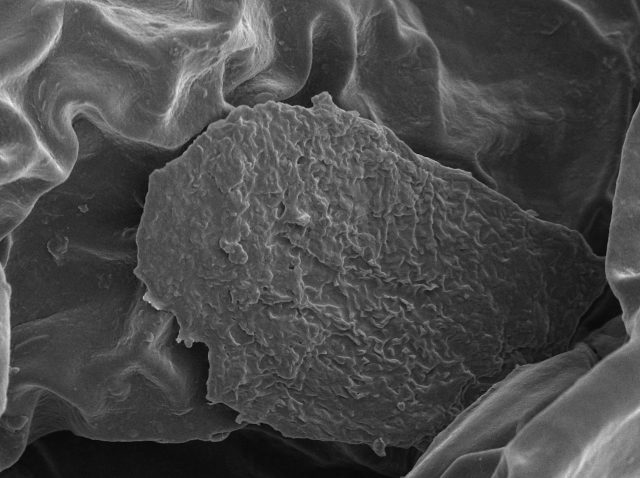

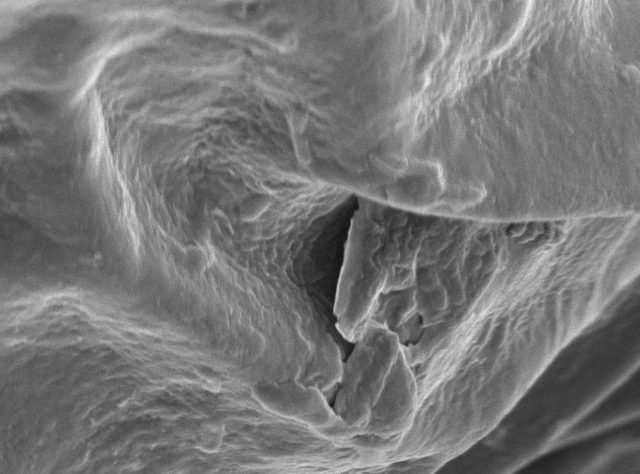

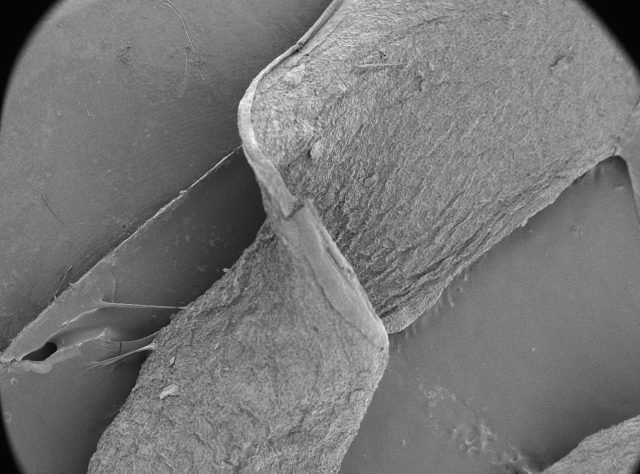

The participants were asked to sign both their English and Chinese names digitally, in their usual handwriting. They were then asked to describe the meaning of their Chinese name.

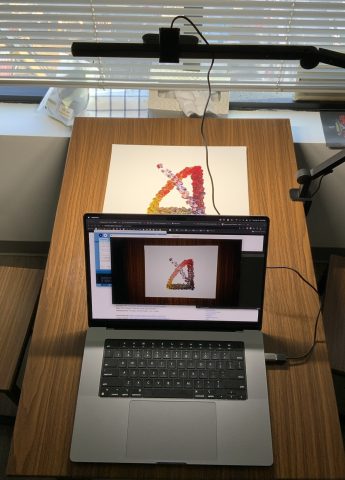

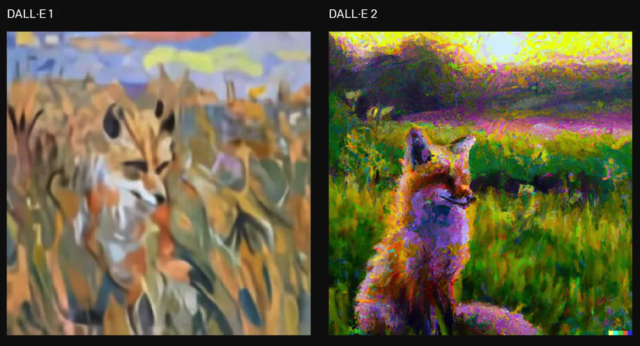

Each description was then used as a prompt for the Midjourney AI image generator, which produced a corresponding image.

The participants then gave feedback on the generated image, such as a preferred composition, art style, or color scheme. The image was refined over several rounds until it matched their own interpretation of their name.

Example: Bella’s Chinese name is 刘宇辰, whose characters evoke the universe, stars, and a dragon. The description “A Chinese dragon in a starry universe” was given as a prompt to the Midjourney generator. The image went through several iterations until Bella was satisfied with the result.

Explanation:

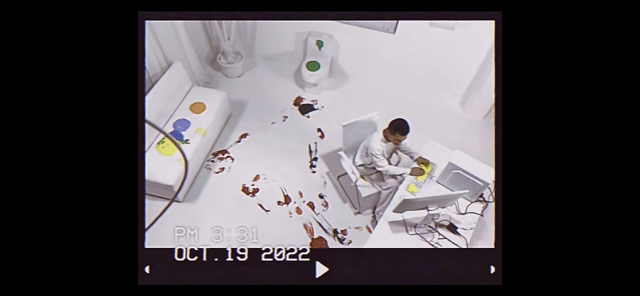

The website displays the English names of eight international students. Hovering over a flip card “reveals” the student’s true, Chinese name, and clicking the card opens a further explanation and visualization of that name.

Comparing each student’s given Chinese name with their chosen English name leads to an interesting observation. The comparison subtly reveals a process of choosing a self-identity, a “doppelgänger” that represents them in a new country. For example, the student who chose the feminine “Bella” was given a masculine name at birth, and the student who chose “Rigeo,” a self-invented word, was given a common name at birth.

Feedback:

Although the project is conceptually simple, it resonated with people more than I expected. When I showed it in class and privately to friends, many more international students, including Korean and Japanese students, asked to add their names to the list. They were enthusiastic about creating visual representations of their names.