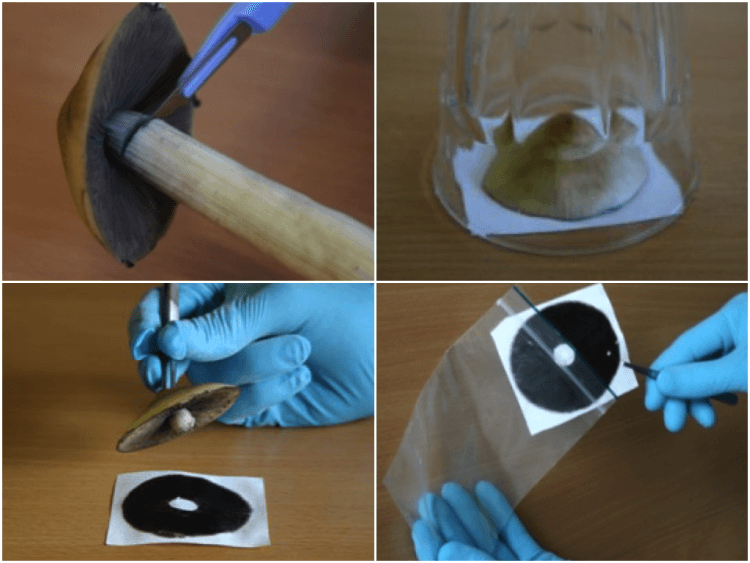

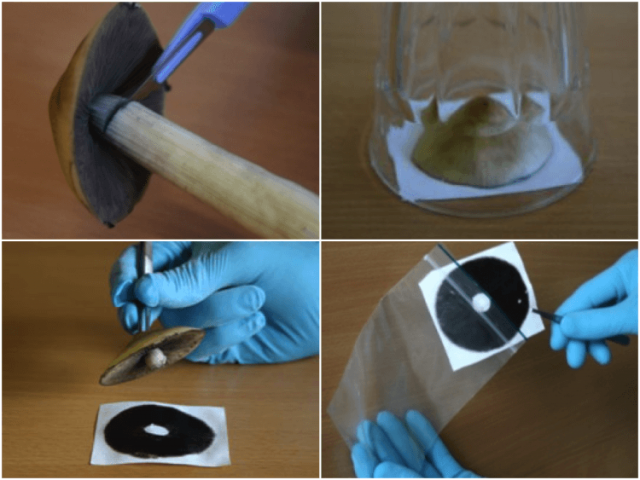

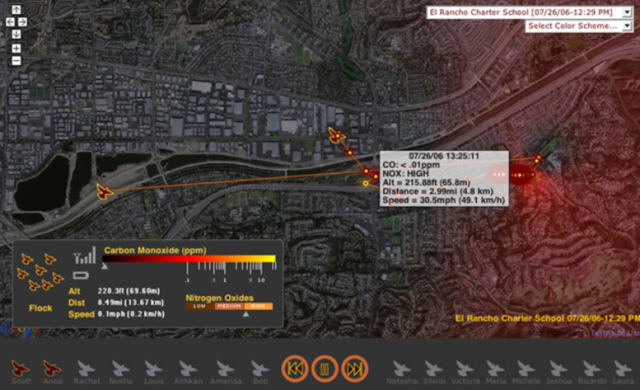

For my final project, I created a pipeline for capturing super-high-resolution, stitched-together images from the studio’s Unitron zoom microscope.

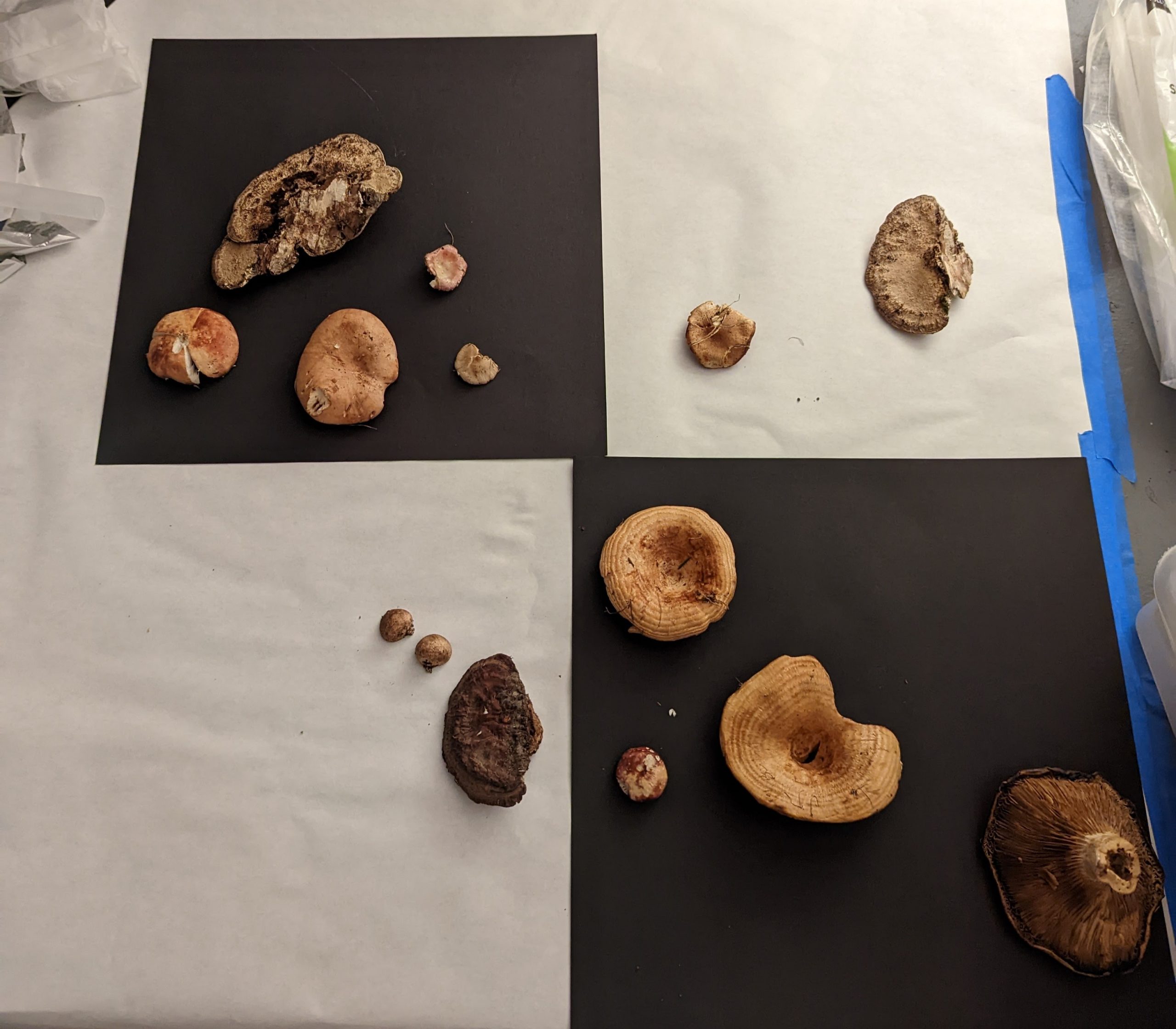

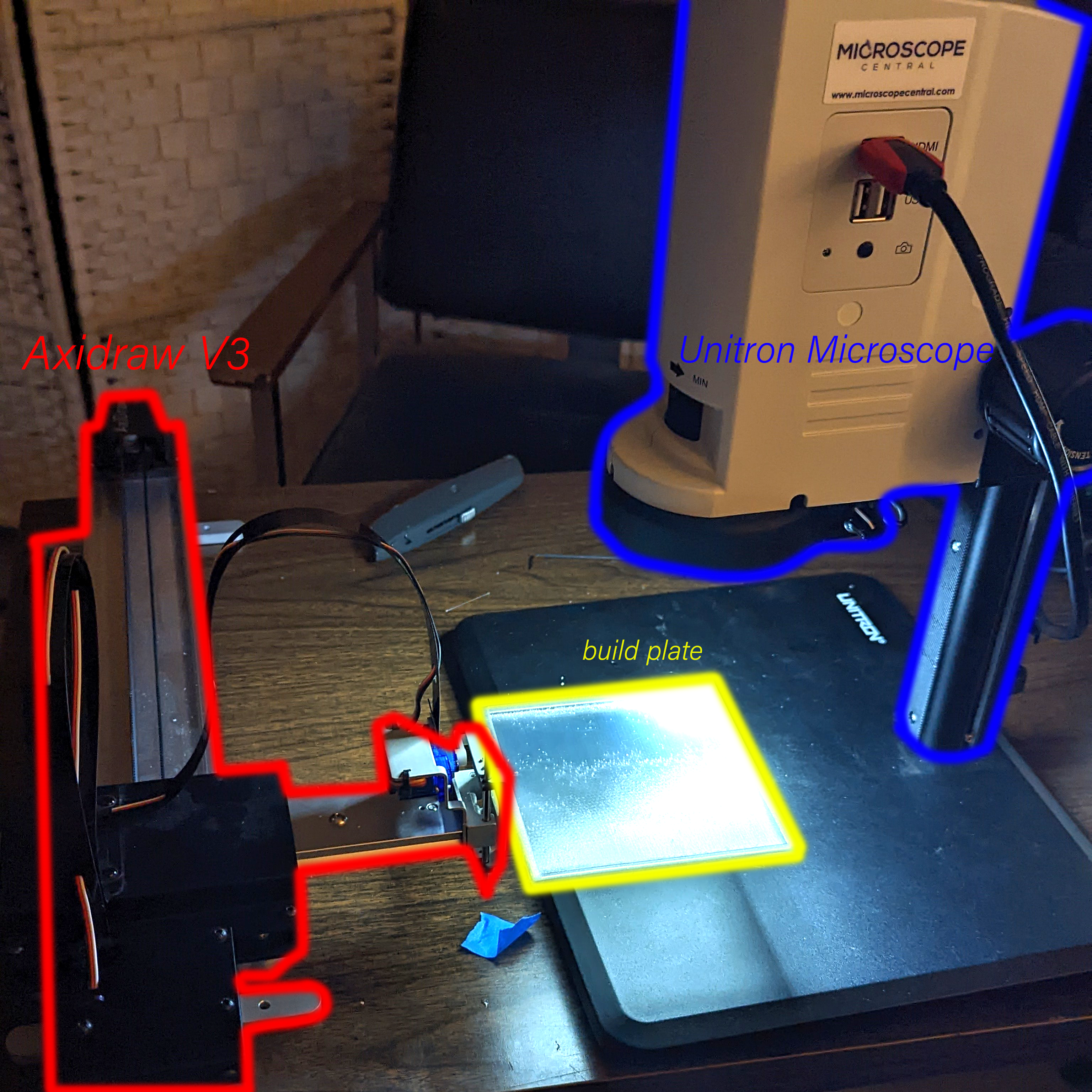

The main components of my capture setup are an AxiDraw A3, a custom 3D-printed build plate, and the Unitron Zoom HD microscope.

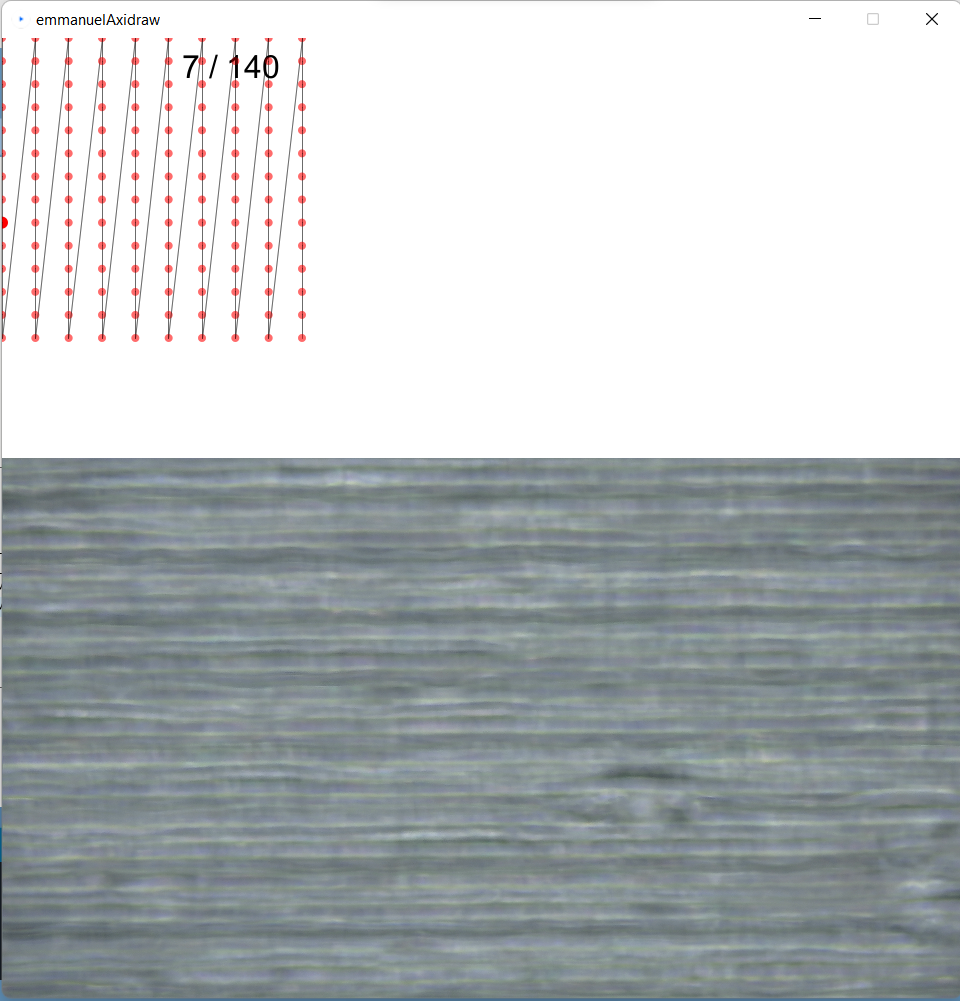

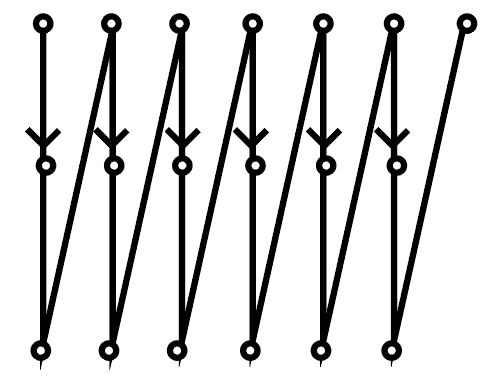

These components are all connected to my laptop, which runs a Processing script that moves the AxiDraw build plate along a predetermined path, stopping at each point along the way to capture an image from the microscope. With this code I have full control over the variables that move the AxiDraw arm, so I can make scans with a specific number of images, image density, and time interval between captures. (This code was given to me by Golan Levin and was edited in close collaboration with Himalini Gururaj and Angelica Bonilla.)
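As an illustration of what a "predetermined path" can look like, here is a minimal sketch that generates capture positions for a rectangular grid visited in a zigzag (serpentine) order, so the plate snakes back across the subject instead of making a long return move at the end of each row. The function name `serpentine_path` and its parameters are hypothetical and not taken from the actual Processing script; the real AxiDraw movement and microscope capture calls are left as a placeholder.

```python
from __future__ import annotations


def serpentine_path(cols: int, rows: int, step_mm: float) -> list[tuple[float, float]]:
    """Return (x, y) plate offsets in millimetres for a cols x rows grid.

    Even rows are visited left to right and odd rows right to left, so the
    plate snakes across the subject instead of jumping back each row.
    """
    points: list[tuple[float, float]] = []
    for row in range(rows):
        # Reverse the column order on every other row (the zigzag)
        col_order = range(cols) if row % 2 == 0 else range(cols - 1, -1, -1)
        for col in col_order:
            points.append((col * step_mm, row * step_mm))
    return points


if __name__ == "__main__":
    # Hypothetical 3 x 2 scan with 4 mm between capture points
    for x, y in serpentine_path(cols=3, rows=2, step_mm=4.0):
        # Placeholder for the real move / pause / capture steps
        print(f"move to ({x:.1f}, {y:.1f}) mm, pause, capture")
```

The number of images is `cols * rows`, image density is set by `step_mm`, and a capture interval would be the pause inserted at each point.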

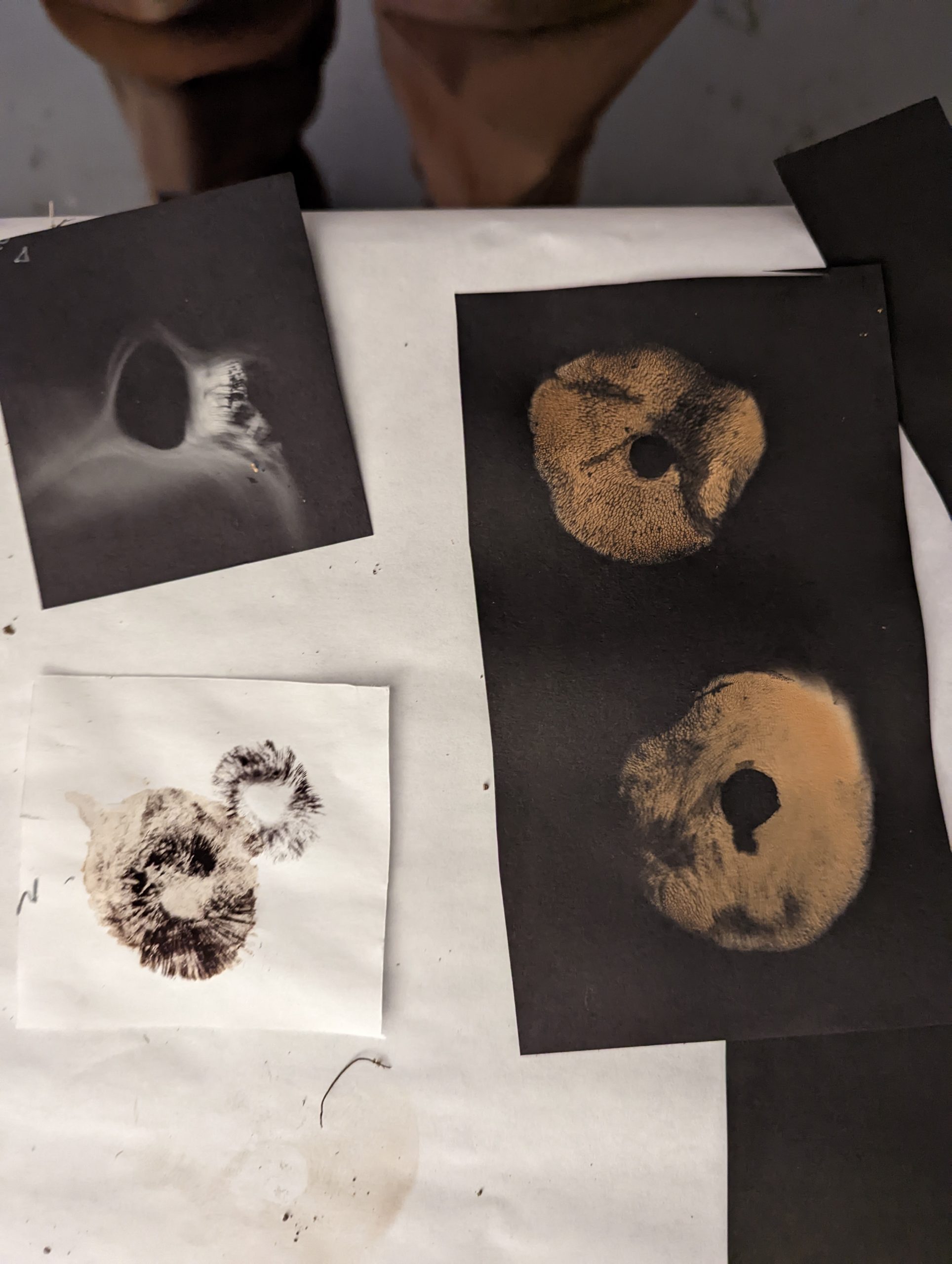

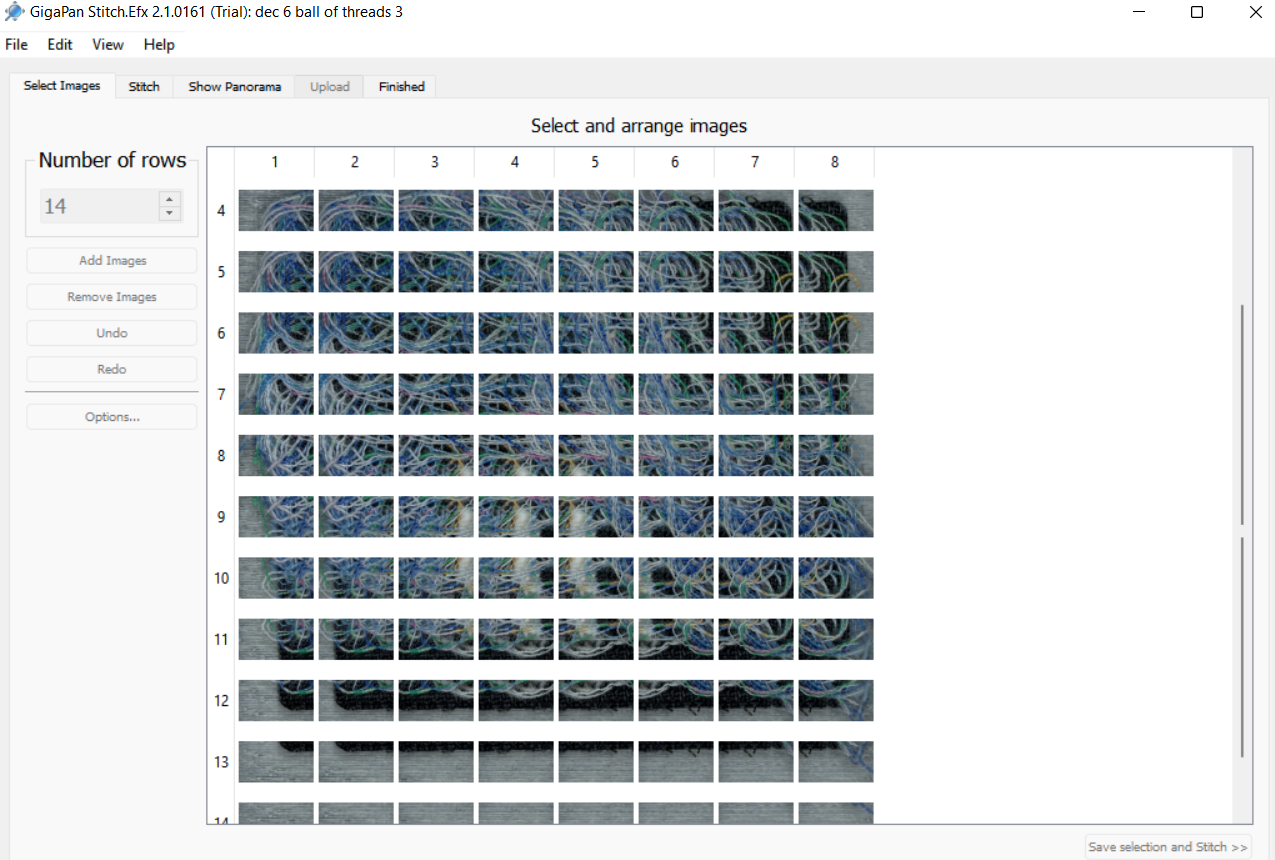

Next, I load the images output by the Processing program into a program called GigaPan Stitch, which aligns and merges the edges of the photographs. Each photograph needs some amount of overlap with its neighbors for the software to detect and match up edges.
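The overlap requirement determines how far the plate should move between captures. A rough sketch, assuming the microscope’s field of view is known in millimetres and overlap is expressed as a fraction of that field; the function names and the numbers in the example are hypothetical placeholders, not measurements of the Unitron Zoom HD.

```python
import math


def step_size(fov_mm: float, overlap: float) -> float:
    """Plate travel between captures so neighbouring images share `overlap`
    (a fraction from 0 up to, but not including, 1) of the field of view."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in [0, 1)")
    return fov_mm * (1.0 - overlap)


def images_needed(subject_mm: float, fov_mm: float, overlap: float) -> int:
    """Captures needed along one axis to cover `subject_mm` of the subject."""
    if subject_mm <= fov_mm:
        return 1
    step = step_size(fov_mm, overlap)
    # The first frame covers fov_mm; every further frame adds `step` mm of new area
    return 1 + math.ceil((subject_mm - fov_mm) / step)


if __name__ == "__main__":
    # Hypothetical numbers: 4 mm field of view, 25% overlap, 16 mm wide subject
    print(step_size(4.0, 0.25))            # 3.0 mm between captures
    print(images_needed(16.0, 4.0, 0.25))  # 5 captures per row
```

The same calculation applies along the other axis. More overlap gives the stitcher more shared detail to match, at the cost of more captures for the same subject.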

From there, the stitched images are uploaded directly to the GigaPan website, where they are accessible to all.

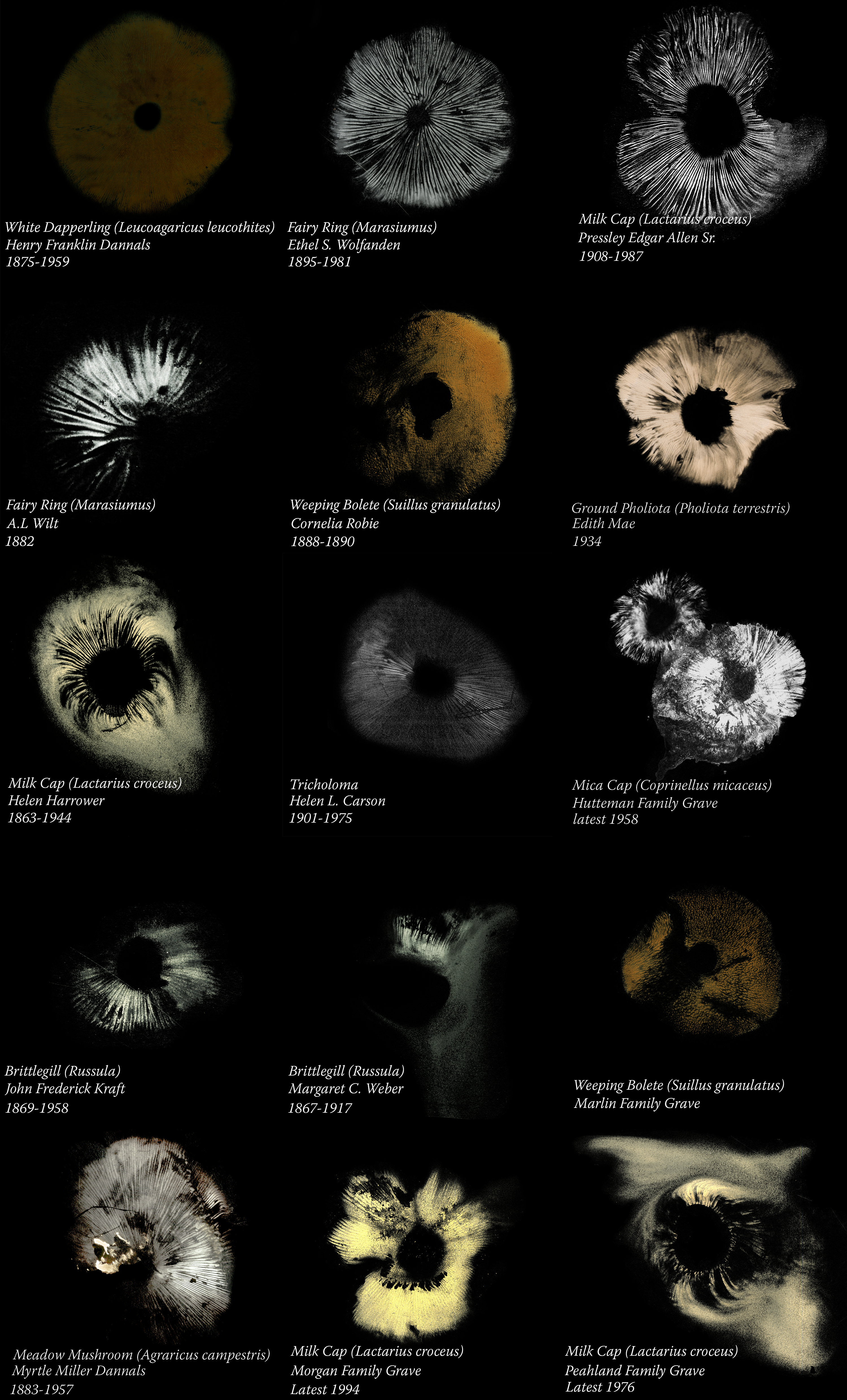

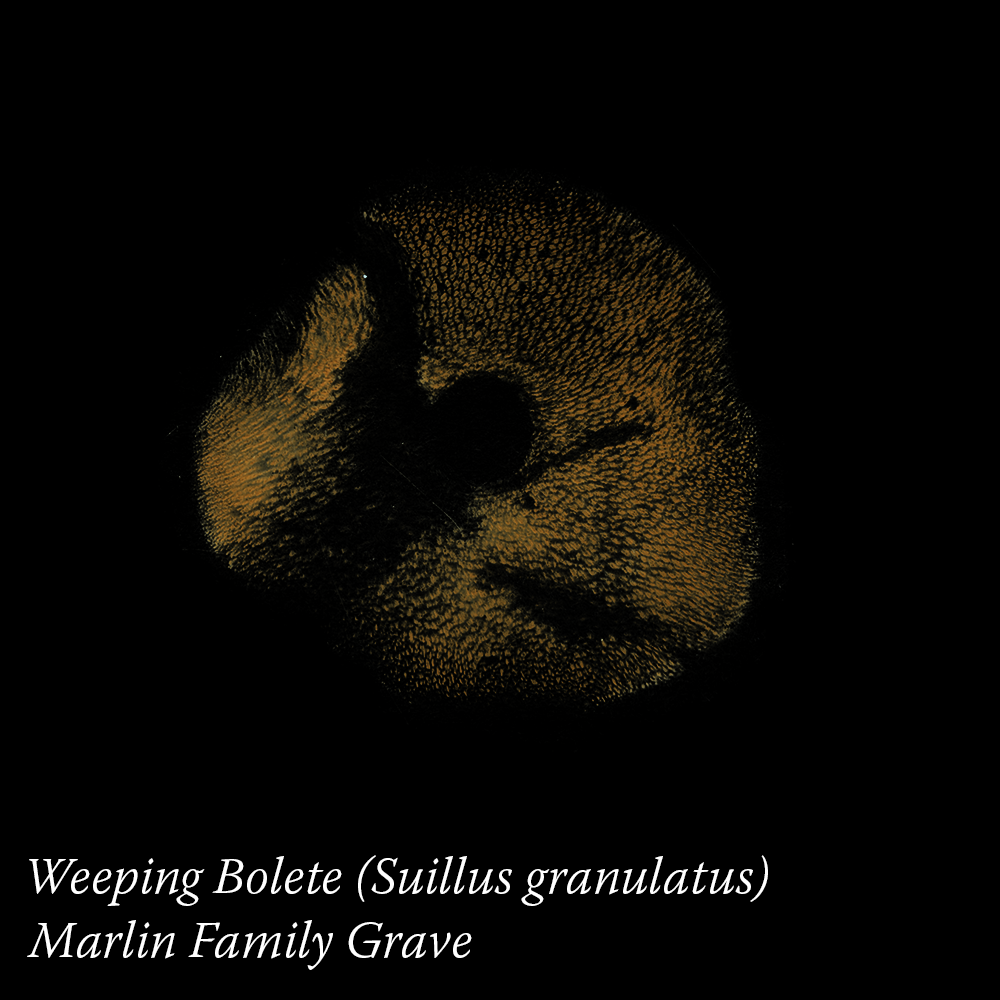

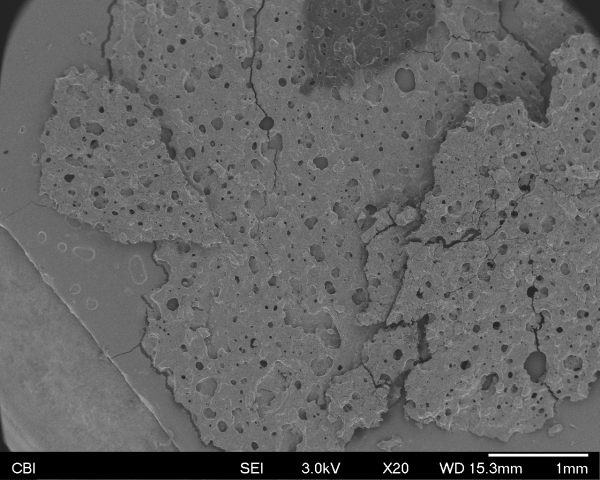

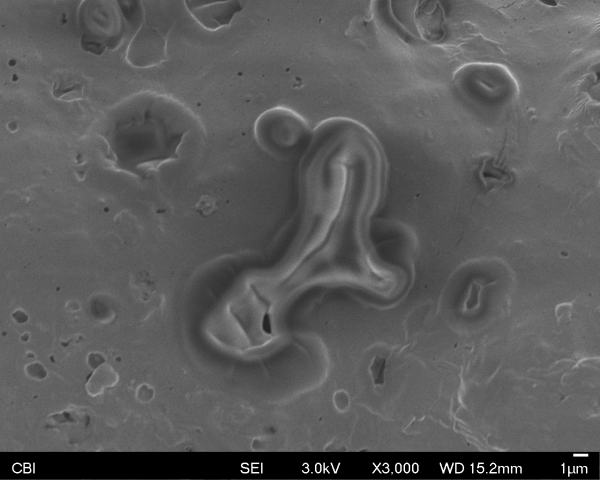

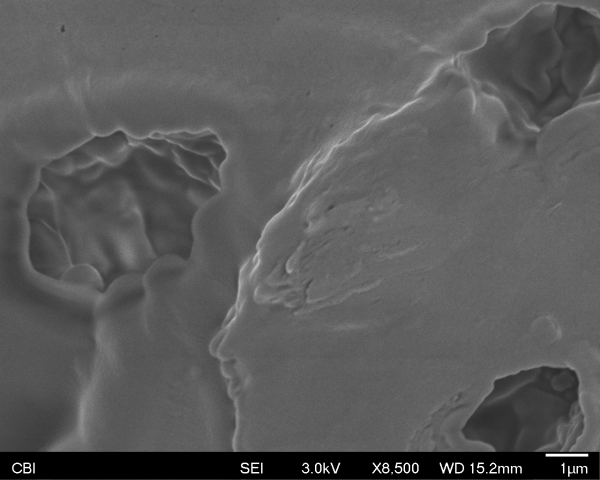

Here are some scans I have made so far with this method:

http://www.gigapan.com/gigapans/231258

http://www.gigapan.com/gigapans/231255

http://www.gigapan.com/gigapans/231253

http://www.gigapan.com/gigapans/231250

http://www.gigapan.com/gigapans/231241

http://www.gigapan.com/gigapans/231256

http://www.gigapan.com/gigapans/231259

Here’s a timelapse of a scan I couldn’t use:

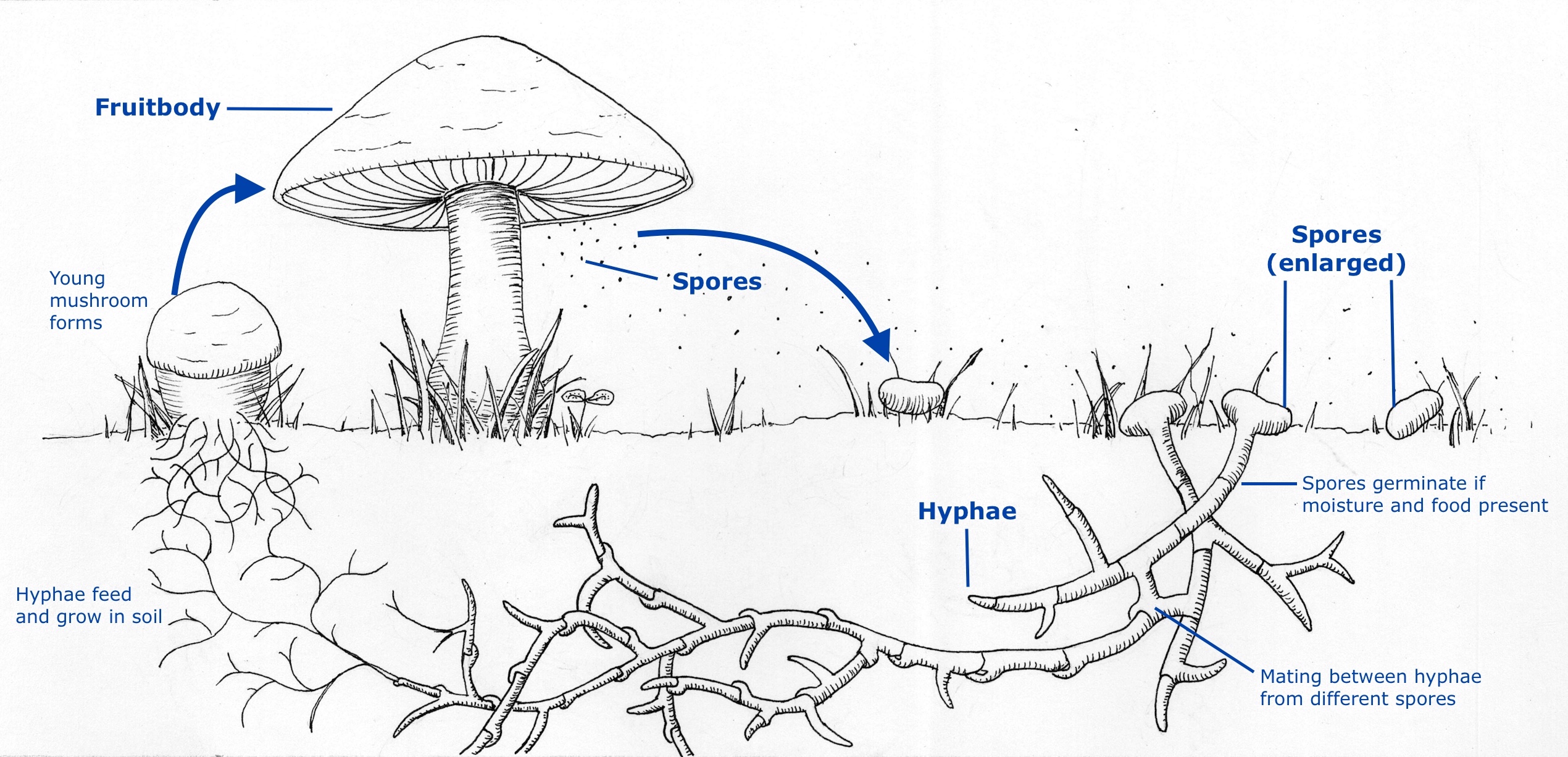

This process opens up scanning to a host of subjects that a flatbed scanner may not be able to capture, such as objects with unusual topology and subjects that ideally should not be dried out. I also believe that with proper stitching software I will be able to record images with a density and DPI beyond that of a typical flatbed scanner.
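One way to compare the result with a flatbed scanner is to estimate the effective resolution of a single capture: its pixel width divided by the physical width of the field of view in inches. A back-of-the-envelope sketch; the 1920 px frame width and 4 mm field of view are hypothetical placeholders, not specs of the Unitron Zoom HD.

```python
MM_PER_INCH = 25.4


def effective_dpi(image_width_px: int, fov_width_mm: float) -> float:
    """Pixels recorded per inch of subject in a single capture."""
    return image_width_px * MM_PER_INCH / fov_width_mm


if __name__ == "__main__":
    # Hypothetical camera: 1920 px wide frame spanning a 4 mm field of view
    print(round(effective_dpi(1920, 4.0)))  # 12192
    # Halving the field of view (doubling magnification) doubles the DPI
    print(round(effective_dpi(1920, 2.0)))  # 24384
```

Stitching more frames together makes the final image larger, but the DPI of that image is still set by the per-frame value above, which depends on magnification, not on how many frames are captured or how much they overlap.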

I have a lot I would change about my project if I were given the chance to restart or work on it further. I didn’t have enough time to try software similar to GigaPan, and I think in many ways GigaPan is a less efficient, data-capped method of image stitching compared to what I need. I also wish the range of objects I could capture did not come with so many stipulations. At the moment I am only able to get a thin sliver of my subject in focus (a single focal plane), whereas I would have preferred to stitch together different focal planes. The subjects also have to be rigid enough not to deform or slosh around while the AxiDraw bed is moving. With this setup I am super excited to photograph the live mushroom cultures I have been growing, along with other biomaterials that do not scan well on a flatbed scanner. I want to use this setup to its fullest potential and be able to use the microscope near maximum magnification while keeping the result viewable.

This project really tested my observational, and hence problem-solving, skills. There were many moments when, after viewing my exported images, I detected an issue in the way I was capturing that I could then experiment with. For example, I realized that increasing the overlap between images doesn’t increase DPI unless I also increase the magnification, and that with a zigzag path, half of my images were being stored in the wrong order.
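The zigzag ordering problem can be corrected after capture by mapping each image’s sequence number back to its grid position. A minimal sketch, assuming captures are numbered 0, 1, 2, … in the order they were taken, that the grid width `cols` is known, and that odd rows ran right to left; the function names are hypothetical, and whether the stitching step expects plain left-to-right, top-to-bottom order is also an assumption.

```python
from __future__ import annotations


def grid_position(index: int, cols: int) -> tuple[int, int]:
    """Map a capture's sequence number on a serpentine path to (row, col)."""
    row, offset = divmod(index, cols)
    # Odd rows were captured right to left, so flip the column index back
    col = offset if row % 2 == 0 else cols - 1 - offset
    return row, col


def row_major_order(num_images: int, cols: int) -> list[int]:
    """Capture indices rearranged into left-to-right, top-to-bottom order."""
    return sorted(range(num_images), key=lambda i: grid_position(i, cols))


if __name__ == "__main__":
    # Hypothetical 3-column, 2-row scan: captures 3, 4, 5 ran right to left
    print(row_major_order(6, 3))  # [0, 1, 2, 5, 4, 3]
```

The reordered indices can then be used to rename or copy the exported files into the order the stitcher reads them.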

Once again, a very big thanks to all of the people who helped me every step of the way with this project. Y’all rock.