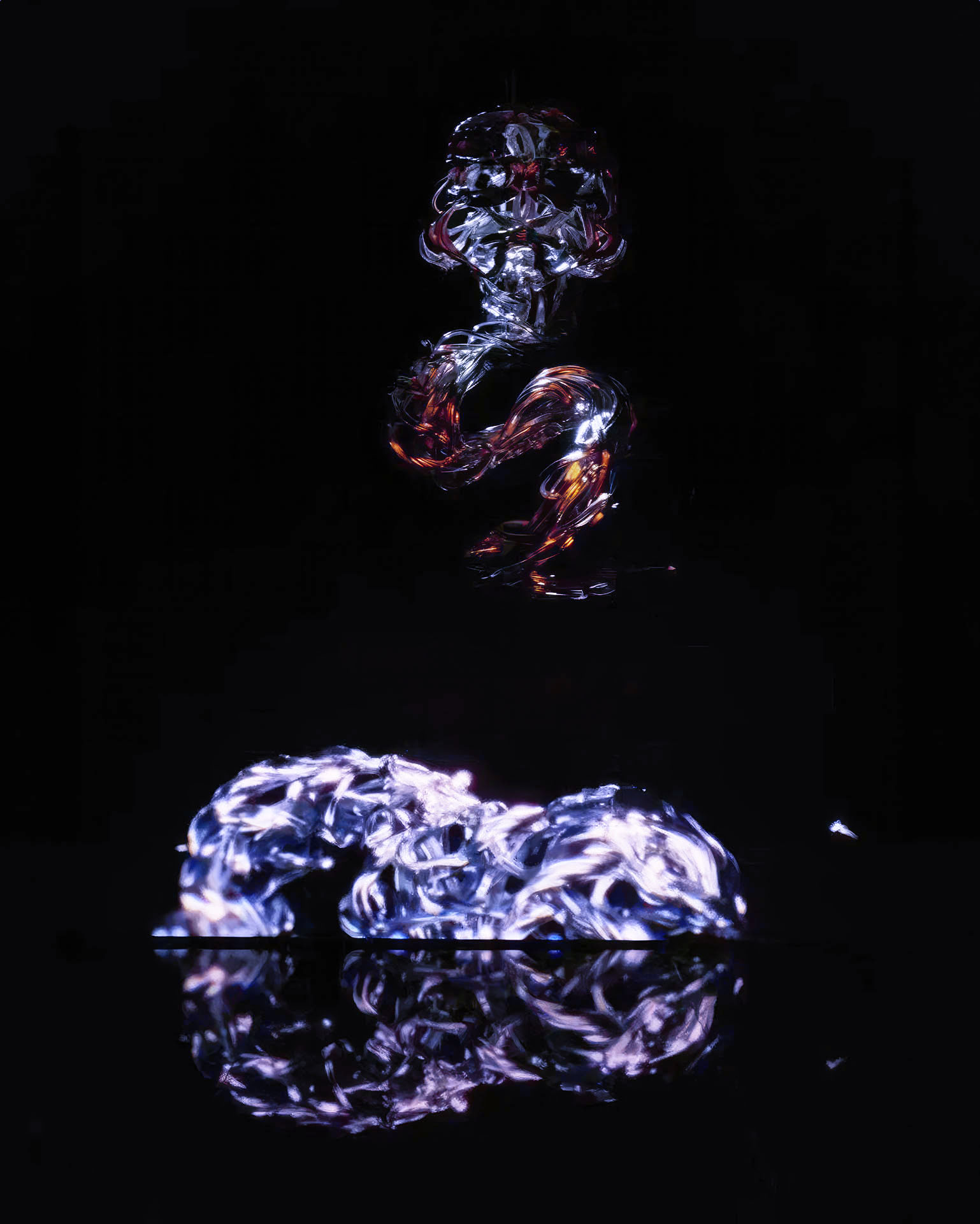

Motion is super intuitive for a person. Your brain says “move” and then your limbs move: you’re typing on a keyboard, or you’re dancing the night away at your friend’s housewarming party. But capturing motion and interpreting that data is a lot more difficult. Sougwen Chung takes motion and interprets it as a form in a virtual space. This process of capturing data and then running it through an AI system is quite interesting, and it produces some weird results. I’m curious what other results this sort of workflow can achieve.