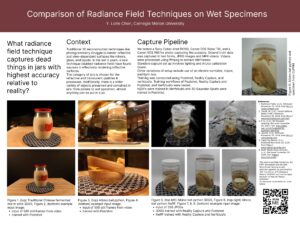

Traditional 3D reconstruction methods such as photogrammetry often struggle with reflective and translucent surfaces like glass, water, and other liquids. These limitations are especially evident in jars. A jar is both reflective and translucent, and its contents can range from pickles to wet specimens. Photogrammetry reconstructs a scene as a point cloud, which is then converted into a polygon mesh with a baked texture. That texture stores one fixed color per surface point. It therefore cannot represent reflections or other view-dependent effects, and those surfaces come out poorly.

Radiance fields and 3D Gaussian Splatting have transformed this space. A radiance field such as a Neural Radiance Field (NeRF) uses a neural network to represent an object so that it can be rendered from arbitrary viewpoints. NeRFs model view-dependent lighting, so they capture details like reflections that shift with the viewing angle. A NeRF is queried with a 5D coordinate: a 3D spatial location plus a 2D viewing direction. It returns a volume density and an emitted radiance (color) for that point. Differentiable volume rendering then accumulates these values along each camera ray, which produces photorealistic novel views of complex scenes.

Alongside NeRFs, 3D Gaussian Splatting starts from a point cloud and represents the scene as many 3D Gaussian "blobs." Each blob has a position, shape, opacity, and view-dependent color. The blobs are projected ("splatted") onto the image and blended together, which gives smooth transitions and convincing depictions of challenging materials. Together, these techniques make it possible to create detailed 3D models of objects like jars that faithfully capture their reflective, translucent, and complex properties.
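Although they differ in representation, both techniques build each pixel with the same front-to-back alpha compositing. The sketch below is a minimal illustration. It assumes NumPy and uses hand-picked toy inputs, not a trained model. The function names are hypothetical.

`nerf_render_ray` implements NeRF's discrete volume-rendering sum along one ray, `C = sum_i T_i * alpha_i * c_i`, where:
- `alpha_i = 1 - exp(-sigma_i * delta_i)`
- `T_i = exp(-sum_{j<i} sigma_j * delta_j)`

`splat_composite_pixel` is a simplified Gaussian-splat blend at one pixel. Each projected Gaussian contributes `alpha_k = opacity_k * exp(-0.5 * d^T Sigma_k^-1 d)`. Real implementations such as nerfstudio or the 3DGS rasterizer batch this on the GPU and add many refinements (spherical-harmonic colors, tiling, culling).

```python
import numpy as np


def nerf_render_ray(sigmas, colors, deltas):
    """NeRF-style discrete volume rendering along a single ray (toy sketch).

    sigmas: (N,) volume densities at N samples along the ray
    colors: (N, 3) RGB radiance at those samples (already view-dependent)
    deltas: (N,) distances between consecutive samples
    Returns the pixel color and the per-sample weights.
    """
    sigmas = np.asarray(sigmas, dtype=float)
    colors = np.asarray(colors, dtype=float)
    deltas = np.asarray(deltas, dtype=float)
    # Opacity of each ray segment: alpha_i = 1 - exp(-sigma_i * delta_i)
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # Transmittance T_i = exp(-sum_{j<i} sigma_j * delta_j): light that survives to sample i
    optical_depth = np.concatenate([[0.0], np.cumsum(sigmas * deltas)[:-1]])
    transmittance = np.exp(-optical_depth)
    weights = transmittance * alphas
    return weights @ colors, weights


def splat_composite_pixel(pixel, means2d, covs2d, opacities, colors, depths):
    """Simplified 3DGS-style front-to-back blending of projected Gaussians at one pixel.

    pixel: (2,) pixel coordinate
    means2d: (K, 2) Gaussian centers after projection to the image
    covs2d: (K, 2, 2) projected 2D covariances (the blob's shape on screen)
    opacities: (K,) learned opacity per Gaussian, in [0, 1]
    colors: (K, 3) RGB per Gaussian (real 3DGS evaluates spherical harmonics here)
    depths: (K,) camera-space depth, used only for sorting
    """
    pixel = np.asarray(pixel, dtype=float)
    means2d = np.asarray(means2d, dtype=float)
    covs2d = np.asarray(covs2d, dtype=float)
    opacities = np.asarray(opacities, dtype=float)
    colors = np.asarray(colors, dtype=float)
    out = np.zeros(3)
    transmittance = 1.0
    for k in np.argsort(depths):  # nearest Gaussian first
        d = pixel - means2d[k]
        # 2D Gaussian falloff exp(-0.5 * d^T Sigma^-1 d), scaled by the learned opacity
        alpha = opacities[k] * np.exp(-0.5 * d @ np.linalg.solve(covs2d[k], d))
        out += transmittance * alpha * colors[k]
        transmittance *= 1.0 - alpha  # light left for the Gaussians behind this one
    return out
```

As a check, consider two elements that each have alpha 0.5, with red in front and green behind. Both functions return about (0.5, 0.25, 0.0). The front element contributes half its color, and the back one only a quarter, because half the light is already blocked. That shared compositing rule is why both methods can reproduce partially transparent, layered surfaces like a jar and its contents.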

Further development:

After a brief conversation with a PhD student who works with splats, and drawing on my own experience, I've concluded that this project needs further development. I plan to write scripts that properly initialize my point clouds with GLOMAP/COLMAP and then train with nerfstudio, an open-source radiance field training framework. Moving from commercial software still in beta to open-source tools will give me more control over how the point clouds are initialized. I'm also changing how I capture the training images, because my previous capture method confused the algorithm.
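Below is a sketch of what such an initialization script could look like. It assumes the `colmap`, `glomap`, and nerfstudio command-line tools are installed and on the PATH.

The COLMAP and GLOMAP calls follow the three-step recipe from the GLOMAP README: feature extraction, exhaustive matching, then global mapping. The `ns-process-data` flags for reusing an existing COLMAP-format model (`--skip-colmap`, `--colmap-model-path`) are an assumption. Check them against `ns-process-data images --help` for your installed version. The function names and the workspace layout are hypothetical. By default the script only prints the commands (a dry run).

```python
import shlex
import subprocess
from pathlib import Path


def build_commands(image_dir, workspace, method="splatfacto"):
    """Return the CLI steps: COLMAP features -> GLOMAP mapping -> nerfstudio training.

    Hypothetical layout: workspace/database.db, workspace/sparse/0, workspace/ns_data.
    """
    image_dir = Path(image_dir)
    workspace = Path(workspace)
    database = workspace / "database.db"
    sparse = workspace / "sparse"
    ns_data = workspace / "ns_data"
    return [
        # 1. Detect features in every training image (COLMAP).
        ["colmap", "feature_extractor",
         "--database_path", str(database), "--image_path", str(image_dir)],
        # 2. Match features between all image pairs (reasonable for a few hundred photos).
        ["colmap", "exhaustive_matcher", "--database_path", str(database)],
        # 3. Global structure-from-motion with GLOMAP; writes a COLMAP-format model under sparse/.
        ["glomap", "mapper", "--database_path", str(database),
         "--image_path", str(image_dir), "--output_path", str(sparse)],
        # 4. Convert to nerfstudio format, reusing the GLOMAP model instead of rerunning COLMAP.
        #    ASSUMPTION: flag names as in recent nerfstudio; verify with `ns-process-data images --help`.
        ["ns-process-data", "images", "--data", str(image_dir),
         "--output-dir", str(ns_data), "--skip-colmap",
         "--colmap-model-path", str((sparse / "0").resolve())],
        # 5. Train a Gaussian splat (splatfacto) or a NeRF (e.g. nerfacto) on the processed data.
        ["ns-train", method, "--data", str(ns_data)],
    ]


def run(commands, dry_run=True):
    """Print every step; execute it only when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # raise on the first failing step


if __name__ == "__main__":
    DRY_RUN = True  # set to False to actually invoke the tools
    images, workspace = "jar_photos", "jar_workspace"  # hypothetical paths
    if not DRY_RUN:
        (Path(workspace) / "sparse").mkdir(parents=True, exist_ok=True)
    run(build_commands(images, workspace), dry_run=DRY_RUN)
```

Keeping each step as an explicit command makes the initialization easy to adjust. For example, you can swap `exhaustive_matcher` for COLMAP's `sequential_matcher` when frames come from a video. You can also pass `method="nerfacto"` to train a NeRF instead of a splat on the same data.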