Concept

I seek to pixelate a flat, two-dimensional image in TouchDesigner and imbue it with three-dimensional depth. My inquiry begins with a simple question: how can I breathe spatial life into a static photograph?

The answer lies in crafting a depth map—a blueprint of the image’s spatial structure. By assigning each pixel a Z-axis offset proportional to its distance from the viewer, I can orchestrate a visual symphony where pixels farther from the camera drift deeper into the frame, creating a dynamic and evocative illusion of dimensionality.

Outcome

Capture System

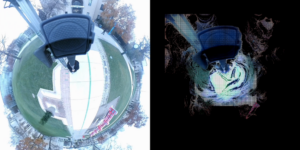

To match my concept, I decided to capture a bird’s-eye view. With a top-down perspective, pixel movement can be restricted to the downward direction, with each pixel displaced according to its distance from the camera. To achieve this, I used a 360° camera mounted on a selfie stick. On a sunny afternoon, I walked around my campus, holding the camera aloft. While the process drew some attention, it yielded the ideal footage for my project.

Challenges

Generating depth maps from 360° panoramic images proved to be a significant challenge. My initial plan was to use a stereo camera to capture left and right channel images, then apply OpenCV’s stereo matching algorithms to extract depth information from the stereo pair. However, when I fed the 360° panoramic images into OpenCV, the heavy distortion at the edges caused the computation to break down.

Moreover, using OpenCV to extract depth maps posed another inherent issue: the generated depth maps did not align perfectly with either the left or right channel color images, potentially causing inaccuracies in subsequent color-depth mapping in TouchDesigner.
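For reference, the stereo approach described above can be sketched roughly as follows. This is a minimal illustration, not the exact code I ran: it assumes a rectified grayscale stereo pair, and the matcher settings, `focal_px`, and `baseline_m` are placeholder values chosen for the example. Depth follows from disparity as Z = f · B / d, which is also why heavily distorted panoramas break the computation: the matcher expects corresponding points to lie on the same image row.

```python
import numpy as np


def disparity_to_depth(disparity, focal_px, baseline_m):
    """Convert a disparity map (pixels) to depth (meters) via Z = f * B / d.

    Pixels with no valid match (disparity <= 0) are set to infinity.
    focal_px and baseline_m are assumed calibration values.
    """
    disparity = np.asarray(disparity, dtype=np.float32)
    depth = np.full(disparity.shape, np.inf, dtype=np.float32)
    valid = disparity > 0
    depth[valid] = focal_px * baseline_m / disparity[valid]
    return depth


def stereo_depth(left_gray, right_gray, focal_px, baseline_m):
    """Estimate depth from a rectified 8-bit grayscale stereo pair."""
    import cv2  # imported here so disparity_to_depth works without OpenCV

    # Placeholder settings; numDisparities must be a multiple of 16.
    matcher = cv2.StereoSGBM_create(minDisparity=0, numDisparities=64, blockSize=7)
    # compute() returns fixed-point disparities scaled by 16.
    disparity = matcher.compute(left_gray, right_gray).astype(np.float32) / 16.0
    return disparity_to_depth(disparity, focal_px, baseline_m)
```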

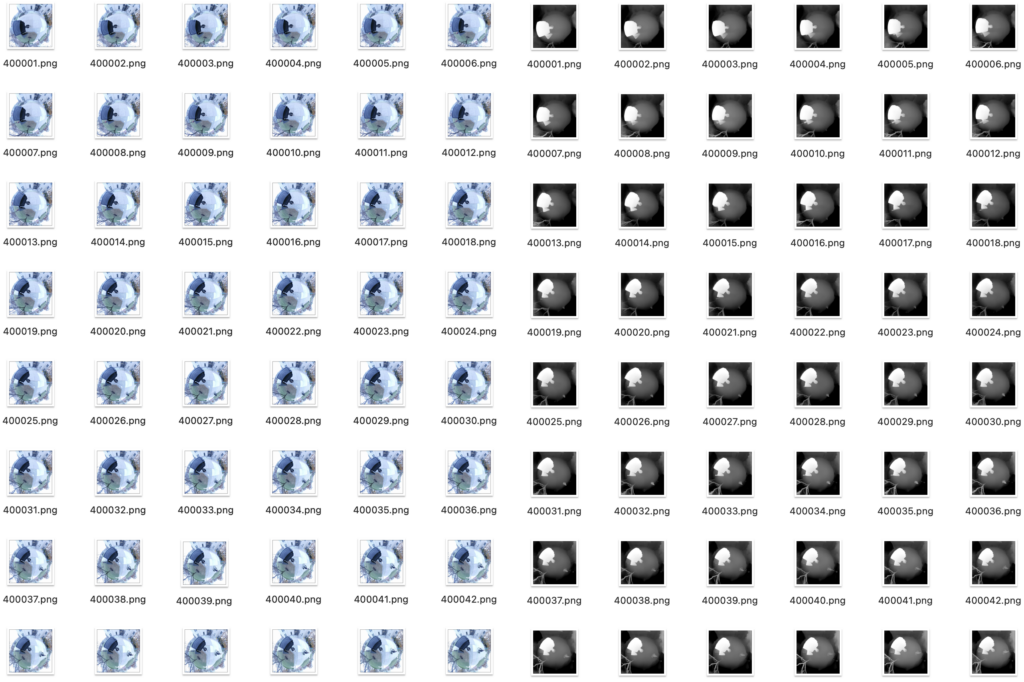

Fortunately, I discovered a pre-trained AI model online, Image Depth Map, that could directly convert photos into depth maps and provided a JavaScript API. Since my source material was a video file, I developed the following workflow:

- Extract frames from the video at 24 frames per second (fps).

- Batch process 3,000 images through the Depth AI model to generate corresponding depth maps.

- Reassemble the depth map sequence into a depth video at 24 fps.

This workflow enabled me to produce a depth video precisely aligned with the original color video.
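The three steps above can be sketched as a small script. This is an illustrative outline only: it assumes `ffmpeg` is installed, the directory and file names are hypothetical, and the depth model call itself is left out because the model was driven through its JavaScript API. The key design point is that each depth image keeps the file name of its source frame, which is what keeps the two videos aligned frame by frame.

```python
from pathlib import Path

FPS = 24  # frame rate used for both extraction and reassembly


def extract_frames_cmd(video, frames_dir, fps=FPS):
    """Build the ffmpeg command that dumps a video to numbered PNG frames."""
    pattern = str(Path(frames_dir) / "%05d.png")
    return ["ffmpeg", "-i", str(video), "-vf", f"fps={fps}", pattern]


def depth_jobs(frames_dir, depth_dir):
    """Pair every frame with a depth output path of the same name."""
    frames = sorted(Path(frames_dir).glob("*.png"))
    return [(frame, Path(depth_dir) / frame.name) for frame in frames]


def assemble_video_cmd(depth_dir, out_video, fps=FPS):
    """Build the ffmpeg command that joins numbered depth PNGs into a video."""
    pattern = str(Path(depth_dir) / "%05d.png")
    return ["ffmpeg", "-framerate", str(fps), "-i", pattern,
            "-c:v", "libx264", "-pix_fmt", "yuv420p", str(out_video)]
```

Each command list can be passed to `subprocess.run(cmd, check=True)`; the depth model runs between the two ffmpeg calls, once per pair returned by `depth_jobs`.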

Design

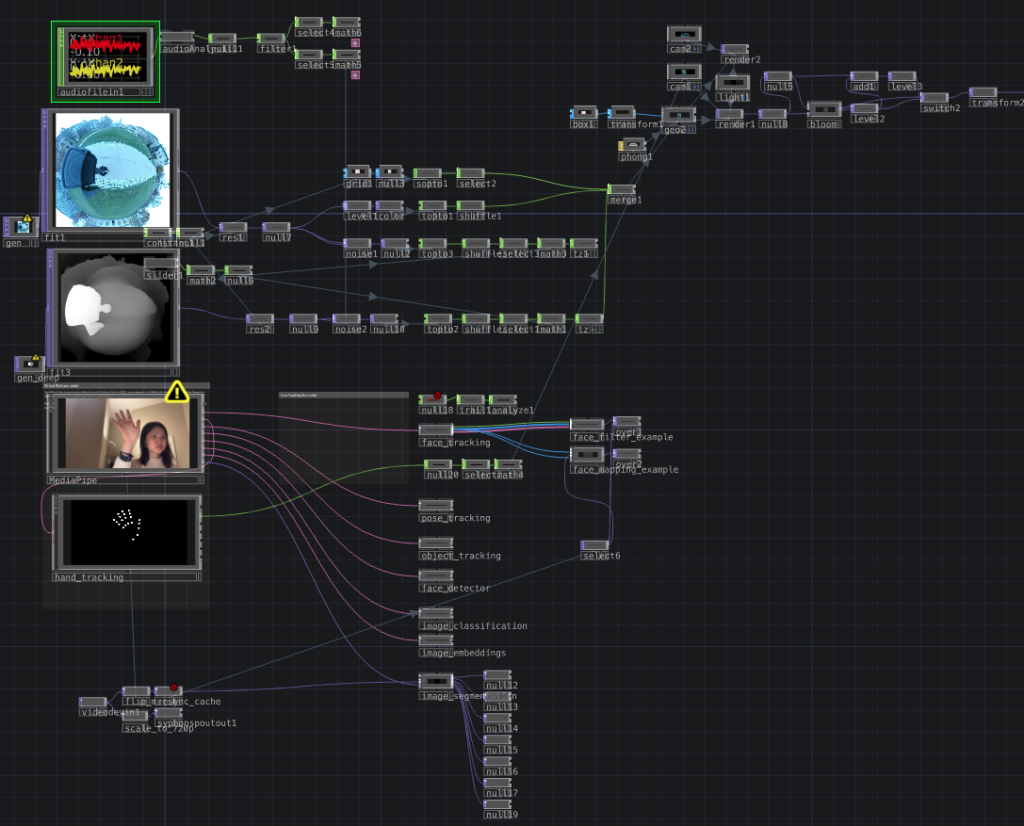

The next step was to integrate the depth video with the color video in TouchDesigner and enhance the sense of spatial motion along the Z-axis. I scaled both the original video and depth video to a resolution of 300×300. Using the depth map, I extracted the color channel values of each pixel, which represented the distance of each point from the camera. These values were mapped to the corresponding pixels in the color video, enabling them to move along the Z-axis. Pixels closer to the camera moved less, while those farther away moved more.
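Outside TouchDesigner, the same mapping can be expressed in a few lines of NumPy. This is a sketch of the idea rather than the actual network I built: it assumes the depth frame is normalized to [0, 1] with larger values meaning farther from the camera, and `max_offset` is a made-up scale factor. Each pixel becomes a point whose X and Y come from its grid position and whose Z is pushed back in proportion to its depth value.

```python
import numpy as np


def displace_pixels(color, depth, max_offset=1.0):
    """Turn a color frame and a depth frame into displaced 3D points.

    color: (H, W, C) array; depth: (H, W) array in [0, 1], where larger
    values are assumed to mean farther from the camera.
    Returns (H*W, 3) positions and (H*W, C) colors in matching order.
    """
    h, w = depth.shape
    ys, xs = np.mgrid[0:h, 0:w]
    # Center the grid so x and y both span [-0.5, 0.5].
    x = xs / max(w - 1, 1) - 0.5
    y = 0.5 - ys / max(h - 1, 1)
    # Farther pixels move more along -Z; the nearest pixels barely move.
    z = -depth.astype(np.float32) * max_offset
    positions = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    colors = color.reshape(-1, color.shape[-1])
    return positions, colors
```

At 300×300 this produces 90,000 points per frame, which is small enough to update every frame.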

Observing how the 360° camera captured the Earth’s curvature, I had an idea: could I let viewers “touch” the Earth depicted in the video? To realize this, I integrated MediaPipe’s hand-tracking feature. The final TouchDesigner setup takes four inputs: an audio stream, a video stream, a depth map stream, and real-time hand capture. The result is an interactive “Earth” that moves to the rhythm of the music, with the interaction between particles and music controlled in real time by the user’s beats.
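One way to think about the “touch” interaction is as a local push applied around the tracked fingertip. The sketch below is a hypothetical illustration, not the logic of my TouchDesigner network: `radius` and `strength` are invented parameters, and it assumes particles live in centered coordinates with x and y in [-0.5, 0.5]. MediaPipe reports hand landmarks in normalized image coordinates (0 to 1, origin at the top-left), so the fingertip is first converted to the same centered space.

```python
import numpy as np


def touch_push(positions, fingertip_xy, radius=0.15, strength=0.3):
    """Push particles near a fingertip along -Z with a linear falloff.

    positions: (N, 3) array in centered coordinates.
    fingertip_xy: (x, y) normalized to [0, 1], origin at the top-left.
    radius and strength are illustrative values, not tuned settings.
    """
    positions = np.asarray(positions, dtype=np.float32).copy()
    # Convert the normalized landmark to centered coordinates (y flipped).
    fx = fingertip_xy[0] - 0.5
    fy = 0.5 - fingertip_xy[1]
    dist = np.hypot(positions[:, 0] - fx, positions[:, 1] - fy)
    # 1 at the fingertip, fading to 0 at the edge of the radius.
    falloff = np.clip(1.0 - dist / radius, 0.0, 1.0)
    positions[:, 2] -= strength * falloff
    return positions
```

An audio level could scale `strength` in the same way, so louder beats push the particles farther.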

Critical Thinking

- Depth map generation was the key step in the entire project. The pre-trained AI model made it possible by overcoming the limitations I ran into with traditional computer vision methods.

- The videos shot with the 360° camera are interesting in themselves. The selfie stick in particular appears in every frame as a support close to the lens, a realistic detail that the depth map reflects accurately.

- Although I considered using a drone to shoot a bird’s-eye view, the 360° camera allowed me to realize the interactive ideas in my design. Overall, the combination of tools and creativity provided inspiration for further artistic exploration.