TLDR: Tested ways to get high-quality 3D Gaussian splats (3DGS) of dead things. Thanks to Leo, Harrison, Nica, Rich, and Golan for all the advice + help! Methods tested:

- iPhone 14 camera (lantern fly) -> ~30 jpegs

- iPhone 14 camera + macro lens attachment (lantern fly) -> ~350 jpegs, 180 jpegs

- Canon EOS R6 + macro lens 50mm f/1.8 (lantern fly) -> 464 jpegs, 312 jpegs

- Canon EOS R6 + macro lens 50mm f/1.8 (snake) -> 588 jpegs, 382 jpegs

- Canon EOS R6 + macro lens 50mm f/1.8 (axolotl) -> 244 jpegs

- Canon EOS R6 (snake) -> 1 mp4 7.56GB, 2 mp4 6.38GB

- Canon EOS R6 (rice) -> 1 mp4 4.26GB, 3 mp4 9.05GB

- Canon EOS R6 (rice) -> 589 jpegs

- Canon EOS R6 (axolotl) -> 3 mp4 5.94GB

- Canon EOS R6 (honey nuts) -> 2 mp4 3.98GB

I will admit that I didn’t have any research question. I only wanted to play around with some cool tools. I wanted to use the robot and take pictures with it. I quickly simplified this idea, due to time constraints and temporarily ‘missing’ parts, and took up Golan’s recommendation to work with the 3D Gaussian Splatting program Leo set up on the Studio’s computer in combo with the robot arm.

That solved the “how” part of my nonexistent research question. Now all I needed was a “what”. Perhaps it was just the sheer number of them (and how they managed to invade the sanctuary called my humble abode), but I had/have a small fixation on lantern flies. Thus, the invasive lantern fly became subject 00.

So I tested 3DGS out on the tiny little invader (a perfectly intact corpse found just outside the Studio’s door), using very simple capture methods, aka my phone. I took about 30-50 images of the bug and then threw them into the EasyGaussian Python script.

Hmmm. Results are… questionable.

Same for this one here…

This warranted some changes in capturing technique. First, do research on how others are capturing images for 3DGS. See this website and this Discord post, see that they’re both using turntables, and immediately think that you need to make a turntable. Ask Harrison if the Studio has a Lazy Susan/turntable, realize that we can’t find it, and let Harrison make a Lazy Susan out of a piece of wood and cardboard (thank you Harrison!). Tape a page of calibration targets onto said Lazy Susan, stab the lantern fly corpse, and start taking photos.

Still not great. Realize that your phone and the macro lens you borrowed aren’t cutting it anymore, borrow a Canon EOS R6 from Ideate, and take (bad) photos with low apertures and high ISO. Do not realize that these settings aren’t ideal and proceed to take many photos of your lantern fly corpse.

Doom scroll on IG and find someone doing Gaussian splats. Feel the inclination to try out what they’re doing and use PostShot to train a 3DGS.

Compare the difference between PostShot and the original program. These renderings felt like the limit of what 3DGS could do with simple lantern flies. Therefore, we change the subject to something of greater difficulty: reflective things.

Ask to borrow dead things in jars from Professor Rich Pell, run to the Center for PostNatural History in the middle of a school day, run back to school, and start taking photos of said dead things in jars. Marvel at the dead thing in the jar.

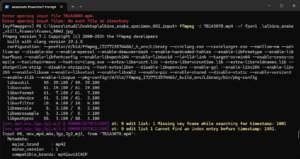

Figure out that taking hundreds of photos takes too long, and start taking videos instead. Take the videos, run them through ffmpeg to extract the frames, and run 3DGS on those frames.
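For anyone wanting to reproduce the video-to-frames step, here is a minimal sketch of what it could look like from Python. It is not the exact command used in this project: the function names, the `frame_%05d.jpg` naming pattern, the default of 2 frames per second, and the JPEG quality value are all assumptions for illustration. It only relies on standard ffmpeg options (`-i`, the `fps` video filter, and `-qscale:v`), and it requires ffmpeg to be installed and on your PATH.

```python
import subprocess
from pathlib import Path


def build_ffmpeg_command(video: str, out_dir: str, fps: float = 2.0) -> list[str]:
    """Build an ffmpeg command that samples `fps` frames per second as JPEGs.

    The sampling rate and output naming pattern are illustrative assumptions.
    """
    pattern = str(Path(out_dir) / "frame_%05d.jpg")
    return [
        "ffmpeg",
        "-i", video,            # input video file
        "-vf", f"fps={fps}",    # keep this many frames per second of video
        "-qscale:v", "2",       # high JPEG quality (lower number = better)
        pattern,                # numbered output files
    ]


def extract_frames(video: str, out_dir: str, fps: float = 2.0) -> None:
    """Create the output folder and run ffmpeg on one video."""
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_ffmpeg_command(video, out_dir, fps), check=True)
```

Calling `extract_frames("snake.mp4", "snake_frames", fps=2)` (hypothetical file names) would fill `snake_frames/` with numbered JPEGs that can then be handed to the 3DGS tool. A lower `fps` gives fewer, more widely spaced frames; how many frames are enough depends on the subject and is something to experiment with.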

I think the above three videos are the most successful examples of 3DGS in this project. They achieve the clarity I was hoping for, and they allow you to view the object from multiple angles.

The following videos are some recordings of interesting results and process.

Reflection:

I think this method of capturing objects only really lends itself to being presented in real time, via a virtual reality experience or video run-throughs of the object in virtual 3D space. I will say that it affords looking at a reflective object from multiple POVs. During the capture process, I really enjoyed being able to view the snake and axolotl from different perspectives. In the museum setting, you’re only really able to view them from a couple of perspectives, especially since these specimens are behind a glass door (due to their fragility). It would be kinda cool to be able to see various specimens up close and from various angles.

I think I had a couple of learning curves, with the camera, software, and preprocessing of input data. I made some mistakes with the aperture and ISO settings, leading to noisy data. I also could’ve sped up my workflow by going the video-to-frames route sooner.

I would like to pursue my initial ambition of using the robot arm. First, it would probably regularize the frames in the video. Second, I’m guessing that the steadiness of preprogrammed motion would help decrease motion blur, something I ended up capturing but was too lazy to remove from my input set. Third, lighting is still a bit of a challenge: I think 3DGS requires the lighting relative to the object to stay constant the entire time. Overall, I think this workflow should be applied to a larger dataset to create some sort of VR museum of dead things in jars.
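The motion-blurred frames mentioned above could probably be filtered out automatically. One common heuristic (not something I actually ran in this project) is the variance of the Laplacian: sharp images have strong edges, so the score is high, while blurry ones score low. The sketch below is pure Python and works on a grid of grayscale values; loading each frame into such a grid (e.g. with Pillow or OpenCV) is left out, and the function names and the threshold are hypothetical and would need tuning per dataset.

```python
from statistics import pvariance


def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian over a 2D grid of grayscale values.

    `gray` is a list of rows (at least 3x3). Higher scores mean more edge
    detail, which usually means a sharper frame.
    """
    height, width = len(gray), len(gray[0])
    responses = []
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            responses.append(
                gray[y - 1][x] + gray[y + 1][x]
                + gray[y][x - 1] + gray[y][x + 1]
                - 4 * gray[y][x]
            )
    return pvariance(responses)


def keep_sharp(frames, threshold):
    """Return the names of frames whose score is at least `threshold`.

    `frames` maps a frame name to its grayscale grid. The threshold is an
    assumption to be tuned by looking at a few known-good and known-bad frames.
    """
    return [name for name, gray in frames.items()
            if laplacian_variance(gray) >= threshold]
```

In practice I would score every extracted frame, eyeball a few of the lowest-scoring ones to pick a threshold, and only pass the surviving frames to 3DGS.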

To Do List:

- Get better recordings/documentation of the splats -> I need to learn how to use the PostShot software for fly-throughs.

- House these splats in some VR space for people to look at from multiple angles at their own leisure, and clean them up before uploading them to a website. Goal is to make a museum/library of dead things (in jars) -> see this.

- Revisit failure case: I think it would be cool to paint these splats onto a canvas, sort of using the splat as a painting reference.

- Automate some of the workflow further: parse through frames from video and remove unclear images, work with the robot.

- More dead things in jars! Pickles! Mice! More Snakes!