Updates for final exhibition:

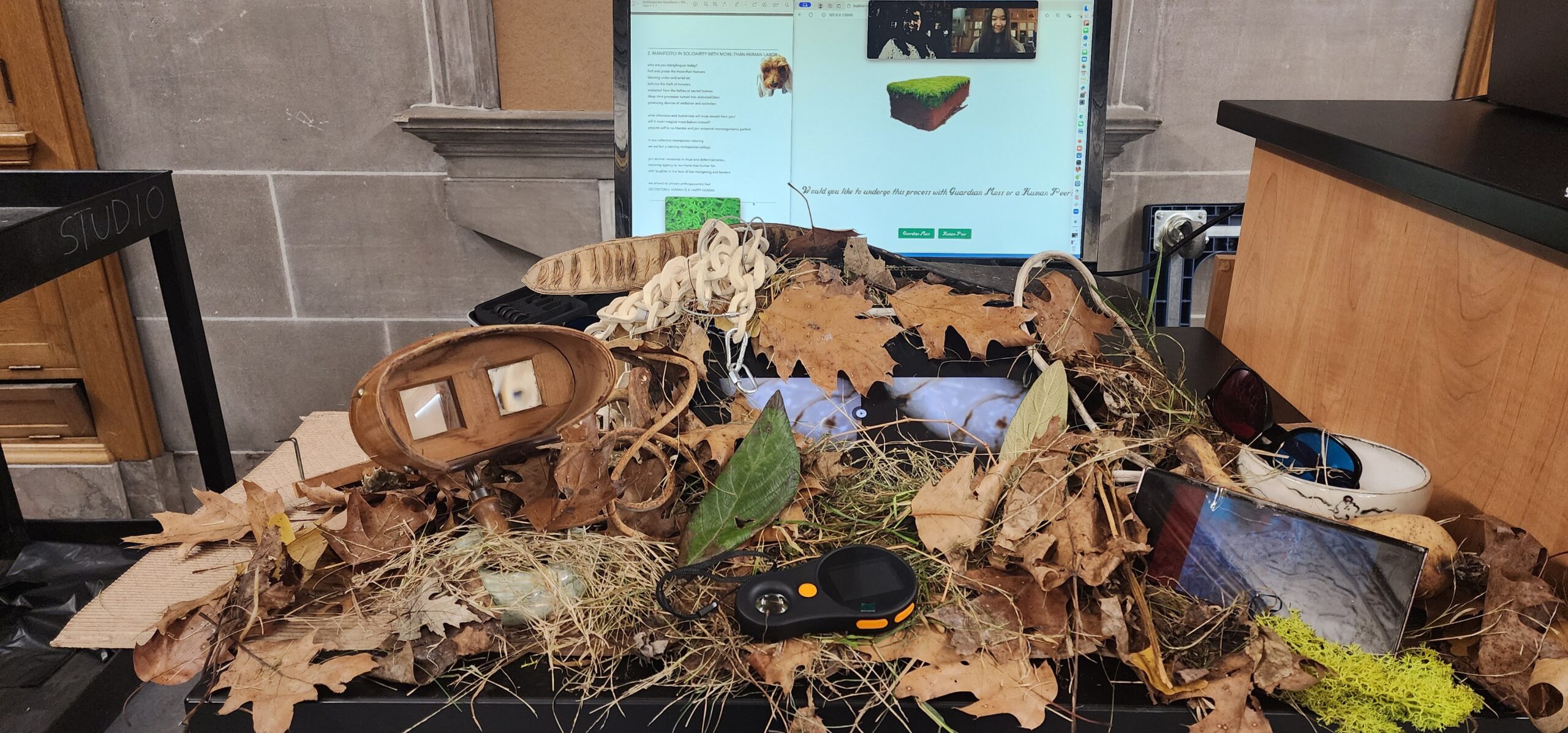

For the final exhibition, I was mainly thinking about how to bring everything into an interactive experience for the exhibition visitors. My exhibition station had a few components:

Large monitor: shows a variety of media to set the tone: a manifesto of ideas driving the project, an animation/introduction to the project, and a visual of pixels being remapped from one person to another.

Center screen: video and audio of non-human kin at magnified scales.

Left screen: stereo pairs of focus-stacked moss; the screen was shown with a stereo viewer to facilitate the 3D visual effect.

Right screen: red-blue anaglyphs of focus-stacked moss; red-blue glasses were provided nearby so visitors could enjoy the effect.

Handheld microscope: provided so visitors could see even more of the installation at scales they wouldn’t otherwise experience.

The screens were surrounded by grass and leaves from my yard (I tried to choose something that would create minimal disruption to the natural surroundings) and some dried plants a friend gifted me in the past. I did this so the media on the screens seemed to merge into the grass/leaves. As visitors leaned in to engage with the material (e.g. the stereo viewer), they would also find themselves getting closer to and smelling the grassy/vegetal scents.

Because I used my phone as one of the screens, I forgot to take a photo early in the exhibition (when everything was assembled more nicely) and wasn’t able to photograph the full setup, but here’s an image of the overall experience.

Project summary from before:

On the last episode of alice’s ExCap projects… I was playing around with stereo images while reflecting on the history of photography, the spectacle, stereoscopy and voyeurism, and “invisible” non-human labor and non-human physical/temporal scales and worlds. I was getting lost in ideas, so for this current iteration, I wanted to just focus on building the stereo macro capture pipeline I’d been envisioning (initially because I wanted to explore ways to bring us closer to non-human worlds* and then think about ways to subvert the way that we are gazing and capturing/extracting with our eyes… but I need more time to think about how to actually get that concept across :’)).

*e.g. these stereo videos made at a recent residency I was at (these are “fake stereo”) really spurred the exploration into stereo

Anyway… so in short, my goal for this draft was to achieve a setup for 360, stereoscopic, focus-stacked macro images using my test object, moss. At this point, I may have lost track a bit of the exact reasons for “why,” but I’ve been thinking so much about the ideas in previous projects that I wanted to just see if I can do this tech setup I’ve been thinking about for once and see where it takes me/what ideas it generates… At the very least, now I know I more or less have this tool at my disposal. I do have ideas about turning these images into collage landscapes (e.g. “trees” made of moss, “soil” made of skin) accompanied by soundscapes, playing around with glitches in focus-stacking, and “drawing” through Helicon’s focus stacking algorithm visualization (highlighting the hidden labor of algorithms in a way)… but anyway… here’s documentation of a working-ish pipeline for now.

Feedback request:

I would love to hear any thoughts on what images/what aspects of images in this set appeal to you!

1. Macro focus-stacking

Panning through focus distances using pro video mode in the Samsung Galaxy S22 Ultra’s default camera app, with a macro lens attachment

Focus stacked via HeliconFocus
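For the curious, here’s a minimal sketch of the general idea behind focus stacking: for each pixel, keep the value from whichever frame is sharpest at that spot. This is not HeliconFocus’s actual algorithm (its internals aren’t described here). It assumes grayscale frames stored as nested lists, and the function names `sharpness` and `focus_stack` are made up for illustration.

```python
def sharpness(img, y, x):
    """Absolute 4-neighbour Laplacian at (y, x); neighbours are clamped at the edges."""
    h, w = len(img), len(img[0])
    up = img[max(y - 1, 0)][x]
    down = img[min(y + 1, h - 1)][x]
    left = img[y][max(x - 1, 0)]
    right = img[y][min(x + 1, w - 1)]
    return abs(4 * img[y][x] - up - down - left - right)


def focus_stack(frames):
    """For each pixel, keep the value from the frame that is sharpest there."""
    h, w = len(frames[0]), len(frames[0][0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Ties go to the earliest frame in the list.
            best = max(frames, key=lambda f: sharpness(f, y, x))
            out[y][x] = best[y][x]
    return out
```

Real tools work on full-colour images, align the frames first, and smooth the per-pixel choices, which is probably part of why glitches show up when the subject moves between frames.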

Actually, I love seeing Helicon’s visualizations:

And when things “glitch” a little:

I took freehand photos of my dog’s eye at different focus distances (despite being a very active dog, she mostly only moved her eyebrow and pupil here).

2. Stereo macro focus-stacked

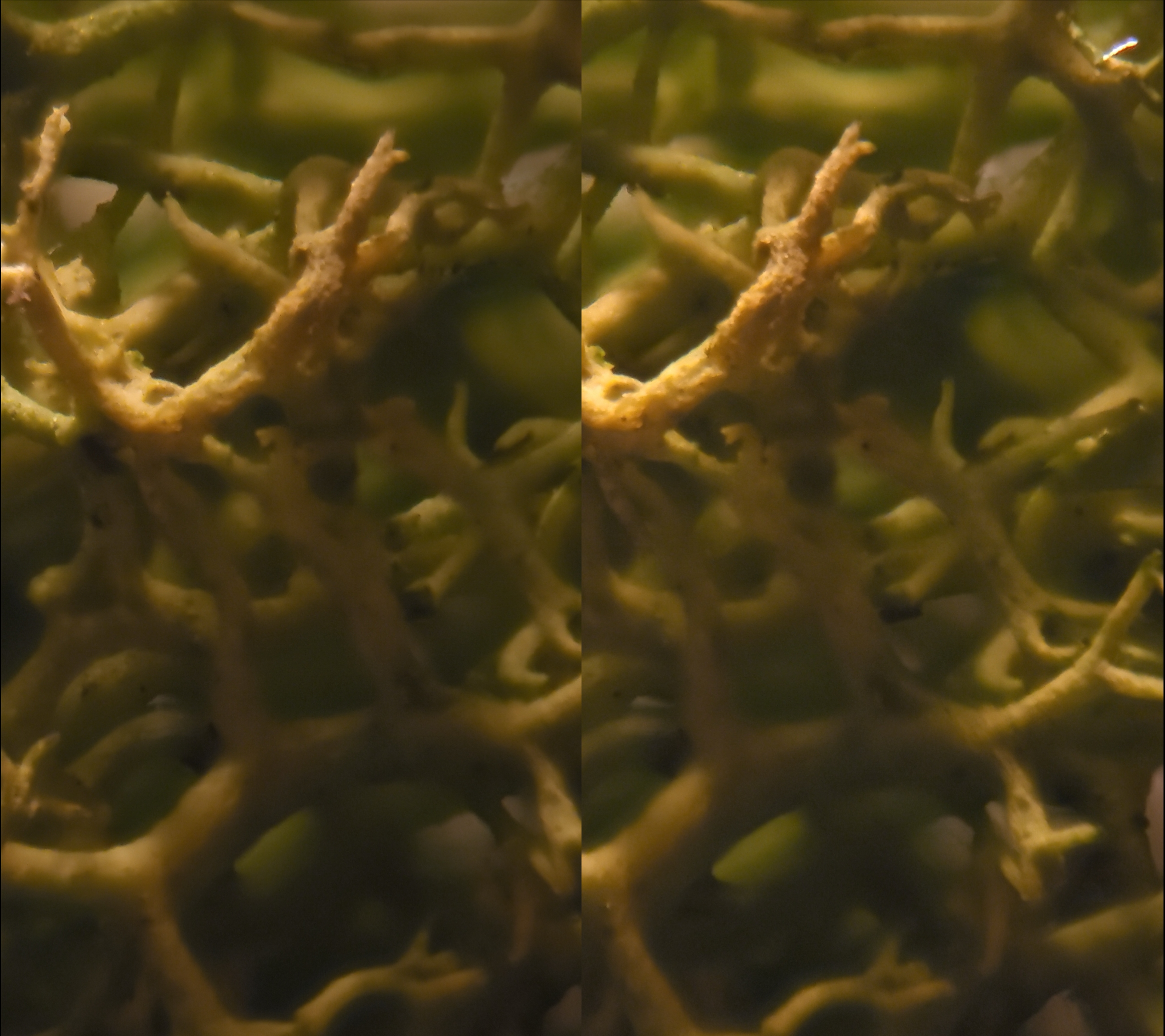

Stereoscopic pair of focus-stacked images. I recommend viewing this by crossing your eyes. Change the zoom level so the images are smaller if you’re having trouble.
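A minimal sketch of how a pair like this can be laid out, assuming each image is a list of pixel rows. For cross-eyed viewing the right-eye image goes on the left, so the two views swap sides compared with a parallel-view pair. The function name `side_by_side` is hypothetical.

```python
def side_by_side(left_eye, right_eye, cross_eyed=True):
    """Join two equal-height images row by row into one stereo pair.

    With cross_eyed=True the right-eye image is placed on the left.
    """
    if len(left_eye) != len(right_eye):
        raise ValueError("images must have the same height")
    first, second = (right_eye, left_eye) if cross_eyed else (left_eye, right_eye)
    return [row_a + row_b for row_a, row_b in zip(first, second)]
```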

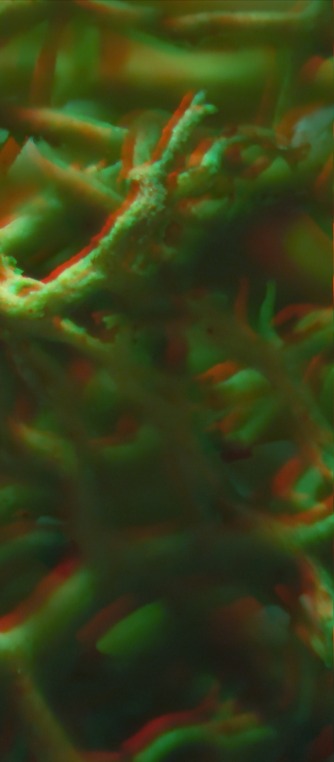

Red-cyan anaglyph of the focus-stacked image. This should be viewed through red-blue (red-cyan) glasses.
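A minimal sketch of the usual red-cyan recipe, assuming both images are the same size and stored as rows of `(r, g, b)` tuples: the red channel comes from the left-eye image and the green and blue channels come from the right-eye image. The function name `red_cyan_anaglyph` is hypothetical, and this is not necessarily how the image above was produced.

```python
def red_cyan_anaglyph(left_eye, right_eye):
    """Take red from the left-eye image and green/blue from the right-eye image."""
    return [
        [(l[0], r[1], r[2]) for l, r in zip(left_row, right_row)]
        for left_row, right_row in zip(left_eye, right_eye)
    ]
```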

3. 360 Stereo, macro, focus-stacked

I would say this is an example of focus stacking working really well, because I positioned the object/camera relative to each other in a way that allowed me to capture useful information throughout the entire range of focus distances available on my phone. This is more difficult when capturing 360 from a fixed viewpoint.

Setup:

- Take stereo pairs of videos panning through different focus distances, generating the stereo pair by scooting the camera left/right on the rail

- Rotate turntable holding object and repeat. To reduce vibrations from manipulating the camera app on my phone, I controlled my phone via Vysor on my laptop.

- Convert videos (74 total, 37 pairs) into folders of images (HeliconFocus cannot batch process videos)

- Batch process focus stacking in HeliconFocus

- Take all “focused” images and programmatically arrange into left/right focused stereo pairs

- Likewise, programmatically or manually arrange into left/right focused anaglyphs
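The bookkeeping in these steps can be sketched roughly as below. Everything here is an assumption for illustration: the file naming scheme (`<angle index>_<L or R>.mp4`), the output folder layout, and the use of ffmpeg to split videos into frames (it is one common tool for this; the steps above don’t say which tool was used).

```python
from pathlib import Path
import re

# Hypothetical naming scheme: "<angle index>_<L or R>.mp4", e.g. "07_L.mp4".
NAME = re.compile(r"(\d+)_([LR])\.mp4$")


def pair_videos(names):
    """Group video file names into (left, right) pairs, ordered by angle index."""
    by_angle = {}
    for name in names:
        match = NAME.search(name)
        if match is None:
            raise ValueError(f"unexpected file name: {name}")
        by_angle.setdefault(int(match.group(1)), {})[match.group(2)] = name
    pairs = []
    for angle in sorted(by_angle):
        sides = by_angle[angle]
        if set(sides) != {"L", "R"}:
            raise ValueError(f"angle {angle} is missing a left or right video")
        pairs.append((sides["L"], sides["R"]))
    return pairs


def frame_extraction_command(video, out_root="frames"):
    """Build an ffmpeg command that writes every frame of `video` as a numbered PNG."""
    out_dir = Path(out_root) / Path(video).stem
    return ["ffmpeg", "-i", video, (out_dir / "frame_%04d.png").as_posix()]
```

Each command could then be run with `subprocess.run(command, check=True)` after creating the output folder, giving one folder of frames per video for batch focus stacking.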

Overall, given that the camera rail isn’t essential (I mostly just used it to help with stereo pairs; the light isn’t really necessary either given the right time of day), and functional phone macro lenses are fairly cheap, this was a pretty low-cost setup. I also want to eventually develop a more portable setup (which is why I wanted to work with my phone) to avoid having to extract things from nature. However, the problem of simultaneous stereo capture remains: I might need to eventually transition away from a phone in order to capture a simultaneous stereo pair at macro scales (lenses need to be closer together than phones allow).

Focus-stacked stereo pairs stitched together. I recommend viewing this by crossing your eyes.

Focus-stacked red-blue anaglyphs stitched together. This needs to be viewed via the red-blue glasses.

The Next Steps:

I’m still interested in my original ideas around highlighting invisible non-human labor, so I’ll think about the possibilities of intersecting that with the work here. I think I’ll try to package this/add some conceptual layers on top of what’s here in order to create a roughly (hopefully?) 2-minute interactive experience for exhibition attendees.

Some sample explorations of close-up body capture

) when I drive and I wanted to preserve something of that encountering and the sense of mourning that drove this project through a manual/personal approach to sifting through the data. Further, when I was collecting data with the intention of sifting through it algorithmically for roadkill, I found myself anxious to find roadkill, which started to completely distort how I wanted to view this project.
