For my final I decided to build upon my typology from the beginning of the semester. If you’re curious, here’s the link to my original project.

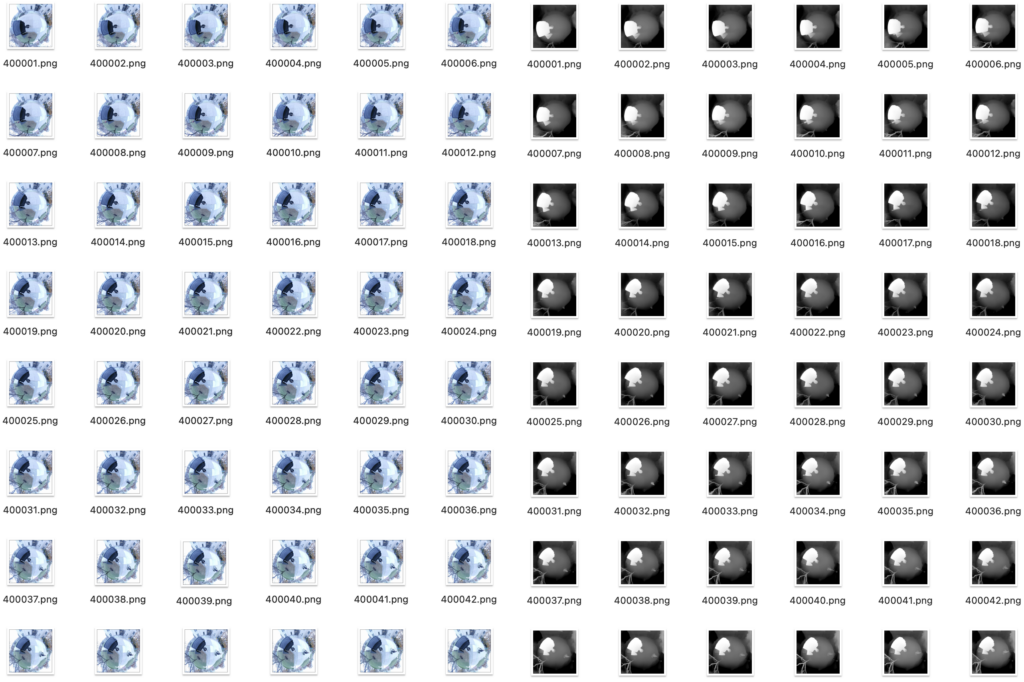

In short, I captured the undersides of Pittsburgh’s worst bridges through photogrammetry. Here are a few of the results.

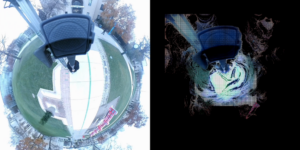

For the final project, I wanted to revise and refine the presentation of my scans and also expand the collection with more bridges. Unfortunately, I spent so much time worrying about taking new and better captures that I didn’t focus as much as I should have on the final output, which I ideally wanted to be either an augmented reality experience or a 3D walkthrough in Unity. That being said, I do have a very rudimentary (emphasis on rudimentary) draft of the augmented reality experience.

https://www.youtube.com/watch?v=FGTCfdp8x4Q

(YouTube is insisting that this video be uploaded as a Short, so it won’t embed properly.)

As I work towards next Friday, there are a few things I’d still like to implement. For starters, I want to add some sort of text that pops up on each bridge with some key facts about what you’re looking at. I also currently don’t have an easy “delete” button in the UI to remove a bridge, and I haven’t figured out how to add one yet. Lower on the priority list would be to get a few more bridge captures, but that’s less important than the app itself at this point. Finally, I cannot figure out why all the bridges are floating somewhat off the ground, so if anyone has any recommendations I’d love to hear them.

I’d also like feedback on whether this is the most interesting way to present the bridges. I really like how weird it is to see an entire bridge just, like, floating in your world where it doesn’t belong, but I’m open to trying something else if there’s a better way to do it. The other option I’m tossing around would be to try some sort of first-person walkthrough in Unity instead of augmented reality.

I just downloaded Unity on Monday and I think I’ve put in close to 20 hours over 2.5 days trying to get this to work… But after restarting 17 times I think I’ve started to get the hang of it. This is totally outside my scope of knowledge, so what should have been a fairly simple task became one of the most frustrating experiences of my semester. Further help with Unity would be very much appreciated, if anyone has the time!! If I see one more “build failed” error message, I might just throw my computer into the Monongahela. Either way, I’m proud of myself for having a semi-functioning app at all, because that’s not something I ever thought I’d be able to do.

Thanks for listening! Happy end of the semester!!!!