When Ginger came to teach us spectroscopy and we were asked to collect samples outside to look at under a microscope, I unexpectedly discovered a microscopic bug on a leaf I had picked up (I couldn’t see it with my plain, human eyes). This made me curious about what else I was missing. Then, for my first project, I spent so much time in Schenley Park, trying to exhaust it à la Perec. In how many ways could I get to know Schenley Park? What was interesting in it?

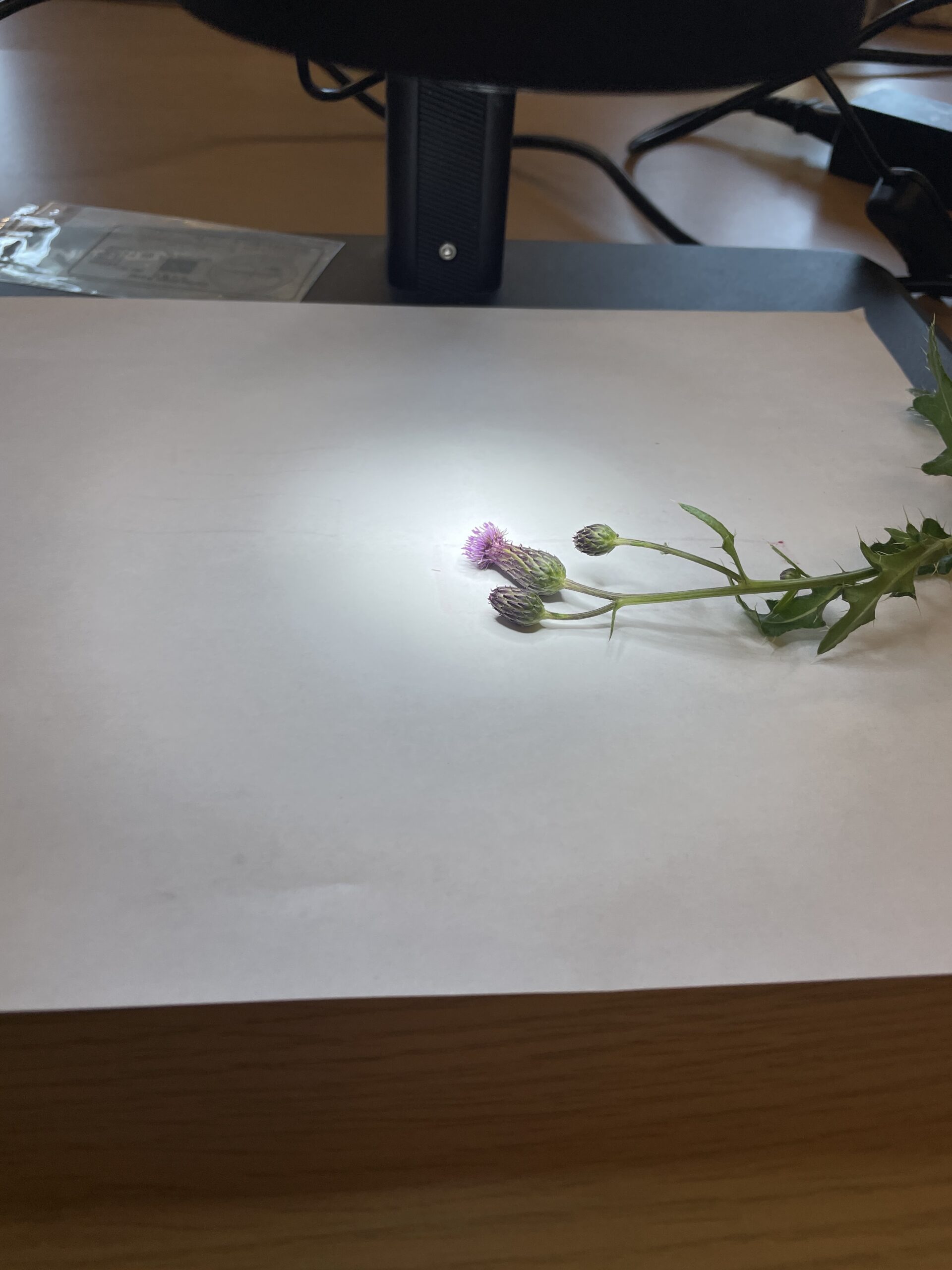

In October, I collected a bunch of samples (algae from Panther Hollow Lake, flowers, twigs, rocks, leaves, etc.) and brought them to the studio to see what I could find on closer inspection. Below is a short video highlighting a small portion of my micro explorations: a lot of oohs, ahhs, and silliness.

Last week, Richard Pell told us about focus stacking! I wanted to try this technique to make sharper, more detailed images from some of my videos. The images aren't super high resolution because they are made from extracted video stills, but stacking still lets you see more of the subject in focus in a single image. I ran a bunch of my captures through Helicon Focus to do this. Below are my focus stacking experiments, along with GIFs of the original footage that show the focal depth changing.
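Helicon Focus does the stacking for you, and I don't know exactly how it works inside. As a rough illustration of the general idea only, here is a minimal sketch in Python. It assumes the video stills are already extracted, aligned, and converted to grayscale grids of numbers. It scores each pixel's local sharpness with a Laplacian (a common contrast measure, chosen here as an assumption) and keeps that pixel from whichever frame is sharpest there. The names `laplacian_sharpness` and `focus_stack` are hypothetical, not part of any tool.

```python
"""Minimal per-pixel focus-stacking sketch (hypothetical; NOT Helicon Focus's algorithm)."""
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def laplacian_sharpness(img: Image) -> Image:
    """Absolute 4-neighbour Laplacian at each pixel; borders reuse the nearest pixel."""
    h, w = len(img), len(img[0])

    def px(y: int, x: int) -> float:
        # Clamp coordinates so border pixels compare against themselves.
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    return [
        [abs(4 * px(y, x) - px(y - 1, x) - px(y + 1, x) - px(y, x - 1) - px(y, x + 1))
         for x in range(w)]
        for y in range(h)
    ]


def focus_stack(frames: List[Image]) -> Image:
    """Build one composite by taking each pixel from the frame where it is sharpest."""
    if not frames:
        raise ValueError("need at least one frame")
    sharp = [laplacian_sharpness(f) for f in frames]
    h, w = len(frames[0]), len(frames[0][0])
    out: Image = []
    for y in range(h):
        row = []
        for x in range(w):
            # Index of the frame with the highest local contrast at (y, x);
            # on ties, max() keeps the earliest frame.
            best = max(range(len(frames)), key=lambda i: sharp[i][y][x])
            row.append(frames[best][y][x])
        out.append(row)
    return out
```

Real stacking tools typically also align the frames and blend the transitions between them to avoid halos; this sketch skips both to keep the core idea visible.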

Focus Stacking Captures:

Focus Stacking – Pollinated Flower Petals

Focus Stacking – Dry Moss

Focus Stacking – Bugs on Flowers

You can see the movement of the bug over time like this!!

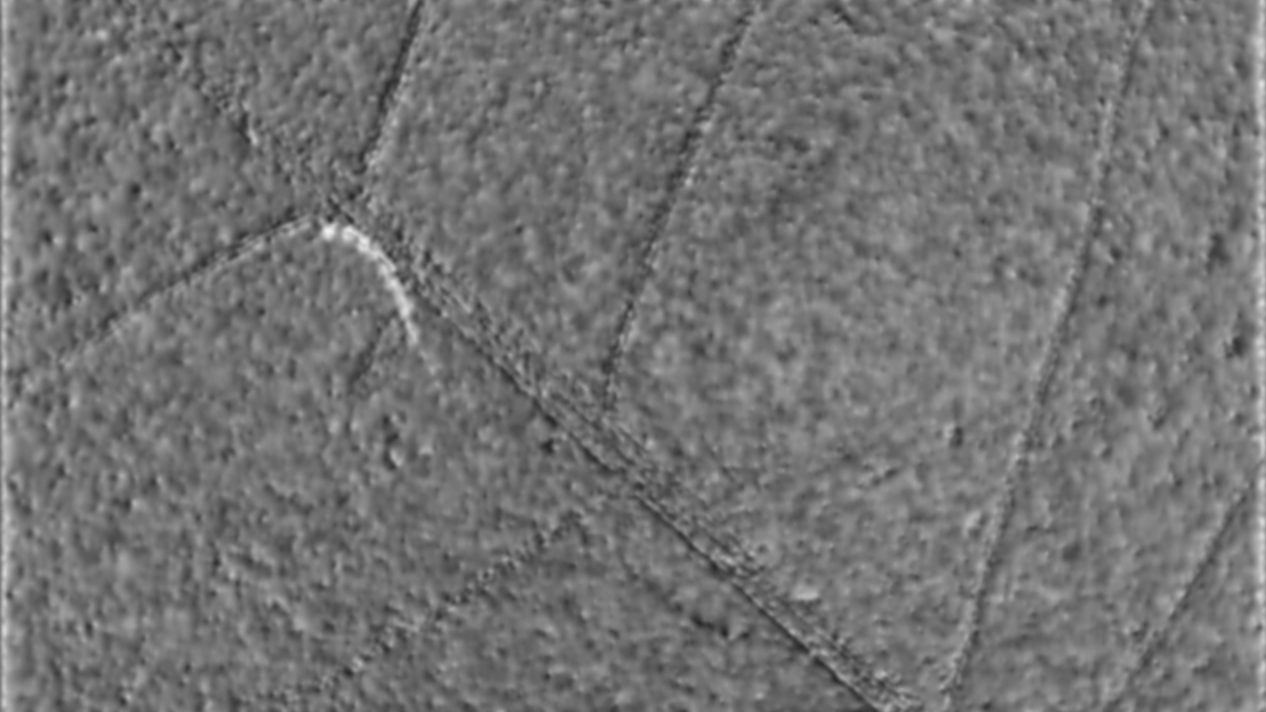

I also did this with a tiny bug I saw on this leaf; you can see its path.

Next, you can see some bugs moving around on this flower.

Now, mold!

A single strand of moss

A flower bud

Having done these microscopic captures for fun in October, I really enjoyed this close-up look at things and was mesmerized by the bugs’ lives, so for my final project I wanted to spend an extraordinary amount of time just looking at the same kinds of bugs living on organic matter from Schenley Park. On November 25th, I set out again to collect a plethora of objects from Schenley Park, including flowers, strands of grass, twigs, acorns, stones, algae and water from the pond, charred wood, and more. I eagerly brought them to the studio, wondering what bugs I’d see. But of course, it had gotten cold. After an hours-long search under the microscope, I did find one bug, but by then I was exhausted (and interested in other things).

In *An Attempt at Exhausting a Park in Pittsburgh (Schenley Park)*, I ended up exhausting myself.