Hello darlings. My final project is essentially a revised and fixed version of my previous project, smalling.

To recap, particularly for any new guests, I’m interested in the form of being small, the action of having to contort oneself, and how that works when it has to happen consciously, with no immediate threat or reward. This idea came out of considering the more formal elements of small bodies along with “smallness” as a symbolic item (as it’s used in movies and other media) and smallness as a relatable concept. Some of the images I was looking at were these:

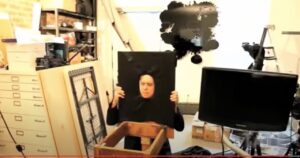

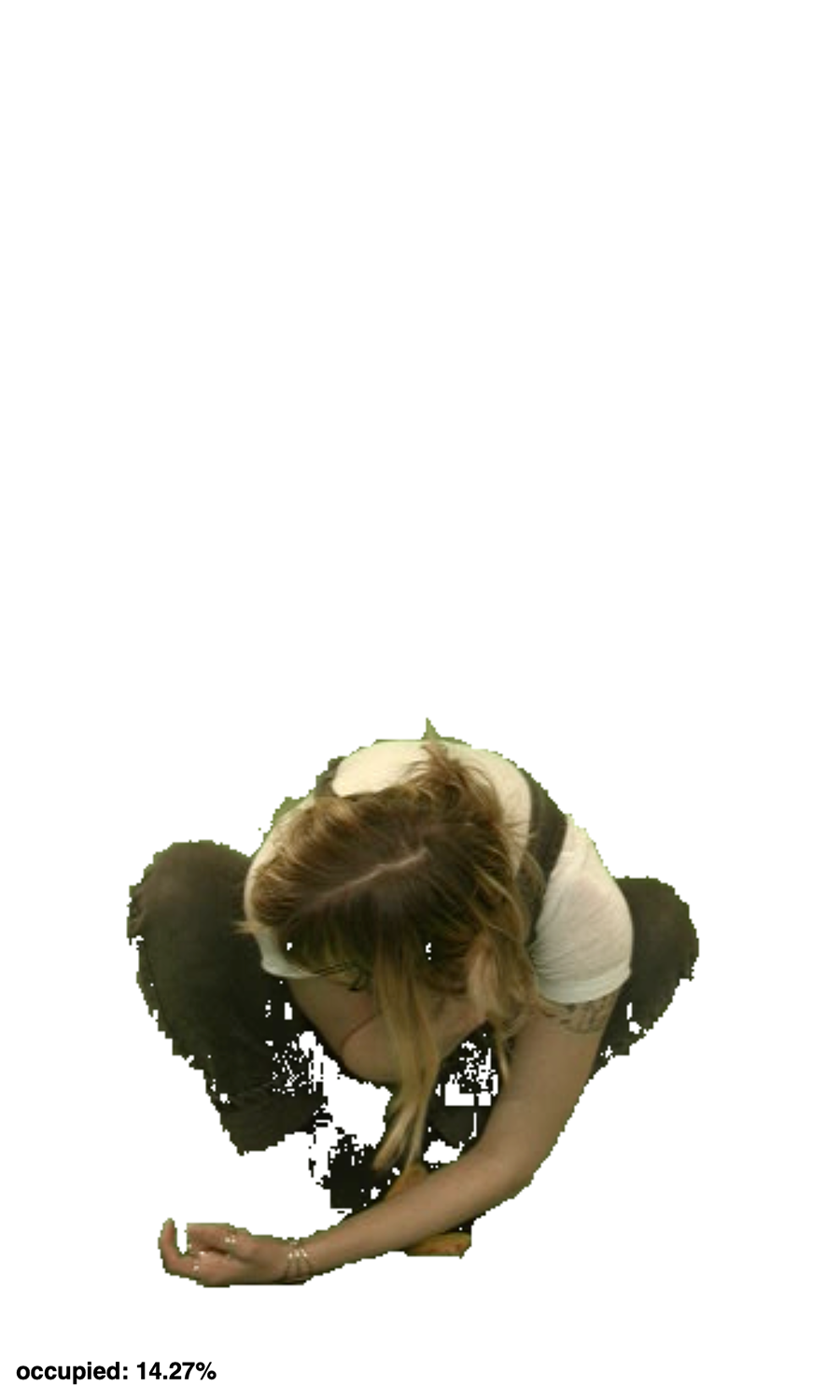

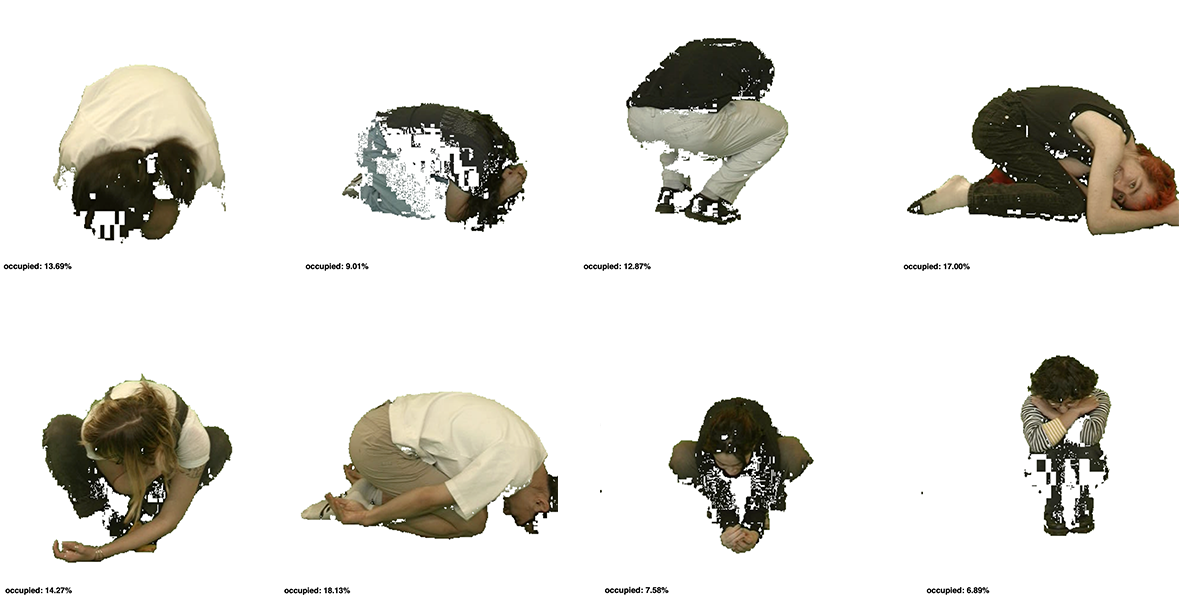

I had ChatGPT write most of my code, and then Golan, Leo, Benny, and Yon helped fix anything that I couldn’t figure out past that, so I can’t explain most of the technical details here. What I can say is that it’s a pretty basic green screen setup: the program turns anything green to white based on hue values, runs for ten to thirty seconds (this number has changed over the course of the project), and retroactively takes a picture of the participant at their smallest size during that time. The tests from the previous version looked like this:

The problem with this, which I didn’t address in the last critique, was that it measured the size of the person compared to the canvas, not compared to their previous size. This privileged people with smaller frames, which was never my intention; it was a genuinely forgotten detail in the mess of me ChatGPT-ing my code. This is fixed now (wow!)
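
For anyone curious about the logic, here is a rough sketch of the idea in Python. To be clear, this is not my actual code. The hue thresholds, the function names, and the choice to treat the first frame of a round as the participant’s “previous size” are all assumptions made for illustration. A frame here is just a list of rows of (r, g, b) pixels.

```python
import colorsys

WHITE = (255, 255, 255)

# Assumed thresholds for "green"; the real values are not recorded in this post.
HUE_MIN, HUE_MAX = 90 / 360, 150 / 360
MIN_SAT, MIN_VAL = 0.4, 0.2


def is_green(pixel):
    """Return True if an (r, g, b) pixel falls inside the assumed green hue band."""
    r, g, b = (channel / 255 for channel in pixel)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    return HUE_MIN <= h <= HUE_MAX and s >= MIN_SAT and v >= MIN_VAL


def key_out_green(frame):
    """Replace every green-screen pixel with white, leaving the person untouched."""
    return [[WHITE if is_green(p) else p for p in row] for row in frame]


def body_area(frame):
    """Count the pixels that are not green screen, i.e. the person."""
    return sum(1 for row in frame for p in row if not is_green(p))


def shrink_ratios(frames):
    """Size of the person in each frame relative to their size in the first frame.

    Assumption: the first frame of the round stands in for "previous size".
    """
    areas = [body_area(f) for f in frames]
    baseline = areas[0]
    if baseline == 0:
        raise ValueError("no participant visible in the first frame")
    return [area / baseline for area in areas]


def smallest_frame(frames):
    """Pick the frame where the person is smallest relative to their own start."""
    ratios = shrink_ratios(frames)
    index = min(range(len(ratios)), key=ratios.__getitem__)
    return frames[index], ratios[index]
```

The point of dividing by the baseline is that two people who end up covering the same number of pixels no longer get the same result: someone who started at twice that size gets a ratio of 0.5, while someone who started at that size gets 1.0.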

Here’s a mini reel because WordPress doesn’t like anything that isn’t a teeny tiny gif compressed to the size of a molecule ♥️ sorry

This is essentially where it’s at right now. I’m mostly looking for feedback on potential output and duration of the piece. As for duration, I noticed that even with more time, most people tended to immediately get as small as possible and then were left to backtrack or just sit there. On the other end, there were a few participants who were kind of shocked by the short amount of time, even when they knew what it was before starting.

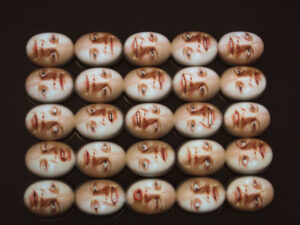

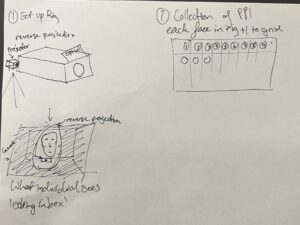

As for output, I had somewhat decided on a grid of photos of people at their smallest that’s automatically added to as more people participate, but I started feeling not so good about that. It would be displayed on a second monitor next to the interactive piece and look something like this:

Some of the other ideas mentioned in my proposal include:

- physical output (Golan mentioned a receipt printer, but I’m open to other ideas here); example here: https://www.flickr.com/photos/thart2009/53094225345

- receipts could exist as labeling system or just an image

- scoreboard on a separate monitor

- website with compiled images of people at their smallest

- email sent to participants of their photo(s)

- value to descriptor system

- some other form of data visualization or image reproducing (???)

I’m not married to any of these. If you have any ideas, please let me know. I just don’t want anything to become too murky because it’s being dragged down by a massive unrelated piece of physical/digital output/data visualization/whatever. There’s already the element of interaction that makes this project inherently game-like, but I’m trying to keep it from becoming too gimmicky.