The PSiFI

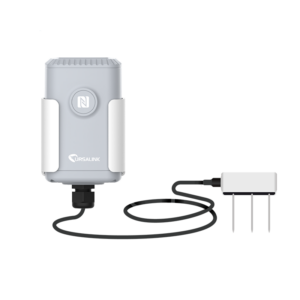

The “Personalized Skin-Integrated Facial Interface” (PSiFI) is an advanced human emotion recognition system built around a flexible, wearable mask designed to “recognize and translate human emotions” in real time (Nature Communications).

The PSiFI uses strategically placed multimodal triboelectric sensors (TES) that detect changes in movement, facial strain, and vibration on the body. From these changes it gathers data on speech, facial expression, gesture, and various physiological signals (temperature, electrodermal activity).

The device incorporates a circuit that wirelessly transfers the data to a Convolutional Neural Network (CNN), a machine learning algorithm that classifies facial expressions and speech patterns. The more the classifier trains, the more accurate its analysis of emotion becomes (Nature Communications).
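As a rough illustration of that classification step, here is a toy sketch in Python. It is not the researchers' model: the emotion labels, the two kernels, the dense weights, and the sample strain trace are all made up for illustration, and a real CNN would learn its weights from training data rather than have them written by hand. The sketch only shows the general shape of the idea: slide small filters over a sensor signal, keep the strongest response from each, and turn the results into a probability for each label.

```python
import math

# Hypothetical emotion labels; the source does not list the classes used.
LABELS = ["happy", "surprised", "sad"]

def conv1d(signal, kernel):
    """Slide the kernel across the signal (no padding) and sum the products."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]

def relu(values):
    """Keep positive responses, zero out negative ones."""
    return [max(0.0, v) for v in values]

def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def classify(signal, kernels, dense_weights):
    # One feature per kernel: convolve, apply ReLU, keep the strongest response.
    features = [max(relu(conv1d(signal, k))) for k in kernels]
    # Dense layer: one row of weights per label.
    scores = [sum(w * f for w, f in zip(row, features)) for row in dense_weights]
    probs = softmax(scores)
    best = max(range(len(LABELS)), key=lambda i: probs[i])
    return LABELS[best], probs

# Made-up weights, for illustration only.
KERNELS = [[-1.0, 1.0], [1.0, -1.0]]  # rising-edge and falling-edge detectors
DENSE = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]

if __name__ == "__main__":
    strain = [0.0, 0.1, 0.9, 1.0, 1.0]  # made-up facial strain trace
    label, probs = classify(strain, KERNELS, DENSE)
    print(label, [round(p, 3) for p in probs])
```

In this toy version, "training" would mean adjusting the numbers in `KERNELS` and `DENSE` until the predicted labels match labeled examples, which is why more training data tends to improve accuracy.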

Image: PSiFI. Credit: Nature Communications

Inspiration/Research

I’ve conducted research in which we used various devices to collect physiological data, and the sensors were ridiculously bulky and temperamental. The ability to capture a variety of human emotions using a combination of machine learning, sensors, and computational power within one device is incredible!

In my art practice, I often explore ways to evoke emotional reactions from the audience. I could imagine creating installations where participants encounter thought-provoking or uncomfortable situations while wearing a motion-sensor mask that analyzes and tracks their every movement and physiological response. This would not only reveal the external, visible reactions to the artwork but also provide insight into more internal, unseen responses.

Critique

The researchers’ decision to test the PSiFI in a VR environment allowed for an extremely controlled research setting. By employing a VR-based data concierge that adjusted content (music or a movie trailer) based on user reactions, they demonstrated the system’s ability to accurately keep up with the individual’s emotions.

Links

https://thedebrief.org/groundbreaking-wearable-technology-can-accurately-detect-and-decipher-human-emotions-in-real-time/

https://www.nature.com/articles/s41467-023-44673-2#citeas