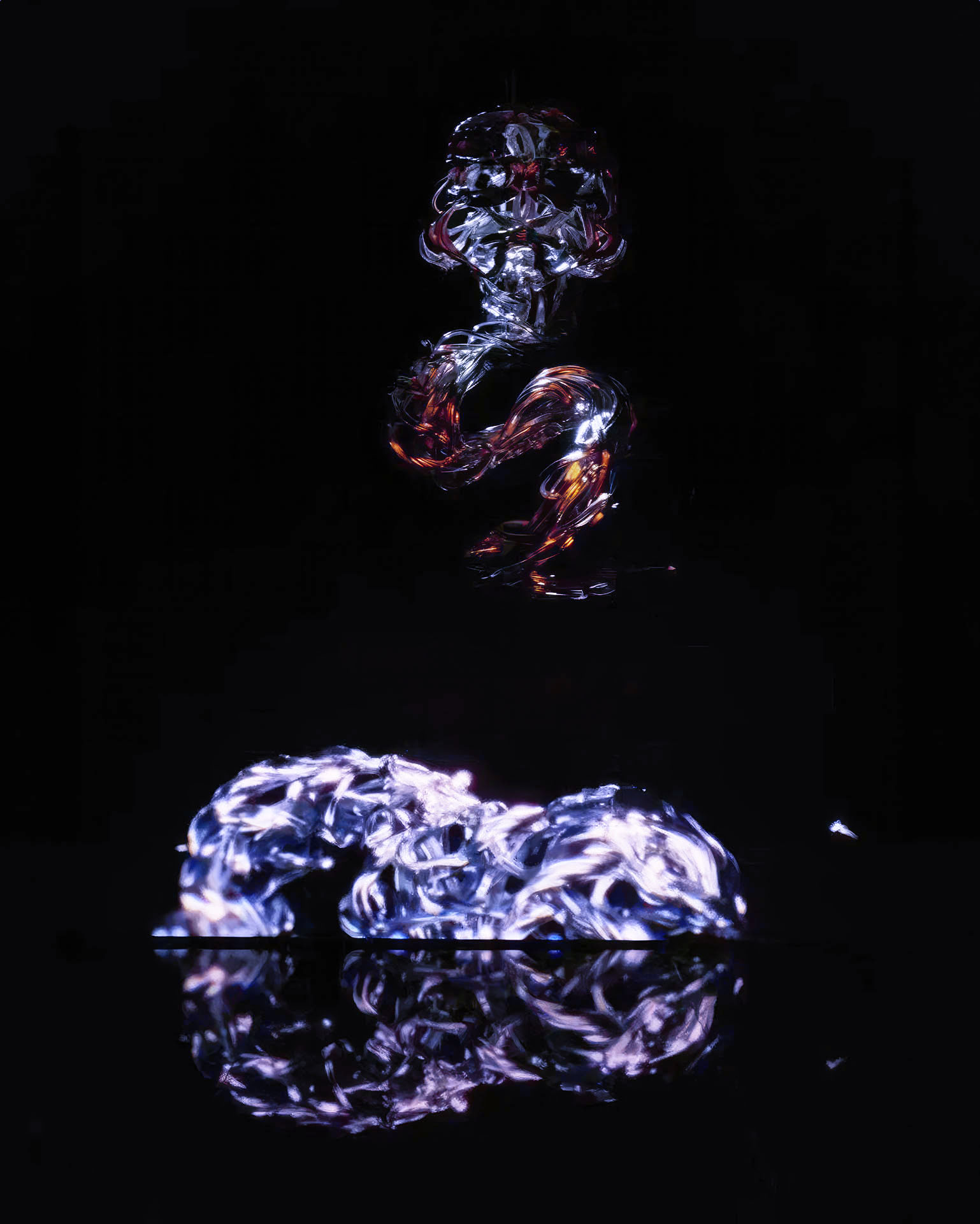

Captured by Hanna Haaslahti

Haaslahti’s immersive digital art installation thrusts viewers into a dual role of spectator and actor, assigning them a “new identity” within a virtual community. Incorporating viewers through face capture technology and crowd simulation, the community explores the theme of “Bully, Target, or Bystander,” simulating the viewer’s experience of human crowd behavior, where unpredictable moods fester (Diversion Cinema).

CREDIT: Diversion Cinema

By guiding the viewer through uncomfortable and traumatic scenarios of human crowd behavior, the installation provokes a strong reaction and intense reflection on our environment and the individuals who surround us. Viewers witness “themselves” within these simulated scenarios, evoking feelings of guilt, horror, or a desire to escape. Seeing oneself in such a vulnerable position – regardless of the role assigned – forces individuals to confront uncomfortable, somewhat unavoidable situations.

Critique

While I love the concept, the current use of colored-bodied figures somewhat detracts from the realism of the message, reminding viewers that the installation is not entirely real even though they see their own face. Employing a more visually realistic background or environment, rather than a white backdrop, would further emphasize the art’s message and increase its emotional resonance.

Chain of Influences

Haaslahti’s central tools are “computer vision and interactive storytelling,” and she is primarily “interested in how machines shape social relations” (Haaslahti). Looking at her past artwork, Haaslahti’s perspective is clear; her focus is influenced by:

- Computer vision techniques replicating Big Brother: the feeling of constantly being watched

- Visual perception and real-time mapping

- Participatory simulations through viewers as actors using hyper-realistic capturing and 3D modeling techniques

- Social implications on human relationships

Haaslahti has not listed sources or a biography.

Links

https://www.diversioncinema.com/post/captured-the-installation-by-hanna-haaslahti-enters-diversion-cinema-s-line-up

https://www.diversioncinema.com/captured

https://www.hannahaaslahti.net/about/