LoRaWAN Environmental Sensors: Capturing the Pulse of the Environment

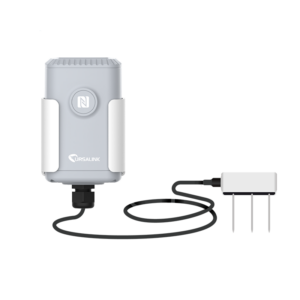

One intriguing capture device that has caught my attention is the LoRaWAN Environmental Sensor. These sensors are capable of monitoring various environmental parameters such as air quality, temperature, and humidity across large areas. The idea of using real-time data to create art is what truly inspires me about these devices.

LoRa environmental sensors are environmental sensors equipped with LoRa nodes, such as temperature, humidity, air pressure, and indoor air quality sensors. Unlike traditional environmental sensors, they send the collected data to LoRa gateways over the LoRaWAN protocol, and the gateways then forward it to a server. Because the sensors transmit their data over LoRa, networking them is simpler than with traditional sensors.
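To make the sensor-to-server path a little more concrete, here is a minimal Python sketch of what the server side might do once a gateway has forwarded a reading. The 6-byte payload layout, the `Reading` class, and the `decode_uplink` function are all my own assumptions for illustration; real sensors define their own payload formats, so this is not how any particular device works.

```python
import struct
from dataclasses import dataclass


@dataclass
class Reading:
    temperature_c: float
    humidity_pct: float
    pressure_hpa: float


def decode_uplink(payload: bytes) -> Reading:
    """Decode one uplink payload into physical units.

    Hypothetical layout (big-endian, 6 bytes total):
      int16  temperature in 0.01 degrees C
      uint16 relative humidity in 0.01 %
      uint16 air pressure in 0.1 hPa
    """
    if len(payload) != 6:
        raise ValueError(f"expected 6 bytes, got {len(payload)}")
    raw_temp, raw_hum, raw_pres = struct.unpack(">hHH", payload)
    return Reading(raw_temp / 100, raw_hum / 100, raw_pres / 10)


if __name__ == "__main__":
    # Simulate a payload a sensor might send under the assumed layout.
    sample = struct.pack(">hHH", 2150, 4520, 10132)
    print(decode_uplink(sample))
```

Packing values into a few bytes like this reflects the small payloads LoRa is suited to, which is why I chose scaled integers over text for the sketch.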

If I had access to LoRaWAN sensors, I would use them to develop a data-driven art installation that visualizes the fluctuating environmental conditions in urban spaces and transforms otherwise unnoticed data into dynamic art forms. The installation would be a living reflection of the community we live in, encouraging viewers to consider their connection to the urban environment. I think the project could be made even more compelling by incorporating interactive elements, so that viewers could contribute data or see the impact of their immediate actions in real time. This would add a layer of personal responsibility and immediacy to the environmental message. This concept is inspired by earlier data-driven projects like Sarah Cameron Sunde’s The Climate Ribbon, which also uses environmental data to create responsive art.
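As a rough sketch of how a reading could become a visual element, the Python snippet below maps a temperature to a colour that shifts from blue (cold) to red (hot). The function names and the -10 to 40 °C range are assumptions I picked for illustration; an actual installation would tune the range and the palette to the site.

```python
def normalize(value: float, lo: float, hi: float) -> float:
    """Scale value into the range 0.0 to 1.0, clamping anything outside lo..hi."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    return min(1.0, max(0.0, (value - lo) / (hi - lo)))


def temperature_to_rgb(temp_c: float, lo: float = -10.0, hi: float = 40.0) -> tuple[int, int, int]:
    """Blend linearly from blue at lo to red at hi (assumed range, in degrees C)."""
    t = normalize(temp_c, lo, hi)
    return (round(255 * t), 0, round(255 * (1 - t)))


if __name__ == "__main__":
    for temp in (-10.0, 5.0, 21.5, 40.0):
        print(temp, temperature_to_rgb(temp))
```

The same normalizing step could drive other properties such as brightness, motion speed, or sound, and could take humidity or air quality as input in place of temperature.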

https://www.niubol.com/Product-knowledge/LoRa-environmental-sensors.html