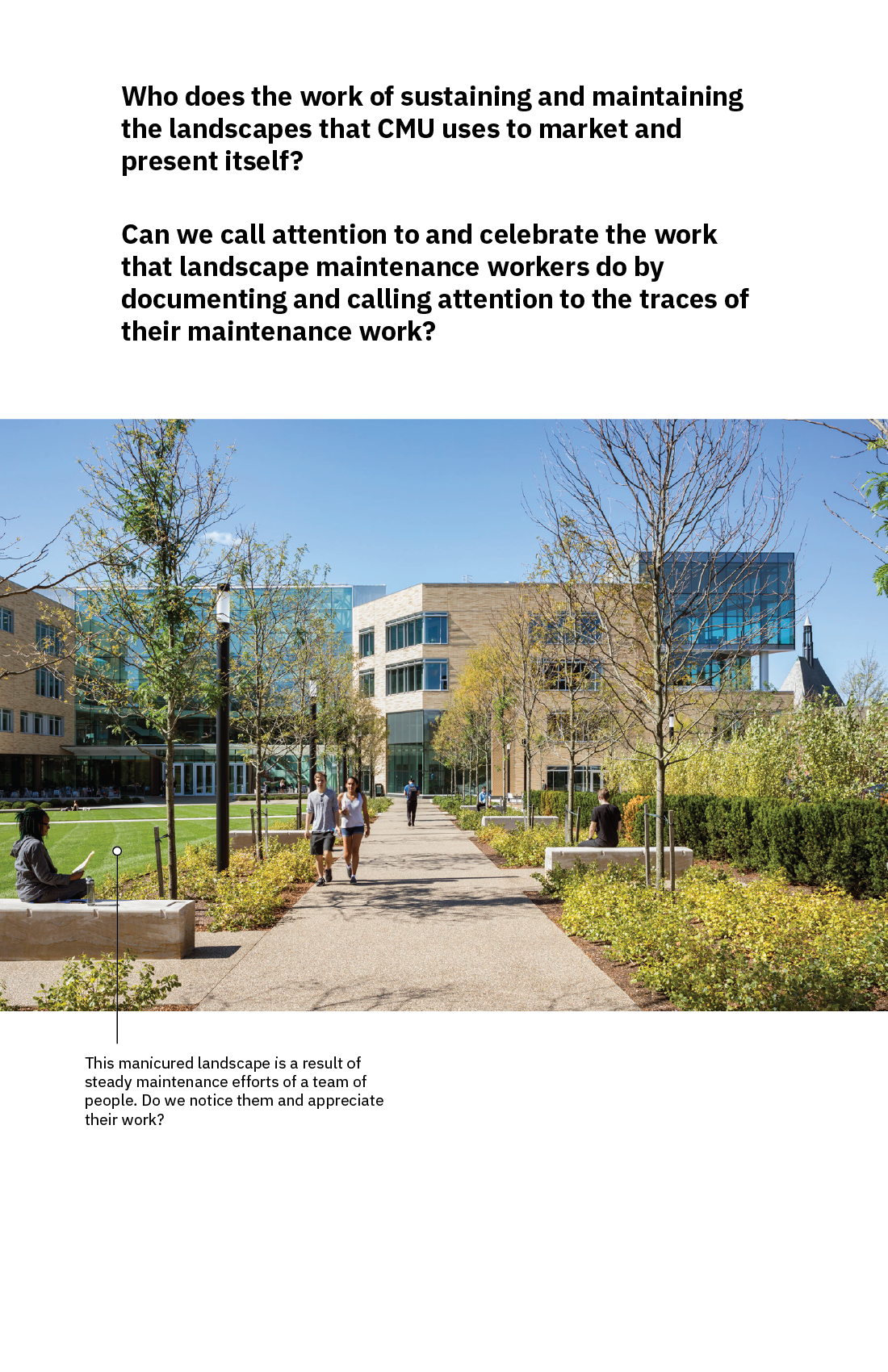

Take my heartrate data live from a Fitbit. Find some things in my house that tick. Have the house tick in sync with my heartbeat.

Expanding:

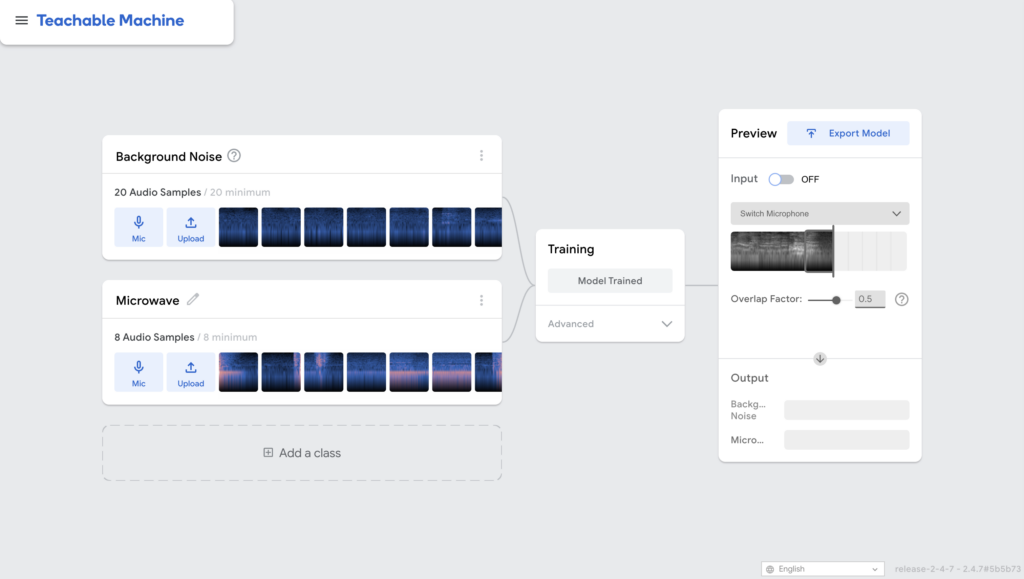

I already have a Fitbit, and I'm already partway through getting the API to work. Pretty sure I can get data at the rate I’d want. Could also give up and use some other heartrate monitor, an Arduino, whatever.

The heartrate could also be swapped for breathing.

“Tick” means like: clock hand, beep, water drip, quick power on/off.
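A rough sketch of the timing side, in Python, just to show the arithmetic: a heartrate in beats per minute becomes a gap of 60 / bpm seconds between ticks. Everything here is an assumption for illustration. `get_bpm` stands in for however the reading actually arrives (Fitbit API, Arduino, whatever), and `trigger` stands in for whatever makes a given object tick. Neither is a real library call.

```python
import time


def tick_interval(bpm):
    """Seconds between ticks for a heartrate given in beats per minute."""
    if bpm <= 0:
        raise ValueError("bpm must be positive")
    return 60.0 / bpm


def run_ticks(get_bpm, trigger, n_ticks, sleep=time.sleep):
    """Fire trigger() n_ticks times, spaced by the latest heartrate reading.

    get_bpm and trigger are hypothetical callables supplied by the caller:
    get_bpm() returns the current beats per minute, trigger() makes one tick.
    sleep is injectable so the loop can be tried without real waiting.
    """
    for _ in range(n_ticks):
        trigger()
        # Re-read the heartrate every beat so the ticking follows changes.
        sleep(tick_interval(get_bpm()))
```

For example, `run_ticks(lambda: 72, lambda: print("tick"), 5)` would print five ticks about 0.83 seconds apart. This ignores the time `trigger` itself takes and any lag in the readings, so a real version would probably drift and need correcting.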

I will have to experiment to find out what I can make tick. I am allowed to be selective about what household appliances/subjects are incorporated. The end result does not have to be “my house, 100% accurate to how it is everyday”, it can be staged.

The end result of this is an experience for me to be in. That will probably turn into video documentation for class. I imagine I would be in the ticking space, because I imagine I would respond to hearing my own heartrate. An alt (not for class) version would be an actual installation of a room ticking that runs constantly, and I’m not there.

Original idea involved sleep/REM data, and dreamwalking.

Alt: Getting a UV light & sunbleaching my shadow into a piece of paper for like a hundred-something hours. I just think it’d be fun to make that much time translate into something really stupidly subtle.

Alt alt: Grid of office cubicles. In each cubicle is one chair, a controller to pan/zoom/tilt a ceiling-mounted camera, and a monitor displaying that camera’s feed. IDK office-core panopticon.