For this assignment, I’d like to create a website selling stereoscopic footage or other “micro-media” I’ve captured of micro worlds, or worlds unfolding at timescales other than ours, with a bit of a twist.

When users buy, they are taken through several steps that highlight non-human (including what we consider living and non-living under non-animist frameworks) and human labor processes. As a simple example:

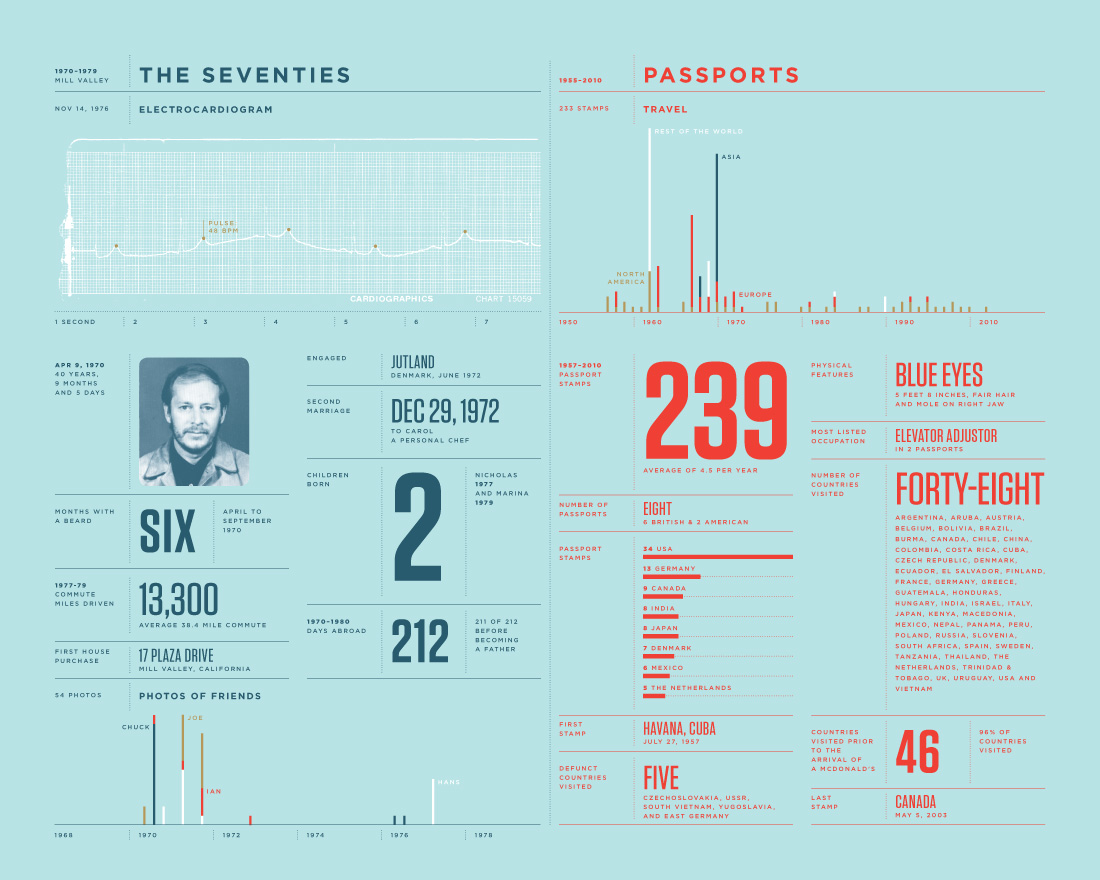

This is a clip from a video of a small worm wriggling in some kind of soil substrate. The final price might include:

1 min x $20/60 min worm acting fee = $0.33 (fee is arbitrary at the moment, but I will justify it somehow for the completed project, though there are obvious complications/tensions around the determination of this “price”)

1 min x $20/60 min soil modeling fee = $0.33

5 min x $20/60 min computer runtime fee = $1.67

5 min x $20/60 min artist labor fee

…

Etc
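As a rough sketch of how these line items could be computed on the site, here is one way to do the arithmetic in Python. The flat $20/hour rate is the same arbitrary placeholder as above, the names `HOURLY_RATE`, `line_fee`, and `LINE_ITEMS` are made up for illustration, and only the four items listed so far are included; the real list would be longer.

```python
from decimal import Decimal, ROUND_HALF_UP

# Assumption: one flat, arbitrary placeholder rate for every participant.
HOURLY_RATE = Decimal("20")

# (participant/labor type, minutes) taken from the worm clip example.
LINE_ITEMS = [
    ("worm acting", 1),
    ("soil modeling", 1),
    ("computer runtime", 5),
    ("artist labor", 5),
]

def line_fee(minutes, hourly_rate=HOURLY_RATE):
    """Fee for one line item, rounded to the nearest cent."""
    fee = Decimal(minutes) * hourly_rate / Decimal(60)
    return fee.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)

def itemize(items):
    """Return (name, fee) pairs so each cost can be shown to the buyer."""
    return [(name, line_fee(minutes)) for name, minutes in items]

def total(items):
    """Sum of the rounded line-item fees."""
    return sum((fee for _, fee in itemize(items)), Decimal("0"))

if __name__ == "__main__":
    for name, fee in itemize(LINE_ITEMS):
        print(f"{name}: ${fee}")
    print(f"total: ${total(LINE_ITEMS)}")
```

Rounding each line before summing keeps the displayed items consistent with the displayed total, which matters if every step of the checkout shows its own cost.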

The cost calculation will help bring attention to aspects of the capture process that people might not normally think about (e.g. are we mindlessly stepping into another’s world?), but I think the tone needs to be handled with care.

Since some of the “participants” can’t be “paid” directly, buyers will be told that this portion of the cost will be allocated to, e.g., an organization that does work related to the area. For instance, the worm/soil might link to a conservation organization in the area. The computer runtime step might link to an organization that relates to the kinds of materials extracted to make computers and the human laborers involved in that process (e.g. Anatomy of an AI). There will also be information about the different paid participants (e.g. information about the ecosystem the video was filmed in and the life within it, and something of an artist bio for myself in relation to the artist labor).

I will aim for final prices that make sense for an actual purchase, as a way of potentially raising real money for these organizations. If the total labor costs come out too high, I will probably provide a coupon/sale.

To avoid spamming these organizations with small payments, the payments will be allocated into a fund that gets donated periodically.
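One possible way to sketch this pooling, assuming each sale adds its line-item fees to a running balance per recipient and a periodic job later pays everything out at once. The `DonationFund` class and the recipient labels are hypothetical placeholders, not real organizations or a real payment API.

```python
from collections import defaultdict
from decimal import Decimal

class DonationFund:
    """Pools small per-sale allocations until a periodic payout."""

    def __init__(self):
        # Running balance per recipient; Decimal() starts at 0.
        self.balances = defaultdict(Decimal)

    def allocate(self, recipient, amount):
        """Add one sale's portion for a recipient to the pool."""
        self.balances[recipient] += Decimal(amount)

    def pay_out(self):
        """Return the pooled amounts and reset the fund to empty."""
        payouts = {r: a for r, a in self.balances.items() if a > 0}
        self.balances.clear()
        return payouts

if __name__ == "__main__":
    fund = DonationFund()
    # Two sales of the worm clip (placeholder recipient labels).
    for _ in range(2):
        fund.allocate("soil conservation org", "0.33")
        fund.allocate("computing materials/labor org", "1.67")
    print(fund.pay_out())
```

How often `pay_out` runs (monthly, or once a balance passes some minimum) is still an open choice.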

Besides the nature of the footage/materials sold, a large part of this project would be thinking/researching about how I actually derive some of these prices, which organizations to donate to, and the different types of labor that have gone into the production of the media I’m offering for sale.

Background from “existing work” and design inspirations:

Certain websites mention “transparent pricing”

Source: Everlane

Other websites mention that X amount is donated to some cause:

I’m also thinking of spoofing some kind of well-known commerce platform (e.g. Amazon). One goal is to challenge the way these platforms promote a race to the bottom in pricing in ways completely detached from the original materials and labor. If spoofing Amazon, for instance, instead of “Buy Now,” there will be a sign that says “Buy Slowly.”

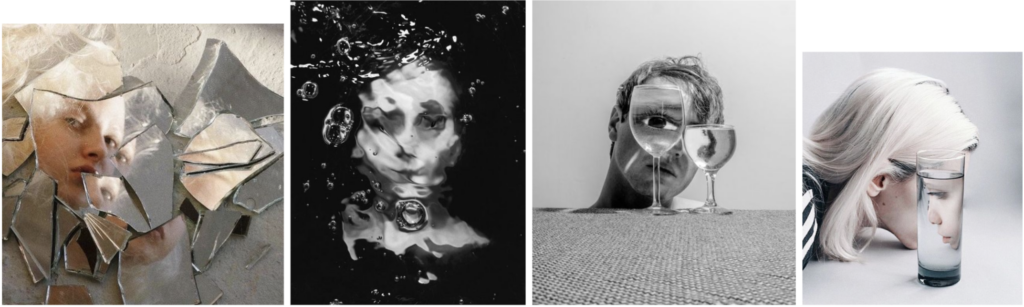

Nica had mentioned the possible adjacencies to sites selling pornography (where else do you buy and collect little clips like that?) and NFTs. In considering this project, I’m also reminded of the cabinet of curiosities. Ultimately, all of these (including stereoscopy) touch on a voyeuristic desire to look at and own.

What I initially had in mind for this project was a kiosk in an exhibition space where visitors can buy art/merchandise. I’m still thinking about how to make the content of the media more relevant to the way in which I want to present it, so I’m open to suggestions/critical feedback!! I think there are a few core threads I want to offer for reflection:

1. Desire to look and own, especially in a more “art world” context (the goal would be to actually offer the website/art objects in an exhibition context to generate sales for donations). What would generate value/sales? Would something physical be better (e.g. Golan had mentioned View-Master disks)?

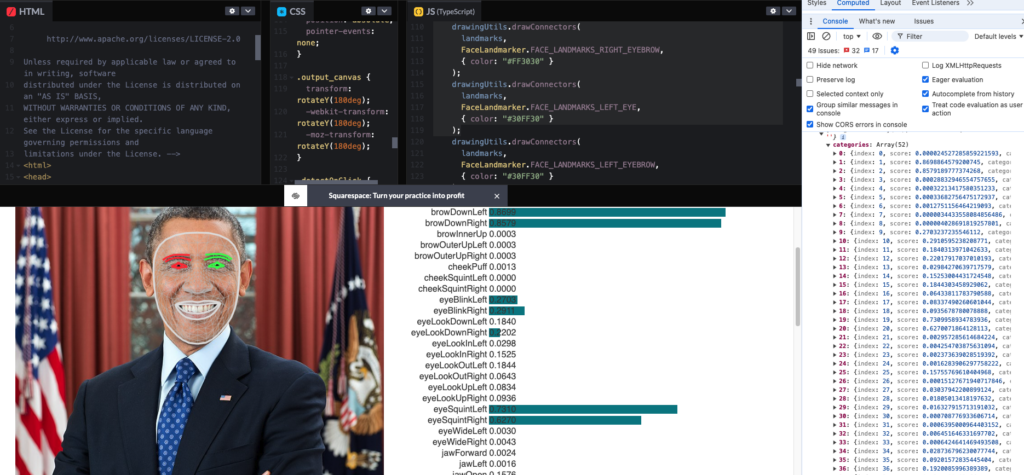

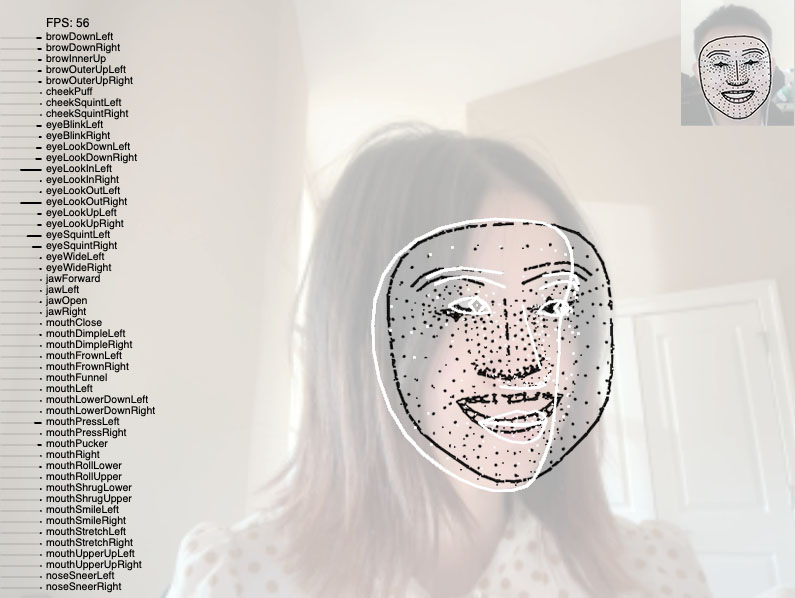

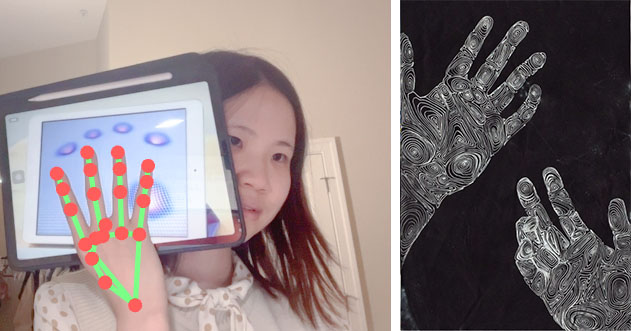

2. Unacknowledged labor, including of the non-human

3. Building in the possibility for fundraising and general education. Thanks!