Right now, I am thinking of making a photo booth where the moment of capture is controlled by someone else.

I’m interested in making a photo booth. Capturing the candid.

Updates TBD.

Experimental Capture – Fall 2024

Computational & Expanded ███ography


Pupil Tracking Exploration

I have two main ideas that would capture a person in time using pupil tracking and detection techniques.

1. Pupil Dilation in Response to Caffeine or Light

The first idea focuses on tracking pupil dilation as a measurable response to external stimuli like caffeine intake or changes in light exposure. By comparing pupil sizes before and after exposure to caffeine or varying light conditions, the project would aim to capture and quantify changes in pupil size and potentially draw correlations between stimulus intensity and pupil reaction.
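For the first idea, the before/after comparison and the intensity-versus-reaction correlation come down to two small calculations. This is a minimal sketch. It assumes the tracker already gives one pupil diameter per frame (in pixels or millimeters) and that stimulus intensity is recorded as one number per trial. The function names are hypothetical and are not part of the 3D-Eye-Tracker code.

```python
from statistics import mean


def percent_change(baseline, after):
    """Percent change in mean pupil diameter from the baseline readings
    (before caffeine or a light change) to the post-stimulus readings."""
    b = mean(baseline)
    return 100.0 * (mean(after) - b) / b


def pearson(xs, ys):
    """Pearson correlation between stimulus intensity (xs) and pupil
    response (ys). Returns a value in [-1, 1]."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5
```

For example, `percent_change([4.0, 4.0], [5.0, 5.0])` returns `25.0`. `pearson` needs at least two distinct intensity levels; otherwise the denominator is zero.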

2. Fixed Pupil Center with Moving Eye (The idea I am likely moving forward with)

Inspired by the concept of ‘change what’s moving and what’s still,’ this idea would create videos where the center of the pupil is always fixed at the center of the frame, while the rest of the eye and person moves around it.

Implementation Details

Both projects rely on an open-source algorithm that detects the pupil by fitting an ellipse to it. Changes in pupil size will be inferred from the ellipse’s axes. The second idea will also involve frame manipulation to keep the center of the pupil (the ellipse’s center) at the center of the image or video frame at all times.
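Both operations described above can be sketched in plain Python. The sketch assumes the ellipse arrives as a center plus full axis lengths, similar to the `((cx, cy), (width, height), angle)` rotated rectangle returned by OpenCV’s `cv2.fitEllipse`. Whether the 3D-Eye-Tracker repository exposes exactly that format is an assumption. Pupil size is taken as the longer axis, and each frame is translated so the pupil center lands on the frame center. The helpers `pupil_diameter` and `recenter` are hypothetical.

```python
def pupil_diameter(axes):
    """Estimate pupil diameter from the fitted ellipse's full axis lengths.
    When the eye rotates, the round pupil is foreshortened along one axis,
    so the longer axis stays closest to the true diameter."""
    w, h = axes
    return max(w, h)


def recenter(frame, center, fill=0):
    """Translate a 2D frame (list of rows, indexed [y][x]) so that `center`
    (cx, cy) lands at the middle of the frame. Pixels shifted in from
    outside the original image are set to `fill`."""
    rows, cols = len(frame), len(frame[0])
    cx, cy = center
    dx = cols // 2 - round(cx)  # horizontal shift to apply
    dy = rows // 2 - round(cy)  # vertical shift to apply
    out = [[fill] * cols for _ in range(rows)]
    for y in range(rows):
        sy = y - dy  # source row in the original frame
        if 0 <= sy < rows:
            for x in range(cols):
                sx = x - dx  # source column in the original frame
                if 0 <= sx < cols:
                    out[y][x] = frame[sy][sx]
    return out
```

On real video, a library call such as OpenCV’s `cv2.warpAffine` would do the same translation much faster. The loop version only shows the logic. Because the whole frame shifts to follow the pupil, the edges of the output fill with `fill` whenever the eye moves far from center. That blank border is part of the “what’s moving and what’s still” effect.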

https://github.com/YutaItoh/3D-Eye-Tracker

Take my heart rate data live from a Fitbit. Find some things in my house that tick. Have the house tick in sync with my heartbeat.

Expanding:

I already have a Fitbit, and I am already in the process of getting its API to work. I’m pretty sure I can get data at the rate I’d want. If not, I can give up on it and use another heart rate monitor (Arduino, whatever).

Instead of heart rate, it could also be breathing.

“Tick” means like: clock hand, beep, water drip, quick power on/off.
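Turning heart-rate readings into ticks is mostly arithmetic, because one beat lasts 60/bpm seconds. This minimal sketch assumes the readings have already been pulled from the Fitbit API (or an Arduino sensor) as `(time_seconds, bpm)` pairs. Fetching them is not shown, and `tick_times` is a hypothetical helper. The same code would work for breaths per minute.

```python
def tick_times(samples, start=0.0, end=None):
    """Convert heart-rate samples into the times at which things should tick.

    samples: list of (time_seconds, bpm) pairs sorted by time; each bpm value
    is assumed to hold until the next sample. bpm must be > 0.
    Returns tick times in seconds from `start` up to (not including) `end`.
    """
    if end is None:
        end = samples[-1][0]
    ticks = []
    t = start
    i = 0
    while t < end:
        # Use the most recent sample at or before time t.
        while i + 1 < len(samples) and samples[i + 1][0] <= t:
            i += 1
        bpm = samples[i][1]
        ticks.append(t)
        t += 60.0 / bpm  # one beat lasts 60/bpm seconds
    return ticks
```

For example, `tick_times([(0, 60), (10, 120)], end=12)` gives a tick every second for the first ten seconds, then one every half second. A live version would instead read the latest bpm, wait `60 / bpm` seconds, and trigger whatever is ticking (a relay, a beep, a drip valve). That hardware side is left out here.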

I will have to experiment to find out what I can make tick. I am allowed to be selective about what household appliances/subjects are incorporated. The end result does not have to be “my house, 100% accurate to how it is everyday”, it can be staged.

The end result of this is an experience for me to be in, which will probably manifest as video documentation for class. I imagine I would be in the ticking space, because I imagine I would respond to hearing my own heart rate. An alt (not for class) version would be an actual installation of a room that ticks constantly while I’m not there.

The original idea involved sleep/REM data and dreamwalking.

Alt: Getting a UV light and sun-bleaching my shadow into a piece of paper over a hundred-something hours. I just think it’d be fun to make that much time translate into something really stupidly subtle.

Alt alt: Grid of office cubicles. In each cubicle is one chair, a controller to pan/zoom/tilt a ceiling-mounted camera, and a monitor displaying that camera’s feed. IDK office-core panopticon.

I want to use motion detection to examine musicians’ movements as they play, particularly the relationships between their movements.
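One simple way to get a comparable motion signal for each musician is frame differencing inside a fixed box around each player. The two signals can then be correlated at different time offsets to see who moves with, or after, whom. This sketch rests on several assumptions: grayscale frames as 2D lists, one hand-drawn region per musician, and hypothetical function names. Pose estimation would be an alternative technique and is not shown here.

```python
from statistics import mean


def motion_energy(prev, curr, region, threshold=20):
    """Frame differencing: count pixels inside `region` whose grayscale value
    changed by more than `threshold` between two frames.
    Frames are 2D lists indexed [y][x]; region = (x0, y0, x1, y1), end-exclusive."""
    x0, y0, x1, y1 = region
    return sum(
        1
        for y in range(y0, y1)
        for x in range(x0, x1)
        if abs(curr[y][x] - prev[y][x]) > threshold
    )


def energy_series(frames, region, threshold=20):
    """Motion energy of one region for every consecutive pair of frames."""
    return [motion_energy(a, b, region, threshold) for a, b in zip(frames, frames[1:])]


def lagged_correlation(a, b, lag):
    """Pearson correlation of a[t] with b[t + lag] for equal-length series.
    A high value at a positive lag suggests musician b tends to move
    `lag` frames after musician a."""
    if lag >= 0:
        xs, ys = a[: len(a) - lag], b[lag:]
    else:
        xs, ys = a[-lag:], b[: len(b) + lag]
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0  # one musician didn't move at all in this window
    return cov / (vx * vy) ** 0.5
```

Scanning `lag` over a small range (for example, -30 to 30 frames) and picking the peak gives a rough sense of which player leads and which follows.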

Some ideas:

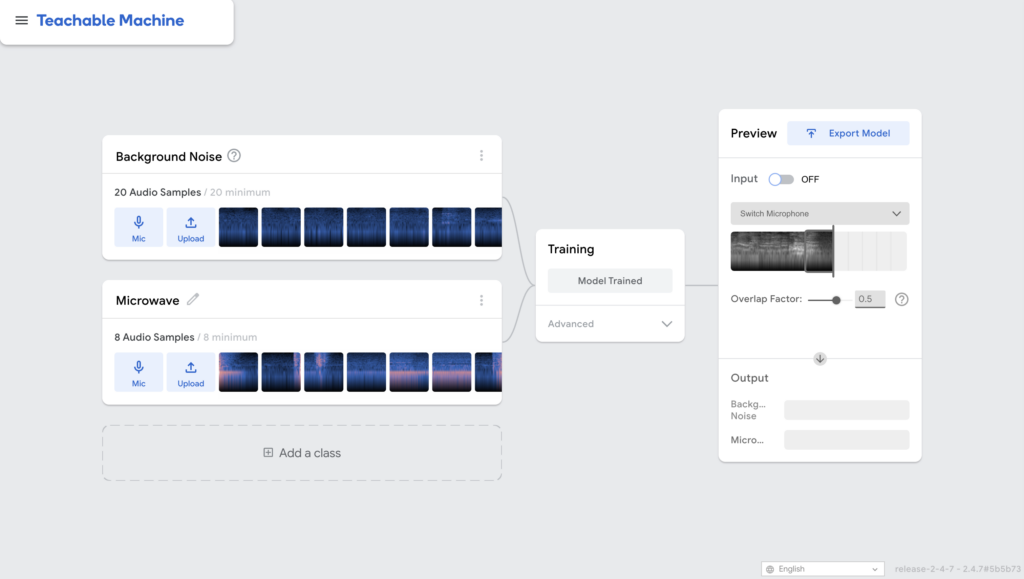

I sleep in the living room, so I hear everything that goes on in the house… especially in the kitchen. If someone is making food, running the microwave, or filling up their Brita, I can guess what they are doing in the kitchen just by listening to the sound.

Goal of the project

Detect the sound coming from the kitchen, have a machine recognize the activity, and log my roommates’ activity so it can send me a notification while I am outside the house.

How

Test: microwave
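For the microwave test, the end-of-cycle beep is a natural first target because it is a steady tone. This sketch uses the Goertzel algorithm, which measures the energy at a single frequency without computing a full FFT. The target frequency is a placeholder assumption: the real beep frequency would have to be measured from a recording of this specific microwave. Recognizing other kitchen activities (Brita filling, cooking) would more likely need a trained sound classifier.

```python
import math


def goertzel_power(samples, sample_rate, target_hz):
    """Squared magnitude of the DFT bin nearest `target_hz` (Goertzel algorithm)."""
    n = len(samples)
    k = round(n * target_hz / sample_rate)  # nearest DFT bin index
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev = s_prev2 = 0.0
    for x in samples:
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2


def is_beep(samples, sample_rate, target_hz, ratio=0.1):
    """Flag a block of audio as a beep when the target bin holds a large share
    of the block's energy. A pure tone exactly on the bin gives a ratio near 1."""
    energy = sum(x * x for x in samples)
    if energy == 0:
        return False  # silence
    norm = energy * len(samples) / 2.0
    return goertzel_power(samples, sample_rate, target_hz) / norm > ratio
```

A microphone block would be checked with something like `is_beep(block, 8000, 1000)`, where 1000 Hz stands in for the measured beep frequency. Choosing a block length so the target falls on an exact DFT bin (`n * target_hz / sample_rate` is a whole number) keeps the measurement sharp.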

Questions

People’s interactions in a room

“Smokers Allowed” reference: In the “Smokers Allowed” episode of Nathan For You, Nathan Fielder helps a struggling bar owner increase business by creating a designated smoking area inside the bar, circumventing smoking laws. His solution involves reclassifying the bar as a “theatrical performance” where smoking is allowed as part of the act. Patrons are told they are watching a play, though it’s just regular bar activity. The episode escalates when Fielder decides to refine this into an art piece, staging intricate performances based on the footage recorded during regular bar activity, down to the details of the patrons’ conversations, all under the guise of theater.

people in a room / everything happening at the same time (being able to zoom into people’s private interactions: maneuver volume levels, highlight certain areas)

play intermissions, retail store, orchestra/band?

My friend has a motorboat that he has had a strong connection with for most of his life. I wanted to help him say goodbye to the boat by making a piece that can take him back to it whenever he misses it after he sells it.

My plan is to make a multichannel audio capture (likely 8 channels) of the boat using a combination of recording techniques, including binaural microphone recording and the ambisonic recorder. A little background: binaural audio replicates a particular person’s point of view of hearing, while ambisonics captures a 360-degree sound field that is completely unbiased. The two sound quite different, but I think they are equally important in recreating the sound of a space. My idea is to amplify the recordings by panning them together spatially in various ways to create an effective soundscape. I will document this by comparing the outcomes of different mixdowns of the multichannel sound.
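A simple pairwise constant-power panning law is one option for placing individual recordings (for example, one channel of the binaural pair or a spot-mic track) within an 8-channel mix. This sketch assumes eight speakers evenly spaced in a ring, with speaker 0 at 0° and azimuth increasing toward speaker 1. It is not an ambisonic decoder, which would normally be handled by dedicated decoding software. The names `ring_pan` and `pan_track` are hypothetical.

```python
import math


def ring_pan(azimuth_deg, n_speakers=8):
    """Constant-power pairwise panning of a mono source onto a ring of equally
    spaced speakers (speaker i sits at i * 360/n degrees).
    Returns one gain per speaker; the squared gains sum to 1."""
    spacing = 360.0 / n_speakers
    pos = (azimuth_deg % 360.0) / spacing  # position measured in speaker units
    i = int(pos) % n_speakers  # speaker at or just before the source
    j = (i + 1) % n_speakers  # next speaker around the ring
    frac = pos - int(pos)  # 0 at speaker i, approaching 1 at speaker j
    gains = [0.0] * n_speakers
    gains[i] = math.cos(frac * math.pi / 2)
    gains[j] = math.sin(frac * math.pi / 2)
    return gains


def pan_track(mono, azimuth_deg, n_speakers=8):
    """Spread a mono track (list of samples) across the ring: one list per channel."""
    gains = ring_pan(azimuth_deg, n_speakers)
    return [[g * s for s in mono] for g in gains]
```

Keeping the squared gains summing to 1 holds perceived loudness roughly constant as a sound moves around the ring. That matters when comparing mixdowns by ear.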

I will be comparing the effectiveness of these techniques.

How? Boat trip: recording out on the boat with both microphones while we have a small, intimate chat about the boat and his history with it.

The hand is the extension of thought. In this project, I will continue to capture the movements of the hand. The hand is the sensory antenna through which we perceive the world. It is not merely a tool for executing tasks or achieving goals; it is an extension of our thoughts and intentions. Through the movement of the hand, thought is materialized and transformed into tangible interactions with the world. Thinking does not happen solely in the brain—hand and body movements are also part of the cognitive process.

In this project, I aim to capture the unconscious use of the hand, focusing on the small, involuntary movements that occur without deliberate intention. These subtle actions, which often go unnoticed, reflect the hand’s role as an extension of thought and perception.

Biting nails, rubbing hands, twisting fingers, clenching fists, tapping fingers on the table, touching the face, stroking the chin, rubbing the nose, caressing the wrist, or rubbing the arm…

First direction: a set scene. Invite others for a 30-minute one-on-one chat (topic undecided).

Second direction: take my camera into public space.

I want to explore individuals’ reactions of shock or fear when they encounter real-time, invasive surveillance, with private information verbally revealed by a projection of their own face (think deepfakes!). I’ll be using Golan’s Face Extractor & FaceWork p5.js code to capture the individual’s face in real time and manipulate its mouth movements into saying something they don’t believe in OR speaking their personal information.

I have been playing with projected installations where I project recorded faces onto a white sculpture of a face, and the face begins talking to the viewer. I love provoking a reaction (shock, confusion, any kind of reaction) that prompts the viewer to reflect on the message through a bit of realization or even fear…

I’ve modified Golan’s p5.js code to focus on manipulating the mouth movements. The code also keeps the face centered in the middle of the screen for easy, stabilized projection!!!!! SCORE!!!! Thank you Golan!

Face Extractor & FaceWork1

https://openprocessing.org/sketch/2066195

https://openprocessing.org/sketch/2219047

I’m not exactly sure what I want the individual’s face to say once I’m able to manipulate their mouths.

A long-time idea has been to have the projected face say something the person likely doesn’t agree with, but I think this idea requires more thought & attention than just a few weeks.

I’m leaning towards the face revealing the individual’s personal information of some sort:

Address, phone number (this would require me paying for some governmental record)

Instagram or Twitter

Or I let the individual type a secret into the computer, and the face verbally reveals it.

With all of these ideas, the main goal is to make sure I can capture the individual’s live reaction to their own projected face almost betraying them in some way.

I’m also unsure of HOW I need to manipulate my code.

I can either pre-program the mouth movements with pre-written phrases OR with private information I already know about the individual, and then just capture their reaction.

OR

I code a mouth alphabet and write sentences in real time. I’m not sure how fluid this would be.
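The “mouth alphabet” option could start as a lookup from typed letters to a handful of mouth shapes, producing a timeline that the p5.js sketch would then animate. This is a rough sketch with clear assumptions: the shape names, the letter groupings, and the 90 ms per letter are all hypothetical. Letters are also only a crude stand-in for speech sounds; a more faithful version would work from phonemes. Python is used here only to show the logic, and the same table would port directly to JavaScript.

```python
# Hypothetical mouth shapes and letter groupings, for illustration only.
MOUTH_SHAPES = {
    "closed": set("mbp"),
    "lip_bite": set("fv"),
    "rounded": set("ouwq"),
    "open": set("ah"),
    "wide": set("eiy"),
}


def letter_to_shape(ch):
    """Map one typed character to a mouth shape name."""
    ch = ch.lower()
    if ch.isspace():
        return "rest"  # pause between words
    for shape, letters in MOUTH_SHAPES.items():
        if ch in letters:
            return shape
    return "neutral"  # remaining consonants such as t, d, s, k


def text_to_mouth(text, ms_per_letter=90):
    """Turn typed text into a timeline of (start_ms, shape), merging
    consecutive repeats so the mouth holds a shape instead of re-triggering."""
    timeline = []
    t = 0
    for ch in text:
        if not (ch.isalpha() or ch.isspace()):
            continue  # skip punctuation and digits
        shape = letter_to_shape(ch)
        if not timeline or timeline[-1][1] != shape:
            timeline.append((t, shape))
        t += ms_per_letter
    return timeline
```

For example, `text_to_mouth("mop")` returns `[(0, 'closed'), (90, 'rounded'), (180, 'closed')]`. Merging repeated shapes keeps the mouth from twitching on double letters.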

I’m somewhat unsure of the capture method.

I think this project would most definitely need consent, either verbal or given by typing their name into the computer.

Verbal consent would likely come from friends, family, classmates, and professors. I’m not sure random viewers would be as willing, unless I just brought my computer to the Cut? Debating how to gather individuals for the capture experience.

Who does the work of sustaining and maintaining the landscapes that CMU uses to market and present itself? Can we call attention to and celebrate the work that landscape maintenance workers do by documenting and calling attention to the traces of their maintenance work?

I’m interested in finding ways of exploring these questions and would like to use time-lapse, tilt-shift photography to capture this.
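A time-lapse, tilt-shift shoot depends on two numbers: how often to take a frame, and how much to blur each part of the image. This sketch assumes 24 fps playback and a horizontal in-focus band, the classic tilt-shift look faked in post. The blur radii would then be applied with an image library such as Pillow’s `ImageFilter.GaussianBlur`. The functions and example numbers are hypothetical.

```python
def shot_interval(event_minutes, clip_seconds, fps=24):
    """Seconds between exposures so that an event lasting `event_minutes`
    plays back in `clip_seconds` at `fps` frames per second."""
    frames_needed = clip_seconds * fps
    return event_minutes * 60.0 / frames_needed


def tilt_shift_blur(height, focus_center, focus_half_width, max_radius):
    """Blur radius for each image row: zero inside the horizontal focus band,
    ramping linearly up to `max_radius` at the farthest image edge."""
    top_gap = focus_center - focus_half_width
    bottom_gap = (height - 1) - (focus_center + focus_half_width)
    span = max(top_gap, bottom_gap, 1)  # rows from band edge to farthest image edge
    radii = []
    for y in range(height):
        d = abs(y - focus_center) - focus_half_width  # rows outside the band
        radii.append(0.0 if d <= 0 else max_radius * min(1.0, d / span))
    return radii
```

For example, squeezing 30 minutes of mowing into a 10-second clip at 24 fps means one frame every `shot_interval(30, 10)` = 7.5 seconds. Placing the focus band where a worker is moving pulls the eye to them while the rest of the landscape reads as a miniature.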

*I am a little worried about the time constraint for this project. I wanted to contact CMU maintenance workers. I haven’t been able to intercept any maintenance workers on campus yet, and I have reached out to people via email, but no one has responded yet. I contacted Nate Holback, who I understand is the head gardener at CMU, but have not heard back (I will try to find other ways of contacting people).*

I described my plan to Nate in this way:

Dear Nate,

I hope you’re enjoying the beautiful fall season. I’m Kim, a graduate design student who is also a landscape architect. I have long admired the often-unseen work of the campus landscape maintenance team, and I want to bring more visibility to it through an experimental art project for one of my classes.

For this class project, I’m using time-lapse, tilt-shift photography to create an almost toy-like, miniature view of the campus landscapes (you can see a fun example of this here). My idea is to capture the movements of members of the maintenance team (mowing, pruning, mulching, or even watering plants), highlighting the subtle, repetitive work that keeps the campus looking beautiful but often goes unnoticed. No faces would be visible due to the tilt-shift effect and the camera’s distance, but I believe it’s important to have the consent of the workers involved, since they’d be the focal point of the scenes.

I’d love your advice on this. Are there particular people or landscapes on campus you think would be ideal for documenting? And would you be able to point me toward the best contacts to start a conversation about consent? I want to ensure that anyone who may be a focal point is comfortable with the project. I will be beginning the project at the end of this week (and may shift plans according to your response).

Thanks for your time! I really appreciate any thoughts or guidance you can offer.

Kind regards,

Kim

______

If this doesn’t work, I’m planning to continue my fun microscopy explorations, and I might change my subject to a bug in time.

Here’s one capture (I have more to share).