Objective:

To compress the decay of flowers into a single image using the slit-scan technique, creating a typology that visually reconstructs the process of decay over time.

I’ve always been fascinated by the passage of time and how we can represent it visually. Slit-scan photography is typically used to capture fast motion, often with quirky or distorted effects. My goal, however, was to adapt the technique to a much slower process: decay. When a slit-scan is applied to time-lapse footage, each “slit” comes from a different point in a long stretch of time. Compiled together, the slits reconstruct an object as it changes over time.

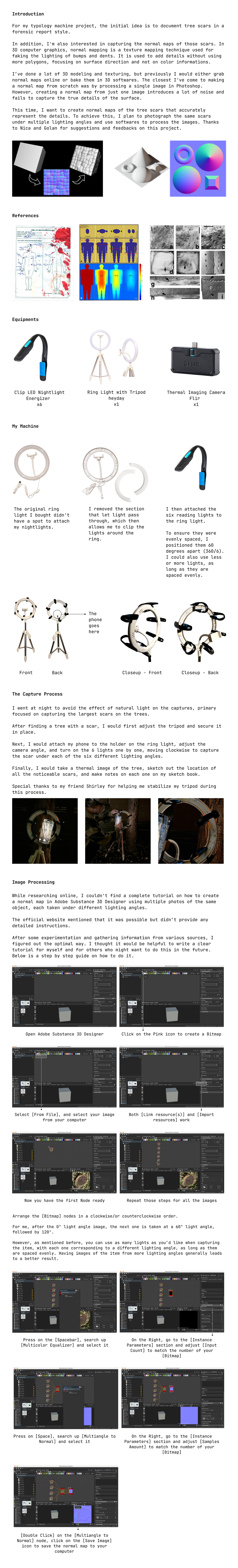

Process

Why Flowers?

I chose flowers as my subject because their relatively short lifespan makes them ideal for capturing visible transformations within a short period. The changes in their shape and contour as they decay fit perfectly with my goal of visualizing time through decay. I initially considered using food but chose flowers instead to avoid insect problems in my recording space.

Time-Lapse Filming

The setup required a stable environment with constant lighting, a still camera, and no interruptions. I found an unused room in an off-campus drama building. It was perfect because it had once been a darkroom. Its ceiling had collapsed in the spring, so the room is rarely used, which meant my setup could stay undisturbed for days.

I used Panasonic G7 cameras, which I sourced from the VMD department. These cameras have built-in time-lapse functionality with customizable intervals. I connected the cameras to continuous power and used the same settings (shutter speed, white balance, etc.) on every camera.

The cameras were set to take a picture every 15 minutes over a 7-day period, resulting in 672 images. Not all recordings were perfect, as some flowers shifted during the decay process.
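The image count follows directly from the capture interval: 7 days is 10,080 minutes, and 10,080 / 15 = 672. The minimal sketch below is in Java, the language Processing is built on. It assumes the camera fires once at the end of every full interval, which is consistent with the 672 figure. `CaptureMath` and `imageCount` are hypothetical names.

```java
// Sketch: how many photos a fixed-interval time-lapse produces.
// Assumption: one photo per full interval (no extra photo at t = 0).
final class CaptureMath {

    /** Number of photos taken over `days` days at one photo every `intervalMinutes`. */
    static int imageCount(int days, int intervalMinutes) {
        int totalMinutes = days * 24 * 60;      // 7 days -> 10,080 minutes
        return totalMinutes / intervalMinutes;  // 10,080 / 15 -> 672 photos
    }

    public static void main(String[] args) {
        System.out.println(imageCount(7, 15));  // prints 672
    }
}
```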

Making Time-Lapse Videos

I imported the images into Adobe Premiere, set each image to a duration of 12 frames, and compiled them into a video at 24 frames per second. This frame rate and duration gave me flexibility in controlling the slit-scan speed. I shot the images in a 4:3 aspect ratio at 4K resolution but resized them to 1200×900 to match the canvas size.
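Each photo is held for 12 frames at 24 fps, so it stays on screen for half a second. Advancing the video by 12 frames therefore always advances the capture clock by exactly one 15-minute interval. The following sketch relates video frames to real time. It assumes all 672 photos were kept in the sequence, and the class and method names are hypothetical.

```java
// Sketch: relating frames of the assembled time-lapse video to real capture time.
// Assumption: every photo is held for the same number of video frames (12 here).
final class TimelapseTimeline {
    static final int FPS = 24;               // video frame rate
    static final int FRAMES_PER_PHOTO = 12;  // each photo held for 12 frames
    static final int MINUTES_PER_PHOTO = 15; // camera capture interval

    /** Total number of video frames for a given number of photos. */
    static int totalFrames(int photos) {
        return photos * FRAMES_PER_PHOTO;
    }

    /** Running time of the video in seconds. */
    static double durationSeconds(int photos) {
        return totalFrames(photos) / (double) FPS;
    }

    /** Minutes after the first photo at which the photo shown at `frame` was taken. */
    static int captureMinutesAtFrame(int frame) {
        int photoIndex = frame / FRAMES_PER_PHOTO; // which held photo is on screen
        return photoIndex * MINUTES_PER_PHOTO;
    }

    public static void main(String[] args) {
        System.out.println(totalFrames(672));          // 8064
        System.out.println(durationSeconds(672));      // 336.0
        System.out.println(captureMinutesAtFrame(12)); // 15
    }
}
```

Because photos are held rather than interpolated, any frame from 0 to 11 maps to the same photo. A scan step smaller than 12 frames can therefore sample the same moment more than once.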

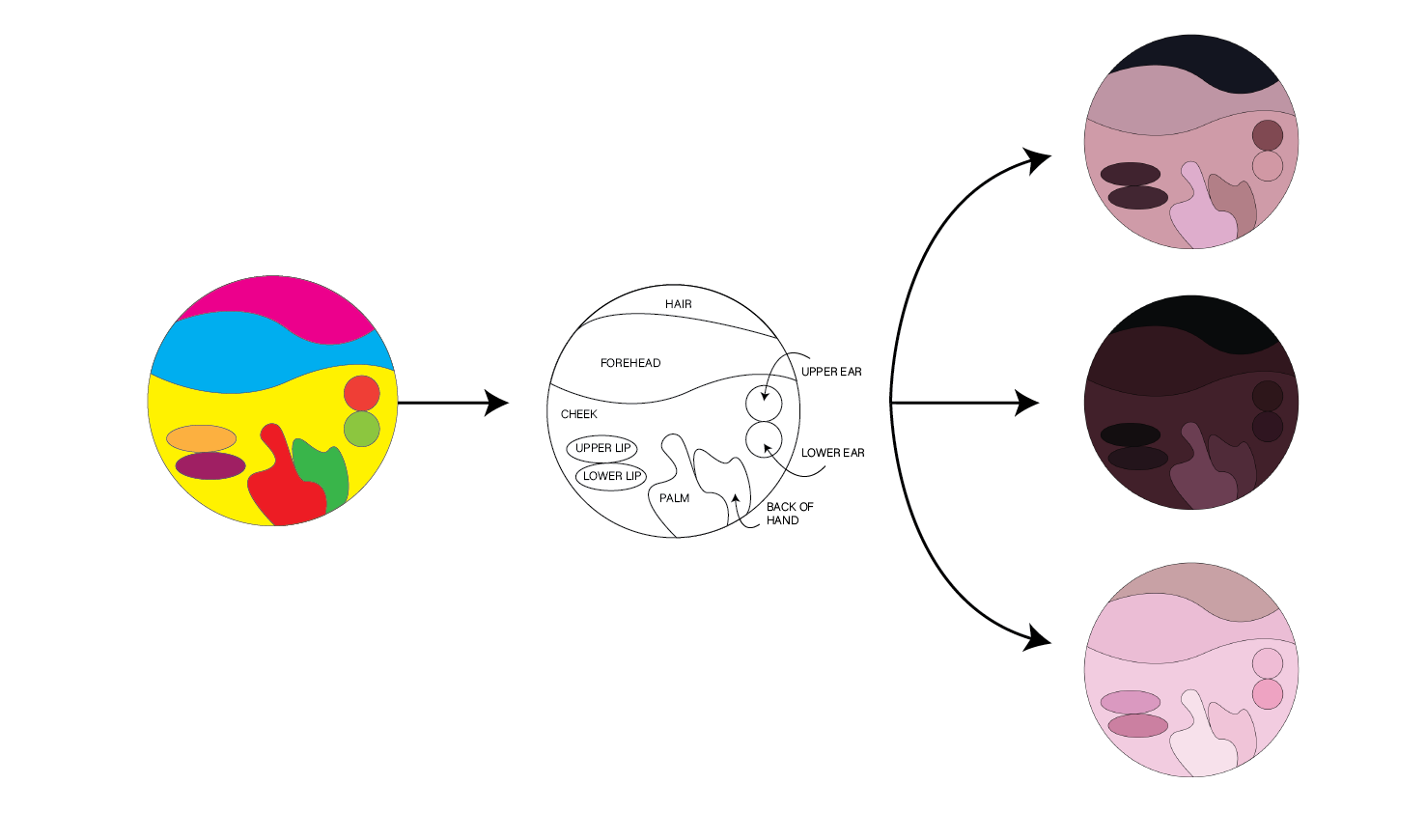

Slit-Scan

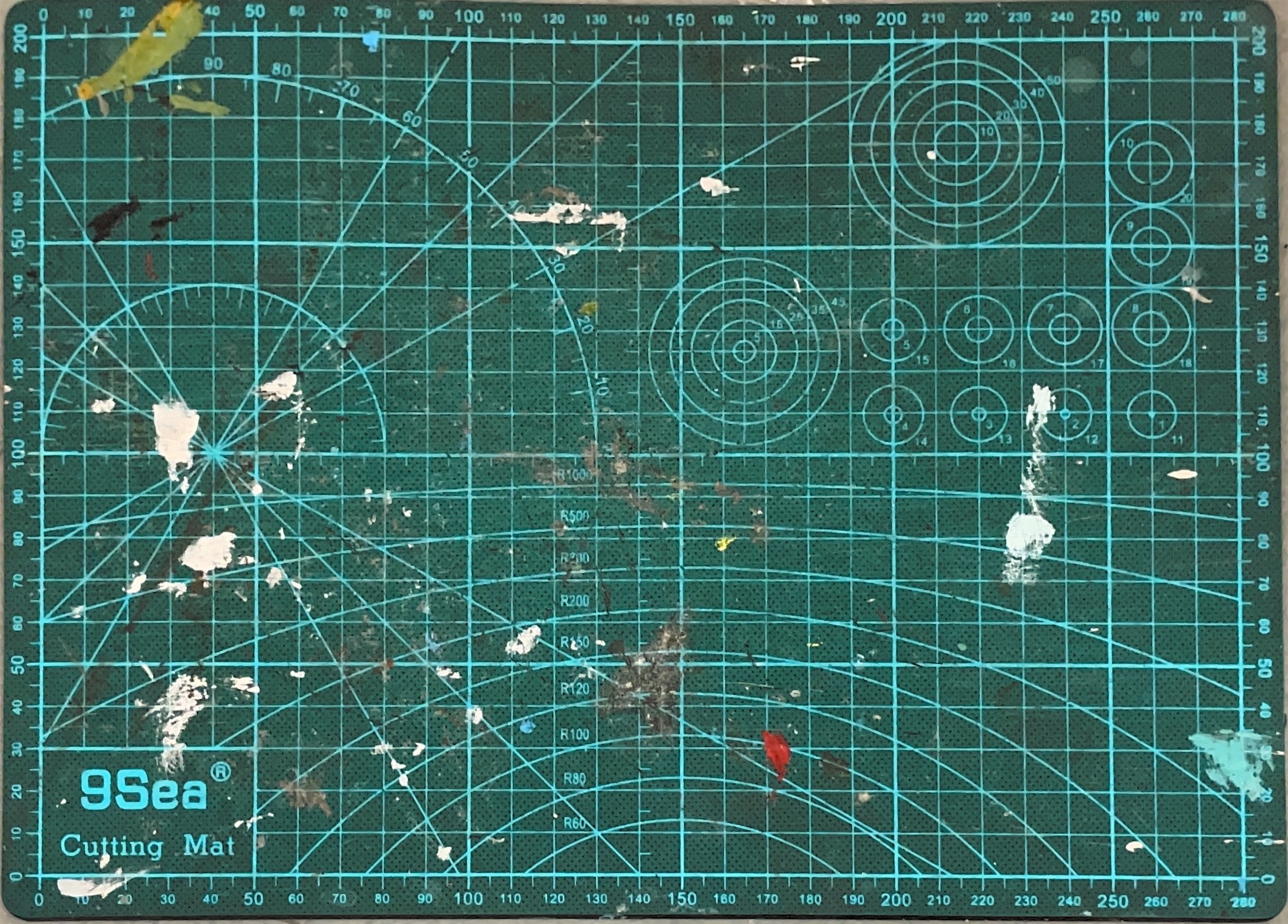

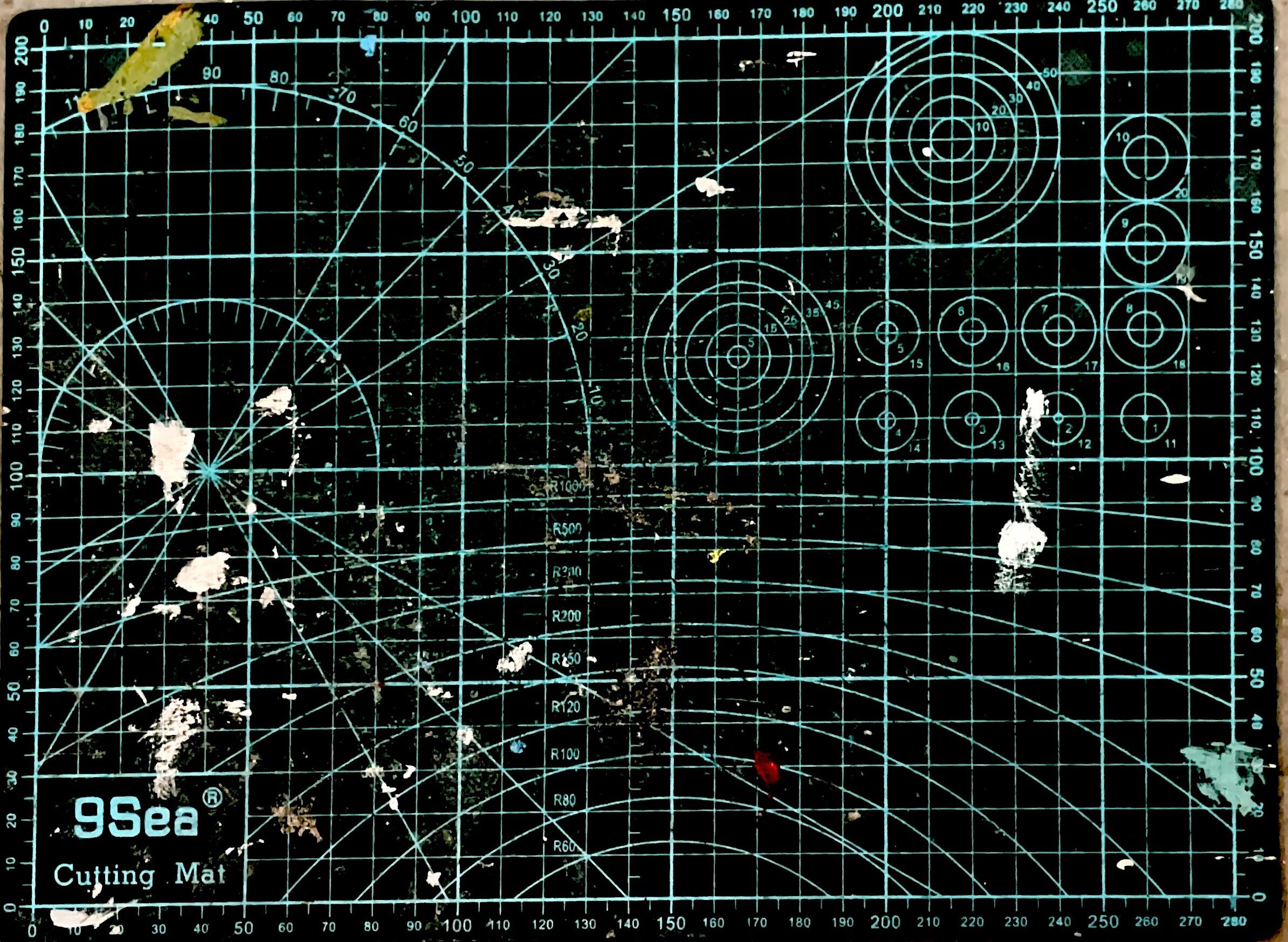

Using Processing, I automated the slit-scan process. Special thanks to Golan for helping with the coding.

Key Variables in the Code:

- nFramesToGrab: Controls the number of frames skipped before grabbing the next slit (set to 12 frames in this case, equating to 15 minutes).

- sourceX: The starting X-coordinate in the video, determining where the slit is pulled from.

- X: The position where the slit is drawn on the canvas.
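The Processing sketch itself isn't reproduced here. The plain-Java version below is a rough illustration of how the three variables could interact. It assumes the video is already decoded into an array of frames indexed `[frame][row][column]`, that slits are one pixel wide, and that `sourceX` equals `x` (each slit is drawn where it was taken). `SlitScan` and `scan` are hypothetical names.

```java
// Sketch of a slit-scan loop in plain Java (not the original Processing code).
// Assumptions: frames[t][y][x] holds decoded pixels, slits are 1 pixel wide,
// and sourceX == x, so each slit is drawn at the column it was pulled from.
final class SlitScan {

    /**
     * Builds one image by copying a single column from successive frames.
     * @param frames        decoded video frames, indexed [frame][row][column]
     * @param nFramesToGrab frames to advance between slits (12 = one photo = 15 min)
     * @param rightToLeft   true to start at the right edge and move left
     */
    static int[][] scan(int[][][] frames, int nFramesToGrab, boolean rightToLeft) {
        int height = frames[0].length;
        int width = frames[0][0].length;
        int[][] canvas = new int[height][width]; // columns never reached stay 0

        int frameIndex = 0;
        int x = rightToLeft ? width - 1 : 0;     // where the slit is drawn on the canvas
        int step = rightToLeft ? -1 : 1;

        // Stop when the canvas is full or the video runs out of frames.
        while (x >= 0 && x < width && frameIndex < frames.length) {
            int sourceX = x;                     // column the slit is pulled from
            for (int y = 0; y < height; y++) {
                canvas[y][x] = frames[frameIndex][y][sourceX];
            }
            x += step;                           // next canvas column
            frameIndex += nFramesToGrab;         // jump ahead in time
        }
        return canvas;
    }
}
```

In this sketch, a larger `nFramesToGrab` makes each slit cover more time, so the same canvas width spans a longer part of the decay.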

For the first scan, I set the direction from right to left. As the X and sourceX coordinates decrease, the image is reconstructed from the decay sequence, with each slit representing a 15-minute interval in the flower’s life cycle. In this case, the final scan used approximately 3,144 frames, capturing about 131 hours (roughly 5.5 days) of the flower’s decay.
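To see which moment each slit of a right-to-left scan comes from, it helps to number the slits from the right edge. This sketch assumes one-pixel slits, `sourceX` equal to `x`, 12 video frames per held photo, and a 15-minute capture interval. `RightToLeftSchedule` and its methods are hypothetical names.

```java
// Sketch: which moment each slit of a right-to-left scan is pulled from.
// Assumptions: 1-pixel slits, sourceX == x, 12 video frames per held photo,
// 15 minutes between photos. Names are illustrative only.
final class RightToLeftSchedule {
    static final int FRAMES_PER_PHOTO = 12;
    static final int MINUTES_PER_PHOTO = 15;

    /** Canvas column (and sourceX) of slit k, counting from the right edge. */
    static int columnOfSlit(int k, int canvasWidth) {
        return canvasWidth - 1 - k;
    }

    /** Video frame that slit k is pulled from. */
    static int frameOfSlit(int k, int nFramesToGrab) {
        return k * nFramesToGrab;
    }

    /** Minutes after the first photo at which slit k's photo was taken. */
    static int minutesOfSlit(int k, int nFramesToGrab) {
        return frameOfSlit(k, nFramesToGrab) / FRAMES_PER_PHOTO * MINUTES_PER_PHOTO;
    }

    public static void main(String[] args) {
        for (int k = 0; k < 3; k++) {
            System.out.printf("slit %d -> x=%d, frame %d, %d min%n",
                    k, columnOfSlit(k, 1200), frameOfSlit(k, 12), minutesOfSlit(k, 12));
        }
    }
}
```

With `nFramesToGrab = 12`, consecutive slits are exactly one photo (15 minutes) apart. With a smaller value such as 6, neighbouring slits can come from the same held photo.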

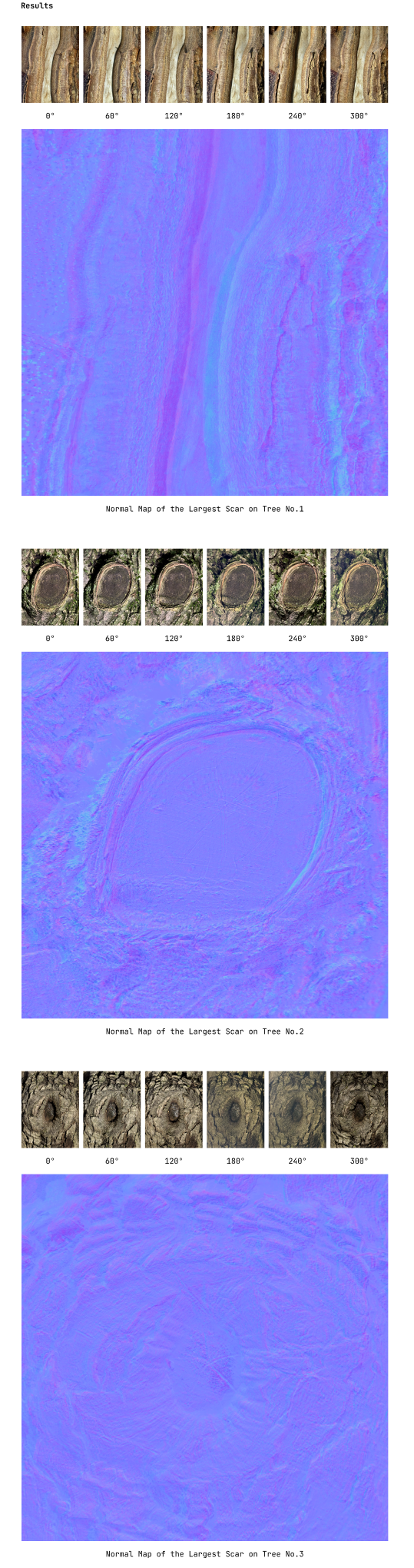

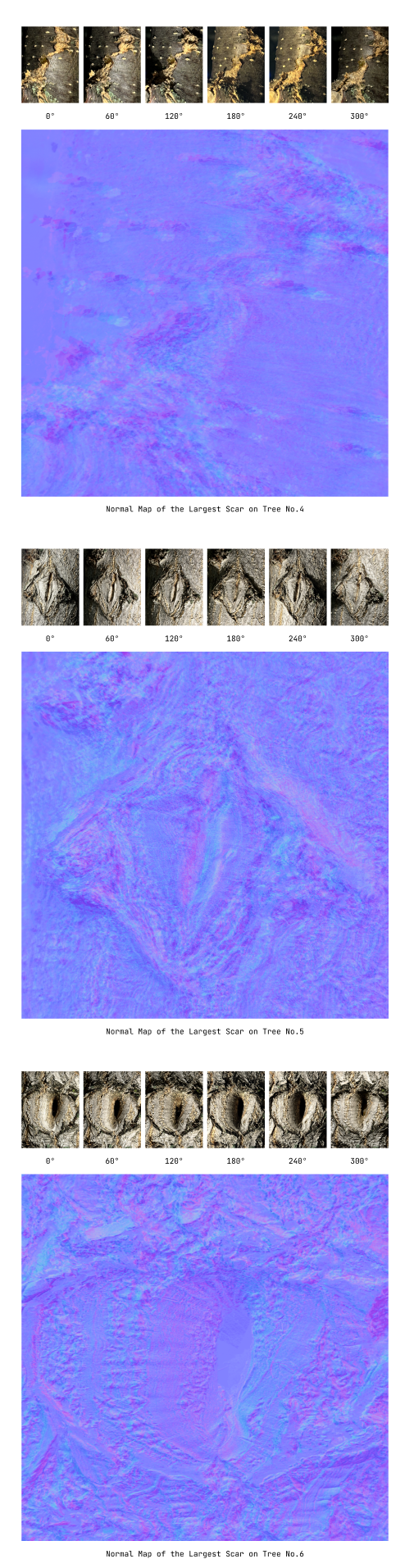

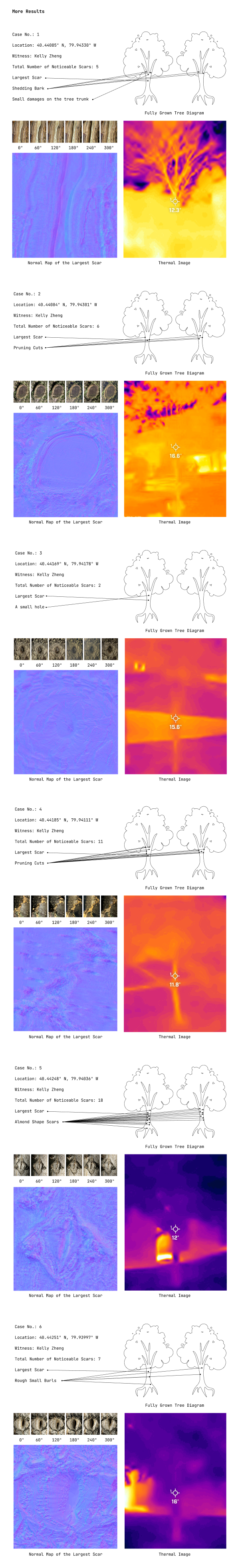

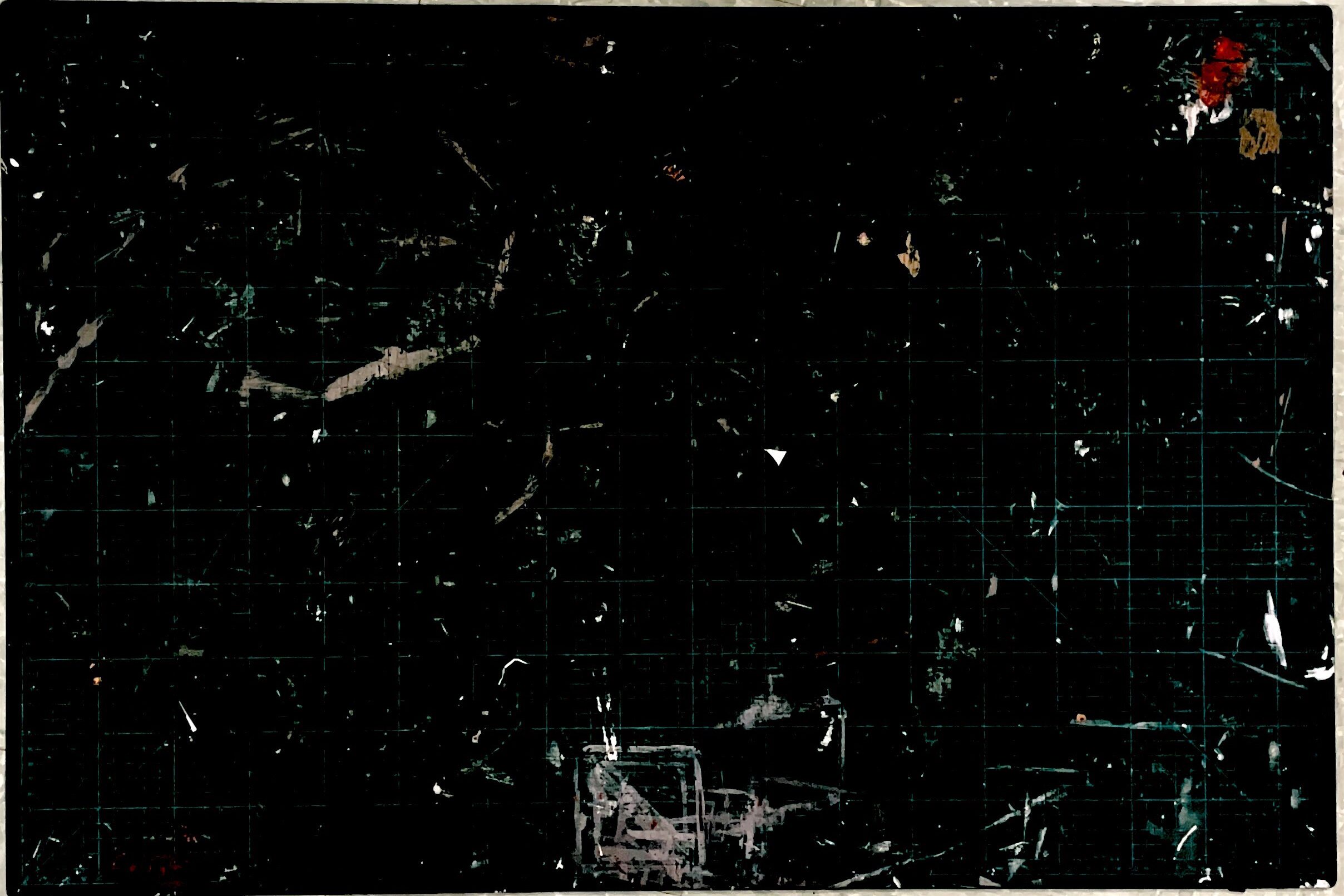

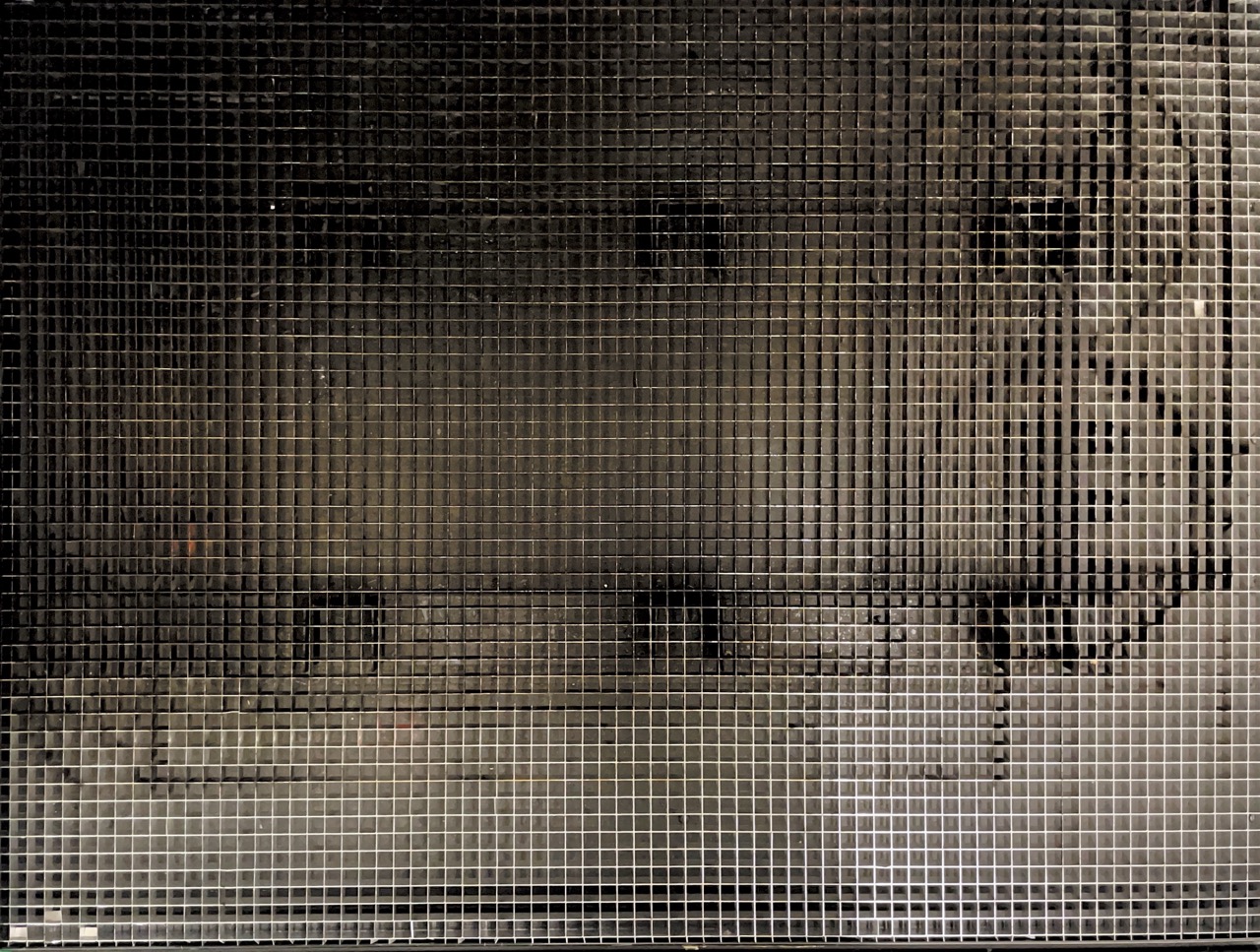

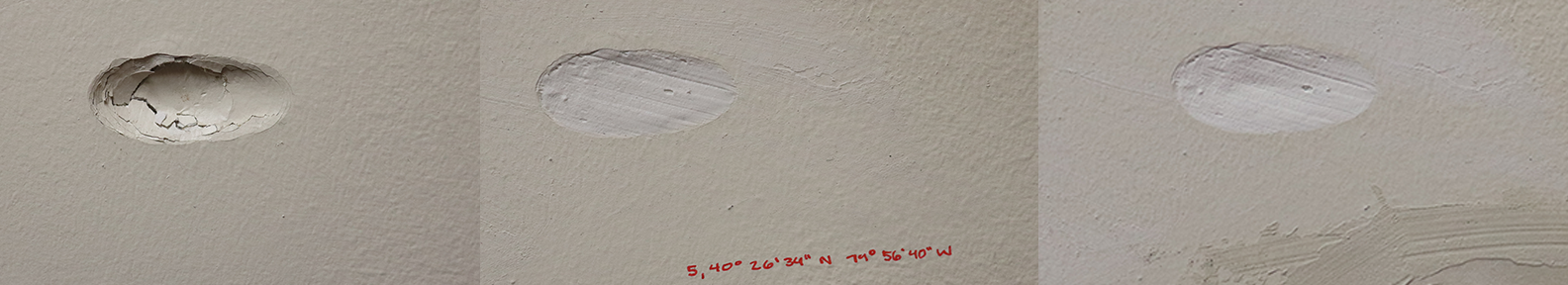

Slit-Scan Result:

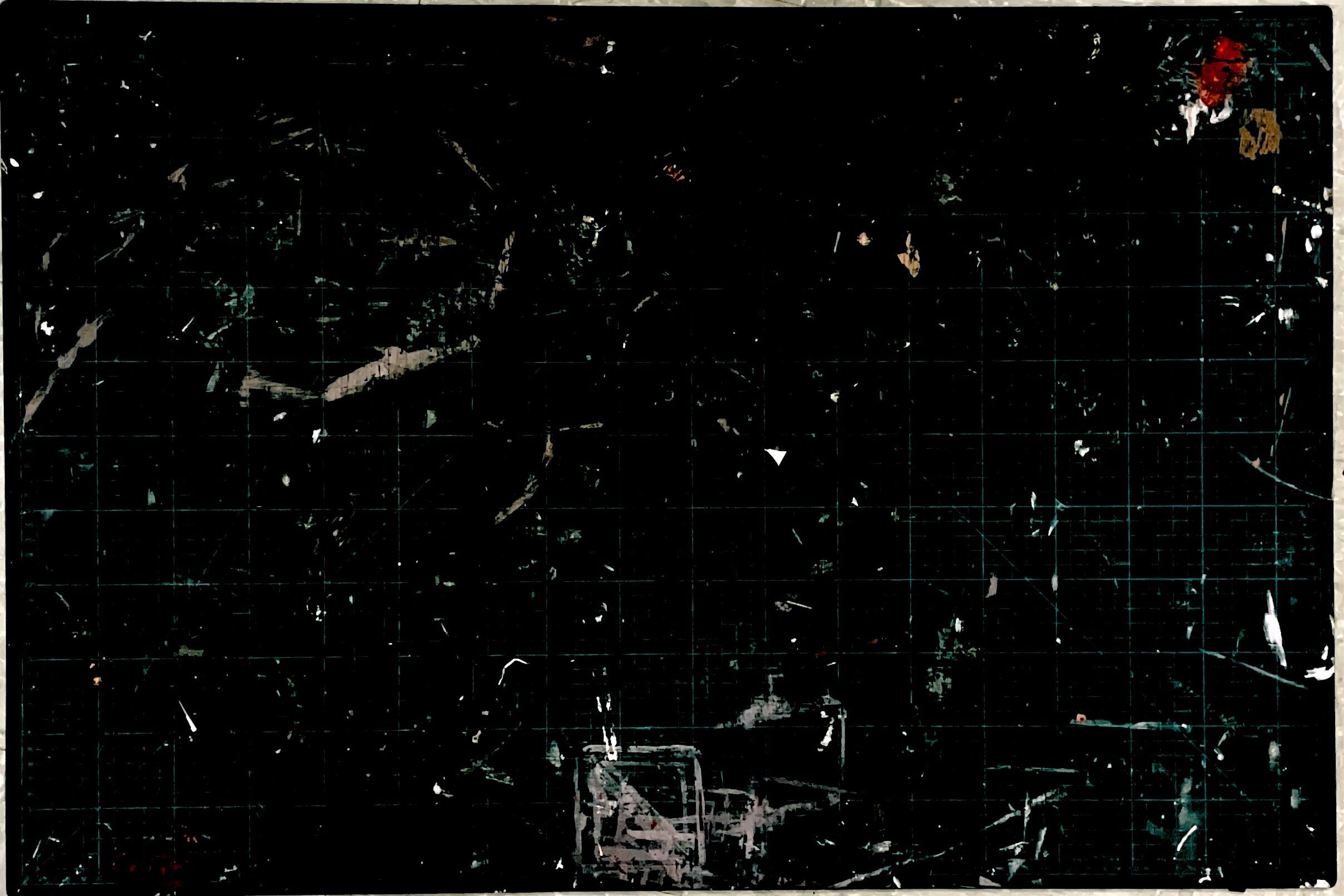

- Hydrangea Right-to-Left: The scan proceeds from right to left, taking a slit every 12 frames of the video, so each slit pulls a different moment from the flower’s decay. The subtle, gradual transformation is captured in a single frame, offering a timeline of the flower’s life compressed into one image.

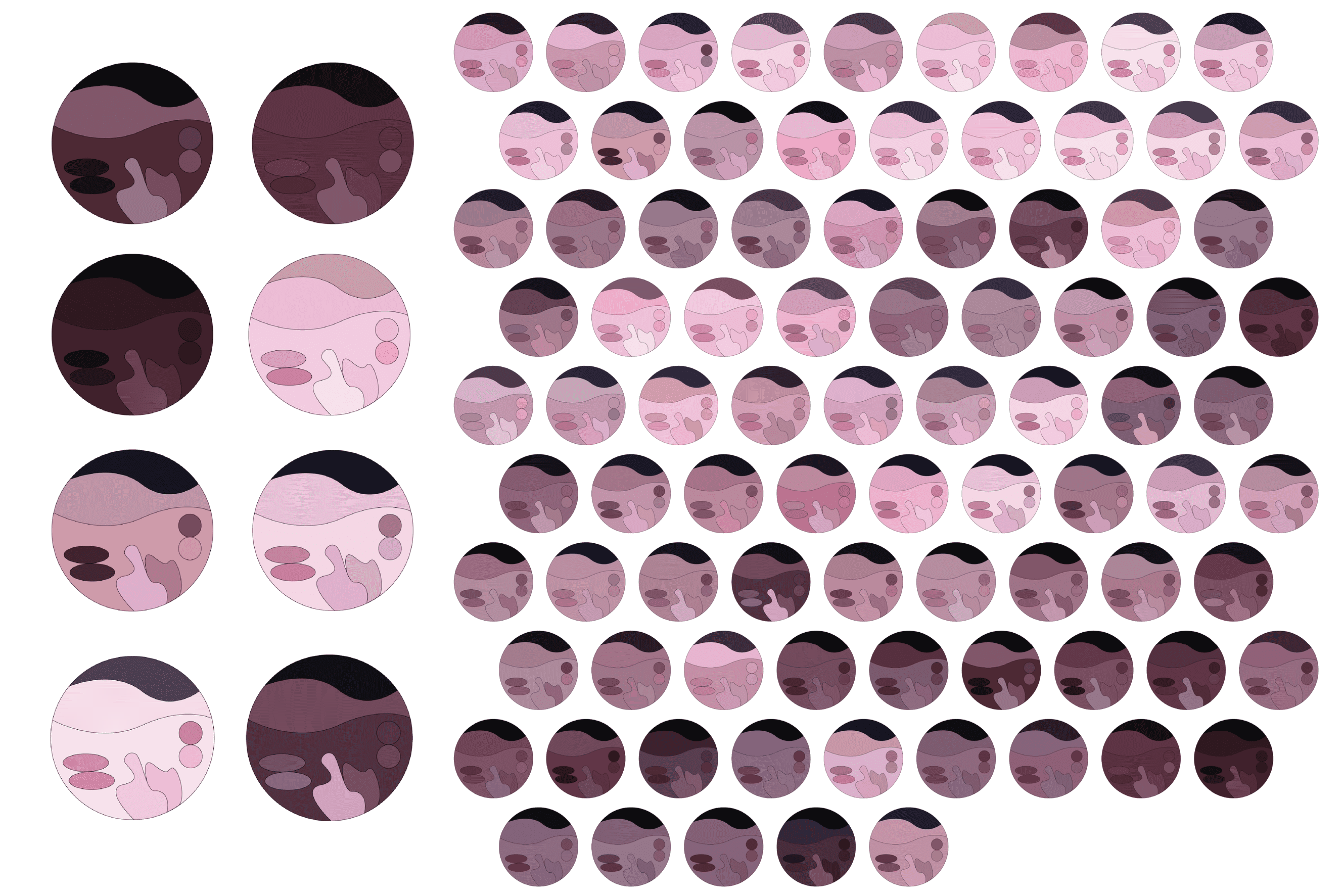

Expanding the Typology

I experimented with different scan directions and speeds to see how they changed the visual outcome. Beyond right-to-left scans, I tested left-to-right scans, as well as center-out scans, where the slits expand from the middle of the image toward the edges, creating new ways to compress time into form.
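A center-out scan changes only the order in which columns are filled. The sketch below draws a pair of mirrored columns at each step, starting at the middle and moving outward. It assumes both slits of a pair are pulled from the same frame at their own column and that slits are one pixel wide. The write-up doesn't specify these details, and `CenterOutScan` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.List;

// Sketch of a center-out slit-scan in plain Java (not the original Processing code).
// Assumptions: frames[t][y][x] holds decoded pixels, slits are 1 pixel wide,
// and both slits of a pair come from the same frame, each at its own column.
final class CenterOutScan {

    /** Columns drawn at each step, starting at the middle and moving outward. */
    static List<int[]> centerOutSteps(int width) {
        List<int[]> steps = new ArrayList<>();
        int left;
        int right;
        if (width % 2 == 1) {
            int center = width / 2;
            steps.add(new int[]{center});          // odd width: single middle column first
            left = center - 1;
            right = center + 1;
        } else {
            left = width / 2 - 1;                  // even width: two middle columns first
            right = width / 2;
        }
        while (left >= 0 && right < width) {
            steps.add(new int[]{left, right});
            left--;
            right++;
        }
        return steps;
    }

    static int[][] scan(int[][][] frames, int nFramesToGrab) {
        int height = frames[0].length;
        int width = frames[0][0].length;
        int[][] canvas = new int[height][width];
        List<int[]> steps = centerOutSteps(width);
        for (int k = 0; k < steps.size(); k++) {
            int frameIndex = k * nFramesToGrab;     // outer columns come from later frames
            if (frameIndex >= frames.length) break; // video ran out; outer columns stay 0
            for (int x : steps.get(k)) {
                for (int y = 0; y < height; y++) {
                    canvas[y][x] = frames[frameIndex][y][x];
                }
            }
        }
        return canvas;
    }
}
```

Because the pair grows outward one column per side per step, a center-out scan crosses the canvas in half as many steps as a one-directional scan. Each step therefore covers twice as much of the canvas per unit of time.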

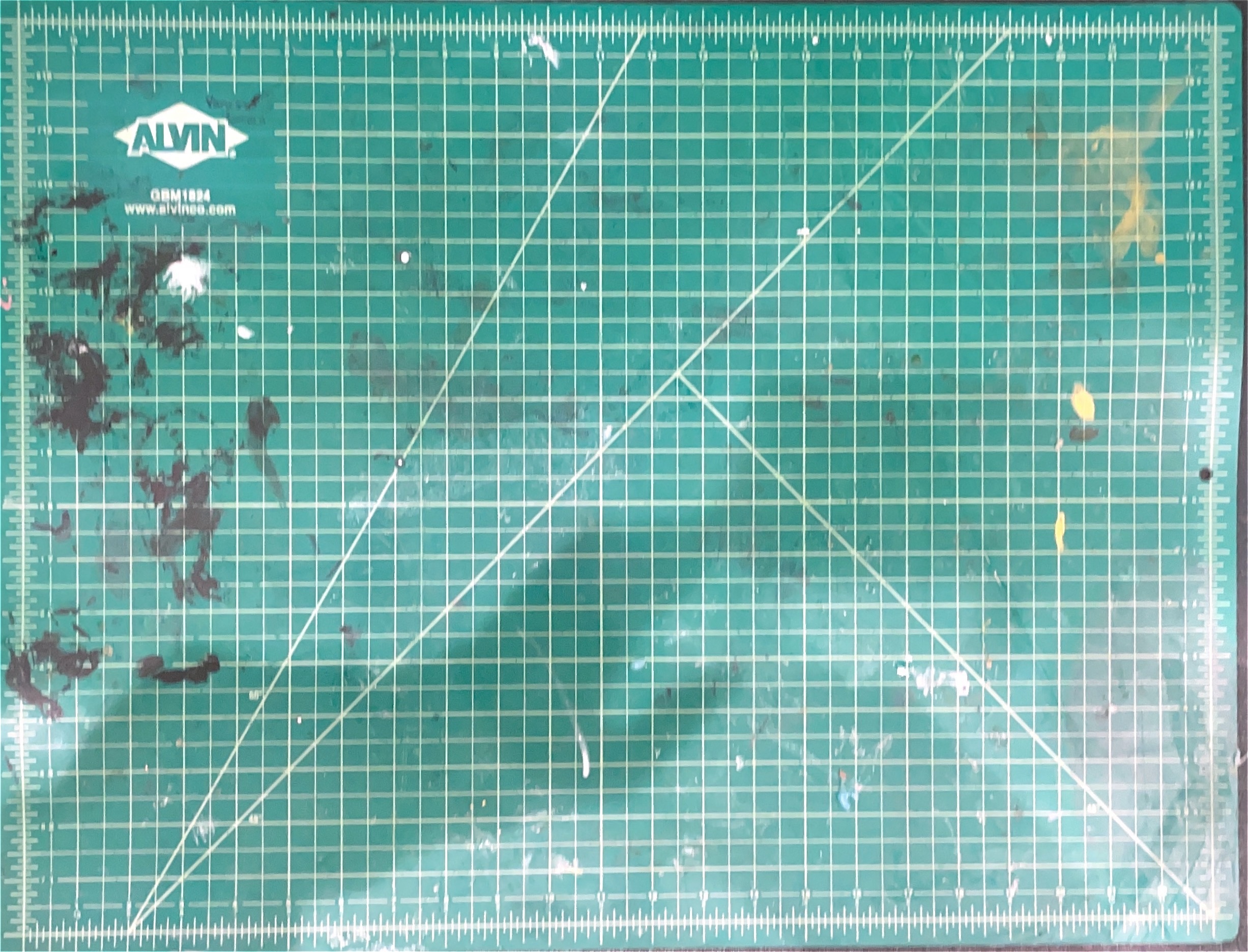

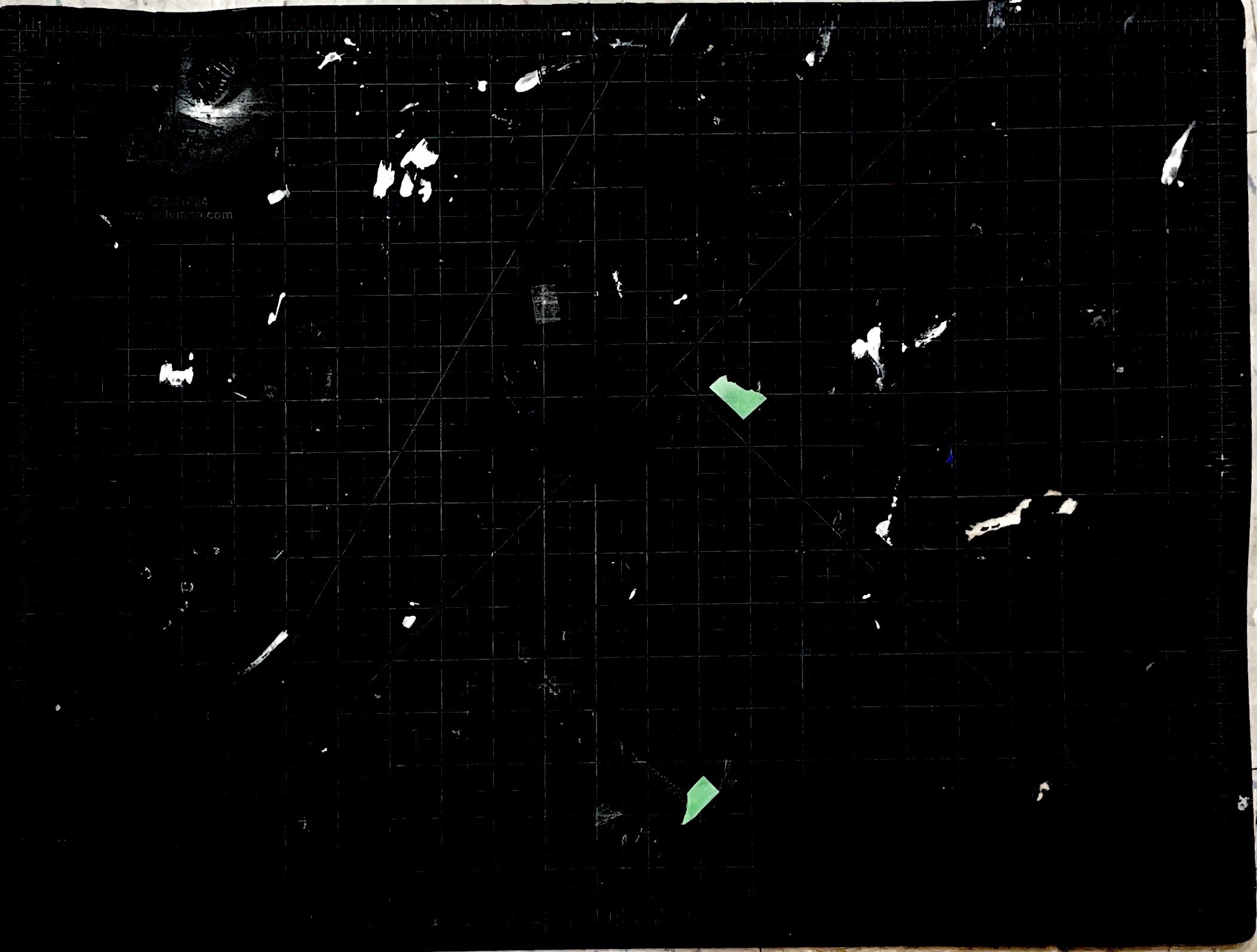

- Hydrangea Left-to-Right / every 6 frames

- Hydrangea Center Out: This version creates a visual expansion from the flower’s center as it decays, offering an interesting play between symmetry and time. The top image scans every 30 frames and the bottom image every 36 frames, which allows an interesting comparison between the two scanning speeds.

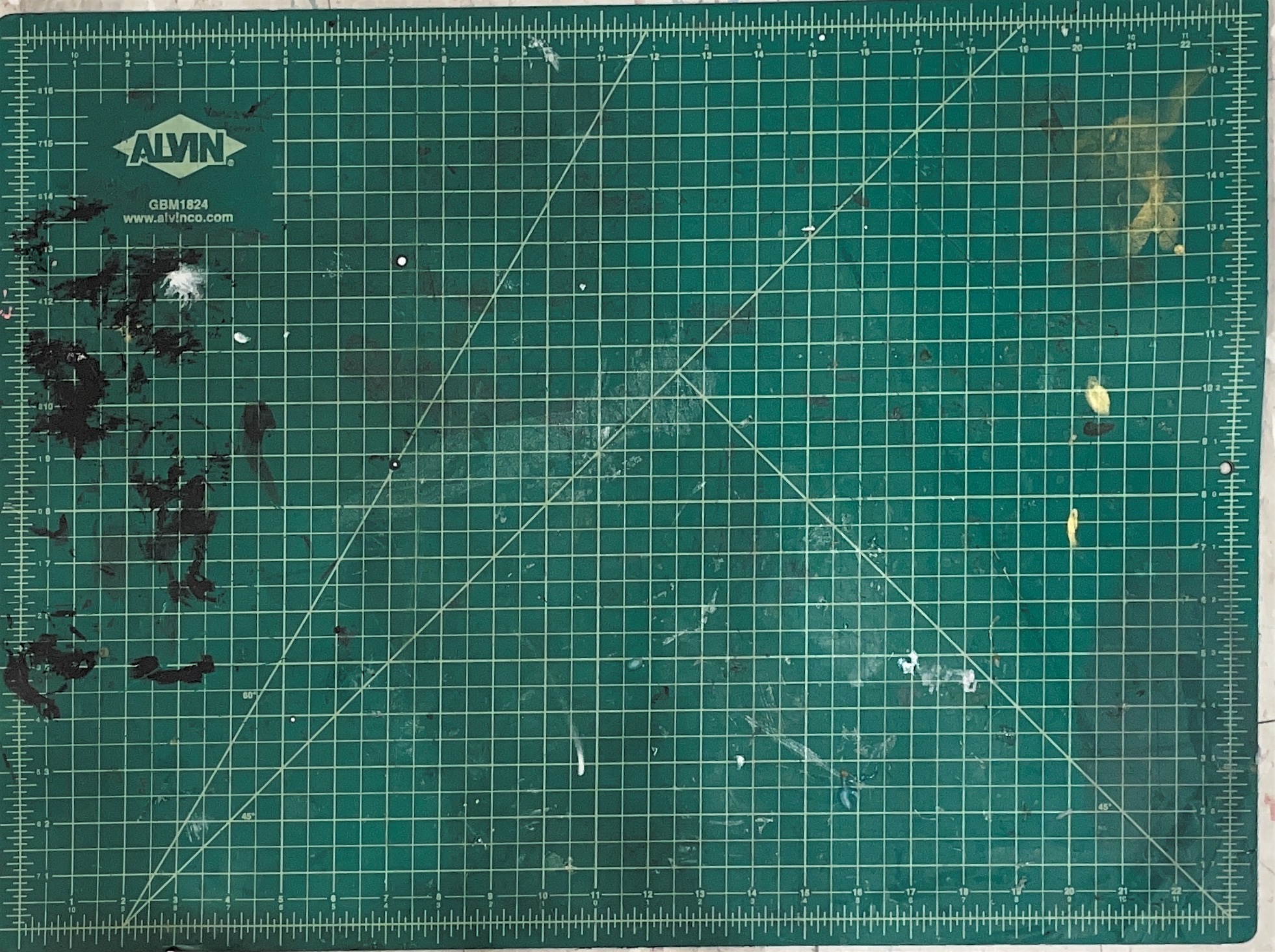

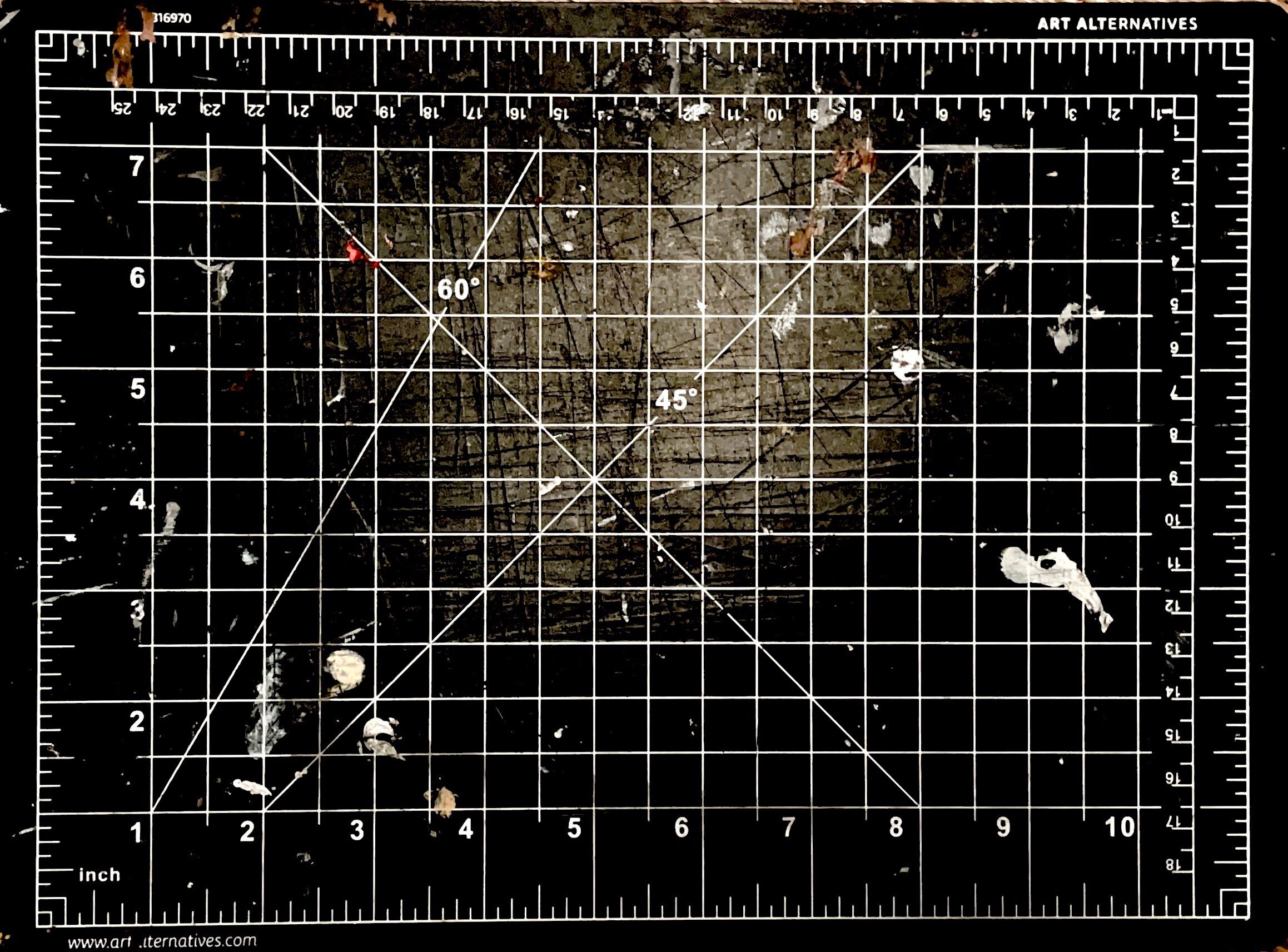

- Sunflower Center Out / every 30 frames

- The sunflower falling out of the frame created this stretching, warping effect.

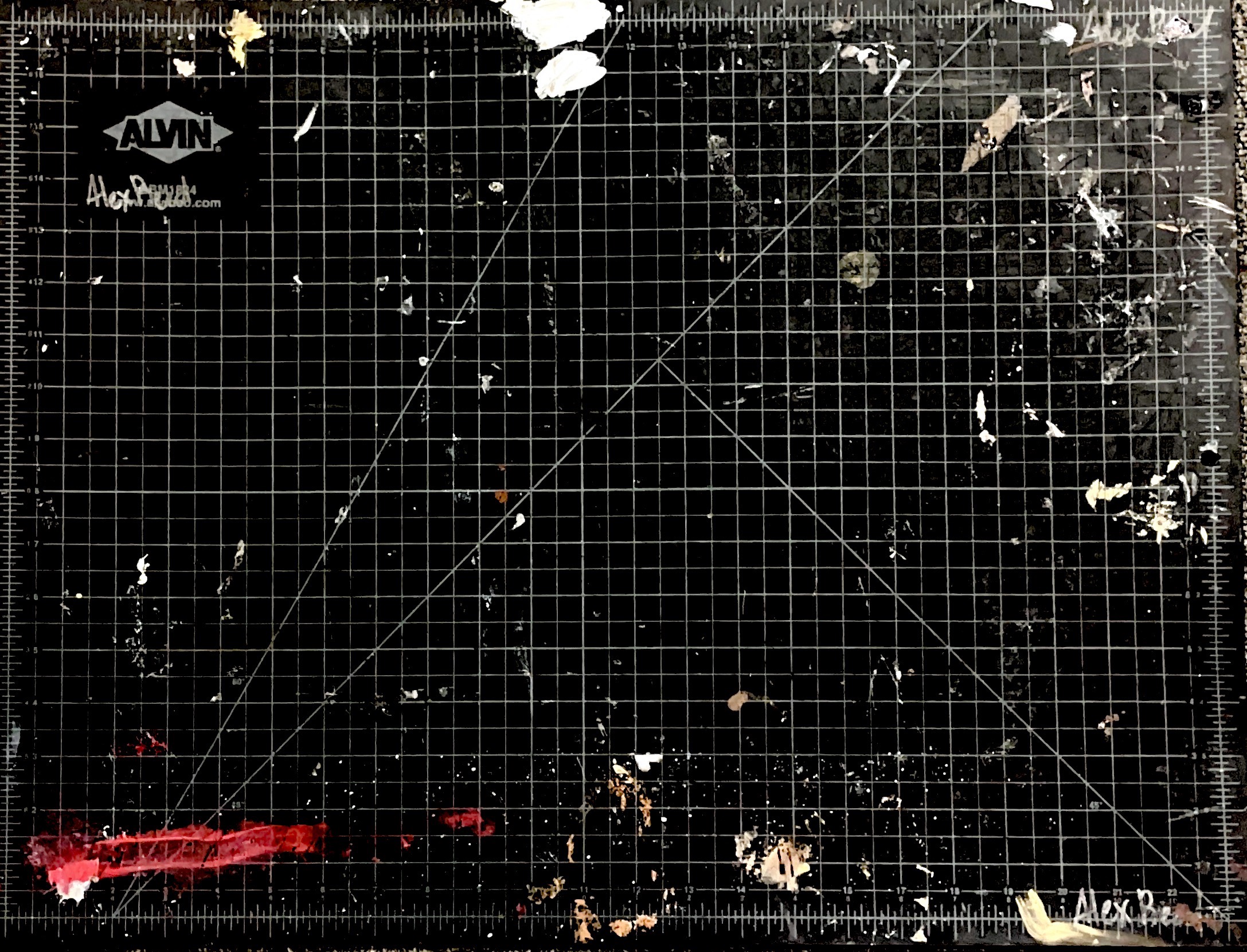

- Roses Left-to-Right / every 12 frames

- Roses Right-to-Left / every 18 frames

- While I was filming the time-lapse, the roses gradually fell toward the camera. Because of limited space and lighting, I had set up the camera with a shallow depth of field and didn’t anticipate the fall, which resulted in some blurry footage. However, I think this unintended blur adds a unique artistic quality to the final result.

Conclusion

By adjusting the scanning speed and direction, I was able to create a variety of effects, turning the decay process into a typology of time-compressed visuals. This method can be applied to any time-lapse video to reveal the subtle, gradual changes in the subject matter. Additionally, by incorporating a Y-coordinate, I could extend this typology even further, allowing for multidirectional scans or custom-shaped images.
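One way to read "incorporating a Y-coordinate" is as a per-pixel time map. In this approach, the source frame depends on both x and y, so horizontal, diagonal, or radial scans become special cases. This is an assumption about a possible extension, not something the project implemented. `TimeMapScan` is a hypothetical name.

```java
import java.util.function.IntBinaryOperator;

// Sketch: generalizing the slit-scan so the source frame depends on both x and y.
// Assumption: this per-pixel "time map" is one possible extension, not the
// project's method. frames[t][y][x] holds decoded pixels.
final class TimeMapScan {

    /** For every canvas pixel, copy the same pixel from the frame chosen by timeMap(x, y). */
    static int[][] scan(int[][][] frames, IntBinaryOperator timeMap) {
        int height = frames[0].length;
        int width = frames[0][0].length;
        int[][] canvas = new int[height][width];
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                int t = timeMap.applyAsInt(x, y);
                t = Math.max(0, Math.min(frames.length - 1, t)); // clamp to the video's length
                canvas[y][x] = frames[t][y][x];
            }
        }
        return canvas;
    }
}
```

For example, passing `(x, y) -> (x + y) * 12` gives a diagonal scan from the top-left corner, and `(x, y) -> y * 12` gives a top-to-bottom scan. A function of the distance from the center would give a radial scan.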

One challenge was keeping the flowers in the same position for 7 days, as any movement would result in distortions during the scan. Finding the right scanning speed also took some experimentation—it depended heavily on the decay speed and the changes in the flowers’ shapes over time.

Despite these challenges, the slit-scan process succeeded in capturing a beautiful, visual timeline. It condenses time into a single frame, transforming the subtle decay of flowers into an artistic representation of life fading away. This project not only visualizes time but also reshapes it into a new typology—a series of compressed images that track the natural decay of organic forms.

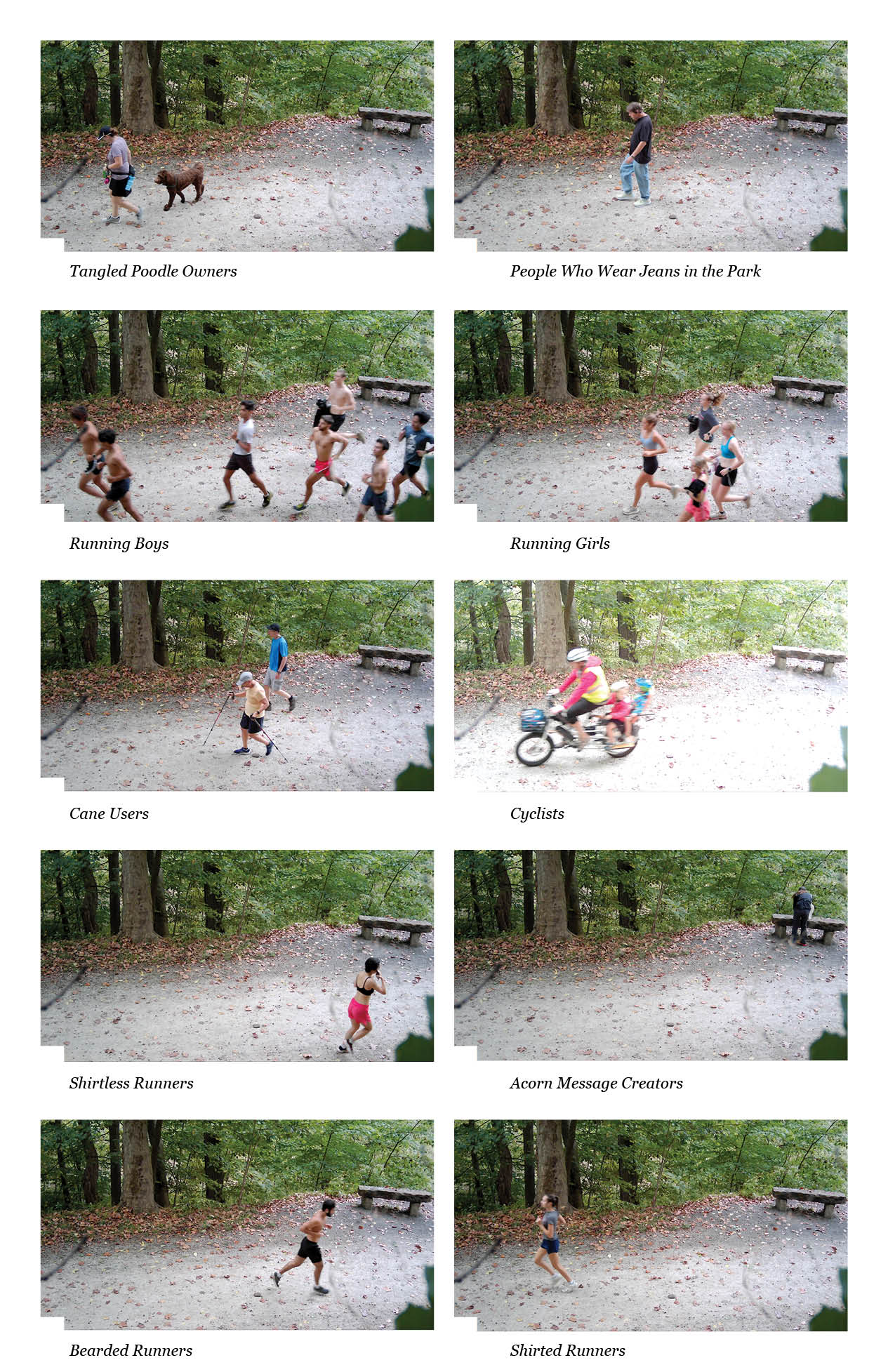

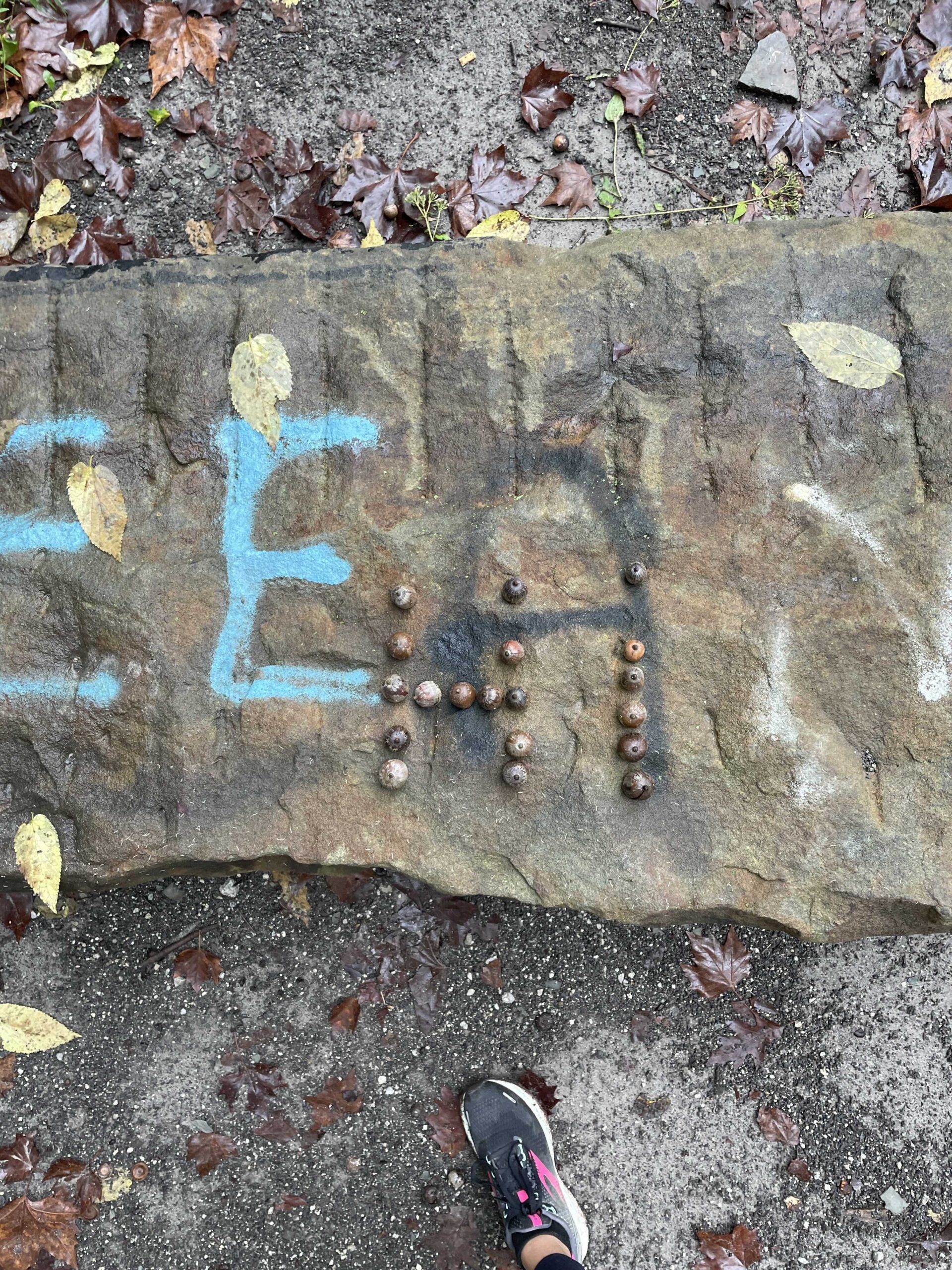

The acorn message that someone placed on the bench on Tuesday morning.