CW: Animal Death

I document roadkill encountered on routes driven in my car through photos and geolocation, and I burn digital incense for these lives sacrificed to the current automotive-focused vision of human transit.

Inspiration:

From last summer through fall, I spent a lot of time driving long distances, and I was struck by the amount of roadkill I saw. It strikes me every time I'm on the road because of several projects I've worked on over the past two years about the consequences of the Anthropocene and the reconfiguration of our transit infrastructure for automobility.

Workflow

Equipment:

- Two GoPro cameras (64 GB+ memory cards recommended), two GoPro mounts, and two USB-C cables for charging

- Tracking website: a webpage to track the locations where you've burned digital incense for dead animals. I made this webpage because I needed a faster way to mark geolocation (GPS logging apps didn't allow left/right differentiation, and voice commands were too slow) and to indicate whether to look left or right in the GoPro footage. A webpage also meant I could easily pull it up on my phone without additional setup.

- Blood, sweat, and tears?
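As a rough illustration of what each tap on the tracking webpage needs to capture, here is a minimal Python sketch. It assumes each press records a timestamp, a latitude/longitude, and a side ("L" or "R"); the `IncenseMark` record and `to_csv` helper are hypothetical names, not the webpage's actual code. Exporting to CSV is one option for later loading the points into a mapping tool such as Google My Maps, which can import CSV files with latitude/longitude columns.

```python
import csv
import io
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Iterable, Literal


# Hypothetical record for one Left/Right press on the tracking page.
@dataclass(frozen=True)
class IncenseMark:
    time: datetime           # timezone-aware time of the button press
    lat: float               # latitude, decimal degrees
    lon: float               # longitude, decimal degrees
    side: Literal["L", "R"]  # which camera's footage to check


def to_csv(marks: Iterable[IncenseMark]) -> str:
    """Serialize marks to CSV text (header row first), ordered by press time."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(["time_utc", "latitude", "longitude", "side"])
    for mark in sorted(marks, key=lambda mk: mk.time):
        writer.writerow([
            mark.time.astimezone(timezone.utc).isoformat(),
            f"{mark.lat:.6f}",
            f"{mark.lon:.6f}",
            mark.side,
        ])
    return buf.getvalue()


if __name__ == "__main__":
    # Placeholder coordinates and times, not real observations.
    marks = [
        IncenseMark(datetime(2024, 9, 1, 14, 5, 30, tzinfo=timezone.utc), 40.0, -80.0, "R"),
        IncenseMark(datetime(2024, 9, 1, 14, 2, 0, tzinfo=timezone.utc), 40.1, -80.1, "L"),
    ]
    print(to_csv(marks), end="")
```

Keeping the side alongside the coordinates is what lets a single log drive both the map and the left/right footage lookup.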

Setup: two roadside GoPro cameras mounted on the left and right sides of the car to film the road on both sides. The cameras are set to a minimum of 2.7K at 24 fps and a maximum of 3K at 60 fps.
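To see why larger cards are recommended, a quick estimate of recording time per card helps. This is a sketch under stated assumptions: the 60 and 100 Mbps bitrates are illustrative placeholders (a GoPro's real bitrate depends on model, resolution, and frame rate), and `recording_hours` is a hypothetical helper.

```python
def recording_hours(card_gb: float, bitrate_mbps: float) -> float:
    """Hours of video that fit on one card.

    Uses decimal units (1 GB = 10**9 bytes, 1 Mbps = 10**6 bits/s), as card
    capacities and video bitrates are usually quoted, and ignores file-system
    overhead, so treat the result as an upper bound.
    """
    card_bits = card_gb * 10**9 * 8
    seconds = card_bits / (bitrate_mbps * 10**6)
    return seconds / 3600


if __name__ == "__main__":
    # Assumed bitrates for illustration; check the camera's own spec.
    for mbps in (60, 100):
        print(f"64 GB at {mbps} Mbps: about {recording_hours(64, mbps):.1f} h per camera")
```

Under these assumptions, a 64 GB card holds roughly 2.4 hours at 60 Mbps or 1.4 hours at 100 Mbps, so longer drives at higher settings can outrun a smaller card.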

My workflow is basically:

1) angling and starting both cameras before getting into the car

CONTENT WARNING FOR PHOTOS OF ANIMAL DEATH BELOW

Sample Images:

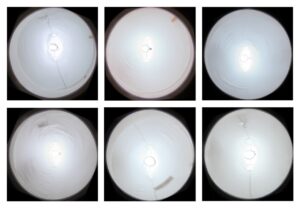

Test 1 (shot from inside the car; periodic photos, not frequent enough)

Memo: Especially after discussing with Nica, I decided to move away from front-view shots, since they're so common and you don't get as good a capture.

Test 2 (shot from sides of car)

Memo: Honestly not bad, and it makes the cameras easier to access than putting them outside, but I wanted to capture the road more directly and avoid reflections and stickers in the window, so I decided to put the cameras outside.

Test 3 (mounted outside the car)

This is the final configuration I settled on. At one point I aimed my camera too low and believe I missed some captures as a result. It was generally difficult to find a combination of a good angle and a good breadth of capture. Some of these photos are quite gory, so open the folder of representative sample images below at your own discretion.

Typology Machine Sample Images – Google Drive

2) using the incense-burning webpage to document when and where I spotted roadkill and “burned incense” for it

3) stopping the recording as soon as I finish the route (and removing the cameras to store them safely at home)

4) finding the relevant images by locating the corresponding time segment of the video. For now, I've also used Google My Maps to map out the routes, and the places along them where I've “burned digital incense,” in this Roadkill Map. CW: Animal Death
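Step 4 amounts to converting each webpage timestamp into an offset into the matching side's video. A minimal sketch, assuming the recording start time is known (for example, noted when pressing record or read from the file's metadata) and that the phone and camera clocks may disagree by a known amount. `search_window` and `ffmpeg_clip_cmd` are hypothetical helpers, and the 10 s / 5 s margins are arbitrary defaults. The second function only builds an `ffmpeg` command from its standard `-ss`, `-i`, `-t`, and `-c copy` options; it does not run it.

```python
from datetime import datetime, timezone


def search_window(recording_start: datetime, mark_time: datetime,
                  before_s: float = 10.0, after_s: float = 5.0,
                  camera_minus_phone_s: float = 0.0) -> tuple[float, float]:
    """Return (start, end) in seconds from the start of a video to scan for one mark.

    camera_minus_phone_s corrects for the camera and phone clocks disagreeing
    (camera time minus phone time).
    """
    # Position of the mark in the video, expressed on the camera's clock.
    t = (mark_time - recording_start).total_seconds() + camera_minus_phone_s
    return max(0.0, t - before_s), t + after_s


def ffmpeg_clip_cmd(video_path: str, start_s: float, end_s: float, out_path: str) -> list[str]:
    """Build an ffmpeg command that copies [start_s, end_s] without re-encoding.

    With stream copy, the clip may begin at the nearest preceding keyframe.
    """
    return ["ffmpeg", "-ss", f"{start_s:.2f}", "-i", video_path,
            "-t", f"{end_s - start_s:.2f}", "-c", "copy", out_path]


if __name__ == "__main__":
    start = datetime(2024, 9, 1, 14, 0, 0, tzinfo=timezone.utc)  # placeholder times
    mark = datetime(2024, 9, 1, 14, 2, 30, tzinfo=timezone.utc)
    lo, hi = search_window(start, mark)
    # Hypothetical file names; the mark's side decides which camera's file to use.
    print(" ".join(ffmpeg_clip_cmd("left_camera.mp4", lo, hi, "mark_001.mp4")))
```

For a mark 2.5 minutes into the drive, this prints `ffmpeg -ss 140.00 -i left_camera.mp4 -t 15.00 -c copy mark_001.mp4`, a 15-second clip to scan by eye instead of the whole recording.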

Key principles:

A key principle driving this work is that I only collect data for this project on trips I already plan to make for other reasons, because by driving, I'm also contributing to roadkill (inevitably, at minimum, of insects, which were not included in this project due to time and scope). This means both that each route traveled is more personal and that I am led to drive in particular lanes, at particular speeds, and in particular ways.

Design decisions:

My decision to add the element of “burning incense” for the roadkill I encountered arose out of three considerations:

1) acknowledging time limitations: algorithmic object detection simply takes too long on the quantity of data I have, and I found its accuracy quite lacking

2) wanting a more personal relationship with the data; I always notice roadkill (also random dogs)

3) due to the limitations of both detection models and my own visual detection (also, in some ways, it's not the safest for me to be vigilantly scanning for roadkill all the time), I inevitably cannot see all roadkill, especially since some animals are no longer legible as animals after being flattened or decayed. This means I cannot hope to accurately portray the real “roadkill cost”; at best, I can give a floor amount. However, through the digital incense burning, I can accurately portray how many roadkill animals I burned “digital incense” for.
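A back-of-envelope frame count shows why running detection over every frame adds up quickly. In this sketch, `frames_to_process` and `processing_hours` are hypothetical helpers, the two-camera count comes from the setup above, and the 0.1 s per-frame inference time is an assumed placeholder, not a measurement from this project.

```python
def frames_to_process(hours: float, fps: float, cameras: int = 2) -> int:
    """Total frames recorded across all cameras."""
    return round(hours * 3600 * fps * cameras)


def processing_hours(frames: int, seconds_per_frame: float) -> float:
    """Wall-clock hours to run a detector over every frame, one at a time."""
    return frames * seconds_per_frame / 3600


if __name__ == "__main__":
    for fps in (24, 60):
        n = frames_to_process(hours=1, fps=fps)
        # 0.1 s/frame is an assumed detector speed.
        print(f"1 h of driving at {fps} fps: {n:,} frames, ~{processing_hours(n, 0.1):.1f} h to process")
```

Under that assumption, one hour of driving yields 172,800 frames at 24 fps (about 4.8 hours of processing) and 432,000 frames at 60 fps (about 12 hours), before any time spent reviewing false positives.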

Future Work

In many ways, what I built for the Typology Machine project is only the beginning of what I see as a much more extensive future of data collection. Over time, I'd be curious to see what patterns emerge, and as I collect more data, I'd be interested in constructing a training dataset specifically for roadkill. Further, I'd like to think about how to capture insects as well in future iterations (they would probably require a different method of capture than a GoPro). For the sake of my specific project, it would also be nice to have a scrolling-window filming method, in which footage is recorded in, e.g., 5-second chunks, and when the Left/Right button is pressed, the 5 seconds before the press are saved. This would allow for higher resolution and FPS without taking up an exorbitant amount of space. It would also be interesting to look through this data for other kinds of typologies; some ideas I had were crosses and shrines at the sides of the road, interesting road cracks, and images of my own car caught in reflections. I would also like to build out the design of the incense-burning website further and create my own map interface for displaying the route, geolocation, and photo data, which I didn't have much time to do this time around.
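The scrolling-window idea is essentially a ring buffer: keep only the last N seconds of frames in memory and copy them out on a button press. A minimal Python sketch, assuming frames arrive as (timestamp, bytes) pairs from some capture source; `RollingBuffer` is a hypothetical class and does not talk to a GoPro or any other camera API.

```python
from collections import deque
from typing import Deque, List, Tuple

Frame = Tuple[float, bytes]  # (timestamp in seconds, encoded frame data)


class RollingBuffer:
    """Hold only the most recent `window_s` seconds of frames.

    A deque with maxlen discards the oldest frame automatically on each append
    once full, so memory stays bounded no matter how long the drive is.
    """

    def __init__(self, fps: int, window_s: float = 5.0) -> None:
        self._frames: Deque[Frame] = deque(maxlen=int(round(fps * window_s)))

    def push(self, timestamp: float, frame: bytes) -> None:
        self._frames.append((timestamp, frame))

    def snapshot(self) -> List[Frame]:
        """Copy the current window, e.g. when Left or Right is pressed."""
        return list(self._frames)


if __name__ == "__main__":
    buf = RollingBuffer(fps=2, window_s=5.0)  # tiny fps so the output is readable
    for i in range(25):
        buf.push(i / 2, b"frame")  # stand-in bytes in place of a real camera feed
    saved = buf.snapshot()
    print(len(saved), saved[0][0], saved[-1][0])
```

After 12.5 seconds of frames, the snapshot holds only the last 10 (timestamps 7.5 through 12.0), which is the space saving the scrolling window is meant to provide.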


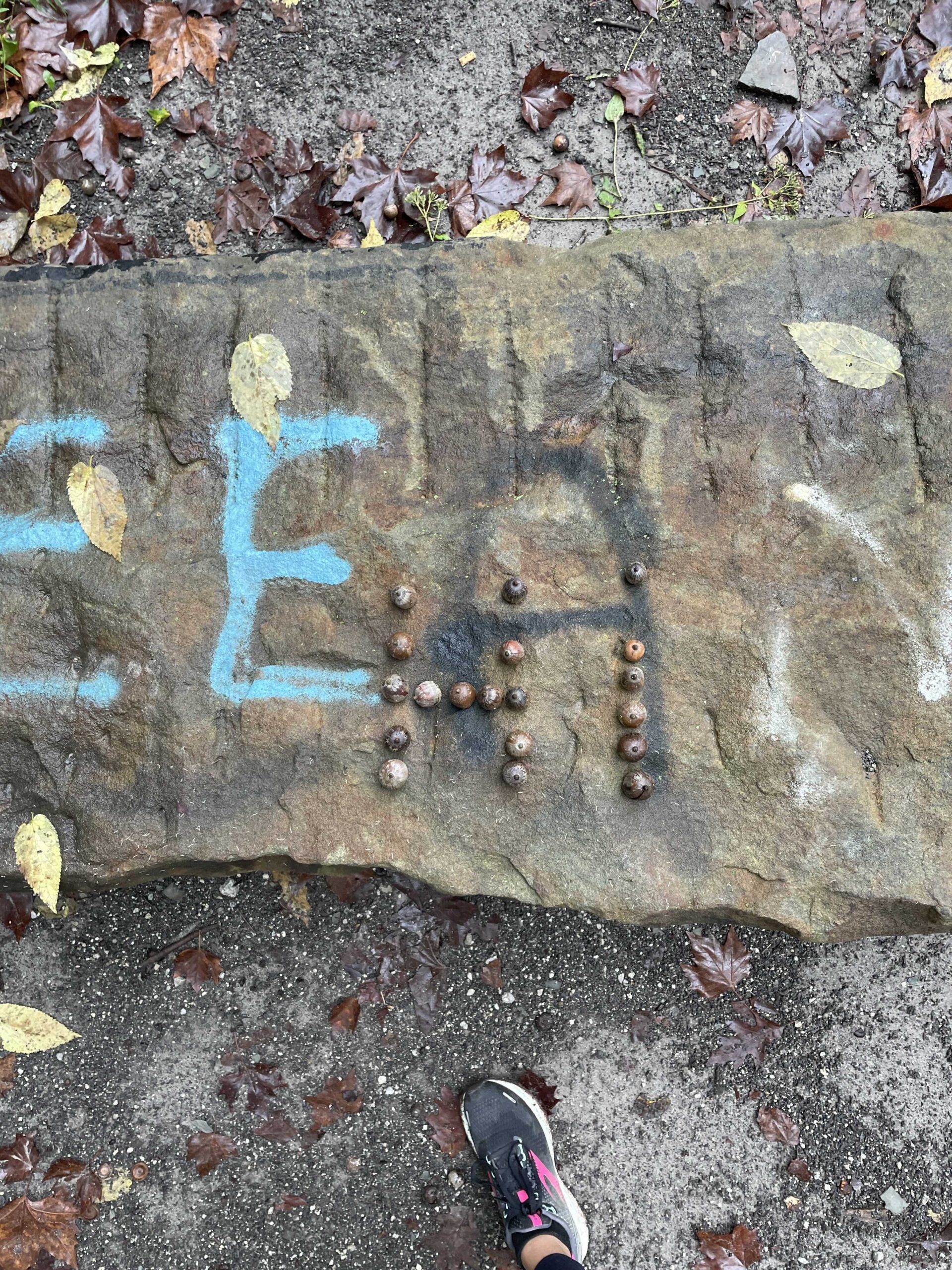

The acorn message that someone placed on the bench on Tuesday morning.
