Many of the devices we saw in class on Monday were ones I had never thought of as capture devices for making art, including medical equipment like the ultrasound transducer we experimented with. This inspired me to look into medical imaging, and here’s a short list of common brain-scanning technologies that I found very interesting:

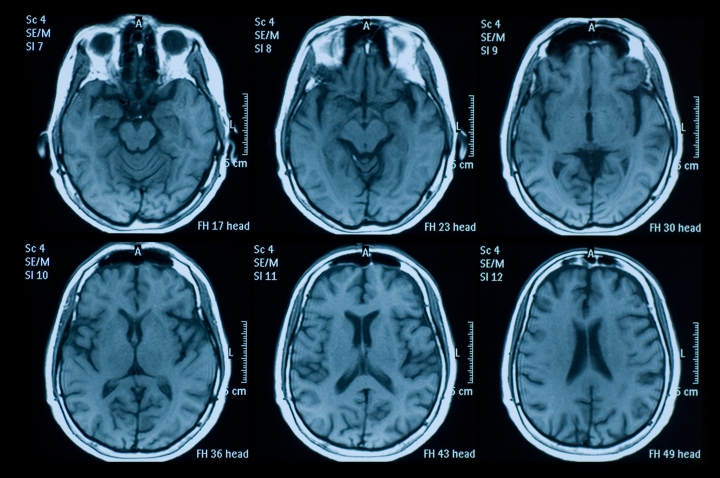

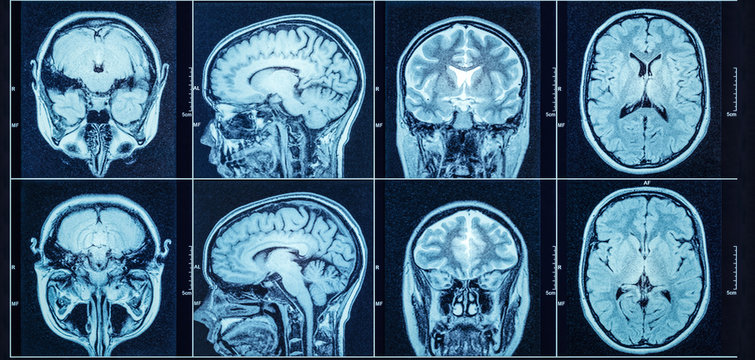

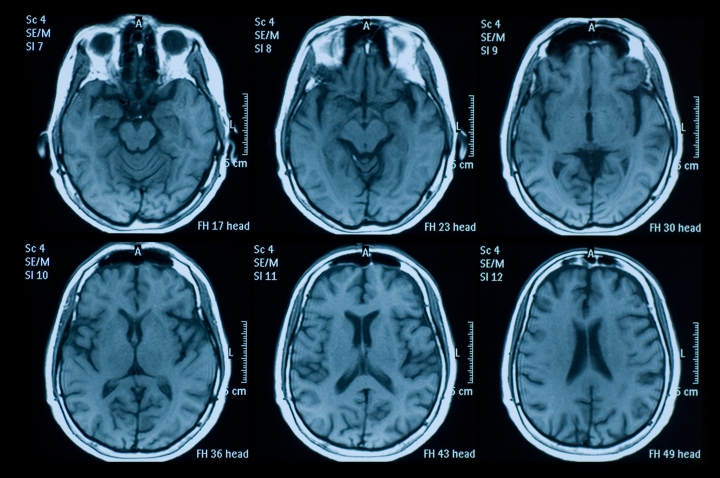

CT (Computed Tomography): An X-ray-based scan that sends X-ray beams through the head from many angles. A computer combines the measurements into cross-sectional images that look like horizontal slices of the brain.

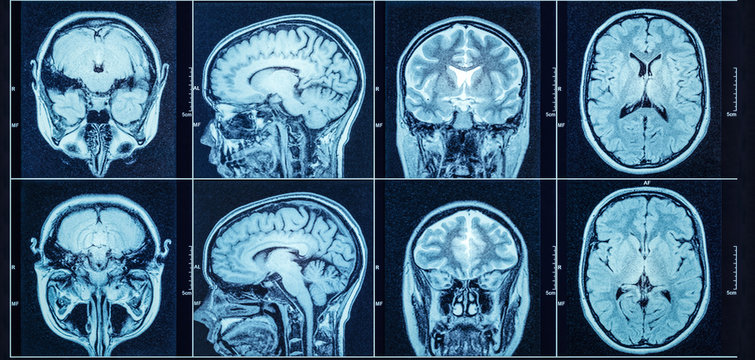

MRI (Magnetic Resonance Imaging): These scans construct an image of the brain by placing the head in a strong magnetic field and sending in radio-frequency pulses. Hydrogen nuclei in the brain's water and fat respond by resonating and emitting a radio signal of their own. The scanner records this signal and turns it into a highly detailed image of the brain.

PET (Positron Emission Tomography): PET involves injecting a small amount of a radioactive tracer into the bloodstream, which then accumulates in the brain, typically more so in regions that are more active. The scanner detects the radiation the tracer gives off to create images that highlight areas of functional activity, producing a multi-color image of the brain that resembles a heat map.

PET technology is particularly interesting because it can visualize brain activity, and I think it could be used to create dynamic, time-lapse pieces representing changes in brain activity over time. For example, changes in brain activity during different emotional states could be visualized and translated into an animated sequence.
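To get a feel for how the time-lapse idea might work in practice, here is a minimal Python sketch. It does not use real PET data: the `synthetic_activity` function is a made-up stand-in that moves a blob of "activity" around a small grid, and the names `heat_color` and `render_frames` are my own for illustration. Each frame maps activity values to heat-map colors, from blue (low) to red (high).

```python
import colorsys
import math


def synthetic_activity(t, size=16):
    """Return a size x size grid of made-up 'activity' values in [0, 1].

    A single blob drifts in a circle as t changes. This is placeholder
    data for illustration, not a model of real PET measurements.
    """
    cx = size / 2 + (size / 4) * math.cos(t)
    cy = size / 2 + (size / 4) * math.sin(t)
    spread = 2 * (size / 6) ** 2
    return [
        [math.exp(-((x - cx) ** 2 + (y - cy) ** 2) / spread) for x in range(size)]
        for y in range(size)
    ]


def heat_color(value):
    """Map a value in [0, 1] to an (R, G, B) tuple, blue (low) to red (high)."""
    value = min(max(value, 0.0), 1.0)
    hue = (1.0 - value) * 2.0 / 3.0  # hue 2/3 is blue, hue 0 is red
    r, g, b = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    return (round(r * 255), round(g * 255), round(b * 255))


def render_frames(n_frames=24, size=16):
    """Build a list of frames; each frame is a grid of RGB tuples."""
    frames = []
    for i in range(n_frames):
        t = 2 * math.pi * i / n_frames
        grid = synthetic_activity(t, size)
        frames.append([[heat_color(v) for v in row] for row in grid])
    return frames


frames = render_frames()
```

Each entry in `frames` is a grid of colors that could be handed to any drawing or video tool to produce the animation. With real scans, the synthetic function would be replaced by actual activity values recorded over time.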