This project is inspired by the idea of changing how we perceive things in motion. By holding something that moves perfectly still, we can see relative motion that was previously unperceived. This project explores this idea with human eyes: what does it look like when a person moves around the pupils of their eyes?

Pupil Detection

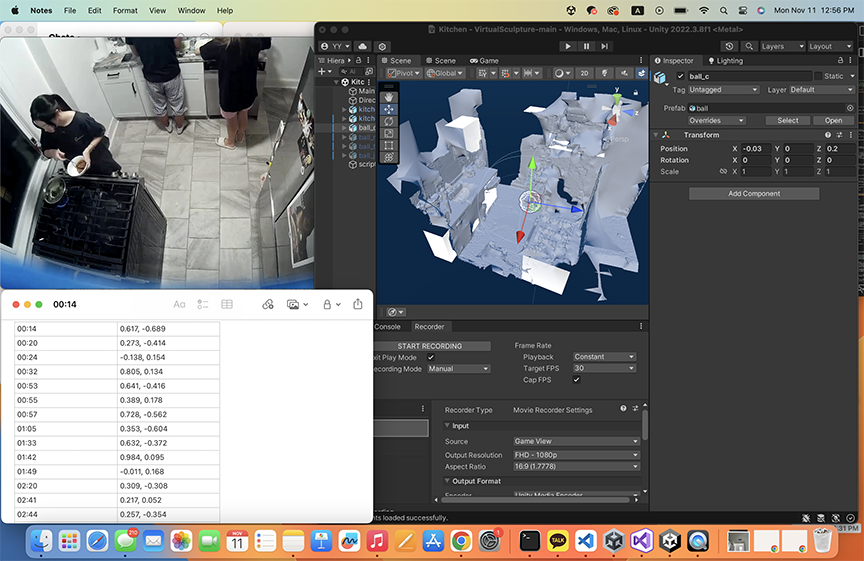

I knew I wanted video that extended beyond the eyeball, since the whole point was to see facial and head movements around the eye. I captured video that included the head and face and then used computer vision software to detect eye locations. Specifically, I used an open source computer vision library called dlib (https://github.com/davisking/dlib), which allowed me to detect the locations of the eyes. Once I had those points (shown in green), I needed to find the pupil location within them. I had planned to use an existing piece of software for this but was disappointed with the results, so I wrote my own software to predict pupil location. It essentially just looks for the darkest part of the eye. Noise and variability here are handled in later processing steps.
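The pupil finder is only described as looking for the darkest part of the eye. The following is therefore a minimal sketch of that idea, not the actual project code. It assumes two inputs: a grayscale frame as a NumPy array, and a list of (x, y) eye landmarks. The landmarks could be, for example, the six points per eye from dlib's commonly used 68-point shape predictor (indices 36 to 41 and 42 to 47). The function name `darkest_point_in_eye` and the `blur` parameter are hypothetical. The sketch searches the bounding box of the landmarks after a small box blur, so that a single dark, noisy pixel is less likely to win.

```python
import numpy as np


def darkest_point_in_eye(gray, eye_pts, blur=3):
    """Return (x, y) of the darkest point inside the bounding box of eye landmarks.

    gray: 2-D array, the grayscale frame.
    eye_pts: sequence of (x, y) landmark coordinates outlining one eye.
    blur: side length of a box filter applied first (hypothetical parameter);
          use 1 to disable blurring.
    """
    pts = np.asarray(eye_pts, dtype=int)
    x0, y0 = np.maximum(pts.min(axis=0), 0)  # clip to the image's top/left edge
    x1, y1 = pts.max(axis=0)
    roi = gray[y0:y1 + 1, x0:x1 + 1].astype(float)

    if blur > 1:
        # Box filter: average each pixel with its neighbours using shifted copies
        # of an edge-padded region, so isolated dark pixels count for less.
        pad = blur // 2
        padded = np.pad(roi, pad, mode="edge")
        acc = np.zeros_like(roi)
        for dy in range(blur):
            for dx in range(blur):
                acc += padded[dy:dy + roi.shape[0], dx:dx + roi.shape[1]]
        roi = acc / (blur * blur)

    # Darkest (minimum-intensity) location, converted back to frame coordinates.
    iy, ix = np.unravel_index(np.argmin(roi), roi.shape)
    return int(x0 + ix), int(y0 + iy)
```

Like the approach described above, this returns *a* dark point in the pupil rather than its geometric center. That is one reason the raw locations are noisy.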

Centering

Once I had processed the videos for the pupil locations, I had to decide how to use this data to further process the video. The two options were to (1) center the pupil of one eye or (2) center the average of the two pupil locations. I tested both options. The first video below demonstrates using both eyes to center the image: I took the line that connects the two pupils and centered the midpoint of this line. The second video is centered on the location of the right pupil only.

Centering on a single eye (the second video) allowed slightly more visible movement, especially when tilting the head, so I continued with this approach.
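Both centering strategies reduce to translating each frame so that an anchor point lands at the center of the output. The sketch below illustrates this and is not the project's code. The function names and the `(width, height)` frame-size convention are assumptions.

```python
def midpoint(p, q):
    """Midpoint of the segment joining two (x, y) pupil locations."""
    return ((p[0] + q[0]) / 2, (p[1] + q[1]) / 2)


def centering_offset(anchor, frame_size):
    """Translation (dx, dy) that moves `anchor` to the center of the frame.

    anchor: (x, y) point to hold still, e.g. the right pupil (single-eye
            centering) or the midpoint of both pupils (two-eye centering).
    frame_size: (width, height) of the output frame in pixels.
    """
    width, height = frame_size
    return (width / 2 - anchor[0], height / 2 - anchor[1])
```

The offset could then be applied to each frame with an affine warp. One example is OpenCV's `cv2.warpAffine` with the 2x3 matrix `[[1, 0, dx], [0, 1, dy]]`.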

Angle Adjustment

I was fairly pleased with how this looked but couldn’t help wishing there was some sense of orientation around the fixed eye. I decided to test three different rotation transformations. Option 1 was to apply no rotation at all. Option 2 was to correct for the eye rotation. This meant ensuring that the line between the two pupils was always perfectly horizontal, which required rotating the frame in the opposite direction of any head tilt. This looks like this:

This resulted in what looked like a fixed head with a rotating background. It was a cool effect, but not exactly what I was going for. Option 3 was to rotate the frame by the same amount and in the same direction as the eye tilt. This meant the edges of the original video frame were parallel with the eye tilt, as opposed to the edges of the output video frame being parallel to it. This looks like this:

This one gave the impression of the face swinging around the eye, which was exactly what I was going for.
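The three rotation options differ only in the sign of the angle applied to each frame. Below is a minimal sketch under two assumptions: the tilt is the angle of the line through both pupils, and each frame is rotated about the anchored pupil. The function names are illustrative and not from the original project.

```python
import math


def eye_tilt_degrees(p, q):
    """Tilt of the line through both pupils, in degrees, in (-90, 90].

    Points are (x, y) in image coordinates (x right, y down). They are sorted
    left to right first, so the result does not depend on which pupil is the
    anchor; a positive value means the right-hand pupil sits lower in the frame.
    """
    (x1, y1), (x2, y2) = sorted([p, q])
    return math.degrees(math.atan2(y2 - y1, x2 - x1))


def frame_rotation(tilt, option):
    """Angle (degrees) to rotate the whole frame by for each option."""
    if option == 1:
        return 0.0    # no orientation correction
    if option == 2:
        return -tilt  # level the pupil line: fixed head, rotating background
    if option == 3:
        return tilt   # rotate with the tilt: face swings around the eye
    raise ValueError("option must be 1, 2 or 3")


def rotate_point(p, center, degrees):
    """Rotate point p about center by `degrees`, in the same coordinate system."""
    a = math.radians(degrees)
    dx, dy = p[0] - center[0], p[1] - center[1]
    return (center[0] + dx * math.cos(a) - dy * math.sin(a),
            center[1] + dx * math.sin(a) + dy * math.cos(a))
```

Applying option 2's angle to the other pupil about the anchor puts both pupils on one horizontal line. OpenCV's `cv2.getRotationMatrix2D(center, angle, scale)` treats positive angles as counter-clockwise on screen with a top-left origin. Check the sign convention before passing these angles to it.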

Noise Reduction

If we look back at the video of the eye from the pupil detection section above, you can see that there is a lot of noise in the location of the red dot. This is because I opted to find some location within the pupil rather than calculating its center. The algorithms that calculate the center require more controlled conditions, such as close-up video shot by infrared cameras. I wanted the videos to be in color, so I wrote the software for this part myself. However, the noise in the pupil location was causing small jittery movements in the output. These were unrelated to the person's movements and came instead from the noise in the specific pupil location selected. To handle this, I took the pupil locations of every 3rd or 5th frame and interpolated the pupil locations for all the frames in between. This produced a much smoother video that still captured the movements of the person. Below is what the video looks like before and after pupil location interpolation.
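The write-up does not say which interpolation was used. The sketch below assumes simple linear interpolation between keyframes with NumPy's `np.interp`. It also treats the last frame as a keyframe so the end of the clip is covered. The name `smooth_pupils` and the `step` parameter (3 or 5 in the text) are hypothetical.

```python
import numpy as np


def smooth_pupils(raw_xy, step=3):
    """Keep every `step`-th pupil location and linearly interpolate the rest.

    raw_xy: sequence of (x, y) pupil locations, one per frame.
    Returns an (n, 2) float array of smoothed locations.
    """
    raw = np.asarray(raw_xy, dtype=float)
    n = len(raw)
    keys = np.arange(0, n, step)
    if keys[-1] != n - 1:
        # Also keep the last frame, so the final frames are interpolated
        # instead of being frozen at the last keyframe's value.
        keys = np.append(keys, n - 1)
    frames = np.arange(n)
    xs = np.interp(frames, keys, raw[keys, 0])
    ys = np.interp(frames, keys, raw[keys, 1])
    return np.column_stack([xs, ys])
```

A larger `step` discards more of the per-frame noise. The trade-off is that it can also flatten fast, genuine head movements that happen between keyframes.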

Final results:

https://youtu.be/9rVI8Eti7cY