Right now, I am thinking of making a photo booth where the moment of capture is controlled by someone else.

I’m interested in making a photo booth that captures the candid.

Updates TBD.

Experimental Capture – Fall 2024

Computational & Expanded Photography

Pupil Tracking Exploration

I have two main ideas that would capture a person in time using pupil tracking and detection techniques.

1. Pupil Dilation in Response to Caffeine or Light

The first idea focuses on tracking pupil dilation as a measurable response to external stimuli like caffeine intake or changes in light exposure. By comparing pupil sizes before and after exposure to caffeine or varying light conditions, the project would aim to capture and quantify changes in pupil size and potentially draw correlations between stimulus intensity and pupil reaction.
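To make "quantify changes" and "draw correlations" concrete, here is a minimal sketch. It assumes one pupil measurement before and one after each exposure, and a numeric stimulus intensity per trial. It computes the relative change per trial and a Pearson correlation between intensity and change. The function names are hypothetical, and the numbers in the usage lines are placeholders, not measurements.

```python
from math import sqrt


def percent_change(before: float, after: float) -> float:
    """Relative change in pupil size, in percent (positive = dilation)."""
    if before <= 0:
        raise ValueError("baseline pupil size must be positive")
    return 100.0 * (after - before) / before


def pearson_r(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sx * sy)


# Placeholder numbers only, to show the call pattern (not real data).
intensity = [0.0, 1.0, 2.0, 3.0]       # e.g. caffeine dose or light level per trial
before = [4.0, 4.0, 4.0, 4.0]          # pupil size before exposure
after = [4.0, 4.2, 4.3, 4.5]           # pupil size after exposure
changes = [percent_change(b, a) for b, a in zip(before, after)]
r = pearson_r(intensity, changes)
```

With only a handful of trials, a correlation like this is descriptive at best. It is a way to eyeball a trend, not evidence of one.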

2. Fixed Pupil Center with Moving Eye (the idea I am most likely moving forward with)

Inspired by the concept of ‘change what’s moving and what’s still,’ this idea would create videos where the center of the pupil is always fixed at the center of the frame, while the rest of the eye and person moves around it.

Implementation Details

Both projects rely on an open-source algorithm that detects the pupil by fitting an ellipse to it. Changes in pupil size will be inferred from the size of the fitted ellipse (its axis lengths, since an ellipse has no single radius). The second idea will also use frame manipulation so that the center of the pupil (the ellipse's center) stays at the center of the image or video frame at all times.
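The two steps described above can be sketched as follows. The sketch assumes the tracker reports the fitted ellipse as a center `(cx, cy)` in pixels plus two semi-axis lengths `a` and `b`. The exact output format of the linked 3D-Eye-Tracker repository is not confirmed here. Pupil size is summarized as the diameter of the circle with the same area as the ellipse. Recentering is a plain translation of a frame stored as a list of rows. In a real video pipeline, a library routine such as OpenCV's `cv2.warpAffine` with a translation matrix would do the same job much faster.

```python
from math import pi, sqrt


def pupil_area(a: float, b: float) -> float:
    """Area of an ellipse with semi-axes a and b (square pixels)."""
    return pi * a * b


def equivalent_diameter(a: float, b: float) -> float:
    """Diameter of the circle whose area equals the fitted ellipse's area."""
    return 2.0 * sqrt(a * b)


def recenter(frame, cx: float, cy: float, fill=0):
    """Translate a frame (list of rows) so pixel (cx, cy) lands at the frame center.

    Pixels that shift in from outside the original frame are set to `fill`.
    """
    h, w = len(frame), len(frame[0])
    dx = w // 2 - round(cx)  # horizontal shift to apply
    dy = h // 2 - round(cy)  # vertical shift to apply
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        src_y = y - dy
        if not 0 <= src_y < h:
            continue
        for x in range(w):
            src_x = x - dx
            if 0 <= src_x < w:
                out[y][x] = frame[src_y][src_x]
    return out
```

Using area (a times b) rather than a single axis makes the size estimate less sensitive to the eye turning, which foreshortens the pupil along one axis only.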

https://github.com/YutaItoh/3D-Eye-Tracker

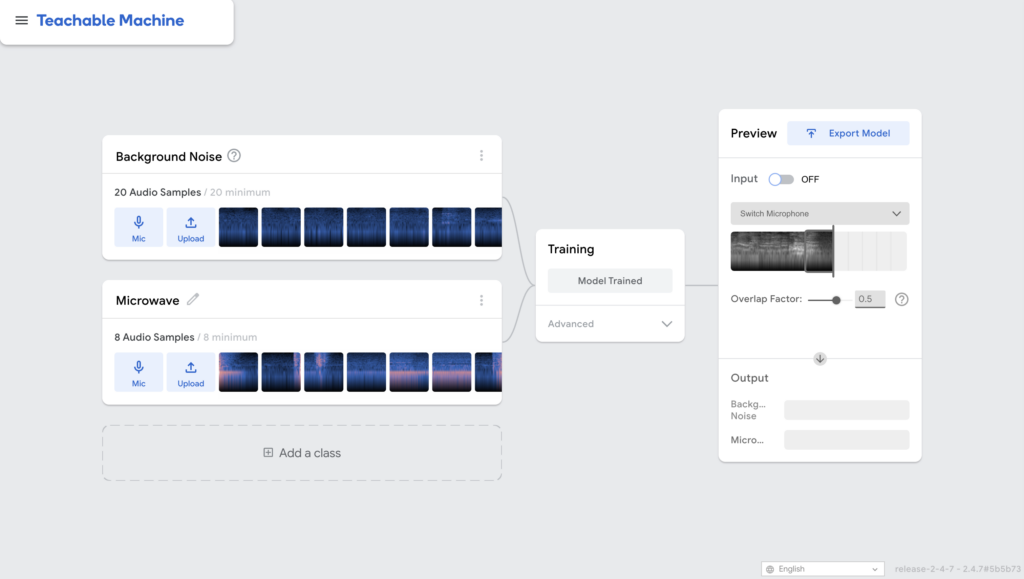

I sleep in the living room, so I hear everything that goes on in the house, especially in the kitchen. If someone is making food, running the microwave, filling up their Brita, etc., I can guess what they are doing in the kitchen just by listening.

Goal of the project

Detect the sounds coming from the kitchen, have a machine recognize the activity, and log my roommates’ activity so it can send me notifications while I am outside the house.
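A classifier typically emits one label per short audio window. The log described above needs activities with a start and an end. This minimal sketch groups consecutive identical labels into events and drops runs too short to be trustworthy. The label names, the `"silence"` background label, and `min_frames` are hypothetical choices, and the classifier itself is not shown. Each resulting event is what would be logged or turned into a notification.

```python
from dataclasses import dataclass


@dataclass
class Event:
    label: str
    start_s: float
    end_s: float


def labels_to_events(labels, hop_s: float, min_frames: int = 3, background: str = "silence"):
    """Group consecutive identical per-window labels into timed events.

    labels: one predicted label per analysis window (hypothetical classifier output)
    hop_s: time between consecutive windows, in seconds
    min_frames: runs shorter than this are treated as noise and dropped
    background: label that never produces an event
    """
    events = []
    run_label, run_start = None, 0
    for i, label in enumerate(list(labels) + [None]):  # trailing None flushes the last run
        if label != run_label:
            if run_label not in (None, background) and i - run_start >= min_frames:
                events.append(Event(run_label, run_start * hop_s, i * hop_s))
            run_label, run_start = label, i
    return events
```

For example, `labels_to_events(["silence", "microwave", "microwave", "microwave", "silence"], hop_s=0.5)` yields a single microwave event from 0.5 s to 2.0 s.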

How

Test: microwave

Questions

My friend has a motorboat that he has had a strong connection with for most of his life. I wanted to help him say goodbye to the boat by making a piece that can take him back to it whenever he misses it after he sells it.

My plan is to make a multichannel (likely 8-channel) audio capture of the boat using a combination of recording techniques, including binaural microphone recording and an ambisonic recorder. A little background: binaural audio replicates a particular person’s point of view of hearing, while ambisonics captures a 360-degree sound field that is not tied to any one listener. The two sound quite different, but I think they are equally important in recreating the sound of a space. My idea is to enhance the recordings by panning them together spatially in various ways to create an effective soundscape. The documentation will compare the outcomes of different mixdowns of the multichannel sound.
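One simple way to pan a mono recording around an 8-channel playback setup is pairwise equal-power panning. The source is split between the two speakers on either side of its direction, with gains whose squares sum to 1. This sketch assumes 8 evenly spaced speakers in a ring, with speaker 0 at 0 degrees and angles increasing counter-clockwise. That layout is a hypothetical choice. This is a generic technique, not something the ambisonic recorder's own software is claimed to provide.

```python
from math import cos, pi, sin


def ring_pan_gains(azimuth_deg: float, n_speakers: int = 8) -> list[float]:
    """Equal-power pairwise panning of one mono source onto a ring of speakers.

    Only the two speakers adjacent to the azimuth receive signal, and the
    squares of their gains sum to 1, so perceived loudness stays constant.
    """
    spacing = 360.0 / n_speakers
    pos = (azimuth_deg % 360.0) / spacing  # fractional speaker index
    left = int(pos) % n_speakers
    right = (left + 1) % n_speakers
    frac = pos - int(pos)                  # 0 at `left`, 1 at `right`
    gains = [0.0] * n_speakers
    gains[left] = cos(frac * pi / 2)
    gains[right] = sin(frac * pi / 2)
    return gains
```

To mix, each channel's output is the sum over sources of that source's samples times its gain for the channel. Sweeping `azimuth_deg` over time moves a recording around the room.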

I will be comparing the effectiveness of these techniques.

How? – Boat trip – recording out on the boat with both microphones while we have a small, intimate chat about the boat and his history with it.

Concept

I’m planning to make an interactive installation that combines projection and live movement.

Workflow

The setup involves using TouchDesigner to create an interactive patch, which will be projected onto the wall. A dancer will perform in front of the projection, and I’ll use a Kinect (depth) or thermal camera to capture the dancer’s movements in real time, feeding that data back into TouchDesigner so the visuals respond dynamically to the dancer’s motion.
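A common way to turn camera frames into a single "how much is the dancer moving" value is frame differencing followed by smoothing. This sketch assumes frames arrive as 2D lists of numbers, such as depth values or temperatures. The threshold and smoothing factor are hypothetical and would need tuning. How the value is sent into the TouchDesigner patch is not shown here.

```python
def motion_energy(prev_frame, frame, threshold: float = 10.0) -> float:
    """Fraction of pixels whose value changed by more than `threshold` since the last frame."""
    changed = total = 0
    for row_prev, row in zip(prev_frame, frame):
        for a, b in zip(row_prev, row):
            total += 1
            if abs(b - a) > threshold:
                changed += 1
    return changed / total if total else 0.0


class Smoother:
    """Exponential moving average, so the visuals don't flicker from frame to frame."""

    def __init__(self, alpha: float = 0.2):
        self.alpha = alpha  # higher = reacts faster, lower = smoother
        self.value = None

    def update(self, x: float) -> float:
        if self.value is None:
            self.value = x
        else:
            self.value = self.alpha * x + (1 - self.alpha) * self.value
        return self.value
```

The smoothed value (0 for stillness, approaching 1 for whole-frame motion) could then drive a visual parameter such as brightness, particle speed, or distortion amount.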

Questions for improvement

How can I make it more engaging and unique, and more closely related to the prompt?

I’m still working on elevating the idea. For example, how can I use the captured movement to create a deeper, more immersive interaction between the dancer and the projection? Also, to relate it more closely to the prompt, should the projection respond to specific types of movement, emotional intensity, or particular body parts? I’m considering how the visuals could evolve based on the dancer’s movement patterns, creating a temporal “portrait” that reveals something hidden or essential about them.

I’d love suggestions on how to push this concept further.

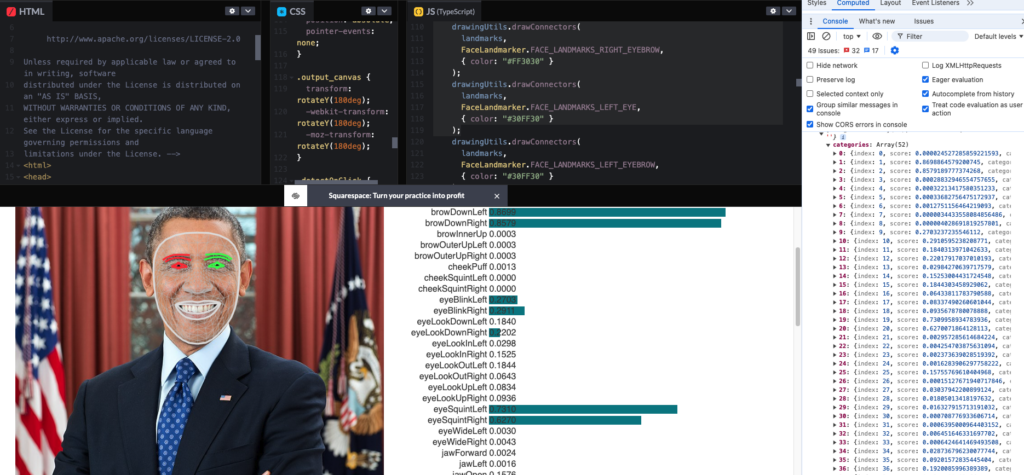

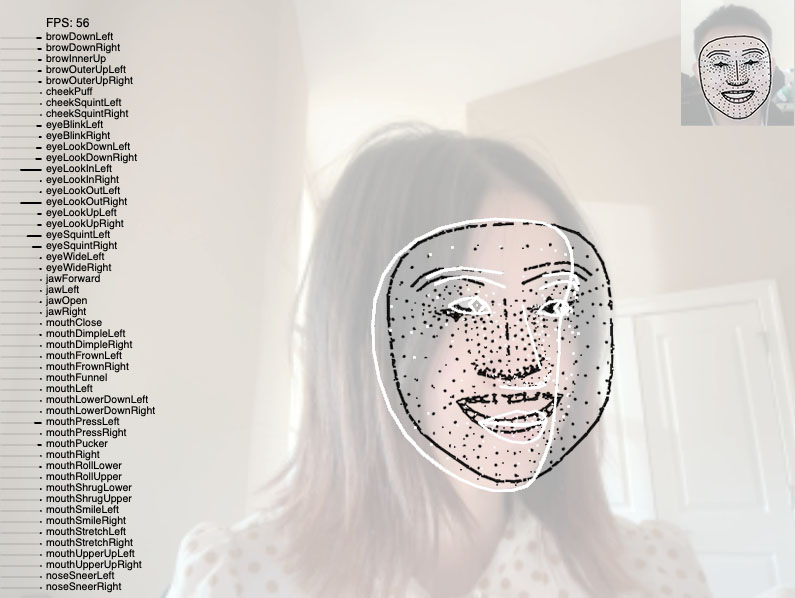

Topic 1: Connection – Digital Mirror in Time

A website acting both as a mirror and as a temporal bridge between us. The real-time website, tentatively named “Digital Mirror in Time,” aims to explore shared human expressions and interactions over time using AI tools such as Google AI Edge API, MediaPipe Tasks, and Face Landmark Detection. The project transforms your computer’s camera into a digital mirror, capturing and storing facial data and expression points in real time.

Inspiration: Sharing Face. At either location of that installation, a visitor’s expression and pose were matched in real time with photos of someone else who had once stood in front of it. Thousands of people visited the work and saw themselves reflected in the face of another person.

Question: Can I get the full set of 478 landmarks as output? So far in my experiments I can only get face_blendshapes.

When a new user visits the website, their live camera feed is processed in the same way, and the system searches the database for expressions that match the current facial landmarks. When a match is found, the historical expression is retrieved and displayed on the screen, overlaid in the exact same position.
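The matching step described above can be sketched as a nearest-neighbor search over stored landmark sets. Each face is assumed to be a list of `(x, y, z)` points. In MediaPipe's Face Landmarker, these points come back in the same result object as the blendshapes (`faceLandmarks` in JavaScript, `face_landmarks` in Python), one list per detected face. Before comparing, each set is shifted to its centroid and scaled to unit size, so a match depends on expression shape rather than on where the face sits in the frame. Head rotation is not removed. The function names and the dict-based "database" are hypothetical.

```python
from math import sqrt

Point = tuple[float, float, float]


def normalize(landmarks: list[Point]) -> list[Point]:
    """Remove position and scale so only the shape of the face is compared."""
    n = len(landmarks)
    cx = sum(p[0] for p in landmarks) / n
    cy = sum(p[1] for p in landmarks) / n
    cz = sum(p[2] for p in landmarks) / n
    centered = [(x - cx, y - cy, z - cz) for x, y, z in landmarks]
    scale = sqrt(sum(x * x + y * y + z * z for x, y, z in centered) / n) or 1.0
    return [(x / scale, y / scale, z / scale) for x, y, z in centered]


def distance(a: list[Point], b: list[Point]) -> float:
    """Mean squared distance between two normalized landmark sets of equal length."""
    return sum(
        (ax - bx) ** 2 + (ay - by) ** 2 + (az - bz) ** 2
        for (ax, ay, az), (bx, by, bz) in zip(a, b)
    ) / len(a)


def best_match(query: list[Point], database: dict[str, list[Point]]) -> str:
    """Return the id of the stored expression closest to `query` (linear scan)."""
    q = normalize(query)
    return min(database, key=lambda key: distance(q, normalize(database[key])))
```

The raw, un-normalized landmarks of the matched entry would still be kept so the historical face can be drawn back at its original position. Normalized copies could be precomputed once per stored entry instead of on every query.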

This creates an interactive experience where users not only see their own reflection but also discover others’ expressions from different times, creating a temporal bridge between users. The website acts as a shared space where facial expressions transcend individual moments.

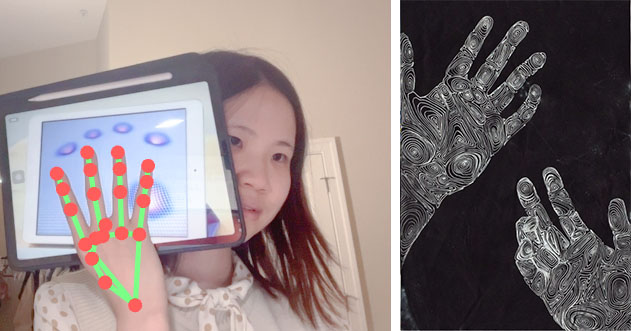

Concept: We often perceive the images of people on our screens as cold and devoid of warmth. This project explores whether we can simulate the sensation of touch between people through a screen by combining visual input and haptic feedback.

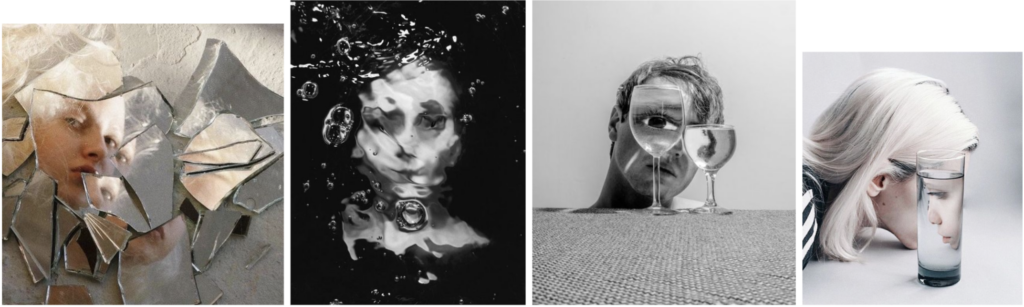

Topic 2: Broken and Distorted Portrait

What I find interesting about this theme is the distorted portrait, and I thought of combining it with sound. When water is added to a cup, light passing through it is refracted differently (water has a higher refractive index than air), so the portrait seen through the cup changes as well. At the same time, the sound of tapping the container can be recorded.
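The bending described above follows Snell's law, n1 sin θ1 = n2 sin θ2. Here is a small worked sketch using the usual approximate indices, about 1.00 for air and 1.33 for water. It ignores the glass wall and the cup's curvature. A round cup of water also acts like a cylindrical lens, which adds to the distortion.

```python
from math import asin, degrees, radians, sin

N_AIR = 1.000   # approximate refractive index of air
N_WATER = 1.333  # approximate refractive index of water


def refraction_angle(theta_in_deg: float, n1: float, n2: float):
    """Angle (degrees from the surface normal) of the refracted ray, by Snell's law.

    Returns None when no refracted ray exists (total internal reflection).
    """
    s = n1 * sin(radians(theta_in_deg)) / n2
    if abs(s) > 1.0:
        return None
    return degrees(asin(s))
```

For instance, a ray entering water from air at 30 degrees continues at roughly 22 degrees. That change in direction is what shifts and warps the part of the portrait seen below the waterline compared with the part above it.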

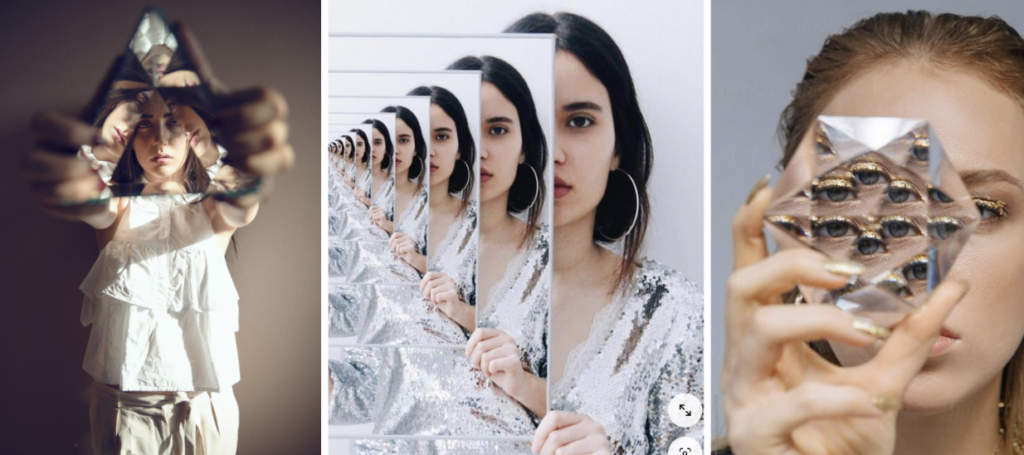

For this project, I’m really interested in creating a self-portrait that captures my experience living with mental illness.

I ended up being really inspired by the Ekman Lie Detector that Golan showed us in class. While I’m not convinced by his application, I do think there’s a lot to be learned from people’s microexpressions.

I was also deeply inspired by a close friend of mine, who was hospitalized during an episode of OCD-induced psychosis earlier this year. She shared her experience publicly on Instagram, and watching her own her story and be proud of it felt like she had lifted a weight off my own chest. I hope that by sharing and talking about my experience, I might lift that weight off of someone else, and perhaps help myself in the process.

Throughout my life I’ve struggled with severe mental illness. Only recently have I found a treatment that is effective, but it’s not infallible. While lately I’ve been functional and generally happy, I would say I still spend on average 2-3 hours each day ruminating in anxiety and negative self talk, despite my best efforts. These thought patterns are fed by secrecy, embarrassment, and shame, so I would really like to start taking back my own power, and being open about what I’m going through, even if it’s really hard for me to tell a bunch of relative strangers something that is still so taboo in our culture.

So, personal reasons aside, I think it would be really interesting to use the high-speed camera to take portrait(s) of me in the middle of ruminating, and potentially contrast that with a portrait of me when I’m feeling positive. Throughout my life, folks have commented on how easy it is to know my exact feelings about a subject without even asking me, just based on my facial expressions, because I wear my feelings on my sleeve (despite my best efforts!). I’ve tried neutralizing my expressions in the past, but I’ve never really been successful, so I’m hoping that quality will come in handy while making this project. If being overly emotive is a flaw, I plan to use this project to turn it into a superpower.

I’ve also contemplated using biometric data, such as a heart rate or breathing monitor, to supplement my findings, but I’m not totally married to that idea yet. I think the high-speed camera might be enough on its own, but the physiological data could be a useful addition.
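If the biometric idea goes ahead, the heart data would need to be lined up with the high-speed footage. This sketch makes three assumptions, all hypothetical: the monitor can export beat timestamps in seconds, both devices share a clock (for example, synchronized with a clap or flash recorded by both), and the camera frame rate is known. It averages inter-beat intervals into beats per minute and maps a timestamp to the video frame shown at that moment.

```python
def bpm_from_beats(beat_times_s: list[float]) -> float:
    """Average heart rate in beats per minute from beat timestamps (seconds)."""
    if len(beat_times_s) < 2:
        raise ValueError("need at least two beats")
    intervals = [b - a for a, b in zip(beat_times_s, beat_times_s[1:])]
    return 60.0 / (sum(intervals) / len(intervals))


def frame_index(t_s: float, fps: float, video_start_s: float = 0.0) -> int:
    """Index of the video frame on screen at time t_s, assuming a shared clock."""
    return int((t_s - video_start_s) * fps)
```

At high frame rates, even small clock offsets span many frames. The synchronization step matters more than the arithmetic.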