I’d like to use the eye tracker to reveal the sequence in which people look at the parts of an image. When you look at something, your eyes move around and quickly fixate on certain areas, allowing you to form an impression of the overall image in your head. The image that you “see” is really made up of the small areas that you’ve focused on; the remainder is filled in by your brain. I’m interested in the order in which people move their eyes over areas of an image, as well as the direction in which their gaze flows as they look.

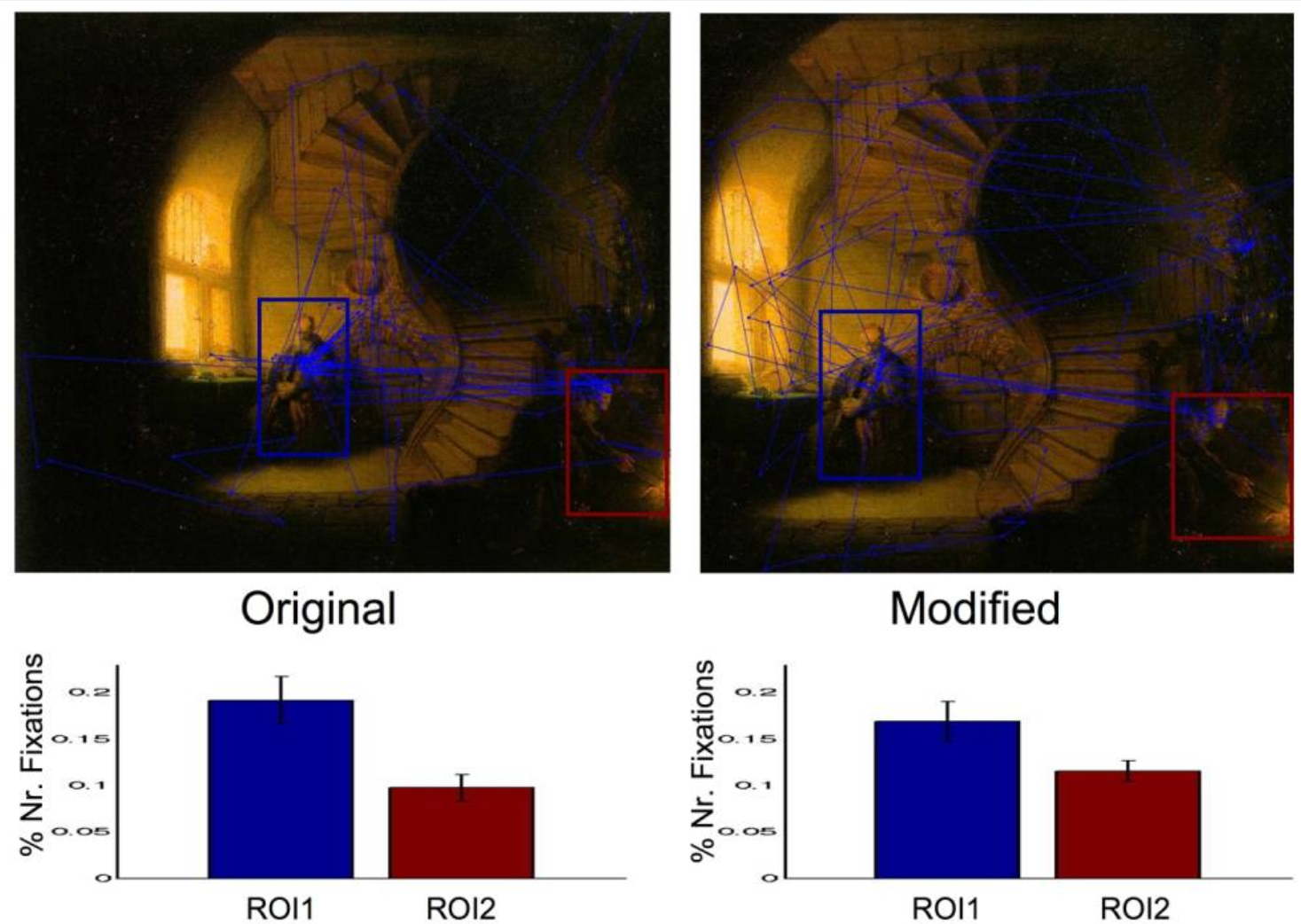

Eye tracking has been used primarily to determine which areas of an image people focus on, and studies have been conducted to better understand how aspects of an image influence where people look. In this example, cropping the composition changed how people’s eyes moved around the image.

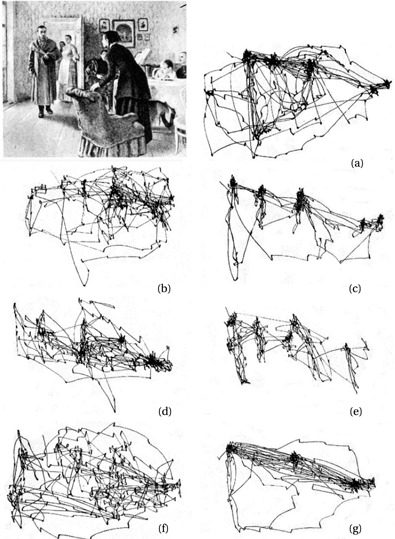

There is also a famous study by Yarbus showing that there’s a top-down effect on vision: people will look at different places when they have different goals in mind.

I haven’t been able to find any studies that examine the sequence in which people look around an image. I’d like to see if there are differences between individuals, and perhaps what role the image itself plays.

I’m going to show people a series of images (maybe 5) and ask them to look at each one for a relatively short amount of time (maybe 30 seconds). I’ll record the positions of their eyes over that time in openFrameworks. After doing this, I’ll have eye position data for each image from each participant.

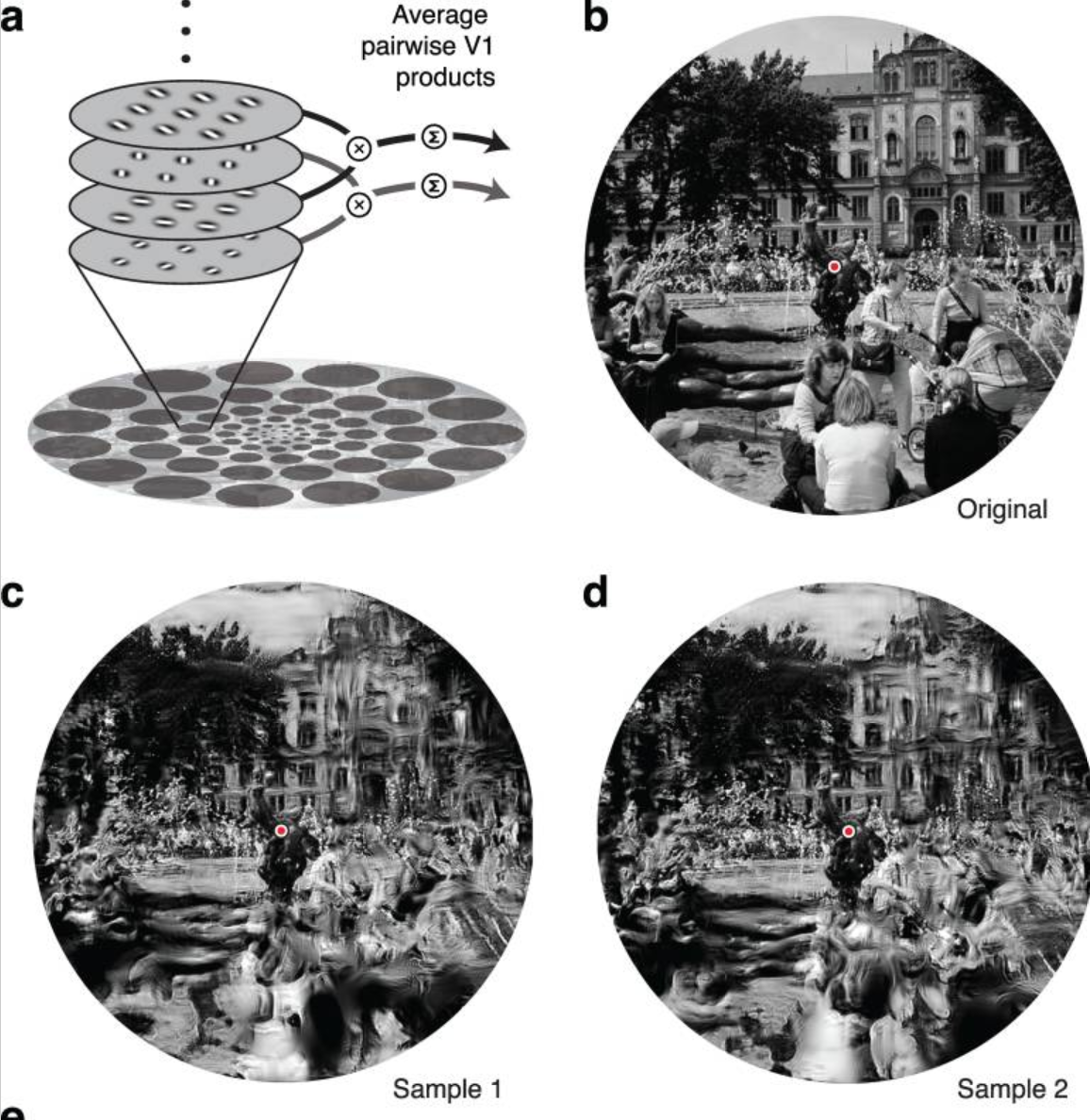
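
To make the recording step more concrete, here is a rough sketch of how I might store the data: one timestamped sample per reading, grouped by participant and image. The names `GazeSample` and `Recording` are placeholders I made up for illustration, and I’m assuming the eye tracker hands me a screen position in pixels; the 30-second window is just the tentative viewing time mentioned above.

```cpp
#include <string>
#include <vector>

// One raw gaze reading: time since the image appeared, and screen position in pixels.
struct GazeSample {
    double timeSec;
    float x;
    float y;
};

// All samples recorded for one participant viewing one image (hypothetical layout).
struct Recording {
    std::string participantId;
    std::string imageName;
    double durationSec = 30.0;  // assumed viewing time per image
    std::vector<GazeSample> samples;

    // Keep a sample only if it falls inside the viewing window.
    // Returns true if the sample was stored.
    bool add(double timeSec, float x, float y) {
        if (timeSec < 0.0 || timeSec > durationSec) {
            return false;
        }
        samples.push_back({timeSec, x, y});
        return true;
    }
};
```

In an openFrameworks app, I’d expect to call `add` once per frame from `update()`, using something like `ofGetElapsedTimef()` minus the time the image was first shown as the timestamp. How the gaze position itself is read depends on the eye tracker, so that part is left out here.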

Next, it’s a question of presenting the fixation paths. I’m still not totally sure about this. I think I might reveal the areas of the image that people fixated on and manipulate the “unseen” areas to make them less prominent. There are lots of interesting possibilities for how this might be done. I read a paper about a model of the representation of peripheral vision that creates distorted waves of information.
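
Before I can present fixation paths, I’ll need to turn the raw eye positions into an ordered list of fixations. I haven’t settled on a method; the sketch below uses a dispersion-threshold approach (often called I-DT), which groups consecutive samples that stay within a small area for a minimum time. The thresholds (40 pixels, 0.1 seconds) and all of the names here are guesses for illustration and would need tuning for the actual tracker and screen.

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

struct GazeSample { double timeSec; float x; float y; };

struct Fixation {
    float x;             // centroid of the grouped samples
    float y;
    double startSec;     // time of the first sample in the group
    double durationSec;  // time between first and last sample
};

// Spread of samples [first, last]: (maxX - minX) + (maxY - minY).
static float dispersion(const std::vector<GazeSample>& s,
                        std::size_t first, std::size_t last) {
    float minX = s[first].x, maxX = s[first].x;
    float minY = s[first].y, maxY = s[first].y;
    for (std::size_t i = first + 1; i <= last; ++i) {
        minX = std::min(minX, s[i].x);
        maxX = std::max(maxX, s[i].x);
        minY = std::min(minY, s[i].y);
        maxY = std::max(maxY, s[i].y);
    }
    return (maxX - minX) + (maxY - minY);
}

// Returns fixations in the order they occurred. Samples must be sorted by time.
std::vector<Fixation> detectFixations(const std::vector<GazeSample>& s,
                                      float maxDispersionPx = 40.0f,
                                      double minDurationSec = 0.1) {
    std::vector<Fixation> out;
    std::size_t start = 0;
    while (start < s.size()) {
        // Grow the window until it spans the minimum duration.
        std::size_t end = start;
        while (end + 1 < s.size() &&
               s[end].timeSec - s[start].timeSec < minDurationSec) {
            ++end;
        }
        if (s[end].timeSec - s[start].timeSec < minDurationSec) {
            break;  // not enough samples left to form a fixation
        }
        if (dispersion(s, start, end) > maxDispersionPx) {
            ++start;  // eye was moving; slide the window forward
            continue;
        }
        // Extend the window while the samples stay tightly grouped.
        while (end + 1 < s.size() &&
               dispersion(s, start, end + 1) <= maxDispersionPx) {
            ++end;
        }
        float sumX = 0.0f, sumY = 0.0f;
        for (std::size_t i = start; i <= end; ++i) {
            sumX += s[i].x;
            sumY += s[i].y;
        }
        const float n = static_cast<float>(end - start + 1);
        out.push_back({sumX / n, sumY / n, s[start].timeSec,
                       s[end].timeSec - s[start].timeSec});
        start = end + 1;
    }
    return out;
}
```

Because the result is ordered by time, the sequence I’m interested in falls straight out of it, and the direction of each eye movement is just the vector from one fixation’s centroid to the next.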

It could be nice to apply this kind of transformation to the areas of the image that aren’t focused on. The final product might be a video that follows the fixation points around the image on the same timescale as they were recorded, with the fixated area in focus and the surrounding area distorted. A bit like creating a long exposure of someone’s eye movements around an image.
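
As a first pass at the “in focus here, distorted elsewhere” idea, I could compute a weight for every pixel based on its distance from the current fixation and use it to blend the sharp image with a distorted copy. The sketch below uses a simple Gaussian falloff, which is my own stand-in and not the peripheral vision model from the paper; `revealWeight`, `blend`, and `sigmaPx` are made-up names.

```cpp
#include <cmath>

// Weight in (0, 1]: 1 at the fixation point, falling toward 0 with distance.
// sigmaPx sets how large the clearly visible region is (assumed, to be tuned).
float revealWeight(float px, float py, float fx, float fy, float sigmaPx) {
    const float dx = px - fx;
    const float dy = py - fy;
    return std::exp(-(dx * dx + dy * dy) / (2.0f * sigmaPx * sigmaPx));
}

// Mix one channel of the sharp image with the same channel of a distorted copy.
// w = 1 keeps the sharp value, w = 0 keeps the distorted value.
float blend(float sharp, float distorted, float w) {
    return w * sharp + (1.0f - w) * distorted;
}
```

For the video, I’d recompute the weights each frame using whichever fixation is active at that moment. In practice this per-pixel work would probably belong in a shader rather than on the CPU, but the arithmetic would be the same.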