Technical Plan

Our objective for the proof of concept is to build a basic prototype of the eye-tracking system for a single eye.

One open question is how eye movement will be mapped to servo motor data. Currently, I plan to define a central horizontal and vertical axis through the center of the eye. Since we are detecting the pupil, the distance between this center point and the pupil can be calculated along each axis. These distances would then need to be scaled to represent servo angles. The concern with this approach is that it might be difficult to measure the distance between the center point and the pupil accurately, given the scale involved. Furthermore, the appropriate normalization can only be determined through a proof-of-concept test.
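
The axis-based mapping described above could look roughly like the following minimal sketch. It assumes (none of this is fixed by the plan) that the eye region is an OpenCV-style `(x, y, w, h)` bounding box in pixels, that the servos take 0 to 180 degrees with 90 as straight ahead, and that the eye swings at most 30 degrees either way. `SERVO_CENTER_DEG`, `MAX_DEFLECTION_DEG` and `pupil_to_servo_angles` are hypothetical names. Dividing the offset by the eye's half-width and half-height is one possible way to address the scale concern, since the result no longer depends on how large the eye appears in the image.

```python
# Hypothetical constants; the real values would come from proof-of-concept testing.
SERVO_CENTER_DEG = 90.0    # assumed neutral (straight-ahead) servo angle
MAX_DEFLECTION_DEG = 30.0  # assumed maximum swing either side of neutral


def clamp(value, low, high):
    """Limit value to the closed range [low, high]."""
    return max(low, min(high, value))


def pupil_to_servo_angles(pupil_xy, eye_box):
    """Map a pupil position to (pan_deg, tilt_deg).

    pupil_xy: (x, y) pupil centre in image pixels.
    eye_box:  (x, y, w, h) eye bounding box in image pixels.
    """
    px, py = pupil_xy
    bx, by, bw, bh = eye_box
    # The centre of the eye box defines the horizontal and vertical axes.
    cx = bx + bw / 2.0
    cy = by + bh / 2.0
    # Signed offset from the centre, normalised to [-1, 1] by the half-size
    # of the box so that it does not depend on the image scale.
    nx = clamp((px - cx) / (bw / 2.0), -1.0, 1.0)
    ny = clamp((py - cy) / (bh / 2.0), -1.0, 1.0)
    pan = SERVO_CENTER_DEG + nx * MAX_DEFLECTION_DEG
    # Image y grows downward; subtracting assumes the tilt servo is mounted so
    # that a larger angle raises the eye.
    tilt = SERVO_CENTER_DEG - ny * MAX_DEFLECTION_DEG
    return pan, tilt


if __name__ == "__main__":
    # Pupil at the right edge of a 40 x 20 px eye box centred at (120, 60).
    print(pupil_to_servo_angles((140, 60), (100, 50, 40, 20)))  # (120.0, 90.0)
```

The clamp keeps a misdetected pupil outside the eye box from driving the servos past the assumed limits; the two constants are exactly the kind of scaling factors the proof-of-concept test would need to tune.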

Another open question relates to the physical materials. We decided to use plywood for the box components, and our current concern is whether it will be sturdy enough to support the weight of the device. We also need to cut openings for the clay eyes, so we are trying to determine whether plywood will be easy to cut into that shape. We are also unsure what diameter to make the clay eyes; so far, it seems to depend on the size of the drawing. Until that is settled, we cannot determine the diameter of the pipe tubing needed for the 3-axis gimbal or the sizes of the L brackets.

Project Management

Our project will be split into three technical components: hardware, software, and a communication channel between them. Marione will be in charge of most of the hardware. She will create the CAD drawings and build the structure that allows the servos to control the eyes. Once she has built the physical prototype, the software will be tested on it.

Max will work on the software component, which will use Python and OpenCV for facial recognition. OpenCV's facial keypoint detectors can be used to create bounding boxes for facial features. Once the facial recognition software is complete, Max will also send this information to the Arduino using the MQTT bridge program; on the Arduino end, the data will be normalized for servo movement. After the Arduino has processed and converted the data, it will send the resulting commands to the servos, which will move the eyes.
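
The hand-off from the facial recognition code to the MQTT bridge could be sketched as below. This is a hypothetical illustration, not the project's code: it assumes the pupil is also detected as an `(x, y, w, h)` box (the format returned by OpenCV's `CascadeClassifier.detectMultiScale`), and it sends raw pixel offsets plus the eye size so that the normalization happens on the Arduino, as the plan describes. The topic name `eyetracker/left_eye`, the comma-separated payload format, and the function names are all assumptions.

```python
# Hypothetical MQTT topic; the actual bridge configuration is not specified.
EYE_TOPIC = "eyetracker/left_eye"


def box_center(box):
    """Centre (x, y) of an OpenCV-style (x, y, w, h) bounding box."""
    x, y, w, h = box
    return (x + w / 2.0, y + h / 2.0)


def build_eye_message(eye_box, pupil_box):
    """Encode the pupil's offset from the eye centre as "dx,dy,w,h".

    Raw pixel offsets and the eye size are sent unscaled so that the Arduino
    can normalise them for servo movement. A plain comma-separated string is
    assumed here because it is simple to parse on a microcontroller.
    """
    ex, ey = box_center(eye_box)
    px, py = box_center(pupil_box)
    _, _, ew, eh = eye_box
    return f"{px - ex:.1f},{py - ey:.1f},{ew},{eh}"


def send_eye_update(publish, eye_box, pupil_box, topic=EYE_TOPIC):
    """Build one message and pass it to any publish(topic, payload) callable."""
    payload = build_eye_message(eye_box, pupil_box)
    publish(topic, payload)
    return payload


if __name__ == "__main__":
    # Stand-in publisher that just prints, so the sketch runs without a broker.
    send_eye_update(lambda t, m: print(t, m), (100, 50, 40, 20), (130, 55, 10, 10))
    # prints: eyetracker/left_eye 15.0,0.0,40,20
```

Taking `publish` as a parameter keeps the sketch testable without a broker; with the paho-mqtt library, a connected client's `publish` method (which accepts a topic and a payload) could be passed in its place.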

We plan to communicate via Zoom and text messaging. We can send each other pictures of the hardware and software to stay updated on our progress, but we will need to test the actual design together over Zoom. We will meet at least once a week to stay on top of the deadlines and goals we have set for ourselves.

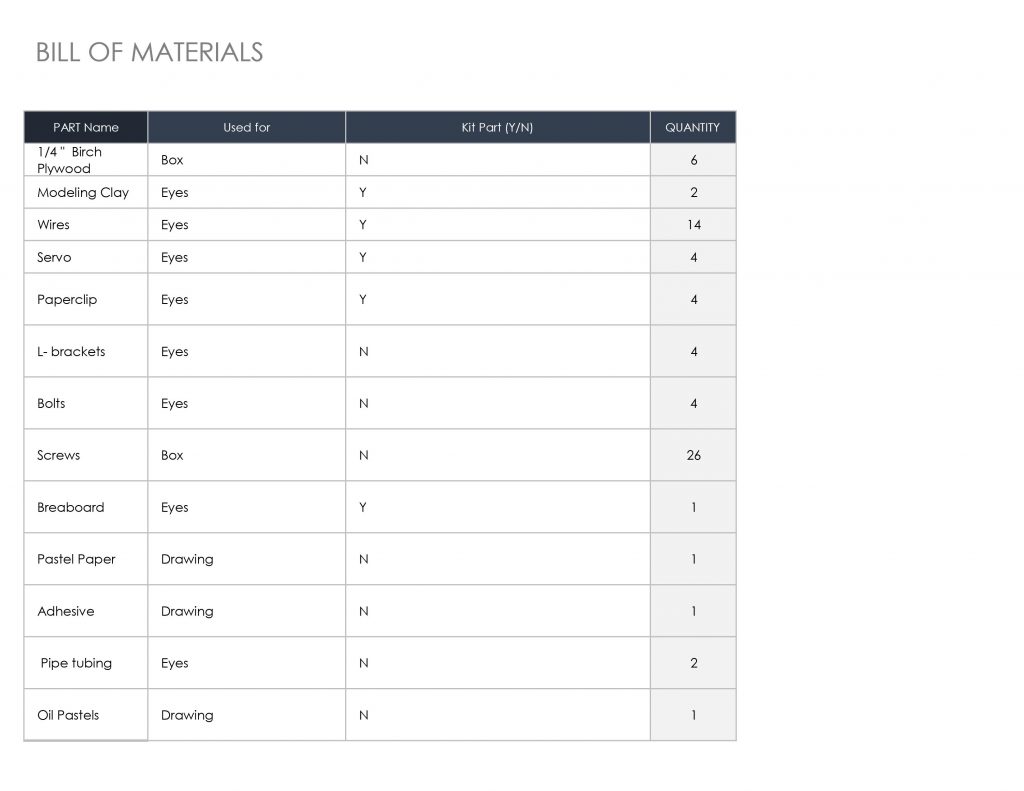

Draft of Bill of Materials
