Project Work Plan: Head in the Clouds

Wade Lacey, Amber Paige, Evan Hill

Our final project is titled “Head in the Clouds”. This piece was inspired by a Zimoun piece; we enjoyed the expressive movements derived from simple mechanics. Our goal for the piece is to create a unique and individual experience for each user. We hope to convey a feeling of mesmerizing isolation, similar to how you would feel lying on the ground and looking up at the clouds.

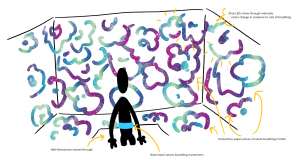

From the outside, the viewer will first see the piece as a large, plain enclosure. To begin their interaction, they will crouch down and walk or crawl into the enclosure. Once inside, they will be fully surrounded by abstract paper forms and lights that are still and seemingly inanimate. The user will either grab a sensing handle or put on a torso strap so that their breathing can be monitored. Once the device registers the user, the paper forms and diffuse lights will begin to expand and glow in time with the user’s breathing. The space will react in a way that creates a feeling of deep connection with the user, so that the surroundings almost seem like an extension of the user’s own body. It will expand and contract as the user breathes, creating a space with a constantly changing shape, size, and color. The depth of the space will seem almost greater than the physical dimensions of the enclosure.

The theme of this year’s course revolves around using software and hardware techniques to create expressive dynamic behaviours, while encompassing the following question: what does it mean to be surprisingly animate? We believe that we contribute to this theme by creating a space that is far more animate inside the enclosure than you would assume by looking at it from the outside. By using simple materials, such as motors and paper, to create movement and by linking their movements to the user, our piece aims to develop a relationship between the user and the space.

To create this robot, we will build a plywood enclosure that will encompass the top half of the viewer and will be supported on four legs. Inside, we will have lots of paper (typical white paper as well as tissue paper) that will be actuated. For this we need the paper and an actuation system composed of hobby servo motors and multiple microcontrollers. To actuate the paper in a more animate way, we will create a structure that is moved by the motors and in turn pushes and pulls on the paper in different ways. To build this component, we will need plywood, which will be laser cut into the desired designs. Behind the paper, along some areas of the enclosure walls, we will have LED strip lights to add more depth. The sensor system in our robot will be a breath sensor in the form of a chest strap worn by the person experiencing our piece. The input from this sensor will feed into our microcontrollers to affect the movement of the paper.
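As a rough illustration of how the breath input could drive the paper and lights, here is a minimal Python sketch. It is not our final firmware, and everything in it is an assumption: the class name `BreathMapper`, the smoothing factor, the servo angle limits, and the 0 to 255 brightness scale are placeholders we would tune once the real sensor and servos are in hand. The idea is to smooth the raw reading, scale it against the range of breathing seen so far, and map that level to a servo angle and an LED brightness.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class BreathMapper:
    """Maps raw breath-sensor readings to a servo angle and LED brightness.

    All default values are illustrative assumptions, not measured settings.
    """
    alpha: float = 0.2        # smoothing factor for the moving average (assumed)
    min_angle: float = 20.0   # servo angle at full exhale, in degrees (assumed)
    max_angle: float = 160.0  # servo angle at full inhale, in degrees (assumed)
    smoothed: Optional[float] = None  # current smoothed reading
    lo: Optional[float] = None        # lowest smoothed reading seen so far
    hi: Optional[float] = None        # highest smoothed reading seen so far

    def update(self, raw: float) -> Tuple[float, int]:
        """Take one raw sensor reading and return (servo_angle, led_brightness)."""
        # Exponential moving average to reduce sensor jitter.
        if self.smoothed is None:
            self.smoothed = raw
        else:
            self.smoothed += self.alpha * (raw - self.smoothed)

        # Track the range of this user's breathing so each person is
        # scaled against their own readings.
        self.lo = self.smoothed if self.lo is None else min(self.lo, self.smoothed)
        self.hi = self.smoothed if self.hi is None else max(self.hi, self.smoothed)

        # Normalize to 0.0 (full exhale) .. 1.0 (full inhale).
        span = self.hi - self.lo
        level = 0.0 if span == 0 else (self.smoothed - self.lo) / span

        # Linear map onto the servo range and an 8-bit brightness value.
        angle = self.min_angle + level * (self.max_angle - self.min_angle)
        brightness = round(level * 255)
        return angle, brightness
```

In use, a loop would call `update()` with each new sensor reading and send the returned angle to the servos and the brightness to the LED strips. Scaling against each user's own range is one possible way to make the space respond similarly to shallow and deep breathers.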

The two most important parts of our project, which will determine its overall success, are the sensing of the user’s breathing and the mechanism that actuates the paper forms. Once these are designed, built, and tested, we simply need to scale the mechanism to fill the entire enclosure. We plan to first experiment with paper to determine how we will make our forms and what mechanism we will use to create the expanding and contracting movement. Next, we will integrate a single mechanism with the sensor to ensure we can create a sense of connection by monitoring the user’s breathing. The next step will be to design the structure of the enclosure in a way that makes it easy to attach the paper actuation mechanisms all over and to run the wiring throughout. Our structure also needs to be easy to disassemble so that we can move it to the final gallery show. Once our structure is built and we have a working actuation design and sensing system, we will work to implement the actuation mechanism at scale.

A more detailed path analysis can be seen in the schedule linked below. Our B.O.M. and budget are linked below as well.
