By Jordi Long and Zhecui Zhang (Doris)

Concept:

The specific feeling that we are trying to evoke is inter-species compassion. We are attempting to create empathy in the viewer toward the robot in different scenarios.

Performance Objective:

The robot needs human help to fulfill its purpose. We hope the robot's mannerisms and actions, as it faces different challenges in the scene, will make the viewer want to help and protect it.

Design:

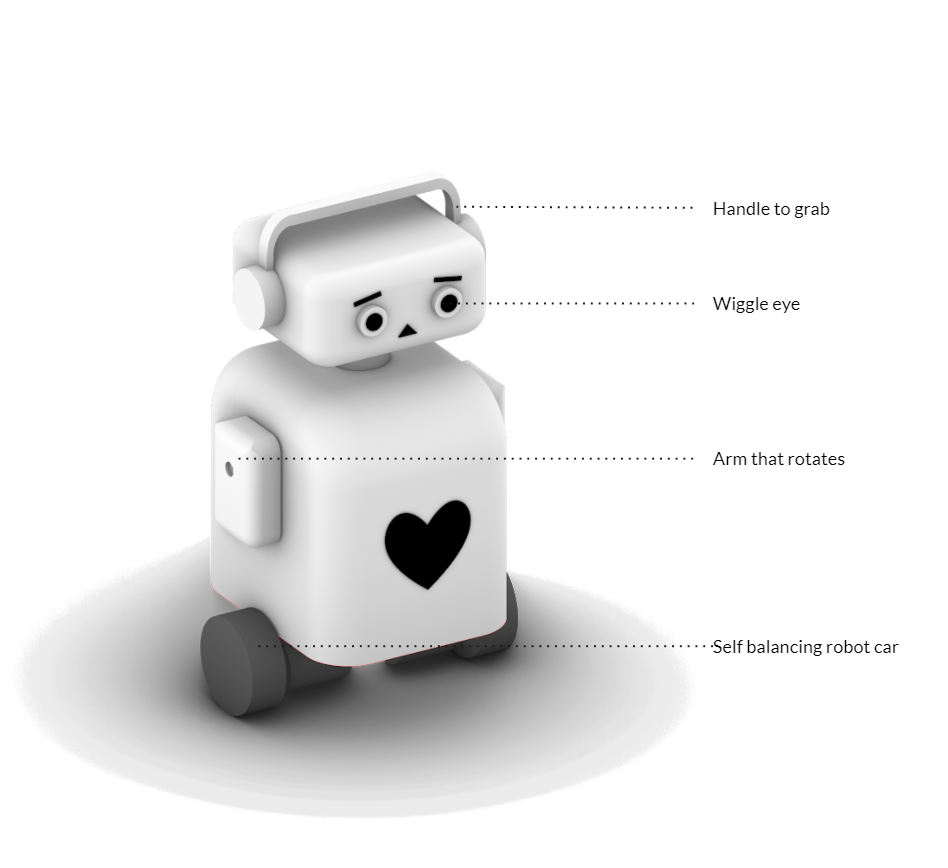

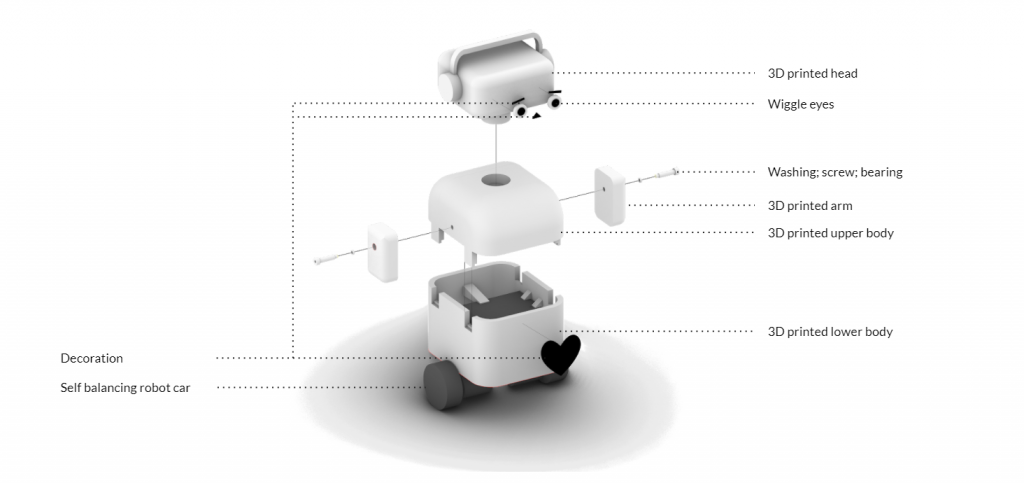

Since we are trying to create a helpless robot that needs human attention and help, we decided to give the robot a sad, cute facial expression. The self-balancing robot car kit we purchased online moves clumsily, which fits our design concept of a robot that is not very capable. To make it easy for people to help the robot stand up, we added a pair of headphones as a handle to grab. The robot has three degrees of freedom for dynamic movement: wiggle eyes, a rotating arm, and the self-balancing robot car itself.

Fabrication

Coding

- Arduino IDE: code provided by the robot kit's developer

- Python Remote Control Interface, which allows us to:

  - Alter the gains of the robot's PID controller for balance

  - Drive the robot like a remote-control car using the keyboard

  - Act out different scenarios without writing hundreds of lines of code

- The remote control aspect of our robot gives it a sense of personality, as it makes mistakes and falls over often.
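The source of our Python interface is not included in this post, so the following is only a minimal sketch, under our own assumptions, of the two ideas listed above: a textbook PID controller whose gains can be retuned while the robot runs, and a mapping from keypresses to drive commands. The class name `PID`, its `set_gains`/`update` methods, the key bindings, and the command strings are all hypothetical. The kit's actual firmware may structure its balance loop differently, and the real interface talks to the Arduino over a protocol not shown here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PID:
    """Textbook PID controller whose gains can be changed while running.

    Assumption: for a self-balancing robot, `error` would be the tilt angle
    away from upright and the output a motor command.
    """
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def set_gains(self, kp: float, ki: float, kd: float) -> None:
        # Apply new gains and clear the accumulated integral so the old
        # error history does not cause a sudden jump in output.
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0

    def update(self, error: float, dt: float) -> float:
        # Integral term: running sum of error over time.
        self.integral += error * dt
        # Derivative term: rate of change of error (zero on the first call).
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


# Hypothetical key bindings and command names, for illustration only.
KEYMAP = {
    "w": "FORWARD",
    "s": "BACKWARD",
    "a": "LEFT",
    "d": "RIGHT",
    " ": "STOP",
}


def key_to_command(key: str) -> str:
    """Map a keypress to a drive command; unrecognized keys stop the robot."""
    return KEYMAP.get(key.lower(), "STOP")


if __name__ == "__main__":
    pid = PID(kp=2.0, ki=0.5, kd=0.1)
    print(pid.update(error=1.0, dt=0.1))
    pid.set_gains(kp=3.0, ki=0.5, kd=0.2)  # e.g. retuned from the keyboard
    print(key_to_command("W"))
```

Resetting the integral when the gains change is one design choice among several: it gives up a moment of integral action in exchange for avoiding a sudden lurch right after retuning, which matters for a robot that already falls over easily.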

Scenarios

- Bump into foam blocks

- Bump into a futon

- Walk around a plant

- Walk through a stool

- Transition between timber flooring and carpet

Evaluation

The robot was successful with the two people we tested it on. However, both are graduate students in the ETC programme, so they are familiar with human-robot interaction and interactive devices in general. One of them, the male participant, worked on a much more advanced robot project with a Boston Dynamics robot, which is probably why he was so responsive to the robot we built (see the video documentation). We should therefore test the robot with people from different age groups and more diverse backgrounds.

The human-robot interaction in the documentation suggests the robot would be more interesting if it were more responsive: for example, if it moved its head when a person says hi, or stared at the participant while moving around to attract more attention.


Contribution

Jordi: Coding + Driving + Video Editing

Doris: Design + Fabrication + Video Recording

Documentation

***Our video documentation is very long because the male participant was so responsive to the robot, and the interaction was so interesting, that we did not want to cut the interaction and interview short.

***The footage of the male participant is very blurry because we forgot to check the camera's focus before recording, and we felt it would not be authentic to re-record it.
