ehyang // xiaoranl

01_Title

Audience

02_Statement

Designed to disrupt traditional piece-viewer exhibition dynamics, Audience focuses its attention on whatever it decides is most interesting, turning willing viewers into performers. Audience also taps into the context of surveillance and camera technology in modern society to further impact viewers.

Audience recognizes individual viewers, judges which is most interesting, and locks on to the chosen subject with all three of its cameras. The performer is now aware that Audience's attention, and as a result the attention of the surrounding viewers, is focused on them. The goal is to highlight the reaction to this attention.

The piece in itself is quite uninteresting; what is interesting is how it interacts with the audience. For that reason, the name of the project can be read in two different ways. The first is that the piece is itself an observer of, and audience to, the people around it. The second is that it requires an audience in order to function in an interesting way. We found this to be a compelling theme, as the roles of observer and observed are ambiguous in this scenario. What the audience makes of this interaction is up to their own interpretation.

03_Relation to Course Themes

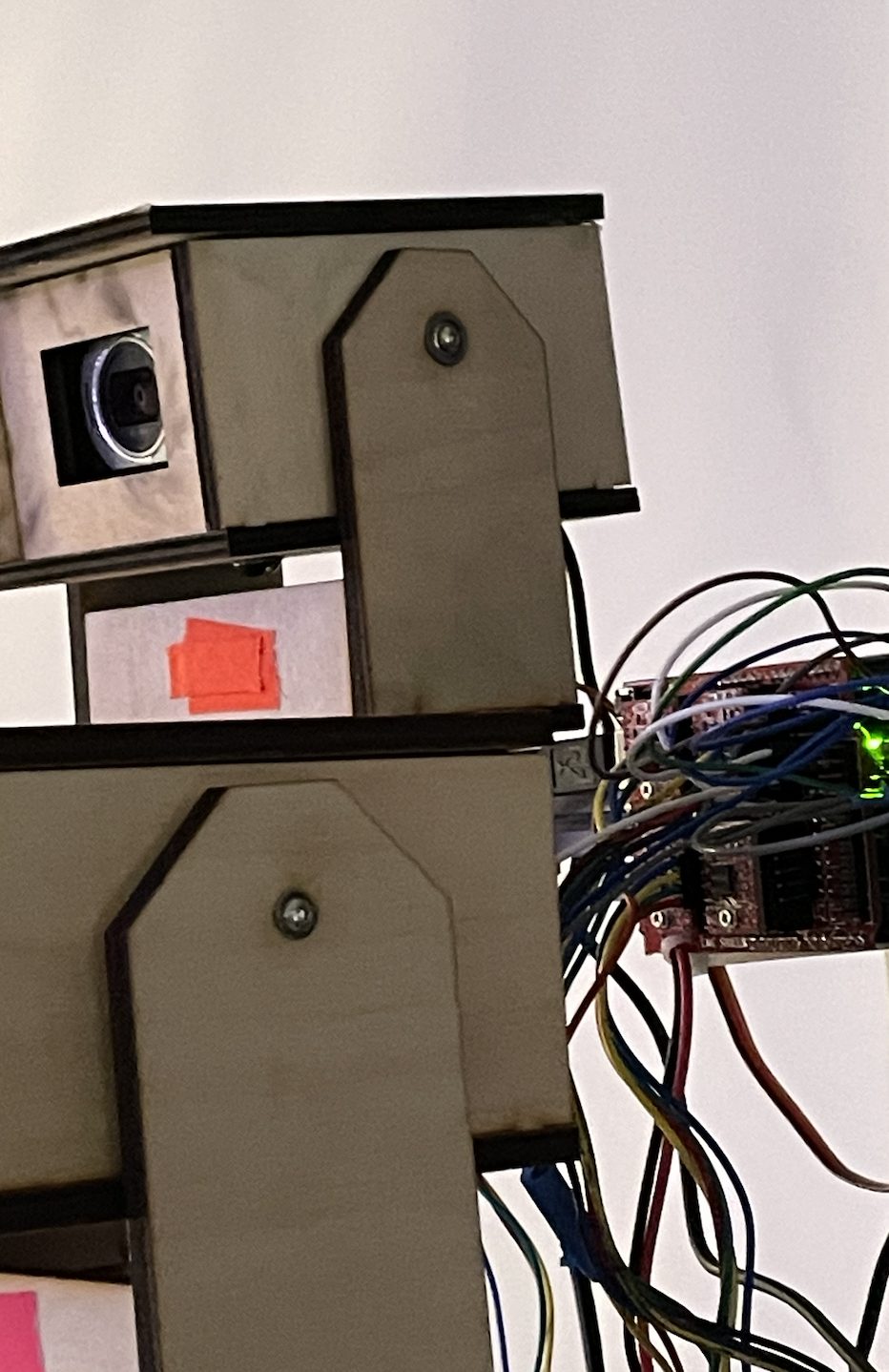

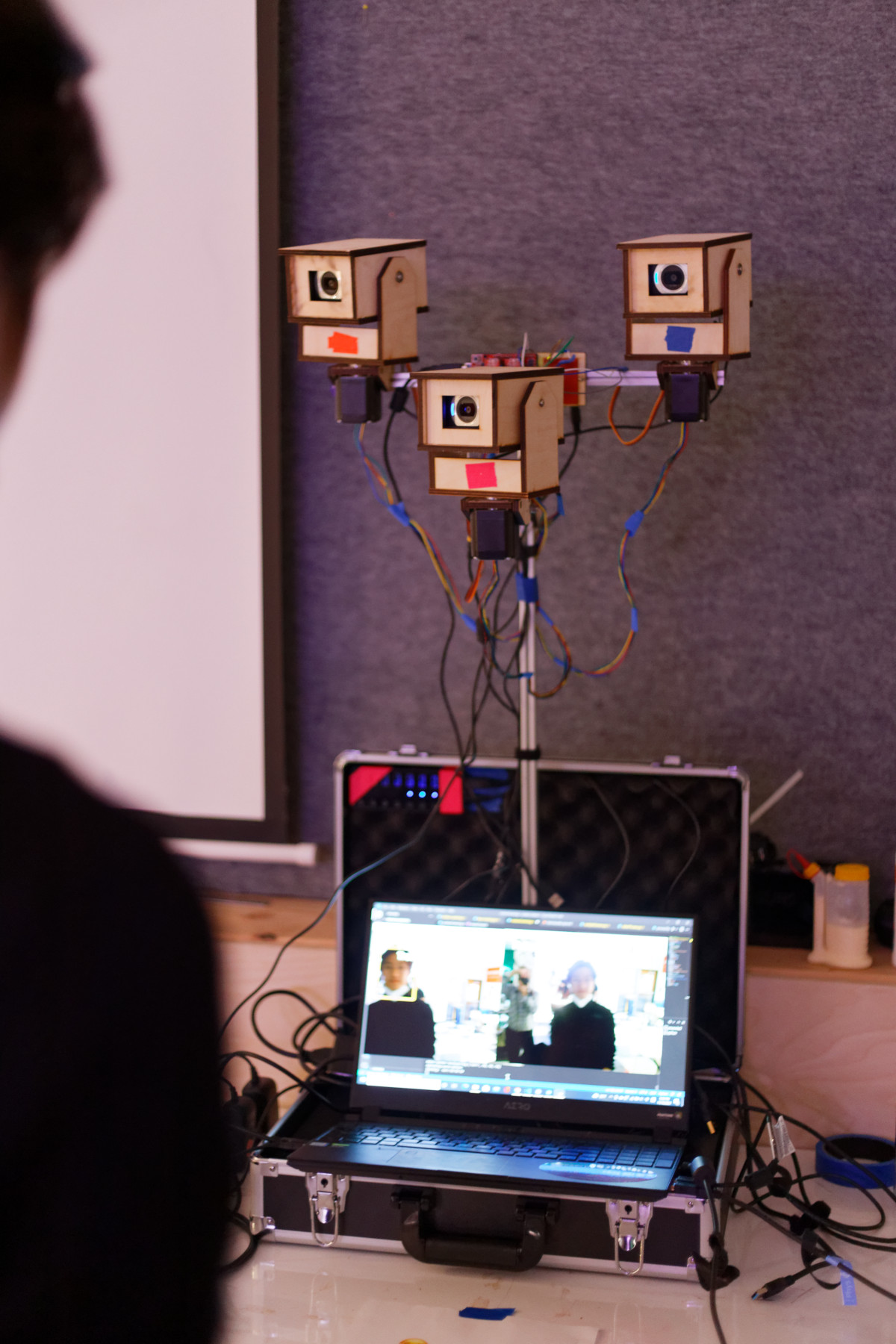

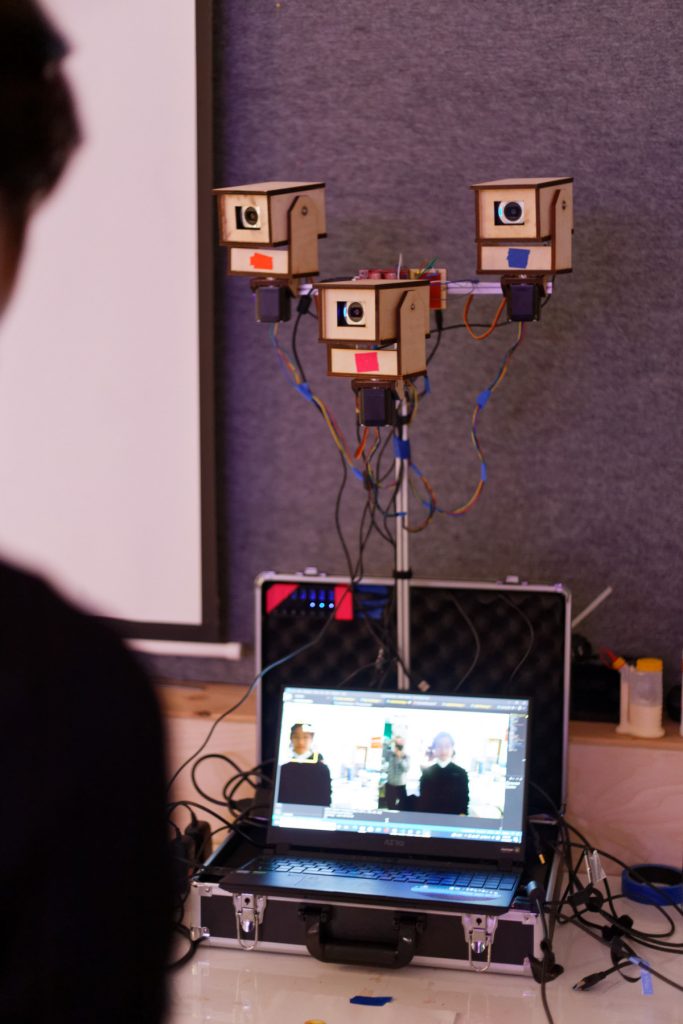

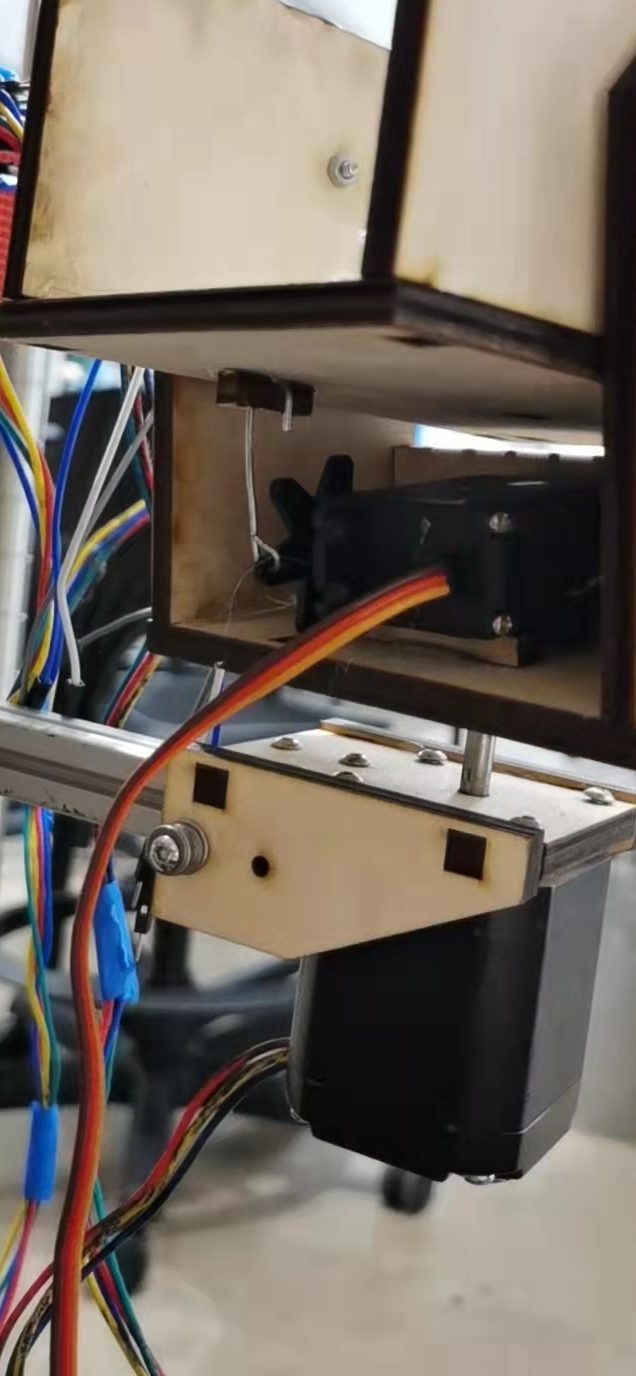

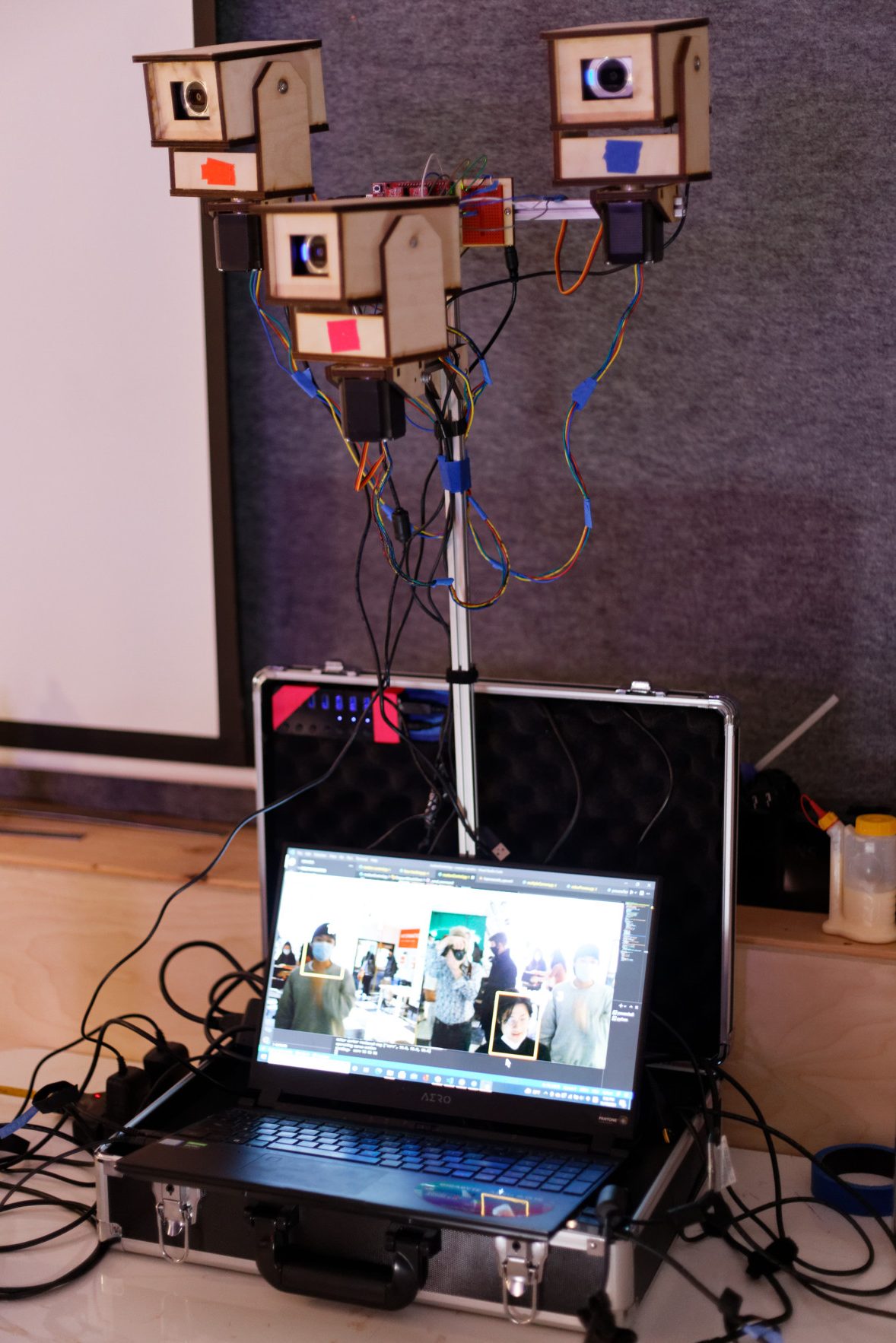

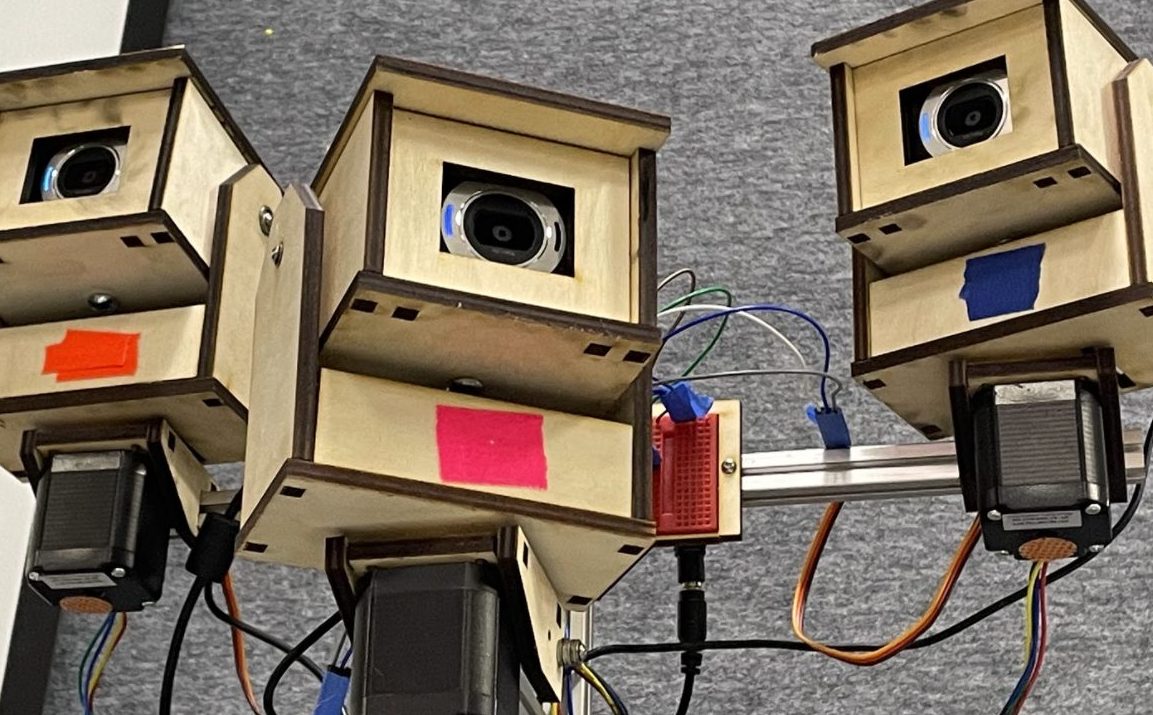

Essentially, Audience is a 3-camera tree apparatus mounted in a suitcase, with each camera capable of pan and tilt motions. Even with such simple motions, Audience creates the impression of a foreign object whose purpose is to research and observe, hopefully inspiring curiosity and caution in viewers.

Audience can serve as an interesting experiment demonstrating how much the context of the technology used, its behavior, and its appearance matter, along with the synthesis of the three. Furthermore, robotic sculpture in itself carries a connotation that can’t be ignored, and this contributes to the impact of this particular piece in a major way. Generally speaking, while complexity adds a unique dimension to robotic sculpture, it is not essential to creating an artistic narrative or message.

04_Outcomes

Hardware:

Audience was generally successful as a physical piece. The decision to have three cameras, arguably the stand-out characteristic of the piece, was extremely important, as the impression of “multiple viewers” within the “Audience” would not have been nearly as impactful otherwise (two cameras can give the impression of eyes, and do not communicate a “crowd”). Stainless steel beams support the structure as it towers out from the suitcase and over viewers. The scale of the piece successfully established the intended subtle power dynamic and feeling of surveillance. Laser-cut braces, an exposed Arduino, loose wiring, and the suitcase form give the piece the character of an autonomous “scavenged tool used in a dystopian future”, suggesting a higher power utilizing the tool and tying into the fears associated with surveillance.

Software:

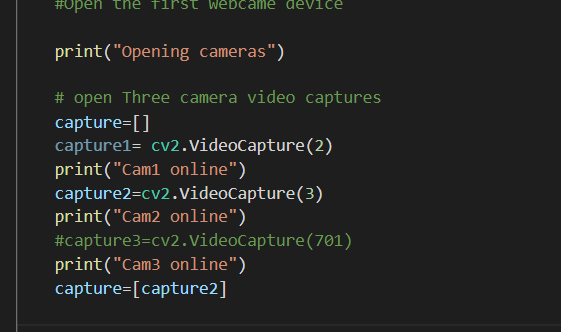

The software is the driving force behind the motion of the cameras. For this project, the code consists mainly of two parts: the OpenCV Python code that captures the video feeds and attempts facial recognition, and the Arduino script that receives commands and drives the six motors.

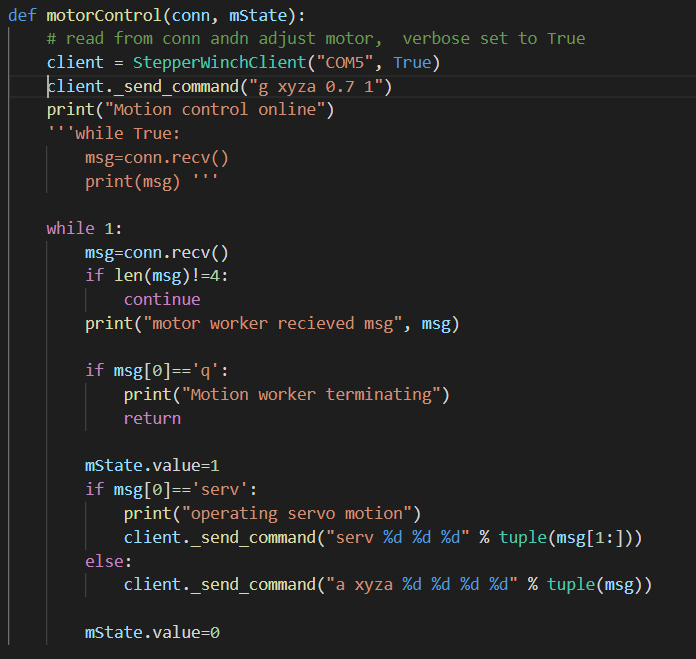

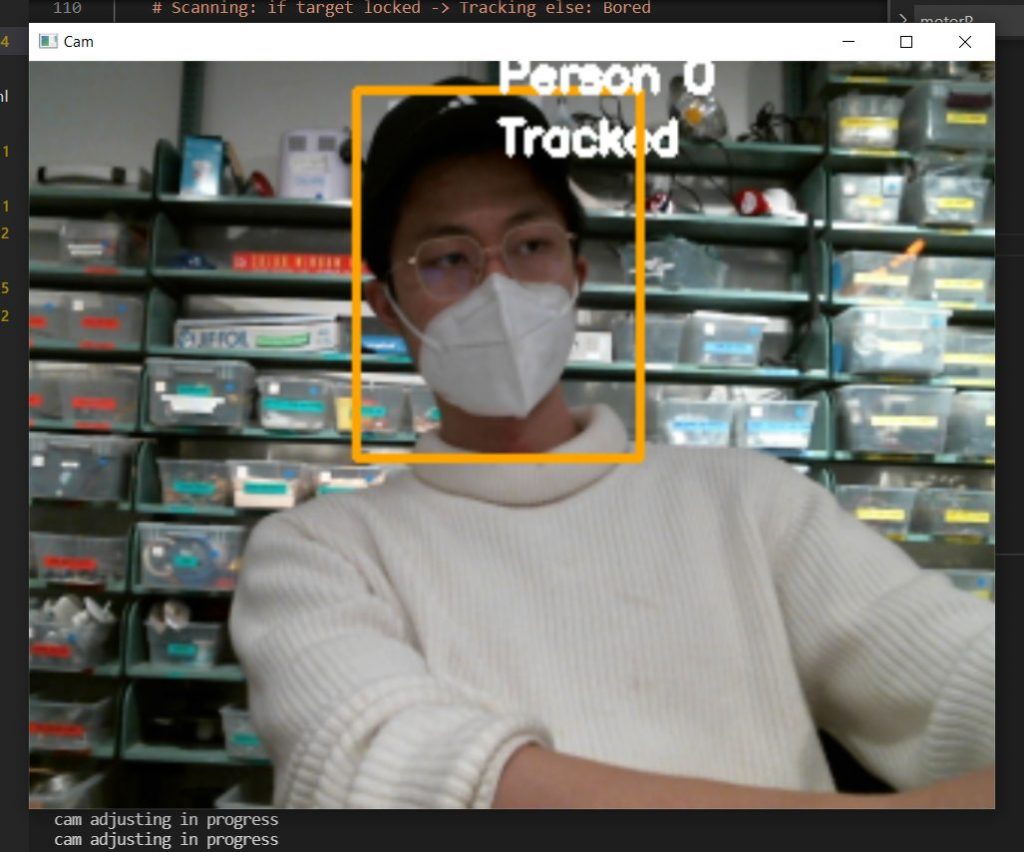

For the OpenCV part, the program opens all three cameras and runs facial recognition on each feed to capture all possible faces. In general, when a face is captured, the camera tries to stay focused on that person. The program sends commands to the Arduino through a concurrent worker, and the Arduino in turn drives the NEMA or servo motors to the correct position. The camera thus follows the person around.
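The tracking step described above can be sketched roughly as follows. This is a minimal illustration and not the project's actual code: the function names, the field-of-view values, and the `m <camera> <pan> <tilt>` command format are all assumptions made for the example, and an in-memory buffer stands in for the real serial port.

```python
import io
import queue
import threading


def face_to_pan_tilt(face, frame_w, frame_h, fov_h_deg=60.0, fov_v_deg=40.0):
    """Convert a face box (x, y, w, h) in pixels into pan/tilt corrections in degrees.

    Positive pan means the face is right of centre; positive tilt means it is
    below centre. The field-of-view defaults are assumed, not measured.
    """
    x, y, w, h = face
    cx = x + w / 2.0
    cy = y + h / 2.0
    # Normalised offset of the face centre from the image centre, in [-0.5, 0.5].
    dx = cx / frame_w - 0.5
    dy = cy / frame_h - 0.5
    return dx * fov_h_deg, dy * fov_v_deg


def format_command(camera_id, pan_deg, tilt_deg):
    """Encode one move as a newline-terminated ASCII line (hypothetical protocol)."""
    return f"m {camera_id} {pan_deg:.1f} {tilt_deg:.1f}\n".encode("ascii")


def serial_worker(commands, port):
    """Write queued commands to the port until the None sentinel arrives."""
    while True:
        item = commands.get()
        if item is None:
            break
        port.write(item)


# Demo: an in-memory buffer stands in for the serial connection to the Arduino.
commands = queue.Queue()
port = io.BytesIO()
worker = threading.Thread(target=serial_worker, args=(commands, port))
worker.start()

# A face box whose centre sits three quarters of the way across a 640x480 frame.
pan, tilt = face_to_pan_tilt((440, 200, 80, 80), frame_w=640, frame_h=480)
commands.put(format_command(0, pan, tilt))
commands.put(None)  # sentinel: stop the worker
worker.join()
print(port.getvalue())
```

Running the serial writes on a separate thread keeps a slow port from stalling the video loop, which is presumably the reason for the concurrent worker mentioned above.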

The more interesting behavior comes from state assignment and randomness. Each camera shifts between four states: Bored, Tracking, Following, and Scanning. Each state involves different behavior, of which the most notable is Following. While each camera is essentially controlled separately, the cameras can coordinate. When a tracking camera decides that a person is particularly interesting, it may pull the other two cameras to follow its movement, creating the impression that you are being observed by all three cameras.
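One possible shape for this state logic is sketched below. The four state names come from the description above; the transition rules, the probabilities (`interest_p`, `bored_p`), and the `Camera` and `update` names are assumptions invented for illustration, since the actual rules are not documented here.

```python
import random
from enum import Enum


class State(Enum):
    BORED = "bored"
    SCANNING = "scanning"
    TRACKING = "tracking"
    FOLLOWING = "following"


class Camera:
    def __init__(self, name):
        self.name = name
        self.state = State.SCANNING
        self.leader = None  # camera being mirrored while FOLLOWING

    def step(self, sees_face, rng, interest_p=0.1, bored_p=0.05):
        """Advance one frame; return True if this camera recruits the others."""
        if self.state is State.FOLLOWING:
            # Stay locked to the leader until the leader stops tracking.
            if self.leader.state is not State.TRACKING:
                self.state, self.leader = State.SCANNING, None
            return False
        if sees_face:
            self.state = State.TRACKING
            # Randomly decide that this person is interesting enough to share.
            return rng.random() < interest_p
        if self.state is State.TRACKING:
            self.state = State.SCANNING  # lost the face, go looking again
        elif self.state is State.SCANNING and rng.random() < bored_p:
            self.state = State.BORED
        elif self.state is State.BORED and rng.random() < bored_p:
            self.state = State.SCANNING
        return False


def update(cameras, faces_seen, rng):
    """Step every camera once; a recruiting camera pulls the others along."""
    for cam, sees_face in zip(cameras, faces_seen):
        if cam.step(sees_face, rng):
            for other in cameras:
                if other is not cam:
                    other.state, other.leader = State.FOLLOWING, cam
            break


cameras = [Camera("left"), Camera("middle"), Camera("right")]
rng = random.Random(0)
for _ in range(50):
    update(cameras, [False, True, False], rng)
print([cam.state.name for cam in cameras])
```

Keeping the random draws behind an `rng` argument makes the behavior reproducible during testing while still looking unpredictable in the exhibition setting.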

The Arduino script simply receives commands and produces the corresponding motion in the six motors.

05_Video Documentation

06_Reflections and Challenges

The main shortcoming of the system was, in fact, scaling the project up to three cameras. Fairly early in development, we had a smoothly working model with a single camera. However, the OpenCV code with three HD camera feeds consumed a large proportion of the USB bandwidth of the laptop running the system. We had to downscale the image quality and give each camera its own USB port, which in turn created difficulties for Arduino control, as the Arduino had to share a port with one of the cameras.
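A rough back-of-the-envelope calculation shows why three feeds can overwhelm a shared bus. The numbers below are assumptions for illustration only: the write-up does not state the USB version, resolution, frame rate, or pixel format, so the sketch assumes uncompressed 2-bytes-per-pixel video and the 480 Mbit/s signalling rate of USB 2.0 high speed (usable throughput is lower in practice).

```python
USB2_BITS_PER_SECOND = 480_000_000  # USB 2.0 high-speed signalling rate


def stream_bits_per_second(width, height, fps, bytes_per_pixel=2):
    """Raw bandwidth of one uncompressed stream (2 bytes/pixel, e.g. YUY2)."""
    return width * height * bytes_per_pixel * fps * 8


def bus_share(streams):
    """Fraction of the assumed bus rate that the given streams would need."""
    total = sum(stream_bits_per_second(*stream) for stream in streams)
    return total / USB2_BITS_PER_SECOND


hd_feeds = [(1280, 720, 30)] * 3     # assumed "HD" setting
small_feeds = [(640, 480, 15)] * 3   # assumed downscaled setting

print(f"3 x 720p at 30 fps: {bus_share(hd_feeds):.2f} of the bus")
print(f"3 x 480p at 15 fps: {bus_share(small_feeds):.2f} of the bus")
```

Under these assumptions, three uncompressed 720p feeds would need well over the capacity of a single bus, while the downscaled feeds fit in under half of it, which is consistent with the need to lower image quality and spread the cameras across ports.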

The second challenge was writing to the Arduino port in real time alongside the OpenCV video code. It was very difficult to get the Arduino control to respond in real time, and each run was short-lived because of a port write capacity problem on the Arduino, which blocked any further write attempts about 2 minutes after the project was turned on.
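The cause of the blocked writes was not identified, so the following is only one mitigation that might be worth trying, on the assumption that commands were being produced faster than the Arduino could consume them. The sketch keeps just the newest command and rate-limits writes; `LatestCommand`, `writer`, and the command strings are hypothetical names, and an in-memory buffer again stands in for the serial port.

```python
import io
import threading
import time


class LatestCommand:
    """Single-slot mailbox: a newer command overwrites an unsent older one."""

    def __init__(self):
        self._cond = threading.Condition()
        self._value = None
        self._closed = False

    def put(self, value):
        with self._cond:
            self._value = value
            self._cond.notify()

    def close(self):
        with self._cond:
            self._closed = True
            self._cond.notify()

    def get(self):
        """Block until a command is available; return None once closed and empty."""
        with self._cond:
            while self._value is None and not self._closed:
                self._cond.wait()
            value, self._value = self._value, None
            return value


def writer(mailbox, port, min_interval_s=0.05):
    """Send at most one command per interval so stale targets never pile up."""
    while True:
        command = mailbox.get()
        if command is None:
            break
        port.write(command)
        time.sleep(min_interval_s)


# Demo: three targets arrive before the writer runs; only the newest is sent.
mailbox = LatestCommand()
for pan in (10, 20, 30):
    mailbox.put(f"m 0 {pan} 0\n".encode("ascii"))
mailbox.close()

port = io.BytesIO()
writer(mailbox, port, min_interval_s=0.0)
print(port.getvalue())
```

For a tracking task only the most recent target matters, so dropping stale commands loses nothing. With pyserial, opening the port with a `write_timeout` would additionally turn a stalled write into a `SerialTimeoutException` that can be caught, instead of a silent hang.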

07_Citations

Source code:

https://towardsdatascience.com/face-detection-in-2-minutes-using-opencv-python-90f89d7c0f81 OpenCV facial recognition reference

https://courses.ideate.cmu.edu/16-375/f2021/text/code/StepperWinch.html#stepperwinch Arduino script

https://courses.ideate.cmu.edu/16-375/f2021/text/code/suitcase-scripting.html#suitcase-motion-demo-py Pyserial script reference

CAD files:

08_Photo Documentation

09_Contributions

Ethan:

Original brainstorm / ideation

Visual / artistic direction

Design research / BoM

Prototype fabrication / iteration

Final fabrication

Alex:

“Behavior” brainstorm / ideation

“Behavior” research

Software iteration

Final software