by Manuel Rodriguez & Jett Vaultz

13 May, 2018

https://vimeo.com/269571365

Abstract

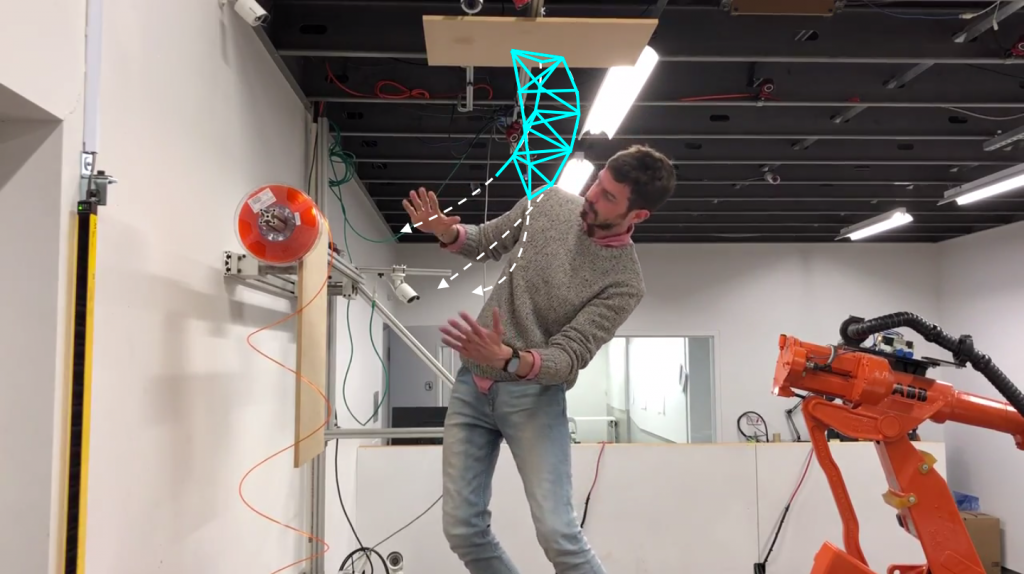

Agent Conductor is an interactive fabrication process in which the user controls virtual autonomous agents to develop organic meshes, using MoCap technology and 3D spatial printing techniques. The user makes conducting gestures to influence the movements of the agents as they progress from a starting point A to an end point B, with real-time visual feedback provided by a projection of the virtual workspace. The resulting artifact is a 3D-printed version of the organic, tunnel-like structure generated from the agents’ paths.

Objectives

Our goal for this toolkit was to fabricate an organic, structurally sound truss. Given the weakness of the material, such a truss would most likely be suited to decorative, non-structural architectural elements.

We wanted to develop a few key features:

- Generate a virtual 3D mesh that is printable with the spatial printing tool

- Some degree of user control over the path the agents take to the target

- User control over the diameter (size) of the mesh at any given point

- Responsive view of the virtual workspace for real-time feedback

Implementation

With this list of key control elements in mind, we wanted the interaction to be simple and intuitive. To influence the path the agents take to the target, the most effective option seemed to be swiping gestures in the direction the user wants the agents to move, similar to throwing a ball or swinging a tennis racket.

We originally generated the truss with a flock of autonomous agents, each moving individually. However, we wanted to avoid the flock translating as a whole every time a gesture force was applied, so we eventually replaced the cluster of boids with a single autonomous frame carrying an adjustable number of satellites that represent the agents. This ensures smooth rotations and avoids most of the self-intersection issues that come with having a flock of agents generate the truss lines. The truss surface loses some of its organic character, but controlling a single frame gives us easier control and better printability.
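To illustrate why a single frame keeps the agents together, here is a minimal sketch, assuming the frame is described by an origin and a heading vector and that the satellites sit evenly on a circle in the plane perpendicular to the heading. The function `satellite_positions` and its parameters are hypothetical and are not taken from the toolkit's Grasshopper definition. Because every satellite is derived from the same frame, turning the heading rotates the whole ring rigidly instead of letting individual agents drift apart.

```python
import math

def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def satellite_positions(origin, heading, radius, count, up=(0.0, 0.0, 1.0)):
    """Hypothetical helper: place `count` satellites evenly on a circle of
    `radius` around `origin`, in the plane perpendicular to `heading`."""
    h = normalize(heading)
    # Pick a helper vector that is not (nearly) parallel to the heading.
    if abs(sum(a * b for a, b in zip(h, up))) > 0.99:
        up = (1.0, 0.0, 0.0)
    # Two unit axes spanning the plane perpendicular to the heading.
    x_axis = normalize(cross(up, h))
    y_axis = cross(h, x_axis)
    points = []
    for i in range(count):
        angle = 2.0 * math.pi * i / count
        c, s = math.cos(angle), math.sin(angle)
        points.append(tuple(o + radius * (c * xa + s * ya)
                            for o, xa, ya in zip(origin, x_axis, y_axis)))
    return points
```

Changing `count` adjusts the number of agents, and changing `radius` adjusts the local diameter of the mesh, without either affecting where the frame itself goes.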

We needed the user to understand what they were seeing and to be able to change the view to get meaningful visual feedback. We gave the camera a rigid body driven by the user’s position, making it easier to see the shape of the mesh and the direction the agents are moving on screen. The camera eases over to the user’s position rather than jarringly matching every motion the user makes.
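The easing behavior can be approximated with simple exponential smoothing: each frame, the camera moves a fixed fraction of the remaining distance toward the tracked user position. This is a stand-in for the rigid-body setup described above, not the toolkit's actual camera code; `ease_towards` and the `factor` value are assumptions for illustration.

```python
def ease_towards(current, target, factor=0.1):
    """Move `current` a fixed fraction `factor` of the way toward `target`.
    Called once per frame, this gives smooth, exponentially decaying motion."""
    return tuple(c + factor * (t - c) for c, t in zip(current, target))

# Example: the camera follows a user standing at (10, 0, 2).
camera = (0.0, 0.0, 0.0)
user = (10.0, 0.0, 2.0)
for _ in range(30):
    camera = ease_towards(camera, user, factor=0.15)
```

With `factor=0.15`, the gap to the user shrinks by 15% each frame, so small jitters in the MoCap data are damped while larger moves are still followed.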

After the conducting process, the generated virtual 3D mesh is printed with the spatial printing tool, using the fabrication method detailed here.

Possible structures

With this system, users can create a few different kinds of tunnel-like structures depending on the type of conducting movements. Without any input from the user, the agents move smoothly from the starting point to the end point. The result is a mesh that looks much like a uniform pipe:

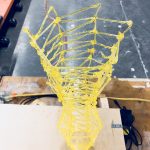
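A minimal sketch of how the frame could progress from A to B, assuming each step moves it a fixed distance `pace` along a heading that blends the current heading, the direction to the target, and an optional steering vector from gestures. The names `advance_frame`, `path_to_target`, and `seek_weight` are hypothetical. With no steering, the heading always points at the target, so the path is a straight line, which is what produces the uniform pipe.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def scale(v, s):
    return tuple(x * s for x in v)

def length(v):
    return math.sqrt(sum(x * x for x in v))

def normalize(v):
    return scale(v, 1.0 / length(v))

def advance_frame(position, heading, target, pace,
                  seek_weight=0.2, steer=(0.0, 0.0, 0.0)):
    # Blend the current heading with the direction to the target, plus steering.
    to_target = normalize(sub(target, position))
    blended = add(add(scale(heading, 1.0 - seek_weight),
                      scale(to_target, seek_weight)), steer)
    new_heading = normalize(blended)
    # Move exactly one pace along the new heading.
    return add(position, scale(new_heading, pace)), new_heading

def path_to_target(start, target, pace, max_steps=10_000):
    """Hypothetical: list of frame positions from start to target, no user input."""
    position = start
    heading = normalize(sub(target, start))
    path = [position]
    for _ in range(max_steps):
        if length(sub(target, position)) <= pace:
            break
        position, heading = advance_frame(position, heading, target, pace)
        path.append(position)
    path.append(target)  # snap the final frame onto the end point
    return path
```

Each position in the returned path would be one place where a ring of satellites, and therefore one set of truss segments, is generated.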

The distance between the user’s left and right hands determines the diameter of the mesh, from about 10 cm to half a meter. Varying this distance can either cinch the pipe or make it balloon outward.
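One way to map hand distance to diameter is a clamped linear mapping onto the 10 cm to 50 cm range mentioned above. The hand-distance range `reach` (0.2 m to 1.5 m) and the function name are assumptions for illustration; the actual calibration in the toolkit is not specified.

```python
import math

def hand_distance_to_diameter(left, right, min_d=0.10, max_d=0.50, reach=(0.2, 1.5)):
    """Hypothetical mapping from hand positions (metres) to mesh diameter (metres).
    Hand distances outside `reach` are clamped to the ends of the diameter range."""
    d = math.dist(left, right)
    lo, hi = reach
    t = (d - lo) / (hi - lo)
    t = max(0.0, min(1.0, t))
    return min_d + t * (max_d - min_d)
```

Clamping keeps the diameter inside a printable range even if the tracked hands move unusually close together or far apart.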

The user’s right hand steers the direction in which the agents progress: repeated swiping gestures in the desired direction should push the agents that way, creating curves in the mesh.
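A minimal sketch of swipe-based steering, assuming a swipe is detected when the right hand's speed between two MoCap samples exceeds a threshold, and that each swipe adds a small impulse in the swipe direction that fades over time. The thresholds, gains, and function names are hypothetical. Because each impulse is small and decays, a single flick only nudges the agents, which is why repeated swipes are needed to bend the path noticeably.

```python
import math

def swipe_impulse(prev_pos, curr_pos, dt, speed_threshold=1.0, gain=0.05):
    """Return a steering impulse if the hand moved fast enough to count as a swipe."""
    velocity = tuple((c - p) / dt for c, p in zip(curr_pos, prev_pos))
    speed = math.sqrt(sum(v * v for v in velocity))
    if speed < speed_threshold:
        return (0.0, 0.0, 0.0)  # slow movement: not a swipe
    # Unit direction of the swipe, scaled by a small gain.
    return tuple(gain * v / speed for v in velocity)

def update_steer(steer, impulse, rate=0.9):
    # Old steering fades each frame; a new swipe adds on top of what remains.
    return tuple(rate * s + i for s, i in zip(steer, impulse))
```

The accumulated steering vector could then be fed into whatever step function advances the frame toward the target.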

The start and end positions can also be placed anywhere to match the physical space if needed, as shown above. To generate a smoother and denser mesh, one can increase the number of agents and reduce the pace at which the truss segments are generated. Likewise, to get a more open, low-poly mesh, the number of agents can be reduced to as few as three, with a higher pace.
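To make the density trade-off concrete, here is a hypothetical truss generator, assuming a ring of satellite points is recorded at every pace step and consecutive rings are joined by hoops, longitudinal members, and one diagonal brace per bay. This topology is an illustrative assumption, not necessarily the exact pattern the toolkit generates. With R rings of N agents it produces R·N + 2·N·(R − 1) segments.

```python
def truss_lines(rings):
    """Connect rings of satellite points into truss segments.
    `rings` is a list of rings; each ring is a list of N points in matching order."""
    lines = []
    for ring in rings:
        n = len(ring)
        for i in range(n):
            lines.append((ring[i], ring[(i + 1) % n]))  # hoop around the ring
    for a, b in zip(rings, rings[1:]):
        n = len(a)
        for i in range(n):
            lines.append((a[i], b[i]))            # longitudinal member
            lines.append((a[i], b[(i + 1) % n]))  # diagonal brace
    return lines

def segment_count(num_rings, num_agents):
    # Closed-form count matching truss_lines above.
    return num_rings * num_agents + 2 * num_agents * (num_rings - 1)
```

Halving the pace roughly doubles the number of rings along the same path, and each extra agent adds one point per ring, so both changes increase the segment count and make the mesh denser; three agents with a large pace gives the sparsest, most low-poly result.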

See here for a chart of possible variations one can generate with our toolkit.

Outcomes

Overall, the toolkit was a success, and we were able to generate mostly printable meshes through this conducting process. It was interesting to watch users work with the toolkit and see how they interpreted the instructions to create the virtual structures. This helped us make the interaction more intuitive and usable, and develop a better explanation of the toolkit and how to use it.

Regarding generation of the virtual 3D mesh, there are still some self-intersection issues that we have not been able to fully resolve, because of the way we implemented the gestural interaction. Additionally, a twisting issue sometimes occurs at the base of the structure; it becomes more visible with a shorter pace.

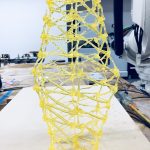

Manuel and his thesis partner developed the printing tool to the point where we could print one of the generated structures, shown below. The fabrication was mostly successful, although a few small issues with extrusion and airflow required repeated intervention to make sure the plastic would stick and hold its shape.

Contribution

Manuel: GH/Python algorithm (gestural interaction, truss generator, agent definition), user interface, 3D printing

Jett: GH/Python algorithm (gestural interaction, camera motion, agent definition), user interface, blog post, video