Project Description

Our design system reinterprets 2D drawings and extracts additional information from them through stroke and line analysis. This new data allows the 2D art to be visualized in 3D space, which can help the artist create further artwork.

Motivation and Background

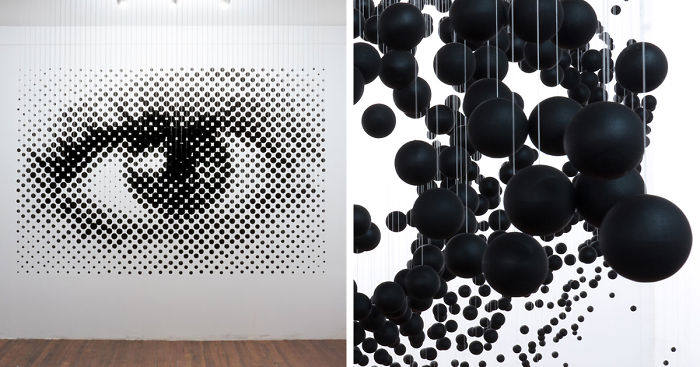

Our team originally formed around a nebulous idea of transforming and reinterpreting 2D artwork. Some existing examples of this that inspired us included perspective-based sculptures and computational string art.

We were not sure which transformations would produce the most meaningful results, so we began experimenting with different ways to transform images in Grasshopper.

We also wanted to take advantage of the fact that two of the three team members were art and design students, so choosing the source material for our transformations became an important consideration. While we designed the system, Jon’s and Tay’s artwork and their different styles informed the transformations we applied to the images.

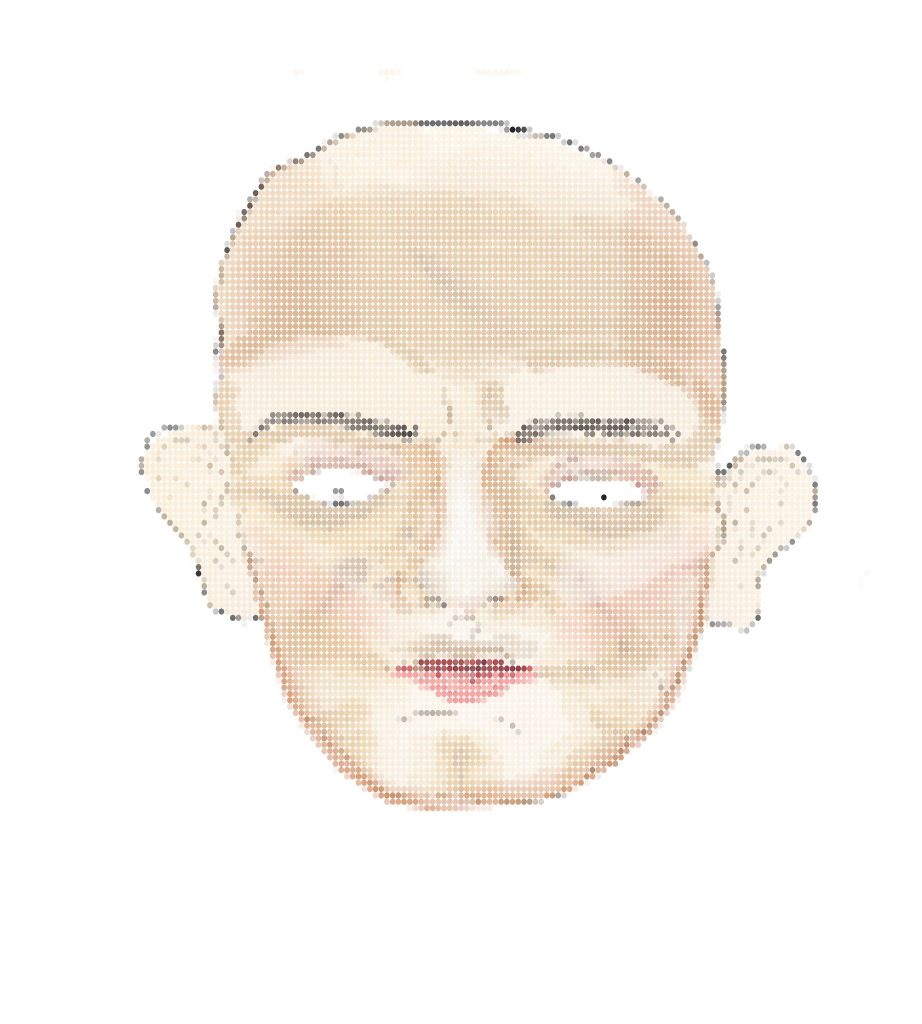

Stacked layers of “discs”

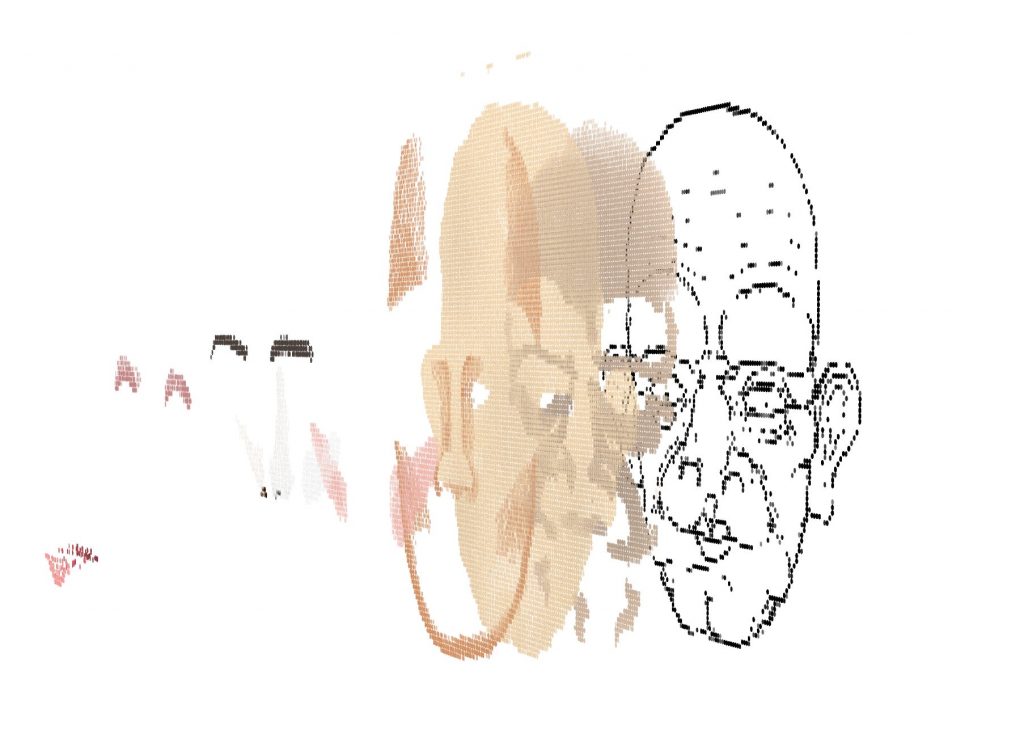

The true “face” is revealed from a different angle

Our initial tests took the separate layers of Jon’s digital drawings and arranged them so that certain parts of the drawing are visible only from oblique perspectives.

These transformations depended on the artist deliberately arranging the layers, which does not extend to non-digital media, where layers cannot easily be extracted. We therefore began looking at image processing techniques that can “infer” features of an artwork, whether or not the artist intended them.

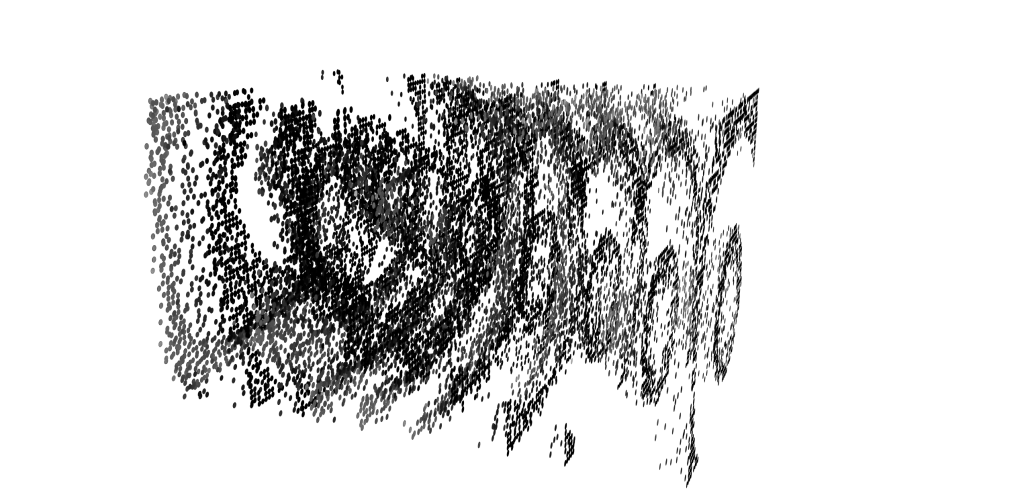

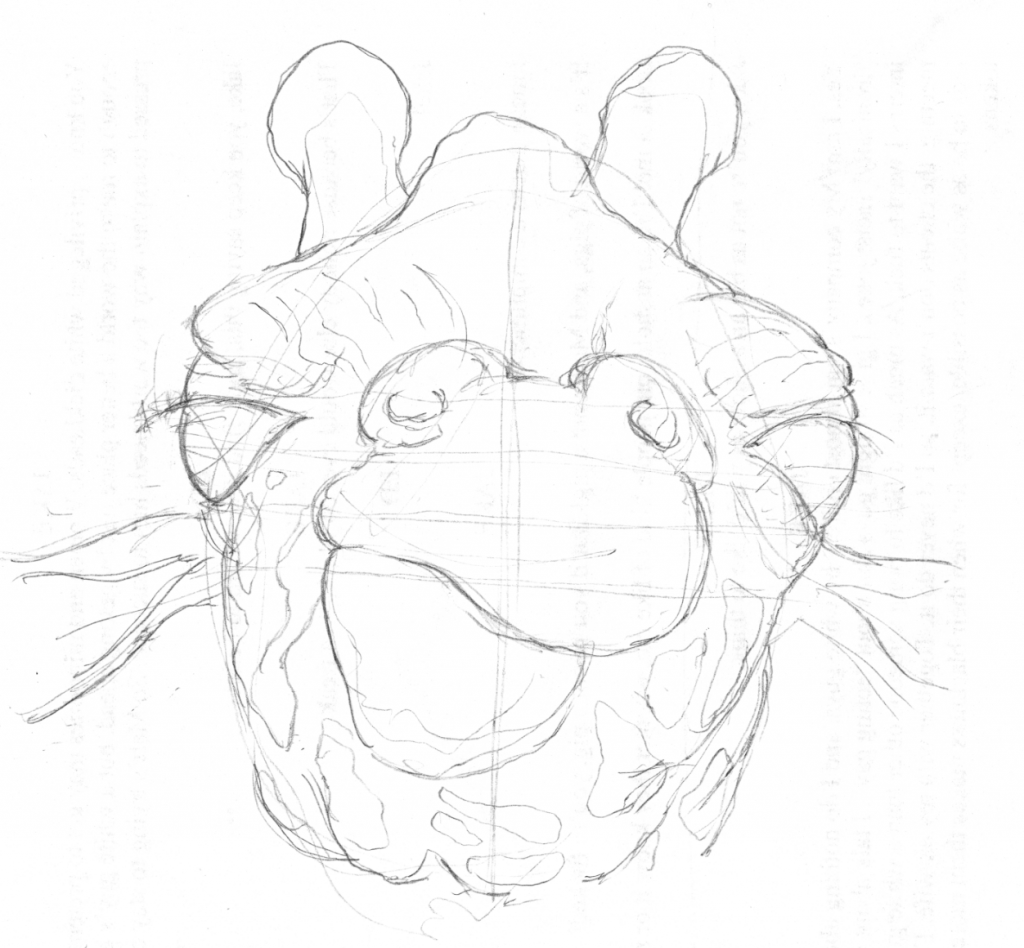

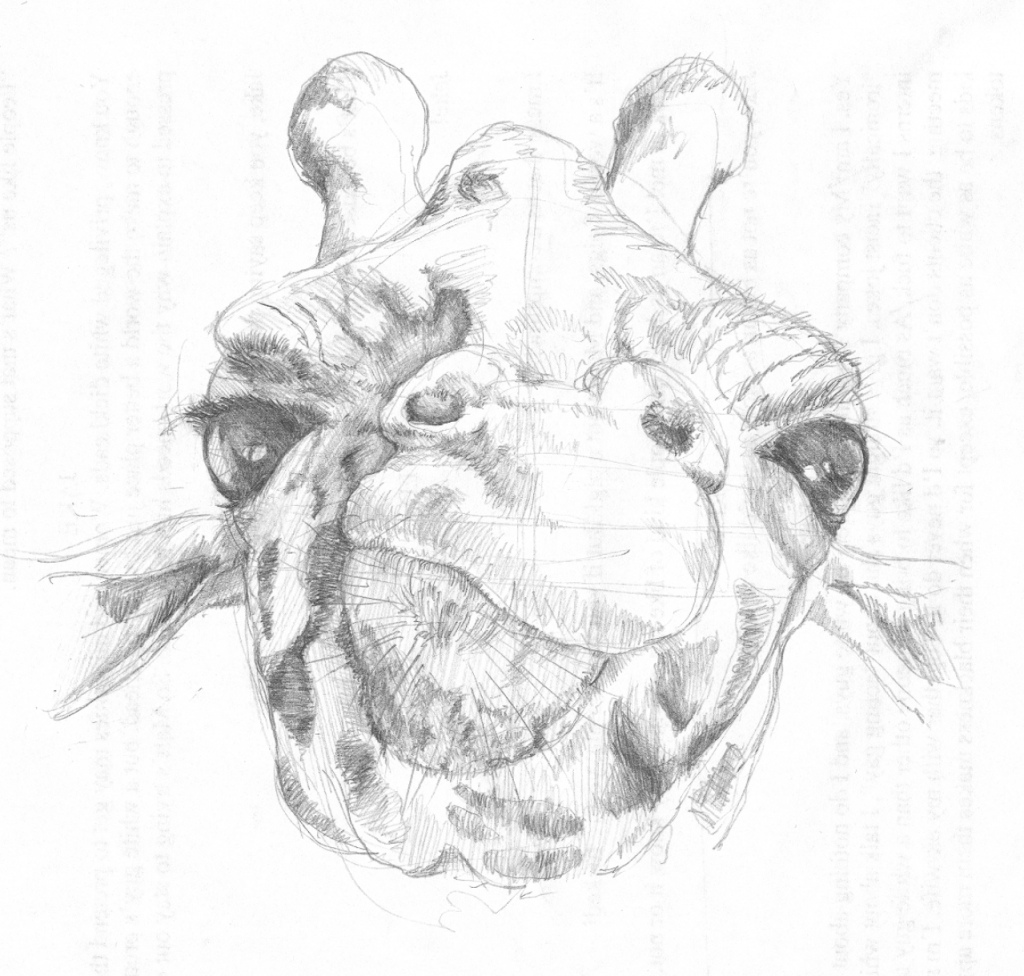

We used high-resolution scans of pencil sketches for the analysis. The interaction between the graphite and the texture of the paper, together with the shading techniques used, provides a much richer set of features than the layers in the previous experiment.

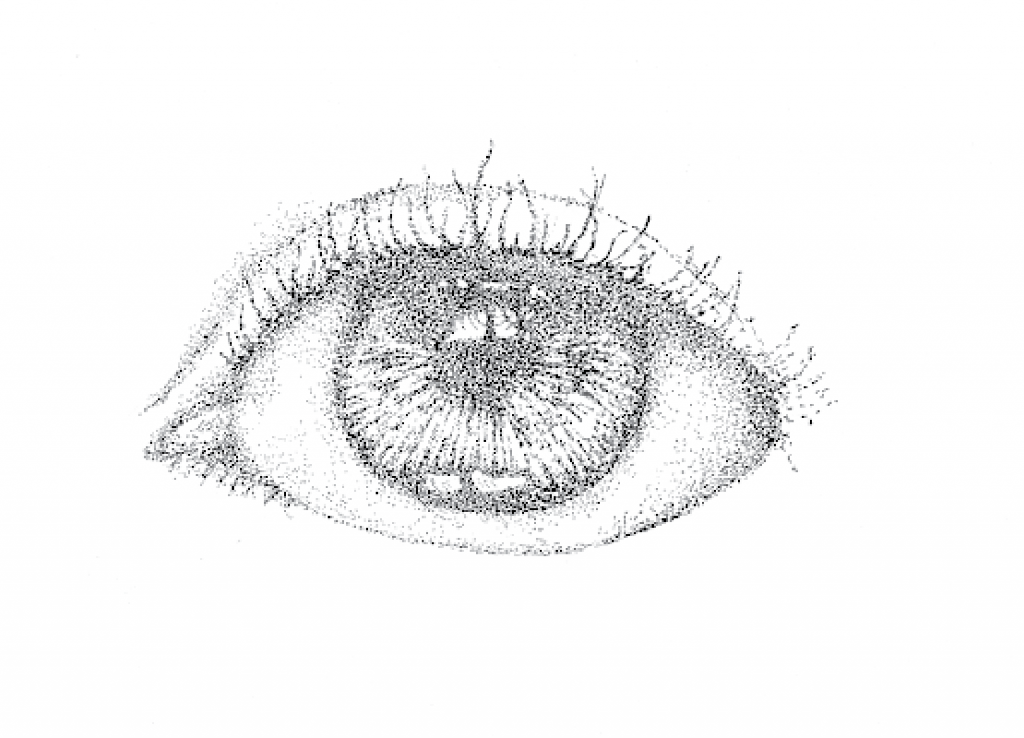

Draft sketch

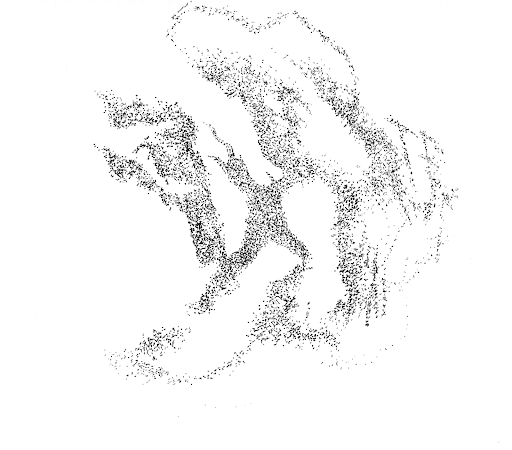

Shaded sketch

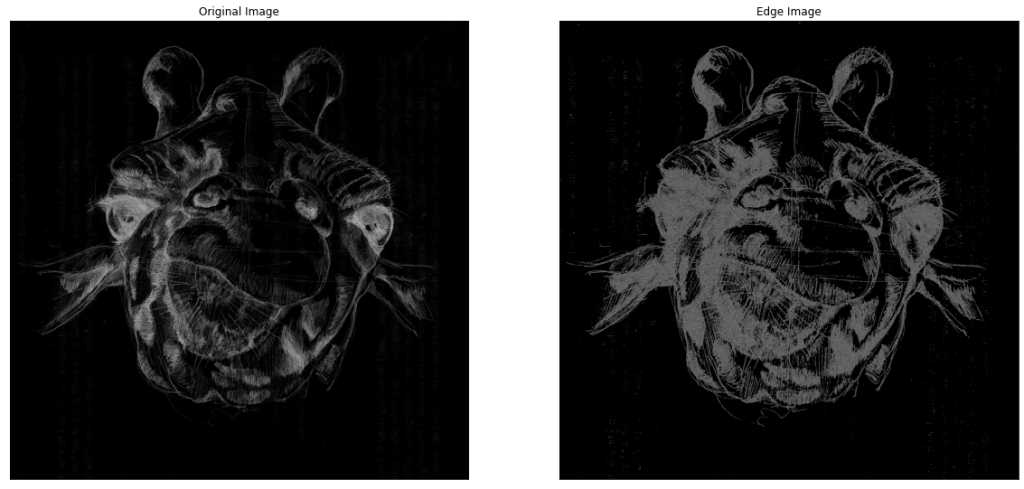

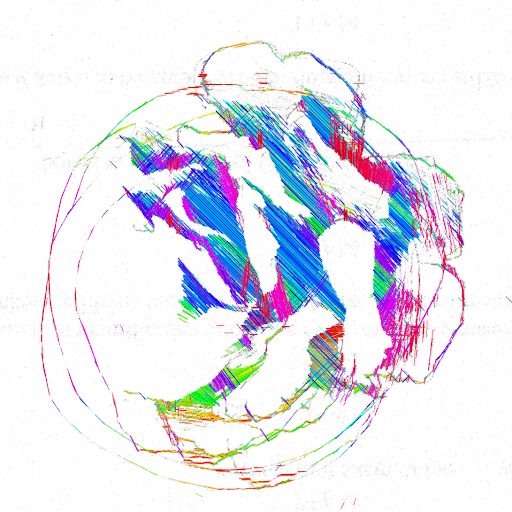

Clustering of pixels based on neighborhood density.
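The write-up does not say which clustering method produced the figure above. As one illustration of clustering by neighborhood density, here is a minimal DBSCAN-style sketch in plain Python: a dark pixel with at least `min_neighbors` dark pixels (itself included) within radius `eps` seeds or grows a cluster, and isolated marks are labeled as noise. The function name and the parameter defaults are hypothetical, not the project's code.

```python
from collections import deque


def density_clusters(points, eps=1.5, min_neighbors=3):
    """Label (x, y) points with DBSCAN-style density clusters.

    Hypothetical sketch: returns one label per point, where -1 means noise.
    Neighbor search is brute force (O(n^2)), fine only for small inputs.
    """
    pts = list(points)
    eps2 = eps * eps

    def neighbors(i):
        # All points within eps of point i, including i itself.
        xi, yi = pts[i]
        return [j for j, (xj, yj) in enumerate(pts)
                if (xi - xj) ** 2 + (yi - yj) ** 2 <= eps2]

    labels = [None] * len(pts)
    cluster = 0
    for i in range(len(pts)):
        if labels[i] is not None:
            continue
        nbrs = neighbors(i)
        if len(nbrs) < min_neighbors:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        # i is a core point: start a new cluster and expand it.
        labels[i] = cluster
        queue = deque(nbrs)
        while queue:
            j = queue.popleft()
            if labels[j] == -1:
                labels[j] = cluster  # border point: join, but do not expand
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_nbrs = neighbors(j)
            if len(j_nbrs) >= min_neighbors:
                queue.extend(j_nbrs)  # j is also a core point
        cluster += 1
    return labels
```

On a full-resolution scan, a library implementation such as scikit-learn's `DBSCAN` (which takes `eps` and `min_samples`) would be the practical choice; the sketch only shows the idea.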

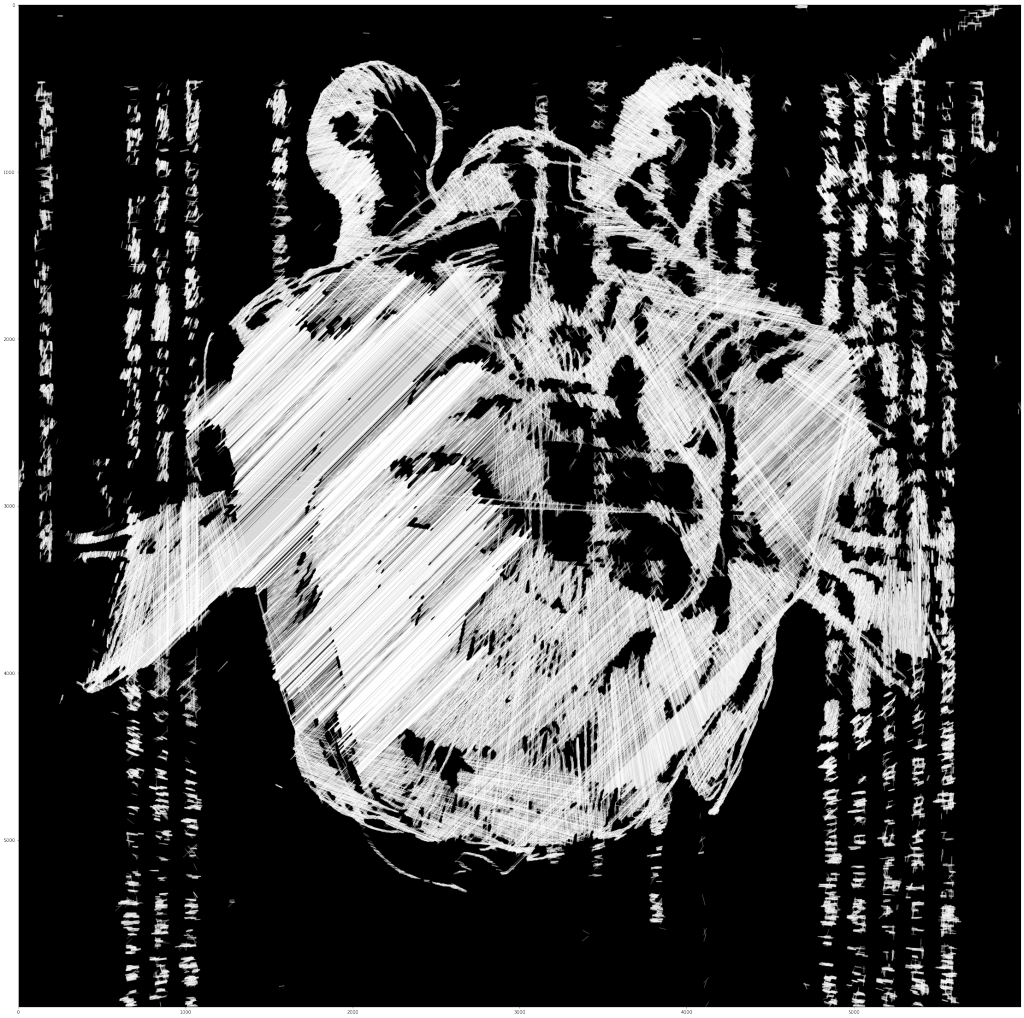

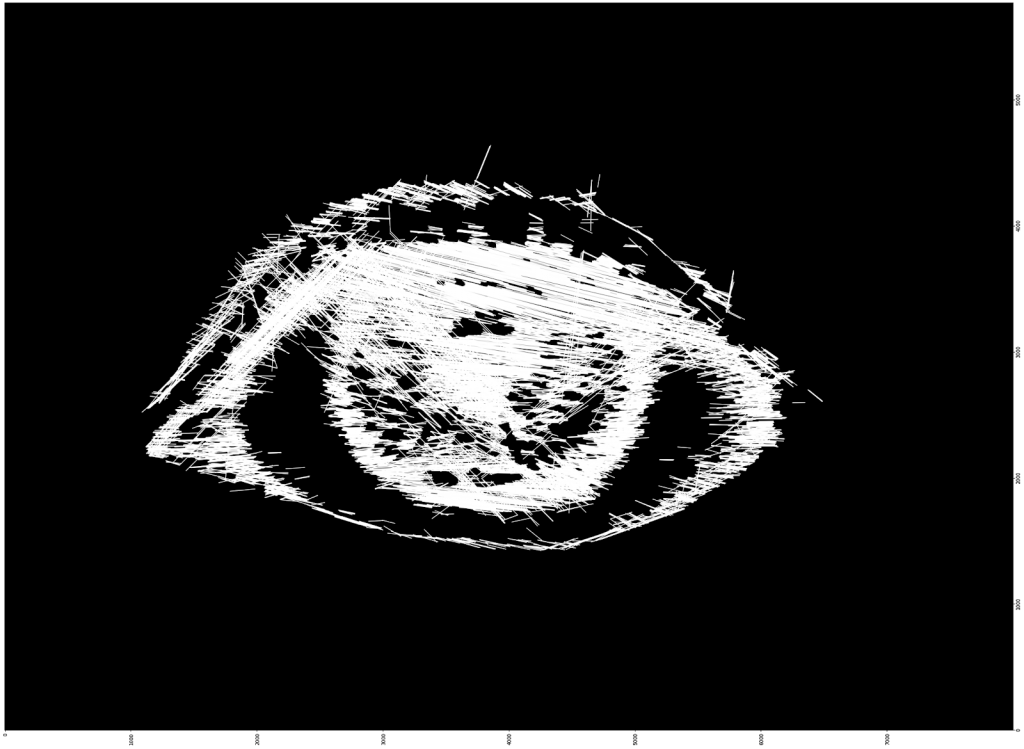

The Hough Line Transform inferred the general direction of Jon’s pencil strokes.

System Overview

Our final pipeline begins with a source artwork, which can be created specifically for this system or be any existing piece the artist wants to take a second look at. Any type of drawing works, but we found that pencil, pen, and stippled drawings worked best. We then scanned the artwork at the highest resolution possible and processed it in Python using the OpenCV library’s probabilistic Hough Line Transform, which infers the lines and strokes in the artwork.

Original Stipple Drawing

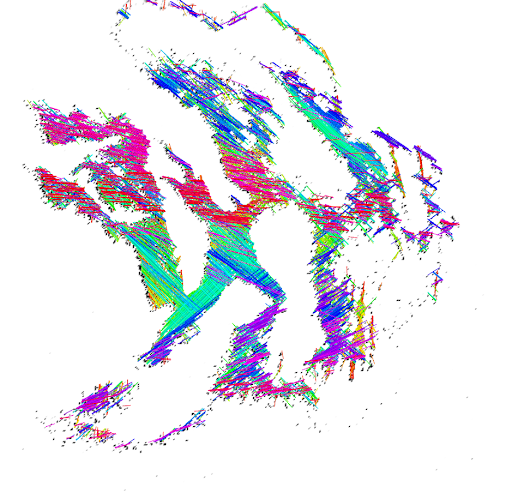

Detected lines from OpenCV

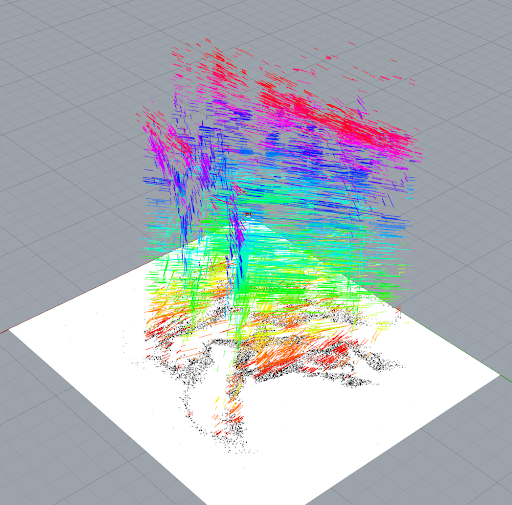
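The write-up does not specify how the line data travelled from Python into Rhino and Grasshopper. One simple option, sketched here as an assumption, is a CSV file with one segment per row that a Grasshopper file-reading component can parse. The optional `image_height` flip (a hypothetical parameter) accounts for image coordinates having their origin at the top-left with Y pointing down, whereas Rhino’s Y axis points up.

```python
import csv


def segments_to_csv(segments, stream, image_height=None):
    """Write (x1, y1, x2, y2) segments as CSV rows under a header.

    Hypothetical export format. If `image_height` is given, Y values are
    flipped so that the drawing is not mirrored vertically in Rhino.
    """
    writer = csv.writer(stream, lineterminator="\n")
    writer.writerow(["x1", "y1", "x2", "y2"])
    for x1, y1, x2, y2 in segments:
        if image_height is not None:
            # Image rows grow downward; Rhino's Y axis grows upward.
            y1, y2 = image_height - y1, image_height - y2
        writer.writerow([x1, y1, x2, y2])
```

For a file on disk, open it with `open("lines.csv", "w", newline="")` (hypothetical filename) and pass the file object as `stream`.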

The line data and the image are then imported into Rhino and Grasshopper, where the lines are superimposed on the image. To achieve the 3D effect, each line’s direction determines how far “up” from the original drawing it is moved.
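The write-up says only that a line’s direction sets its height, not the exact formula. A minimal sketch, assuming a linear mapping from a segment’s undirected orientation (0° up to 180°) to a height between 0 and a hypothetical `max_height`, could look like this. In the project this step ran in Grasshopper, so the Python here is only illustrative.

```python
import math


def line_angle_deg(x1, y1, x2, y2):
    """Undirected orientation of a segment, in degrees within [0, 180)."""
    return math.degrees(math.atan2(y2 - y1, x2 - x1)) % 180.0


def lift_segment(seg, max_height=100.0):
    """Turn a 2D segment into a 3D one whose z depends on its direction.

    Assumption: height grows linearly with orientation angle. Both
    endpoints get the same z, so the segment stays parallel to the drawing.
    """
    x1, y1, x2, y2 = seg
    z = line_angle_deg(x1, y1, x2, y2) / 180.0 * max_height
    return (x1, y1, z), (x2, y2, z)
```

Because orientation wraps around at 180°, this linear mapping places near-horizontal strokes at both the bottom and the top of the sculpture. A periodic mapping such as `max_height * (1 - cos(2θ)) / 2` would instead keep all near-horizontal strokes low, at the cost of giving 45° and 135° strokes the same height.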

Outcomes

Animated

We then animated the lines to show the “strokes” being extracted from the artwork. Viewed from the side, the animation sometimes reveals that the strokes in a given region of a drawing share almost the same angle. For the artist, these inferred lines can reveal intentions they may not have been aware of, and lines that were inferred but never intended can spark new ideas.

Whether the strokes explode out of the drawing or emerge in a general direction, we believe an animated 3D sculpture can both benefit the artist and be a breathtaking experience for anyone viewing the art.

AR

Although we initially stayed away from augmented reality (AR) for fear of using it as a mere gimmick, we realized that it would be an effective way to view the work in a new context. Placing the newly created 3D models in a physical space lets artists explore their work more easily and look at it from literally new perspectives. The models can also be scaled up or down to achieve different effects. Artists can view the models in AR in real time while working in parallel on new concepts and art.

We were not able to show color in these models due to hardware limitations.

Takeaways and Next Steps

We hope that the digital pipeline and the generated visualizations let artists see their work in a new way. The resulting artifacts are by no means an end product. Instead, the artist can create new work based on the forms and abstractions they identify in the visualization. This new drawing can then be fed back into the system to generate a new image and experience. This iterative loop could become one small part of an artist’s process.

The original sketch with line overlay

A new drawing created based on visualization

New line overlay computed

3D visualization of new drawing

The pipeline could also serve as a learning tool for artists. Artists can view the exploded layers of strokes from any drawing in AR and recreate the drawing using the inferred strokes and their order.

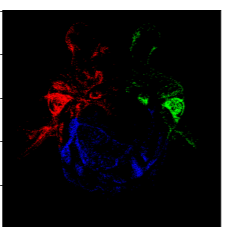

Because our system did not work well on color images, a possible next step is to isolate the individual color channels and run each one through the system separately. Once each channel has been run through the Hough Line Transform, the resulting layers could be superimposed in Rhino to generate a single 3D sculpture.
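The proposed per-channel approach can be sketched in Python as follows. This is a hypothetical sketch: `lines_per_channel`, `detect`, and `layer_offset` are illustrative names, and giving each channel its own base height is one assumed way to keep the superimposed layers distinguishable in Rhino. It assumes an image loaded with OpenCV, which stores color channels in B, G, R order.

```python
import numpy as np


def lines_per_channel(image_bgr, detect, layer_offset=10.0):
    """Run a line detector on each color channel of an image separately.

    `image_bgr` is an (H, W, 3) uint8 array in OpenCV's B, G, R order.
    `detect` is any function mapping a single-channel image to a list of
    (x1, y1, x2, y2) segments, e.g. a wrapper around cv2.HoughLinesP.
    Returns {channel name: [(segment, base_height), ...]}, where each
    channel gets its own hypothetical base height for stacking in Rhino.
    """
    names = ("blue", "green", "red")
    result = {}
    for index, name in enumerate(names):
        # Copy the channel into a contiguous array, which OpenCV
        # functions generally expect; a strided slice is not contiguous.
        channel = np.ascontiguousarray(image_bgr[:, :, index])
        segments = detect(channel)
        result[name] = [(seg, index * layer_offset) for seg in segments]
    return result
```

Passing the detector in as a function keeps the channel handling separate from the Hough parameters, so each channel could also be given its own tuning if one color turns out to need it.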