For our project (Joshua Brown, Garrett Osbourne, Stone Butler), we created a system of Markov chains that learn from two kinds of input: MIDI information imported and analyzed through the following subpatch, and live performance on the Ableton pad.

Our overall concept was a patch that takes in a MIDI score, analyzes it, and uses the Markov chains to learn from that score and output something similar, played back with the sounds we captured and edited.

Markov chains work in the following way:

Say there are three states: A B C

When you occupy state A, you have a 30% chance of moving to state B, a 60% chance of moving to state C, and a 10% chance of remaining in A. A Markov chain describes a system with one such probability distribution for each state, where the next state depends only on the current one.

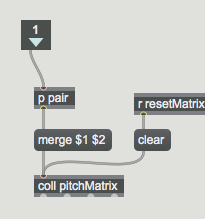
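To make the three-state example concrete, here is a minimal Python sketch of a single step out of state A. It is only an illustration, not part of our Max patch: the names `TRANSITIONS` and `next_state` are made up for this example, and only the row for A is filled in because that is the only row described above.

```python
import random

# Transition probabilities out of state A, taken from the example above.
# Rows for B and C are not given in the text, so they are left out here.
TRANSITIONS = {
    "A": {"A": 0.10, "B": 0.30, "C": 0.60},
}

def next_state(current, transitions, rng=random):
    """Pick the next state using only the current state's probability row."""
    row = transitions[current]
    states = list(row)
    weights = list(row.values())
    # random.choices draws one item according to the given weights.
    return rng.choices(states, weights=weights, k=1)[0]

if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so repeated runs give the same draws
    draws = [next_state("A", TRANSITIONS, rng) for _ in range(10_000)]
    for state in "ABC":
        # Observed share of each outcome; should land near 0.1, 0.3, 0.6.
        print(state, draws.count(state) / len(draws))
```

Over many draws, the observed shares should approach the 10% / 30% / 60% split, which is all the "chain" knows about leaving state A.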

In a MIDI score, each of the “states” is a pitch in the traditional Western tuning system. The patch analyzes the MIDI score and counts each instance of a given note, such as C. It then examines the note that comes after each C in the score. If there are 10 C’s in the score, and 9 of those times the next note is Bb while the one other time it is D, the Markov chain estimates that when a C is played, the next note will be Bb 90% of the time and D the other 10% of the time. The Markov chain does this for each note in the score and develops a probabilistic model of the overall movement patterns among pitches.

Here is the overall patch:
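Setting the Max patch aside for a moment, the counting step described above can be sketched in a few lines of Python. This is an illustration under assumptions rather than a transcription of our patch: `learn_transitions` is a hypothetical helper, pitches are written as note names instead of MIDI numbers, and the toy melody is invented so that it reproduces the 10 C’s from the example.

```python
from collections import Counter, defaultdict

def learn_transitions(notes):
    """Estimate next-note probabilities from a sequence of pitches."""
    counts = defaultdict(Counter)
    # Pair every note with the note that follows it and tally the pairs.
    for current, following in zip(notes, notes[1:]):
        counts[current][following] += 1
    # Turn each note's tallies into probabilities that sum to 1.
    return {
        note: {nxt: n / sum(followers.values()) for nxt, n in followers.items()}
        for note, followers in counts.items()
    }

# Hypothetical toy melody with ten C's: nine followed by Bb, one followed by D.
melody = ["C", "Bb"] * 9 + ["C", "D"]
table = learn_transitions(melody)
print(table["C"])  # {'Bb': 0.9, 'D': 0.1}
```

Note that the final note of a score has no successor, so in this sketch it gets no row of its own unless it also appears earlier in the score.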

The sounds were sampled from CMU voice students. We then chopped up the files so that each sound file contained one or two notes; these notes corresponded to the notes of the C harmonic minor scale. The Markov chain system used the data it had analyzed from the MIDI score (Silent Hill 2’s Promise Reprise, which is essentially an arpeggiation of the C minor scale) to influence the order and frequency of these files’ activations. We also had some percussive and atmospheric sounds, which were triggered via the chain system as well. We experimented with a spatialization element but kept it simple, so as not to overwhelm.

This is what we created:

The audiovisual patch was made by taking the patch we made in class (the cube visualization that responded to the loudness of a mic input) and expanding it to include a particle system programmed to change position when a certain peak was reached. The position changes were executed using the Ease package from Cycling ’74.